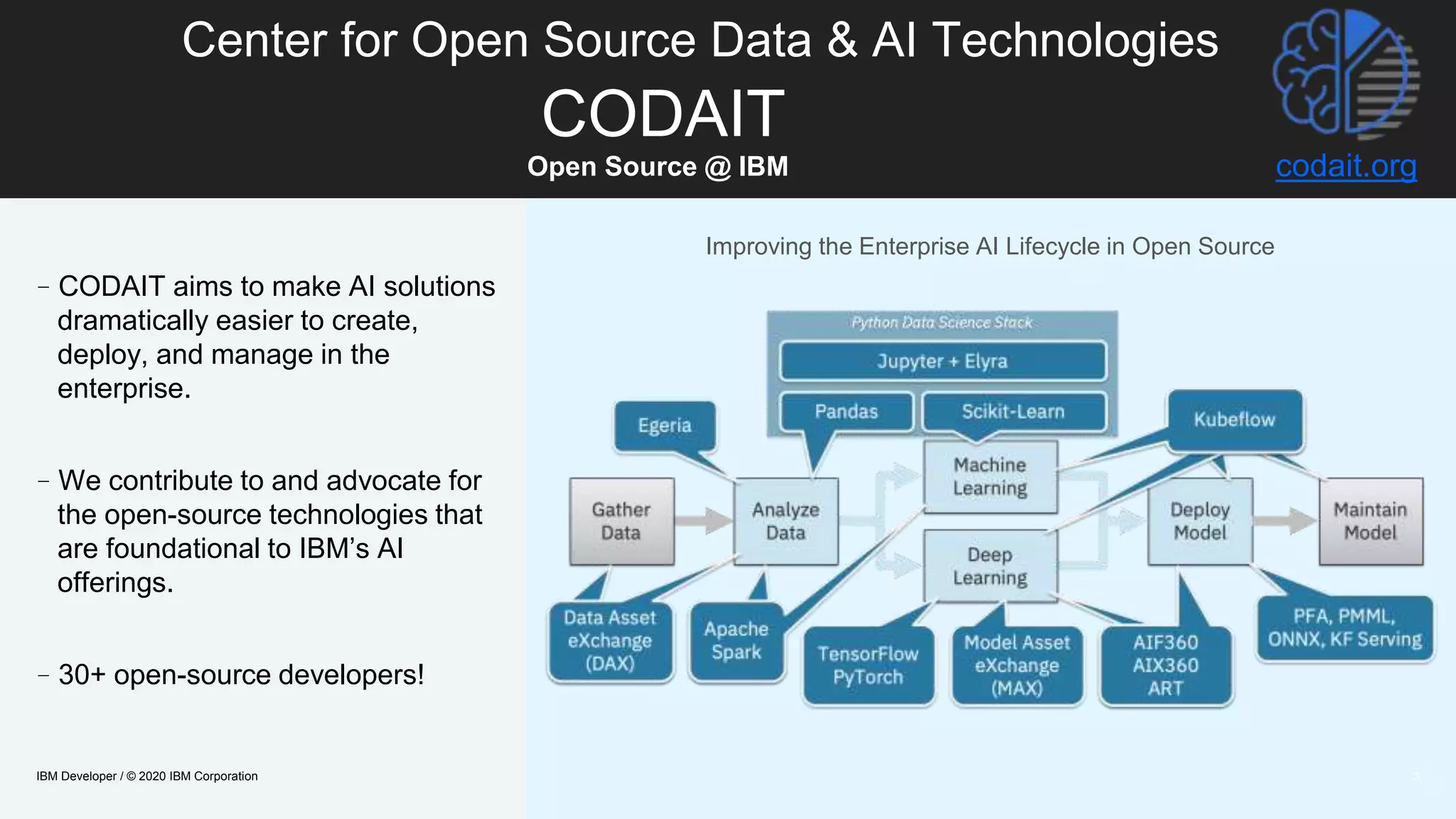

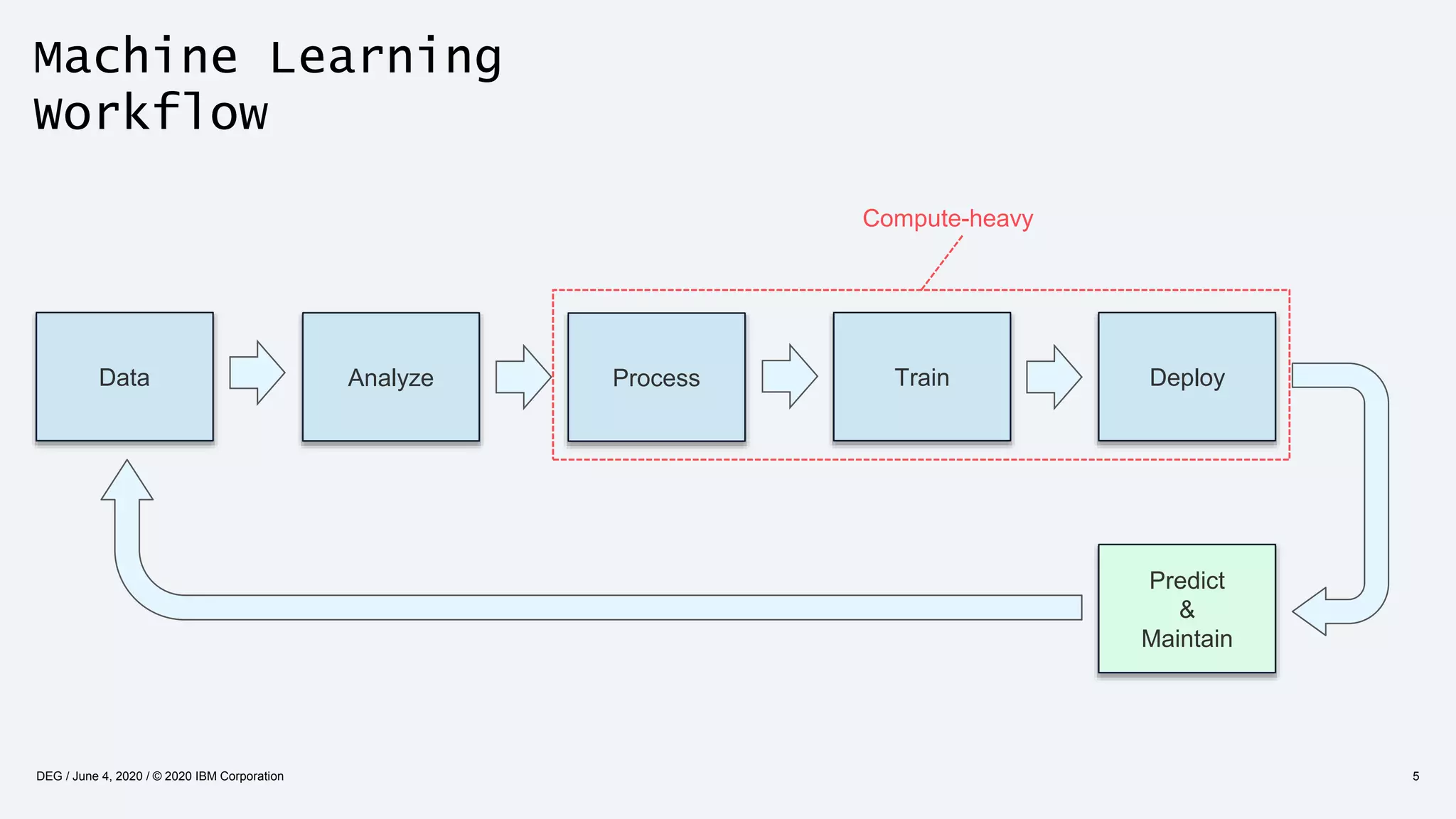

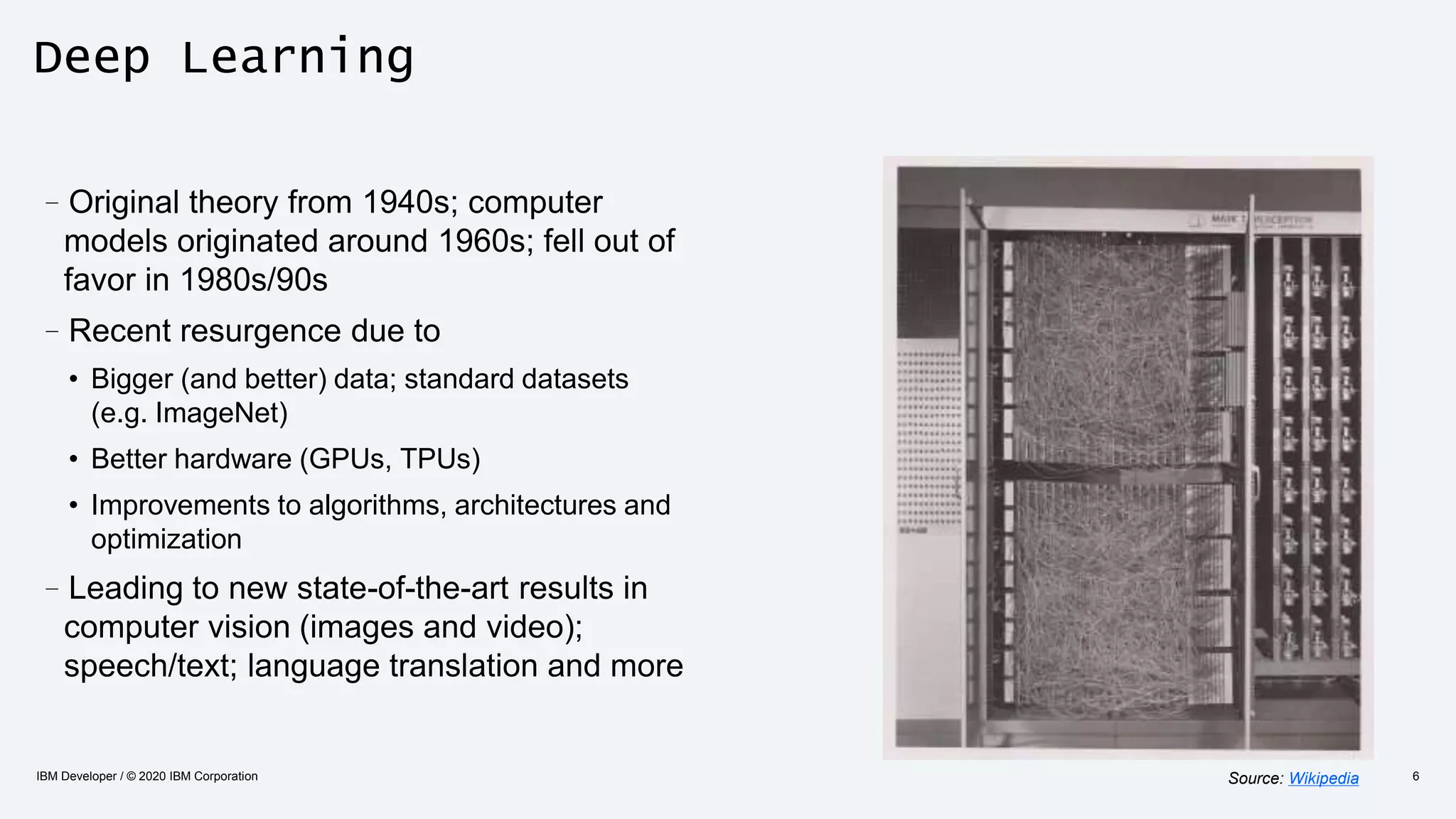

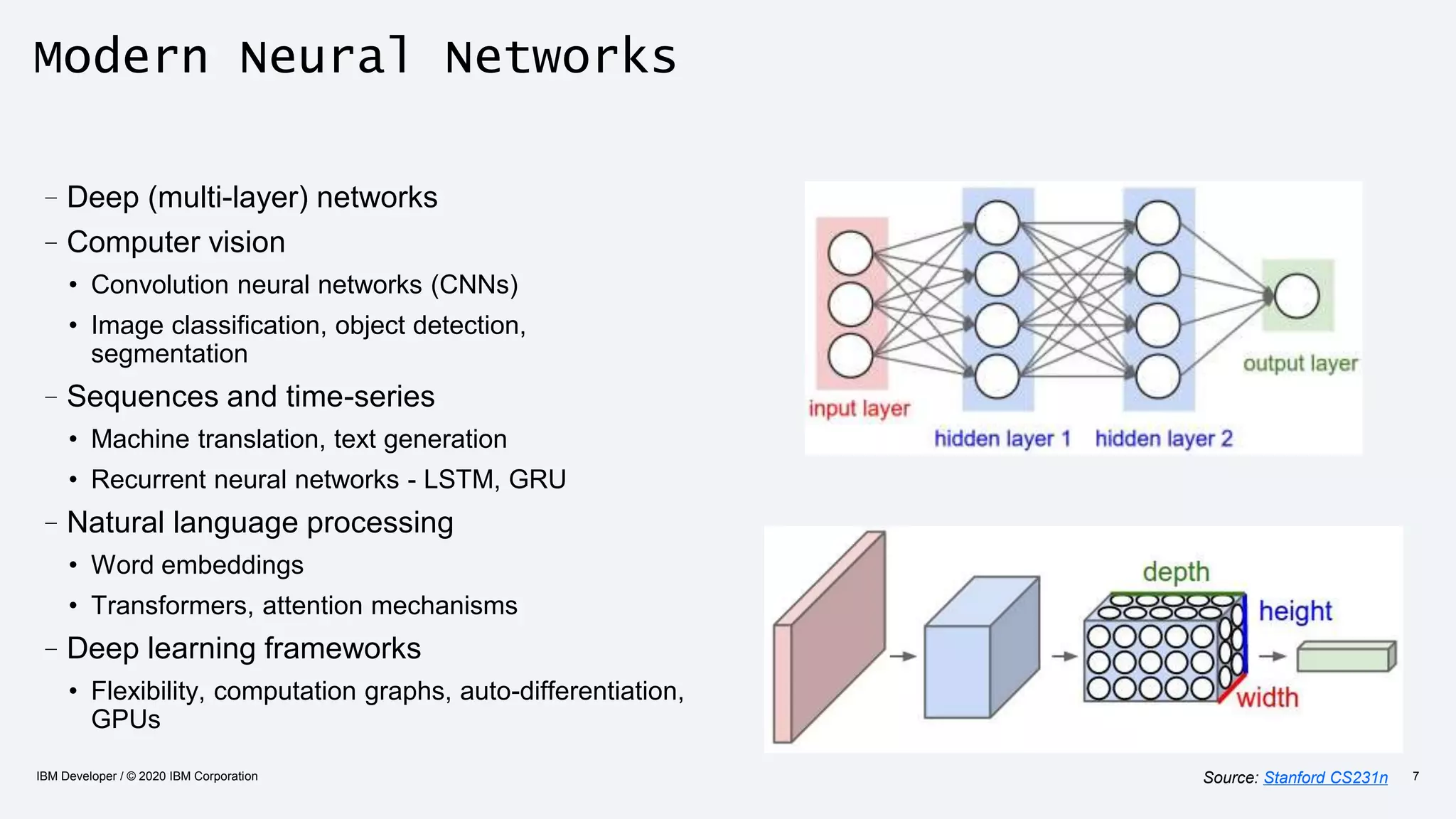

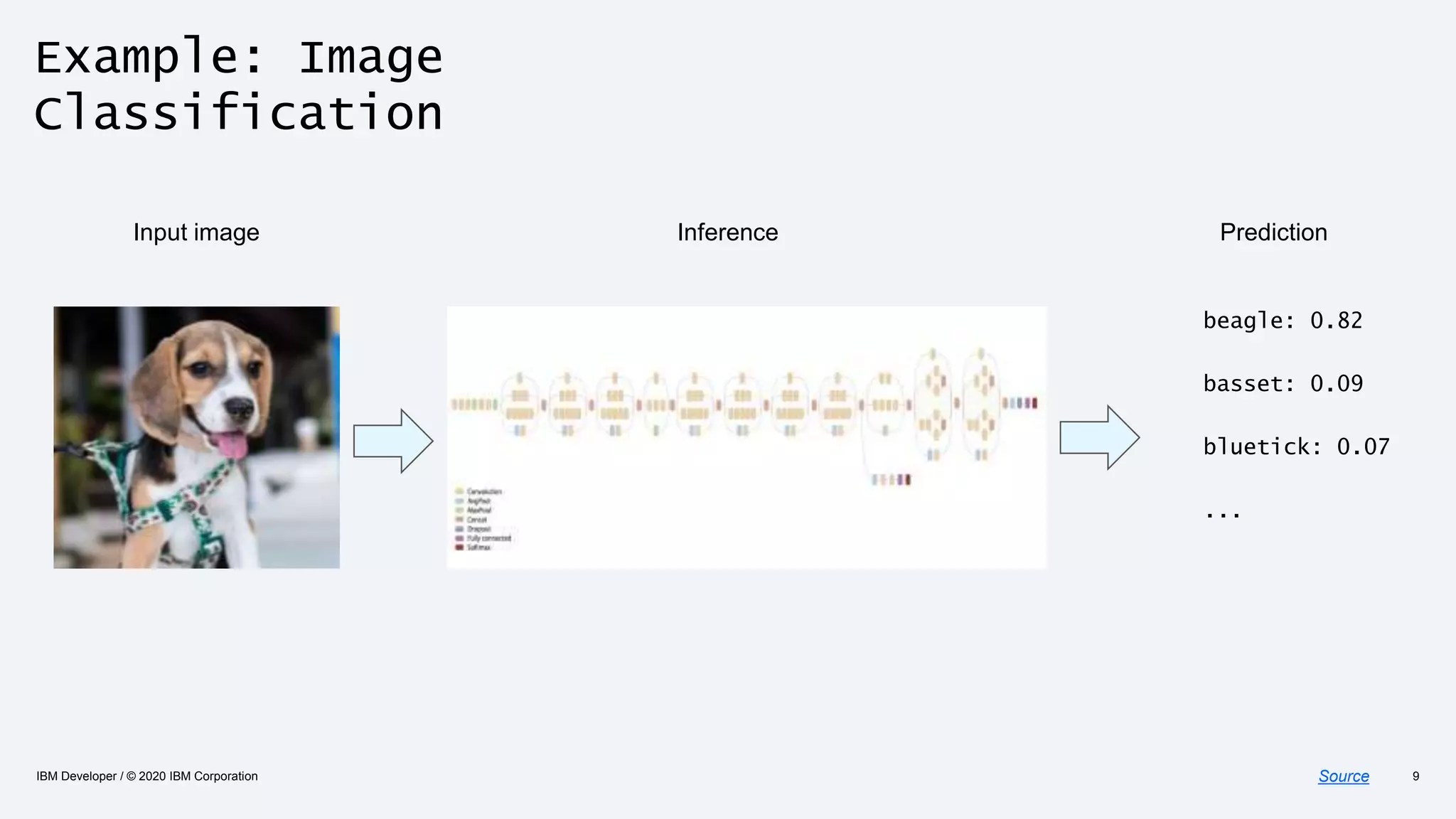

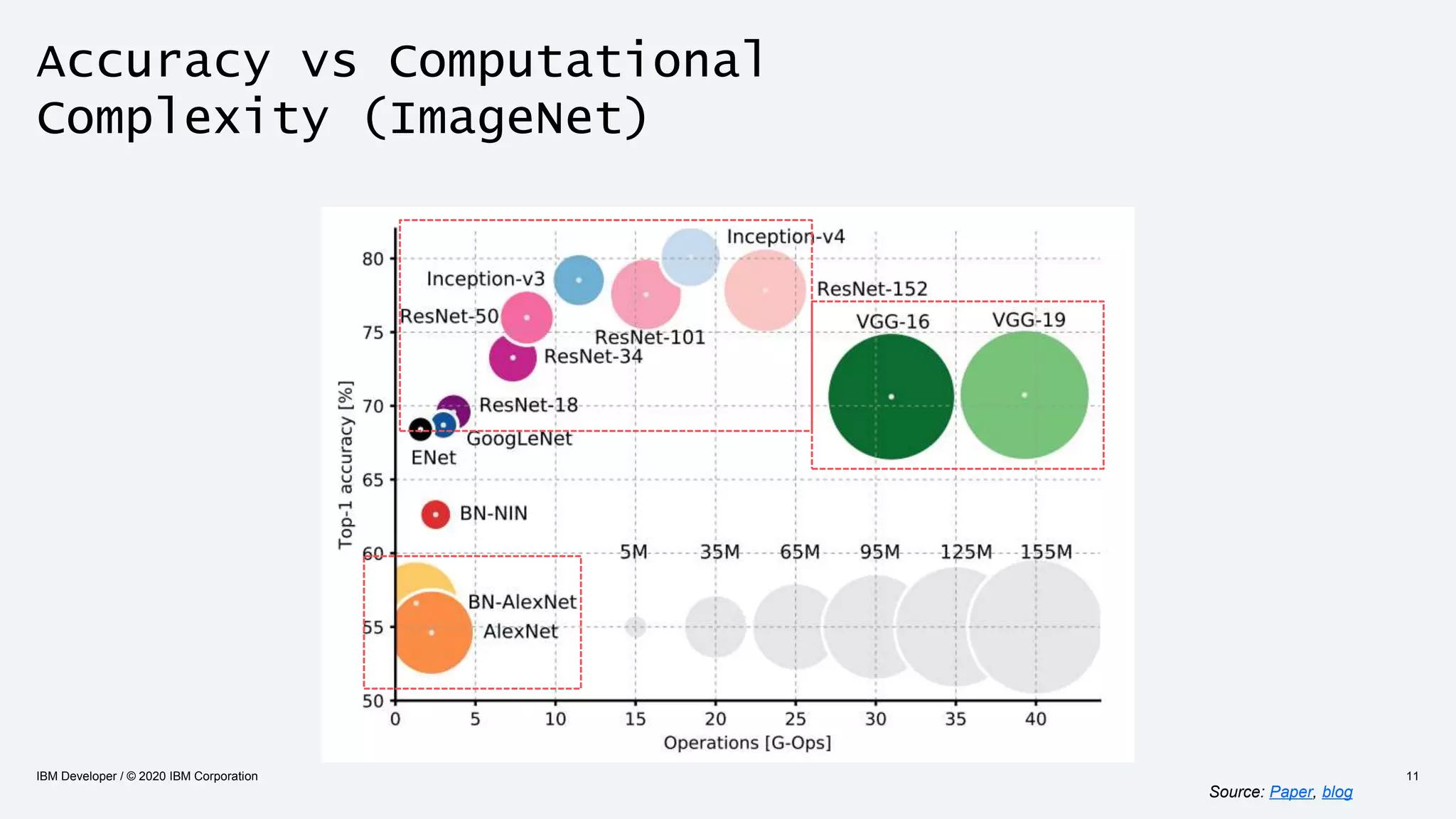

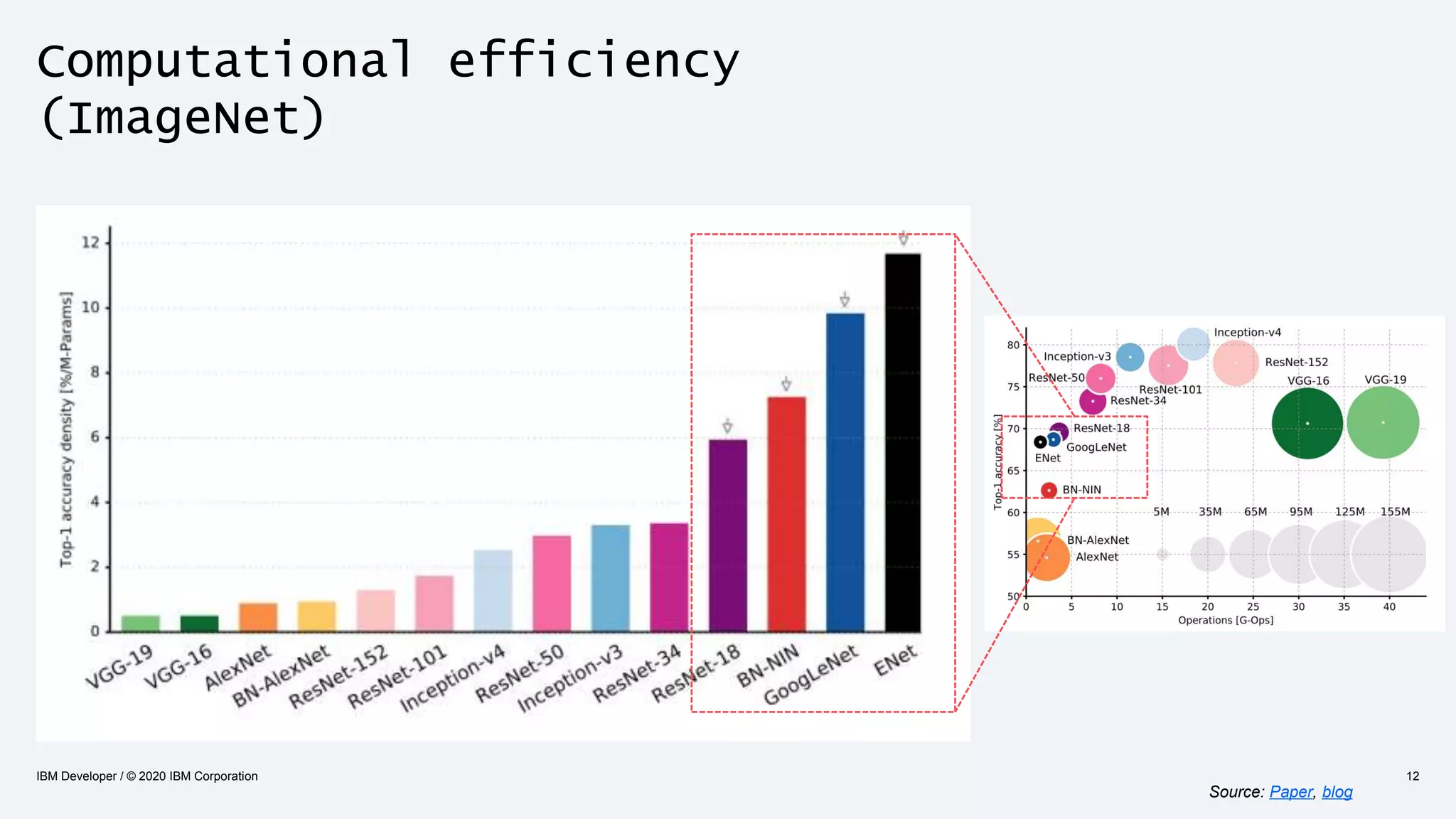

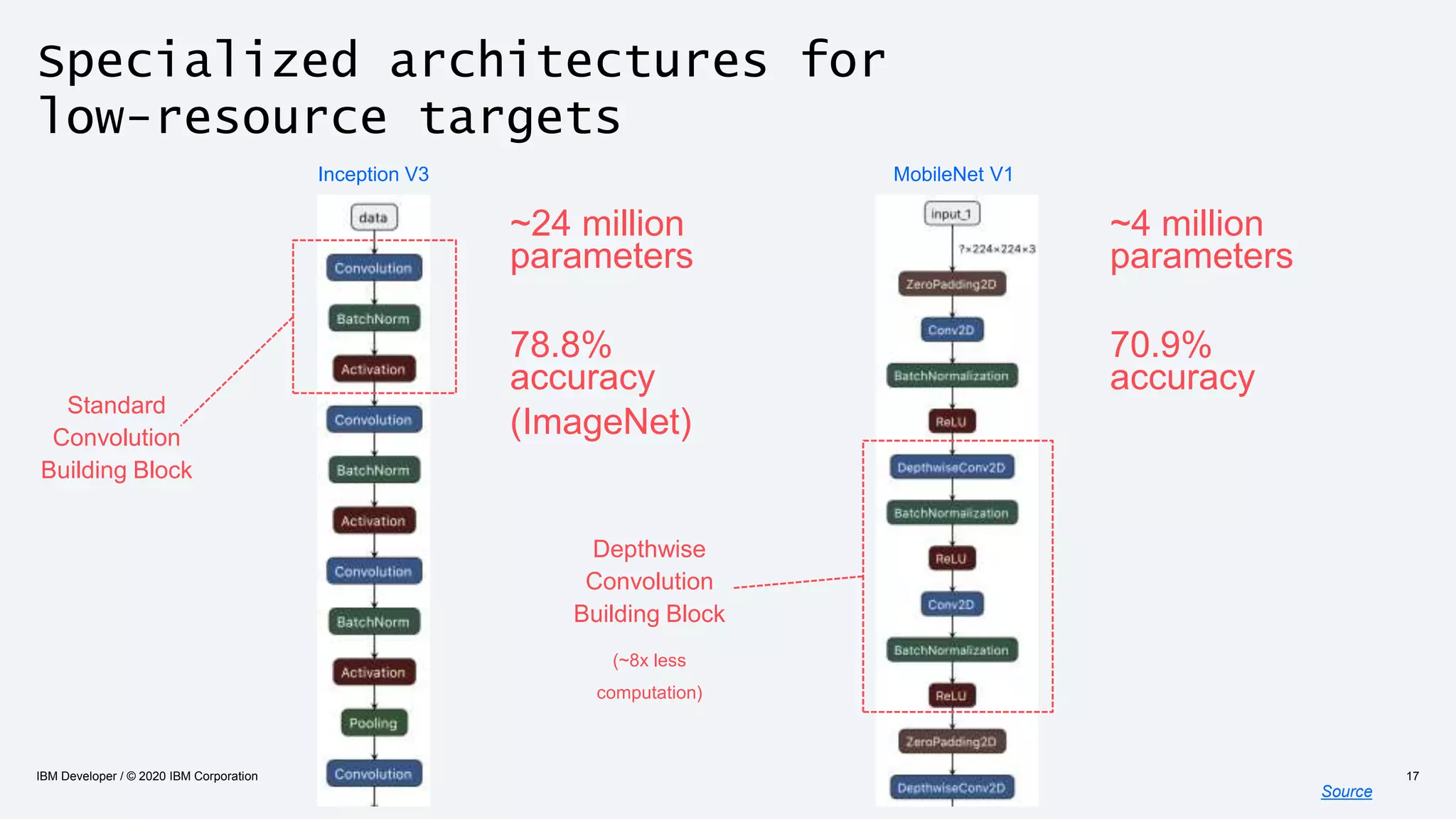

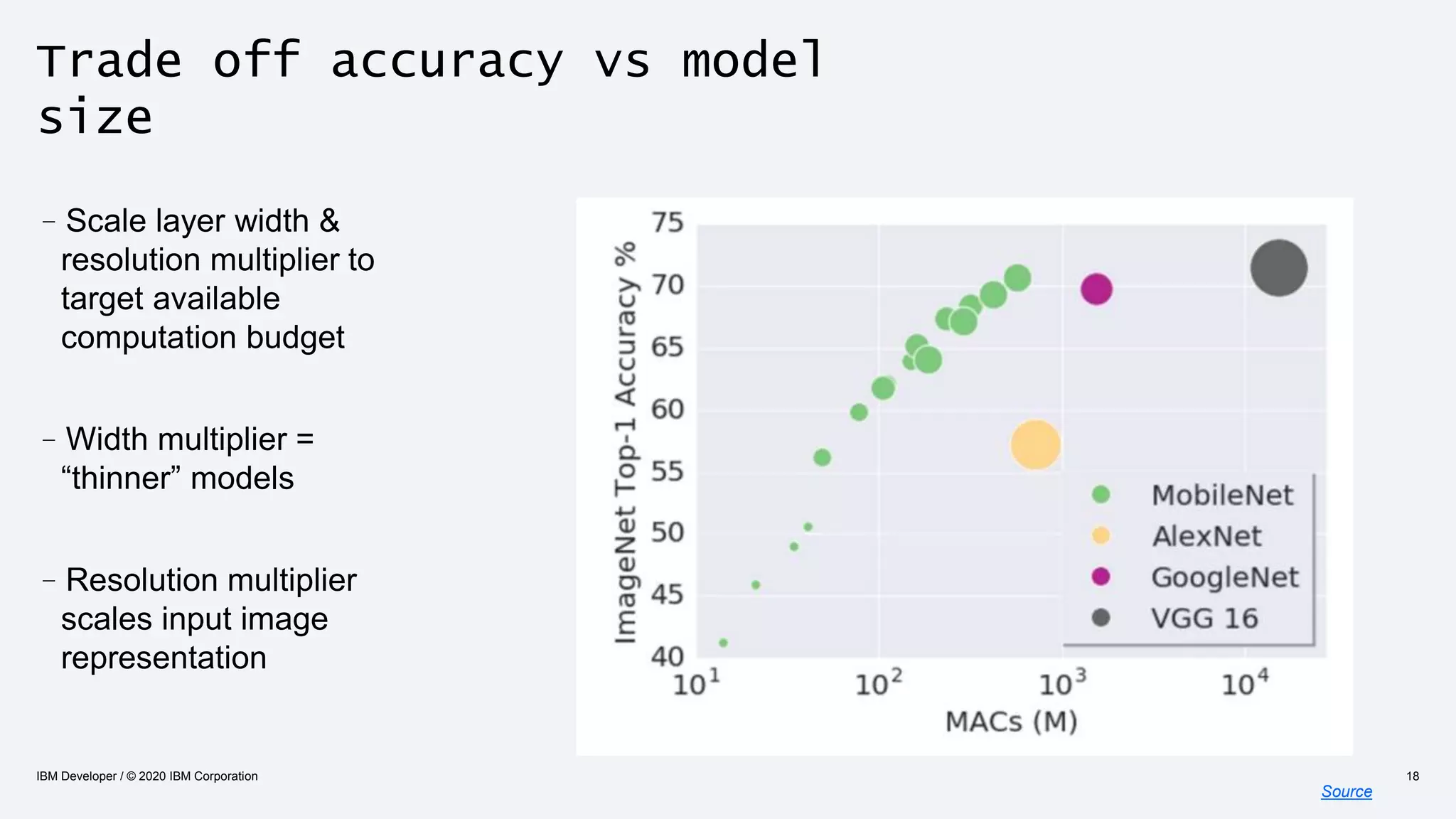

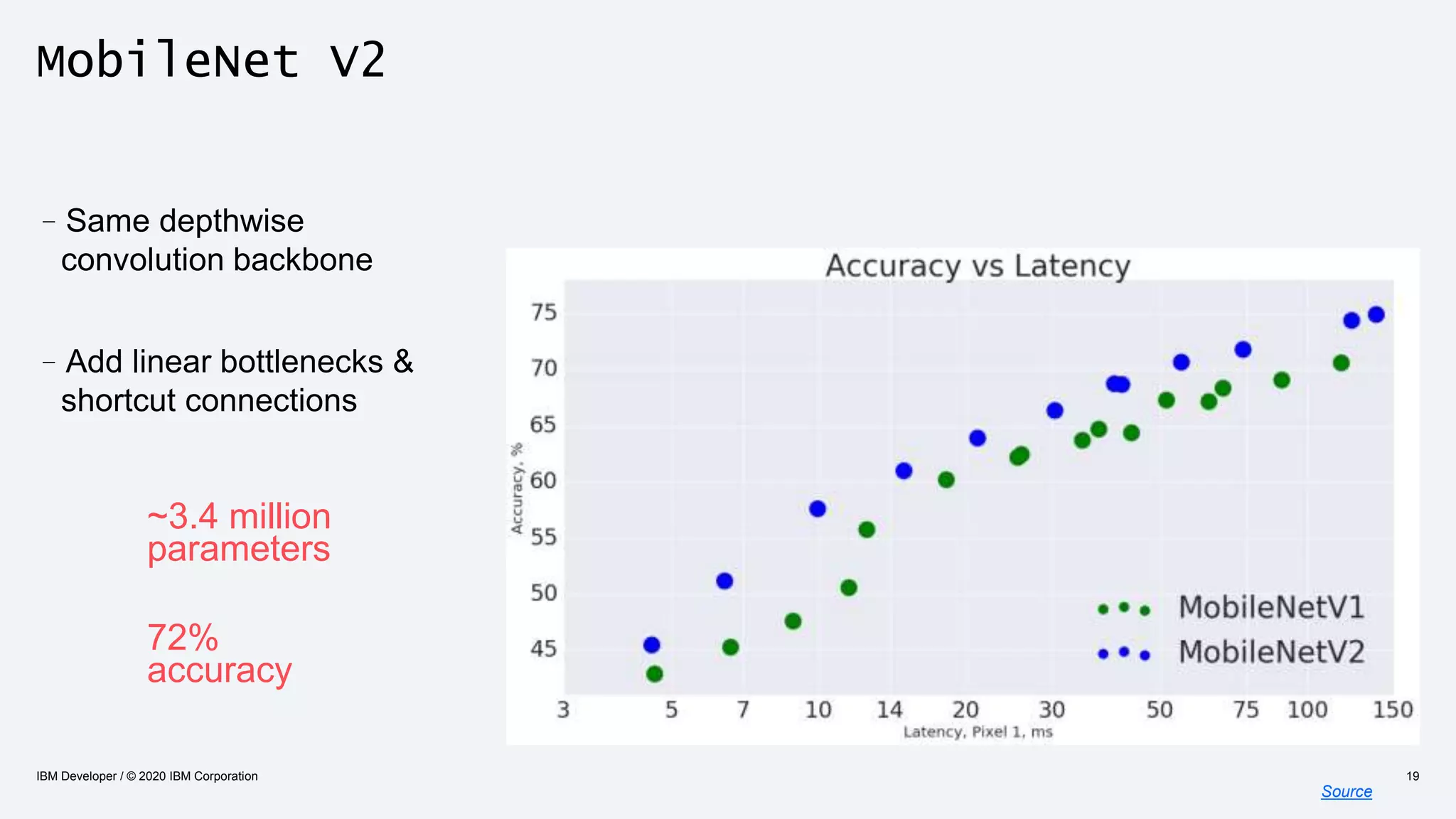

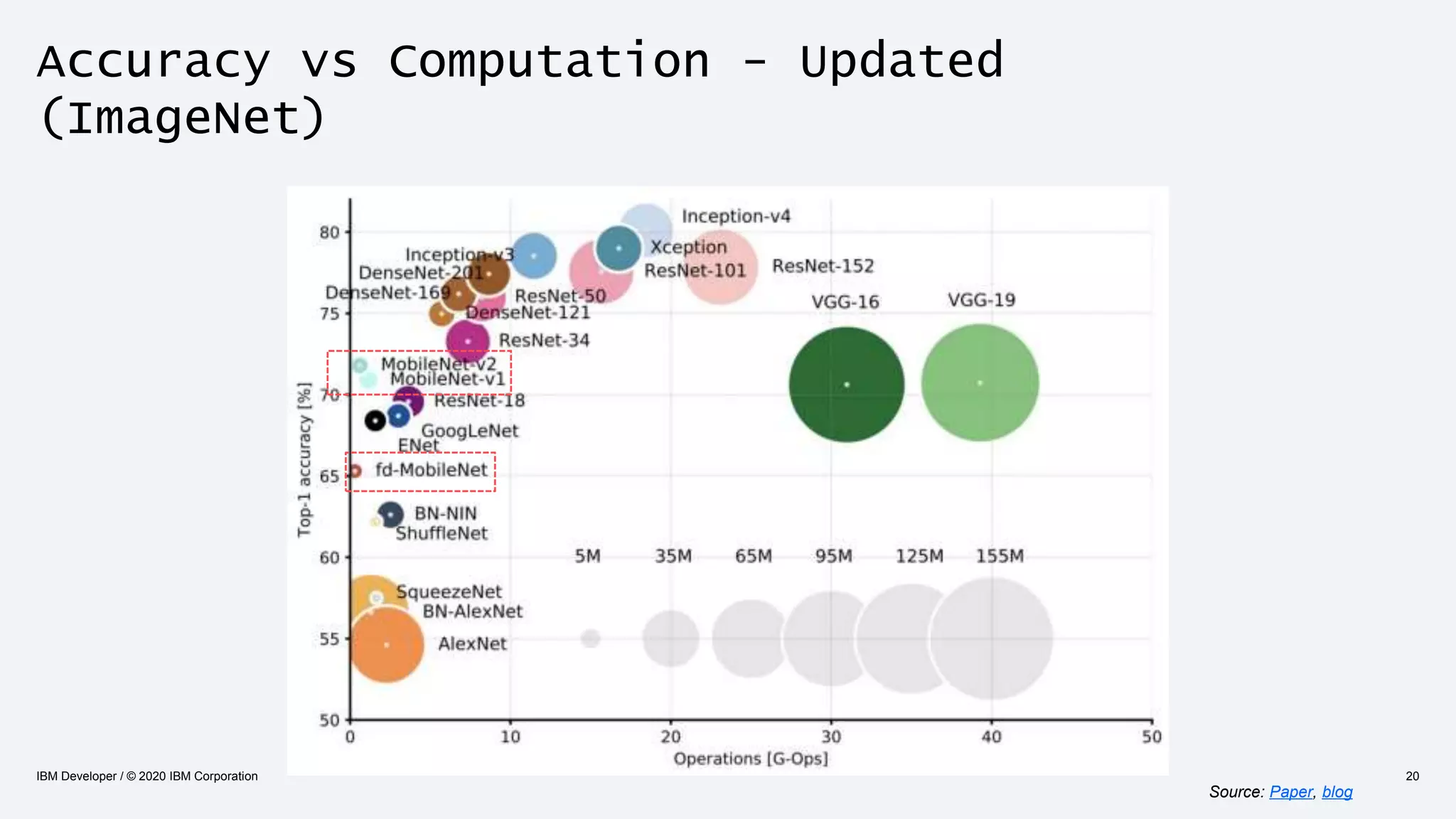

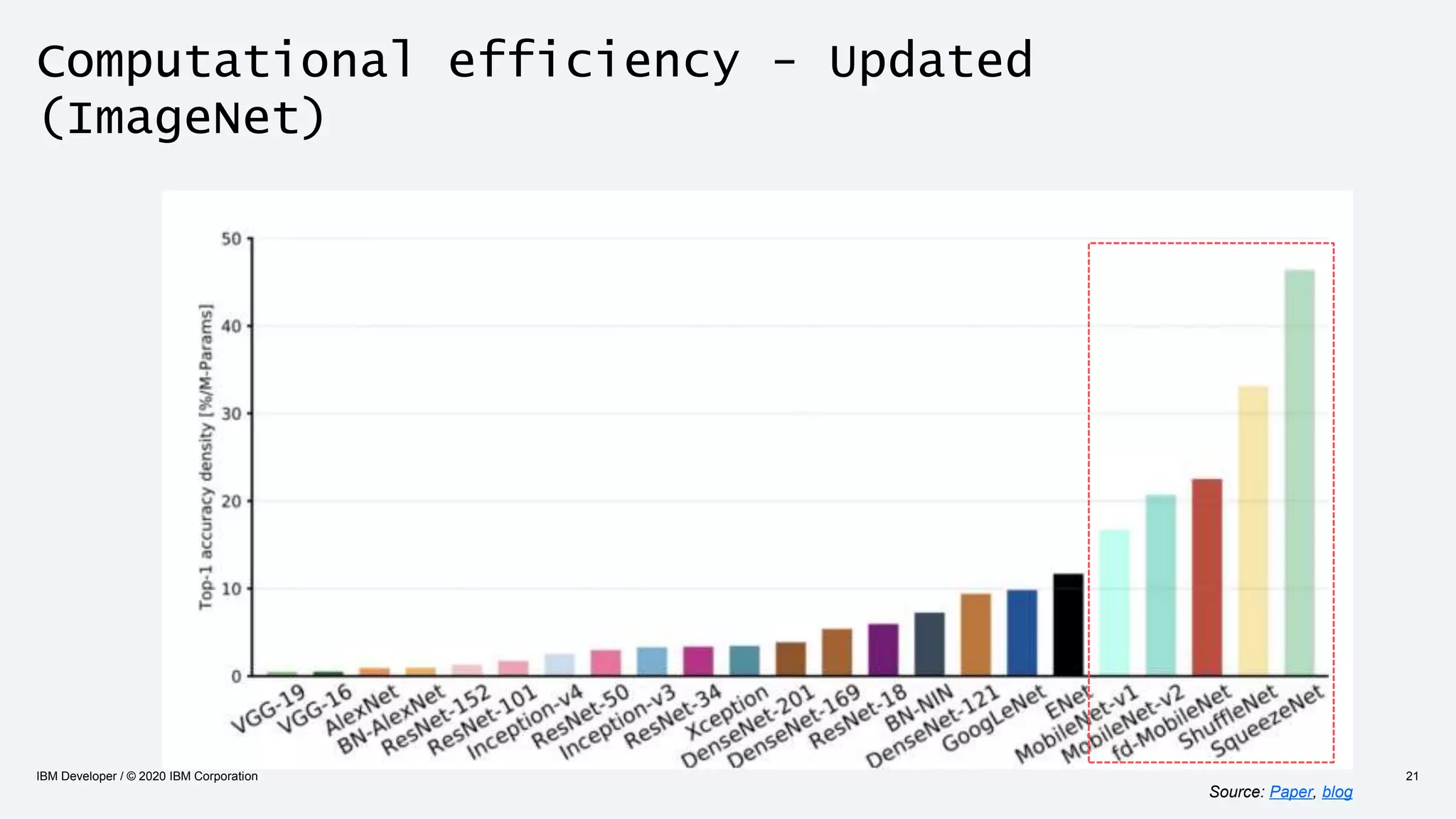

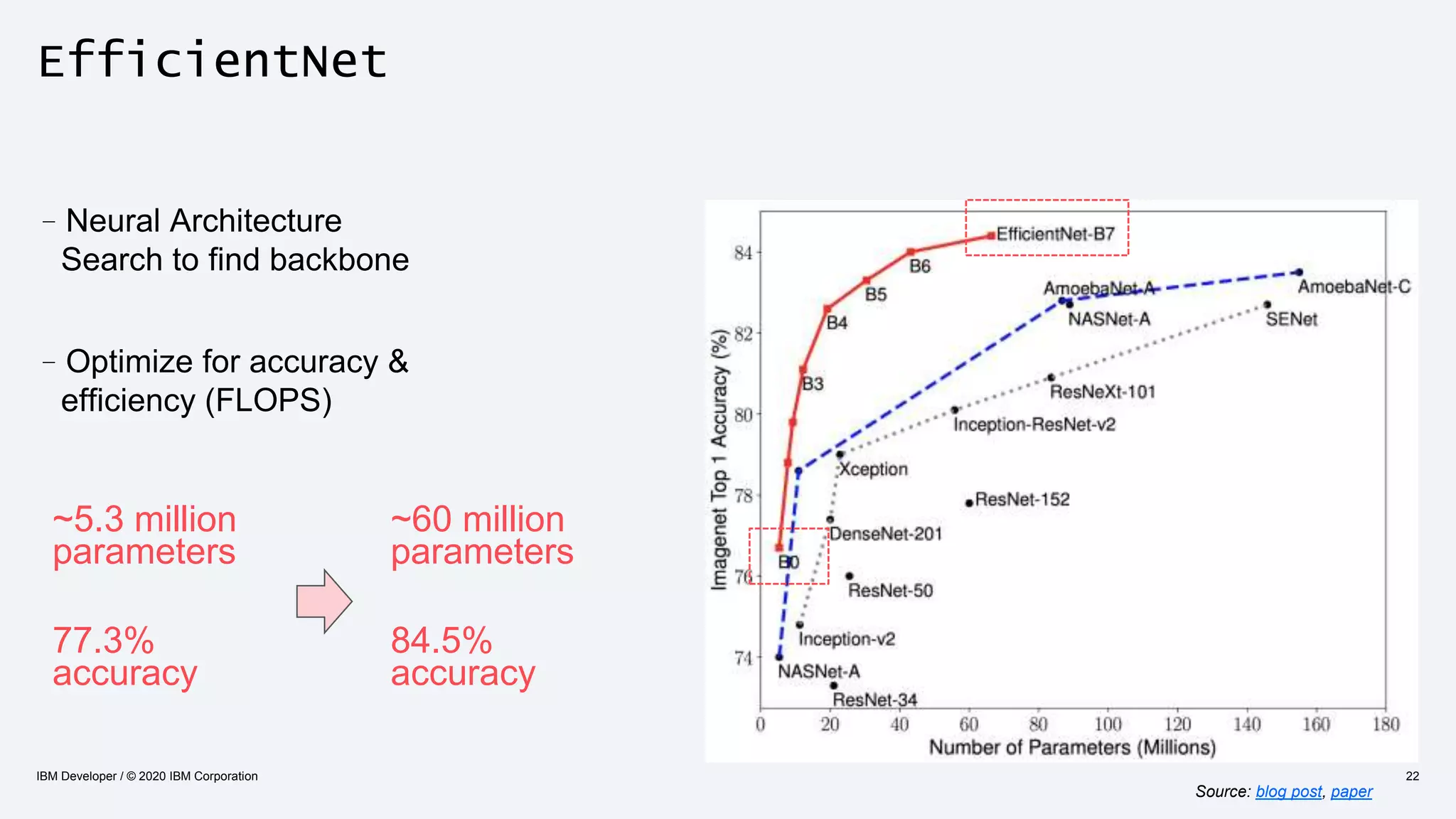

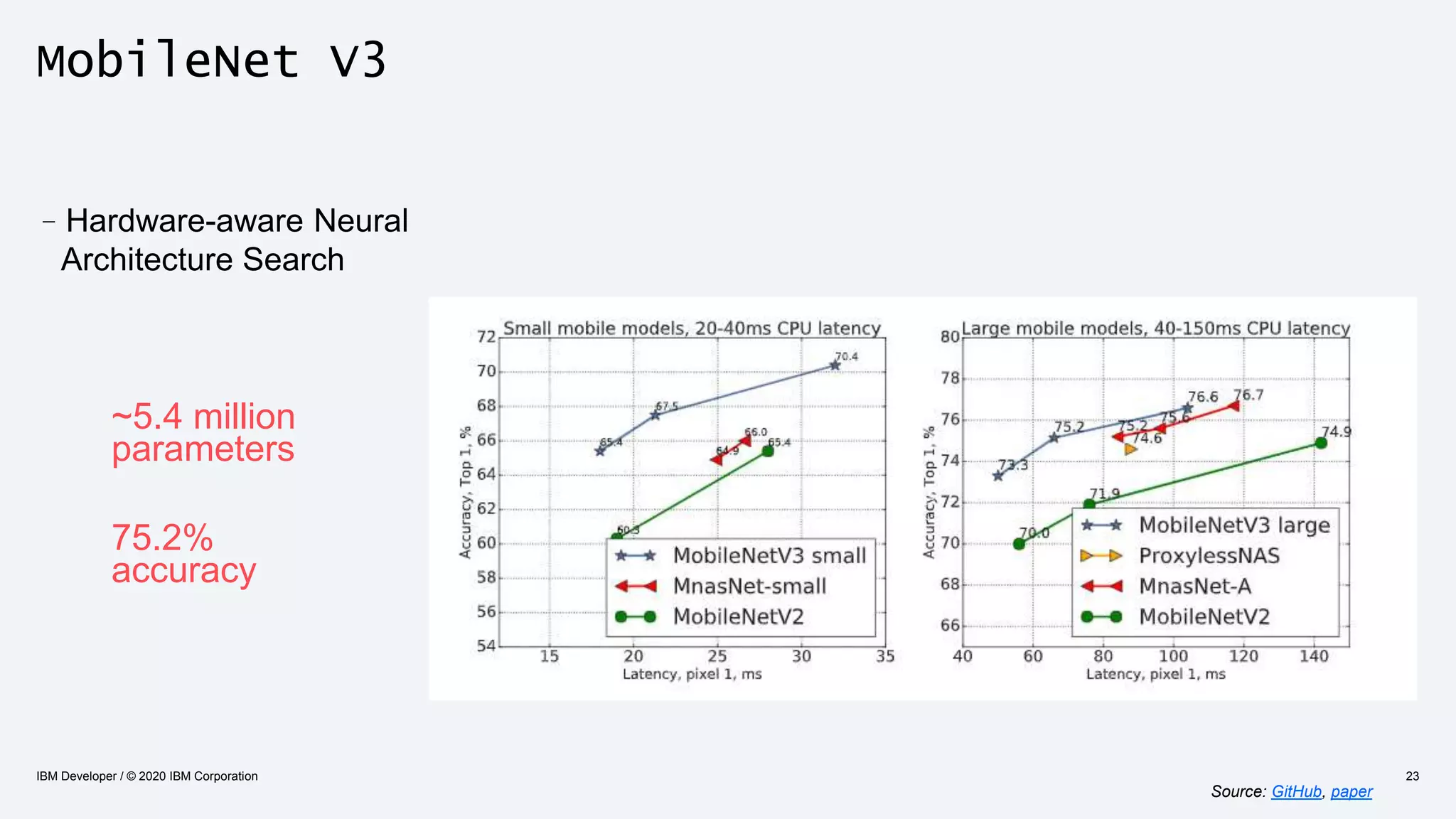

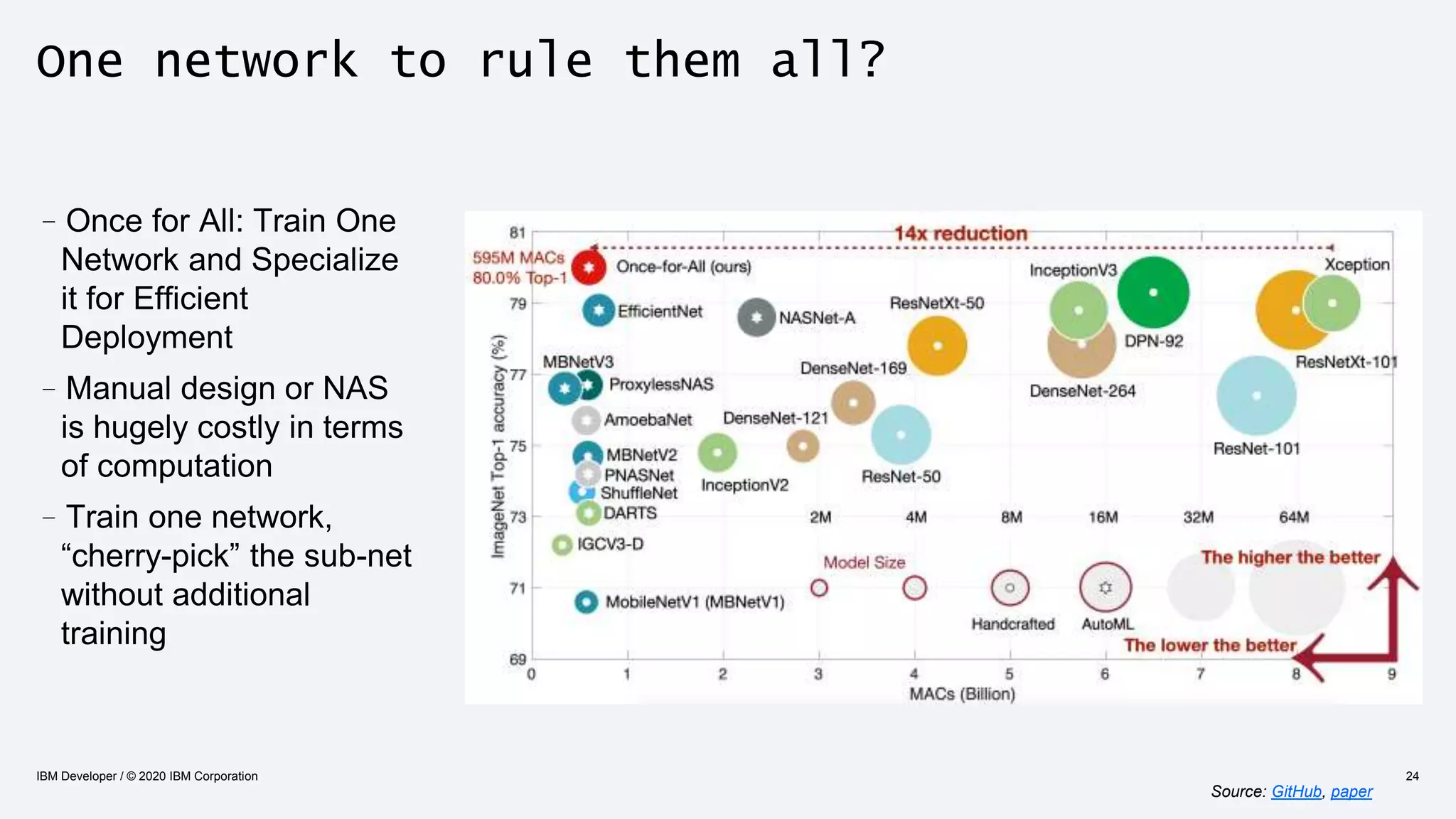

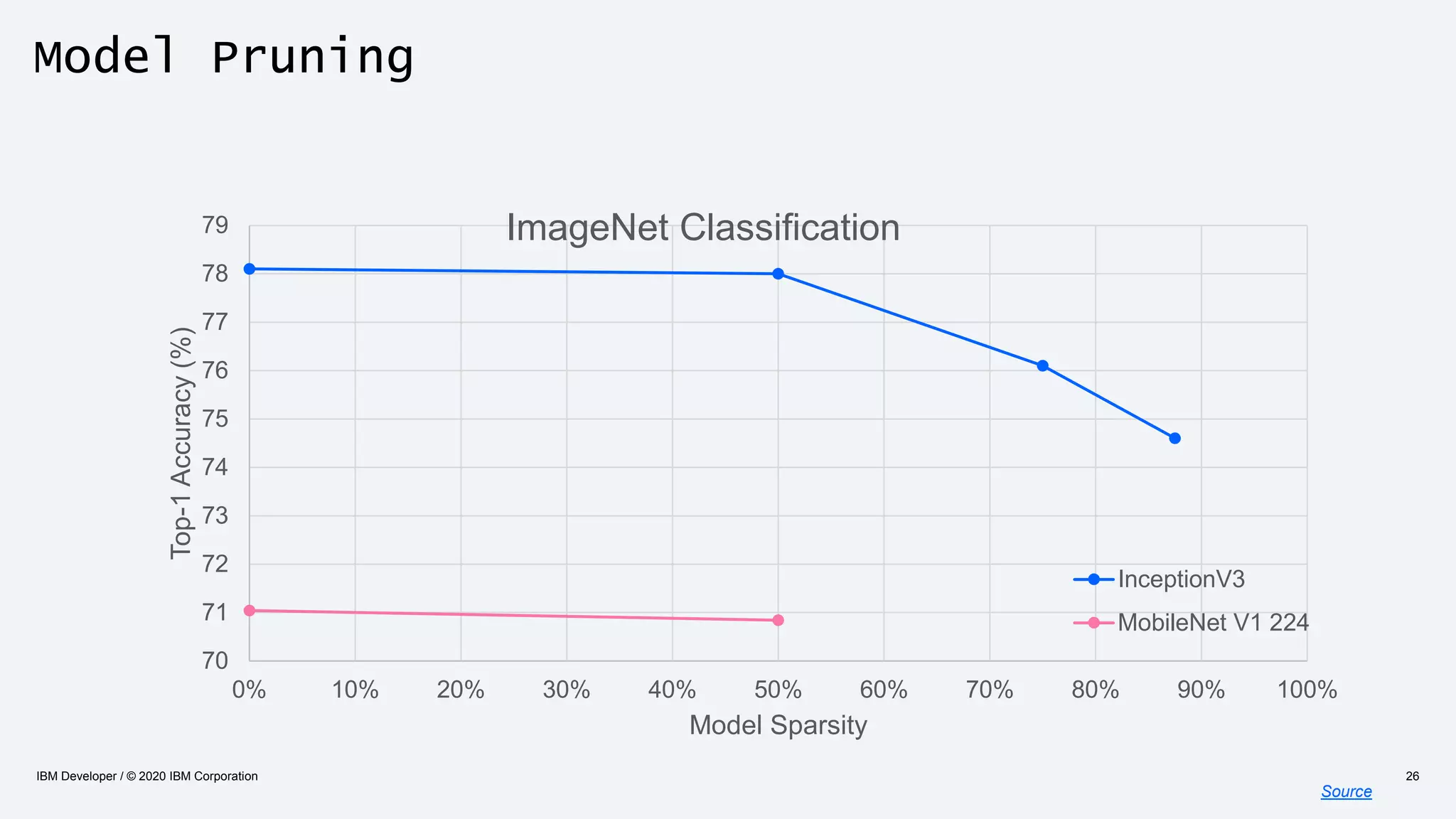

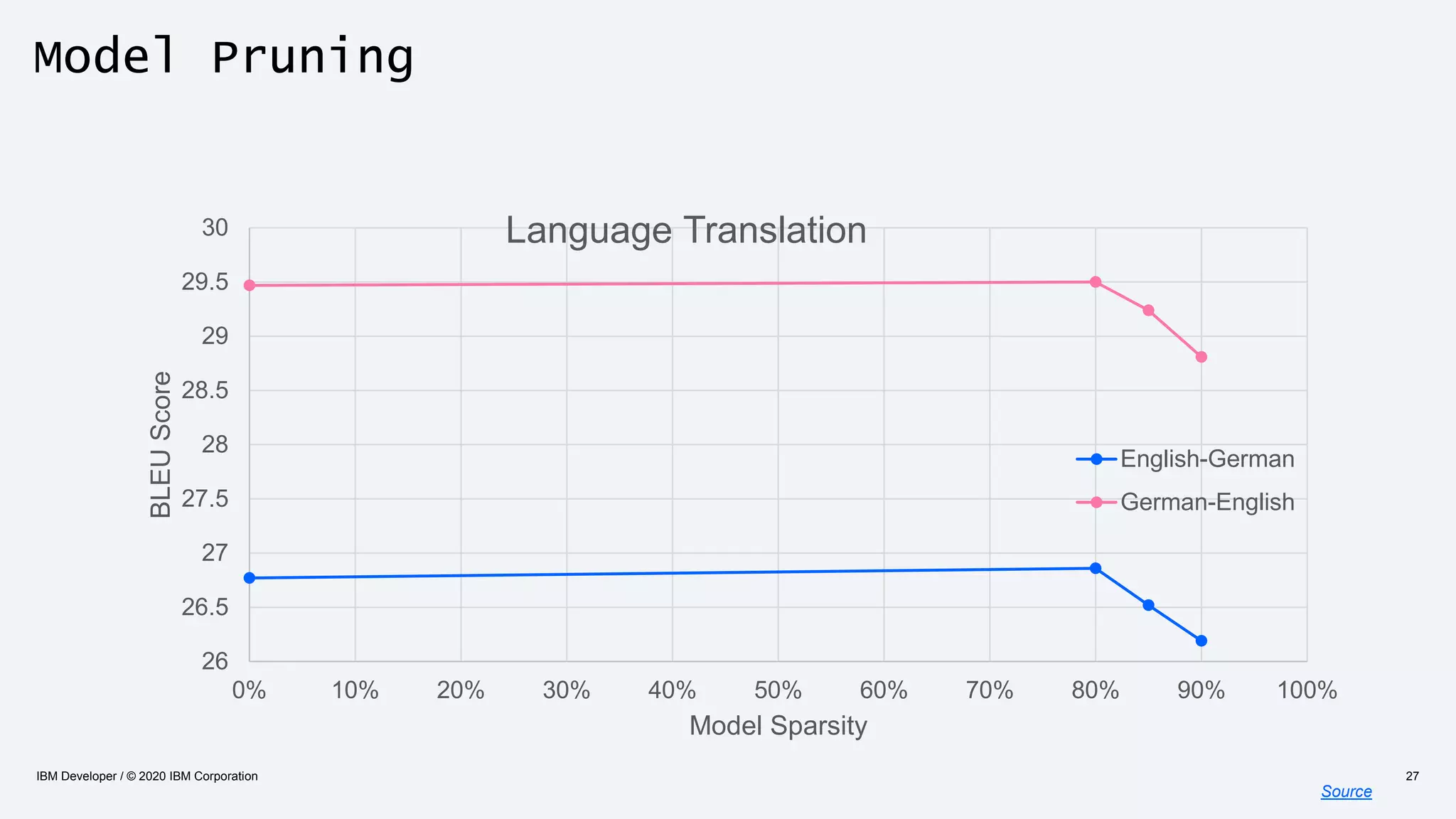

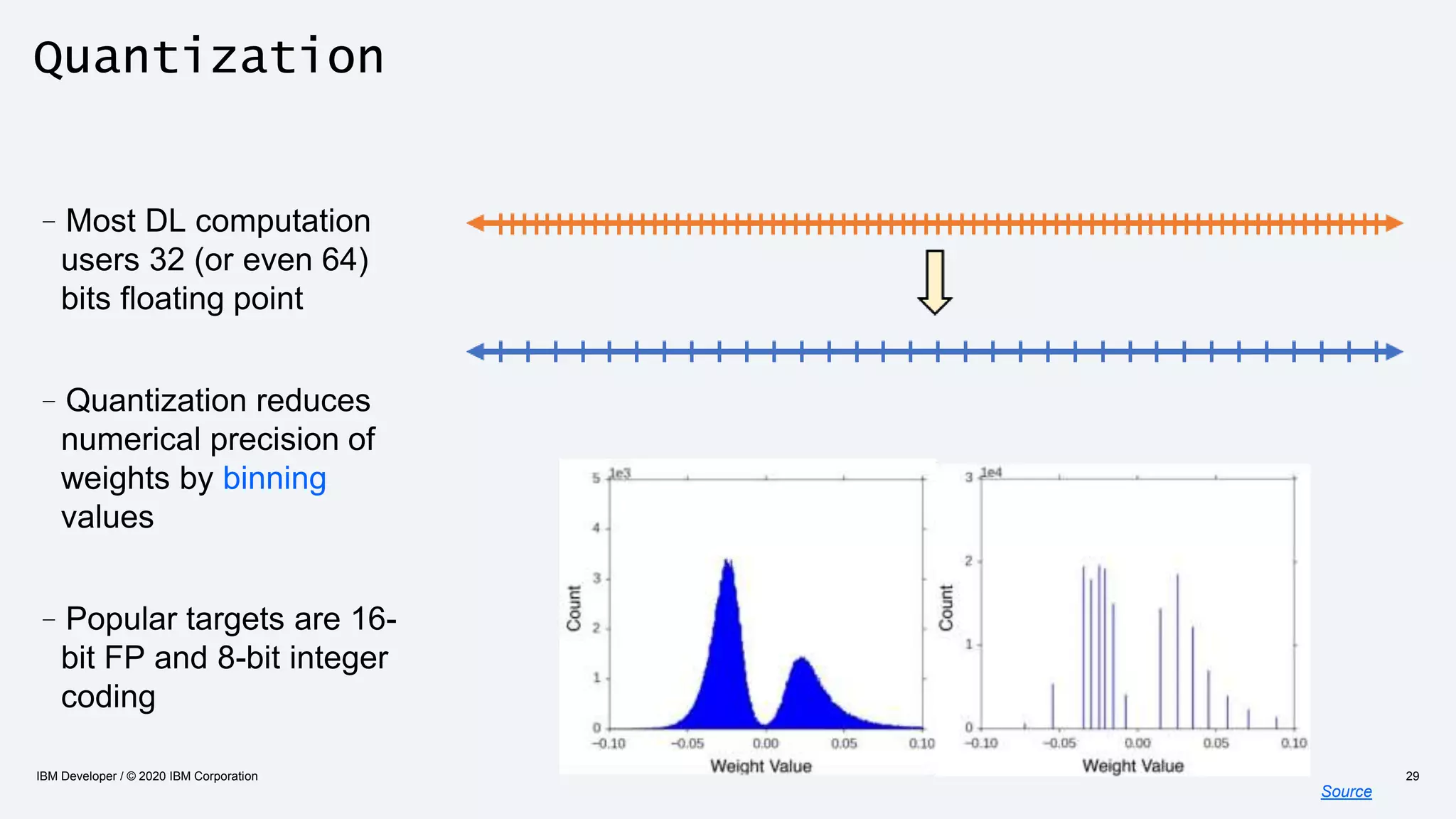

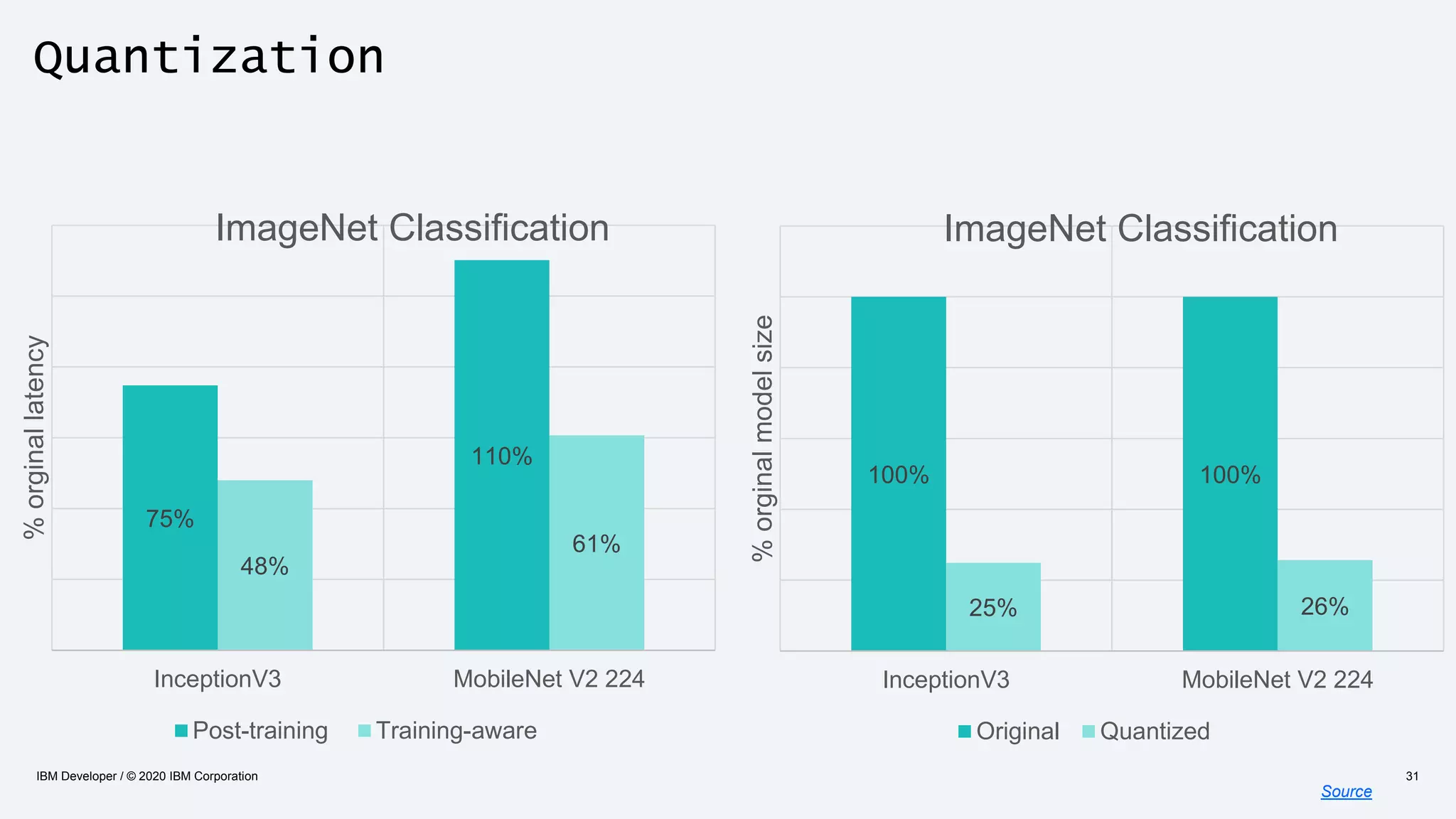

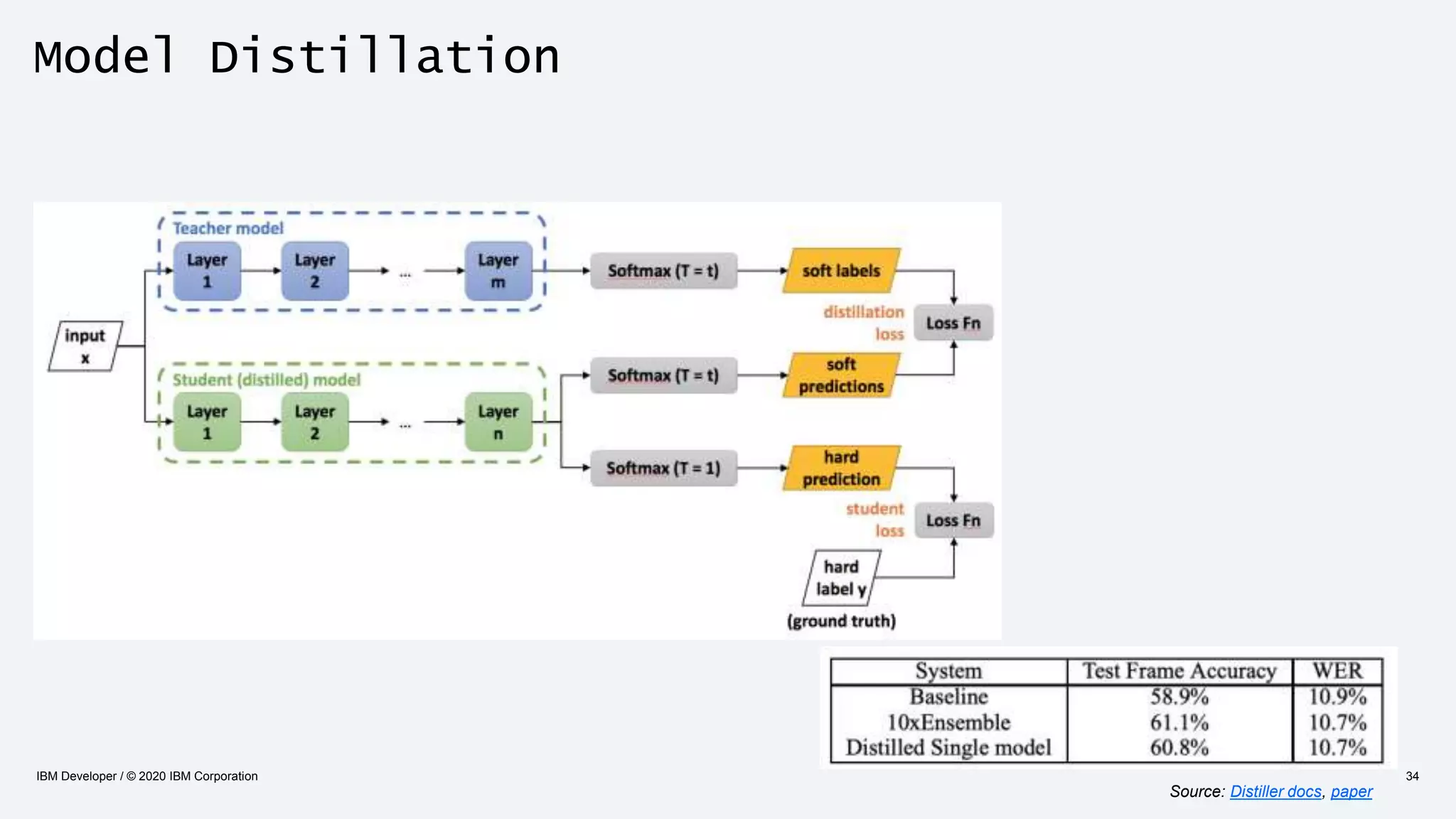

The document discusses strategies for improving the efficiency of deep learning workflows, focusing on model architectures, model compression, and model distillation. It highlights the importance of adapting machine learning models for deployment in resource-constrained environments, such as edge devices, where memory, compute, and power are limited. Techniques such as quantization and pruning are presented as ways to shrink models and reduce their resource requirements while preserving performance.
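Quantization, one of the compression techniques named in the summary, stores weights at lower precision. Below is a minimal sketch of symmetric per-tensor int8 post-training quantization, assuming plain Python lists stand in for a weight tensor. The function names `quantize_int8` and `dequantize_int8` are hypothetical illustrations, not an API from the source or from any framework. Each weight is approximated as `scale * q`, where `q` is an integer in [-127, 127]. Storing `q` in one byte instead of a 4-byte float32 cuts storage roughly fourfold.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization: w ~ scale * q, with q in [-127, 127].

    Hypothetical helper for illustration only.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; use scale 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [scale * v for v in q]


weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
```

Rounding guarantees that each restored weight is within half a quantization step (`scale / 2`) of the original. Production toolkits typically add per-channel scales, zero points for asymmetric ranges, and calibration data. This sketch omits all three.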
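Pruning, the other compression technique named in the summary, removes weights that contribute little to the output. Below is a minimal sketch of unstructured magnitude pruning, assuming a flat list of weights and a target `sparsity` fraction. The helper `magnitude_prune` is a hypothetical illustration, not the method used in the source. It zeroes the smallest-magnitude weights and leaves the rest unchanged.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero the `sparsity` fraction of weights with the smallest |w|.

    Hypothetical helper for illustration only.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by magnitude, smallest first (stable sort breaks ties by position).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


weights = [0.8, -0.05, 0.3, -0.9, 0.01, 0.4]
pruned = magnitude_prune(weights, sparsity=0.5)
```

At 50% sparsity, the three smallest magnitudes (0.01, -0.05, 0.3) are zeroed. In practice, pruning is usually followed by fine-tuning to recover accuracy. Structured variants remove whole channels or filters instead of individual weights, which makes the speedup easier to realize on ordinary hardware.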
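Model distillation, also listed among the document's focus areas, trains a small student model to match a larger teacher. Below is a minimal sketch of a distillation loss. It assumes the common temperature-scaled soft-target formulation popularized by Hinton et al., which the source does not confirm it uses. The student is penalized by a weighted sum of two terms: the KL divergence from the teacher's softened distribution, scaled by `T^2`, and ordinary cross-entropy on the hard label. The names `temperature` and `alpha` and their default values are assumptions chosen for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      label: int, temperature: float = 2.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher || student) + (1 - alpha) * CE(student, label).

    Hypothetical helper; temperature and alpha defaults are illustrative assumptions.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence between the softened teacher and student distributions.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    soft = kl * temperature ** 2  # T^2 keeps gradient magnitudes comparable across T
    # Standard cross-entropy against the hard label at temperature 1.
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard
```

When the student's logits equal the teacher's, the KL term vanishes and only the hard-label cross-entropy remains. Any mismatch with the teacher adds a positive soft-target penalty.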