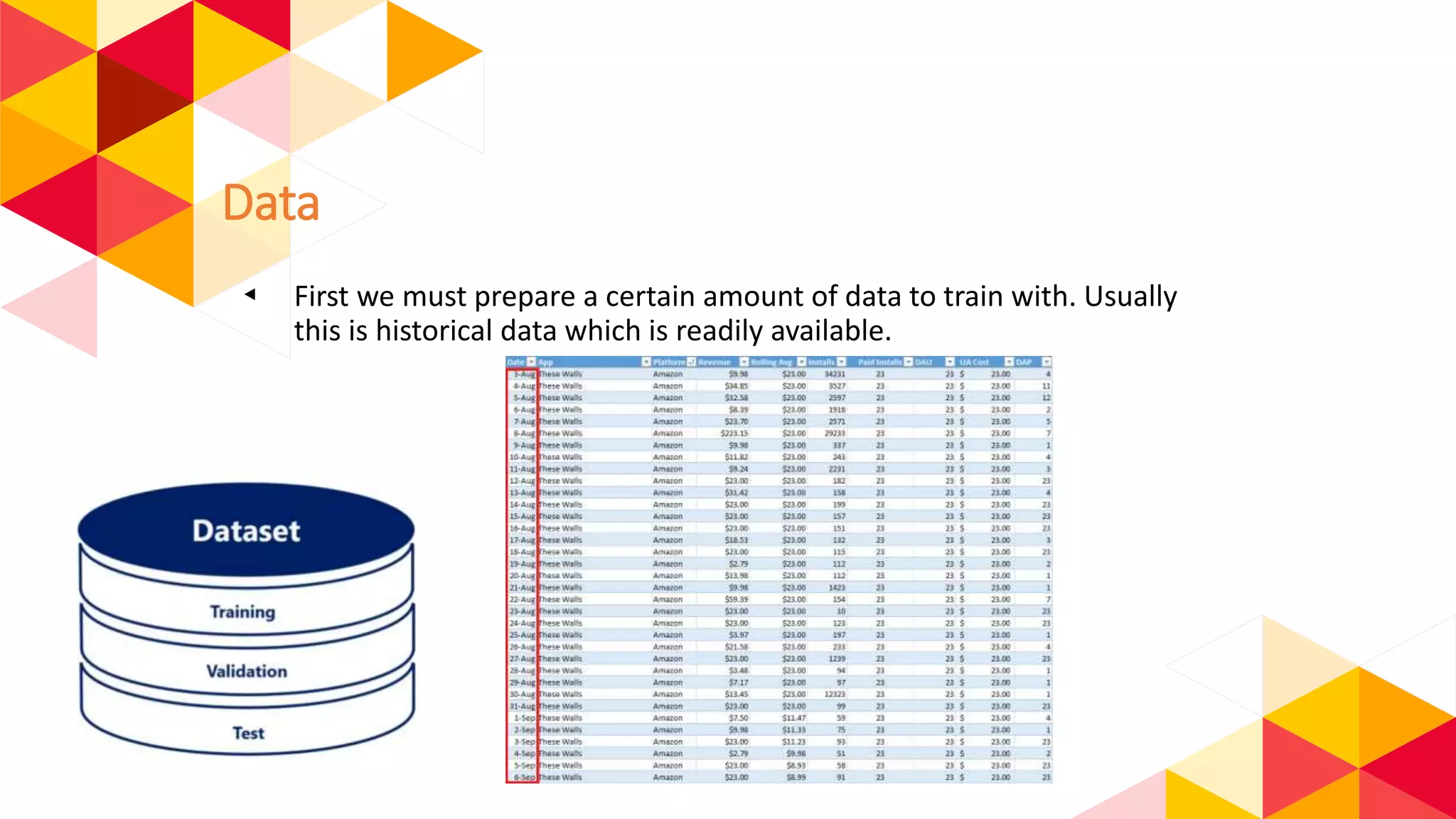

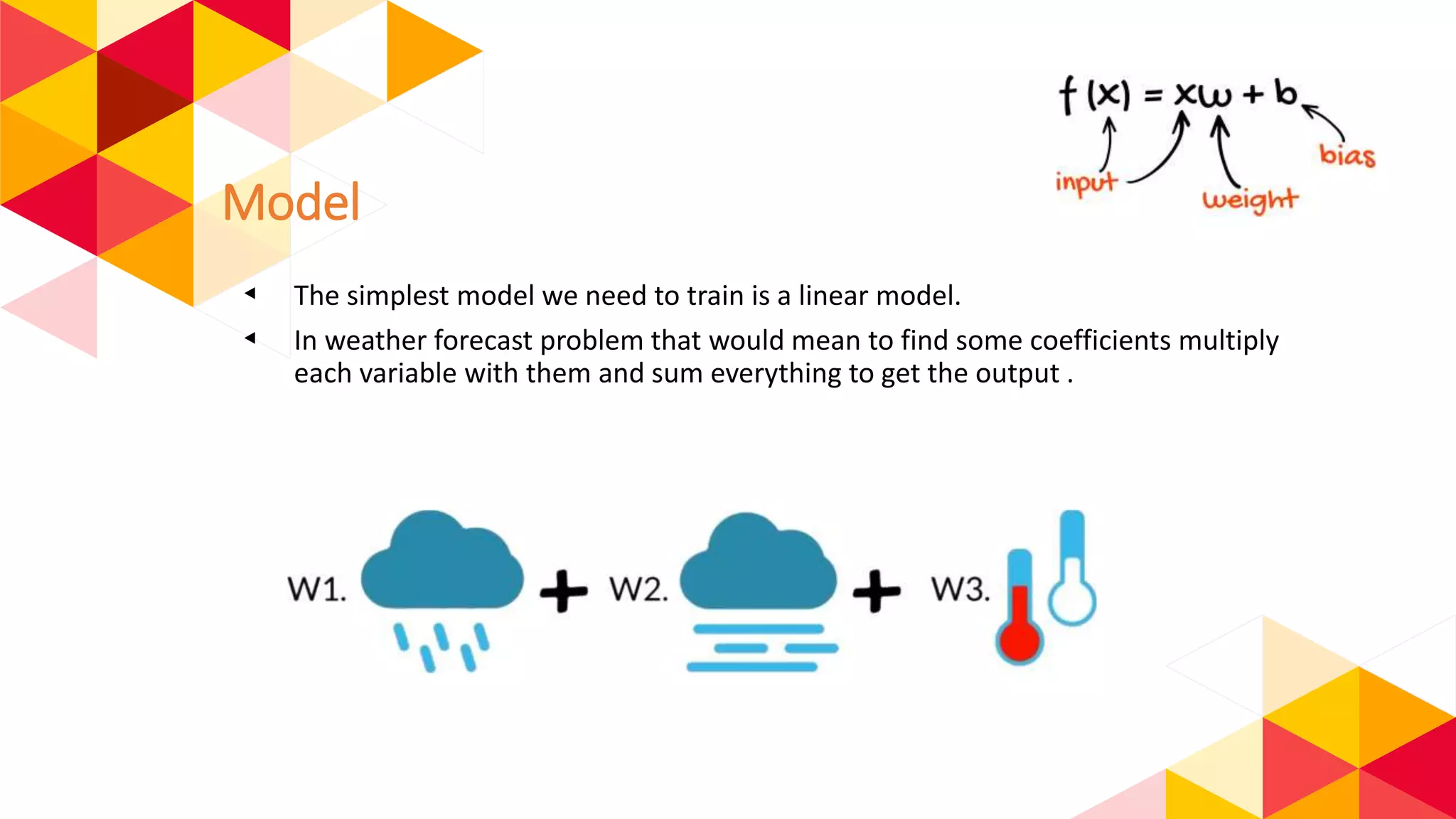

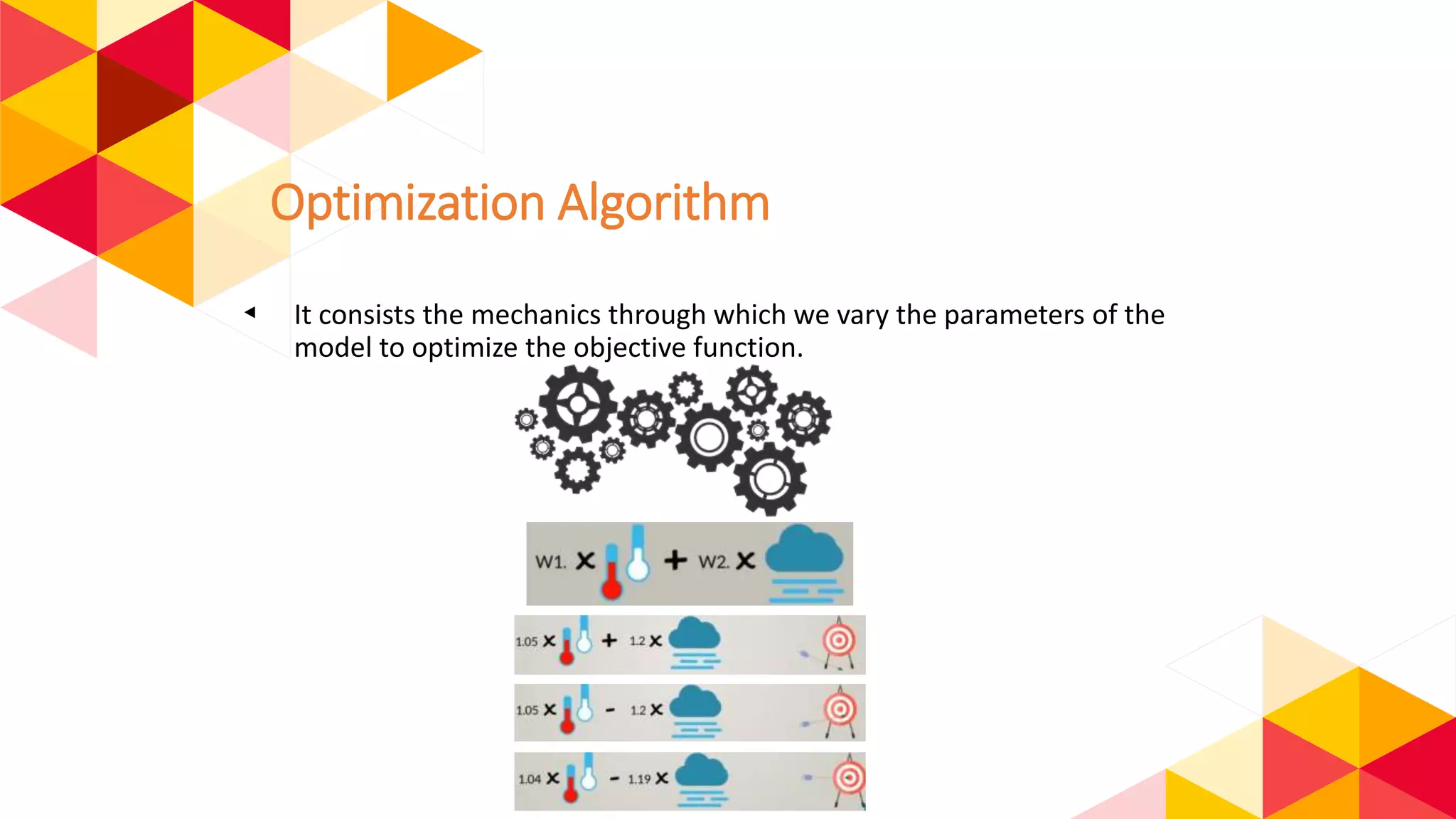

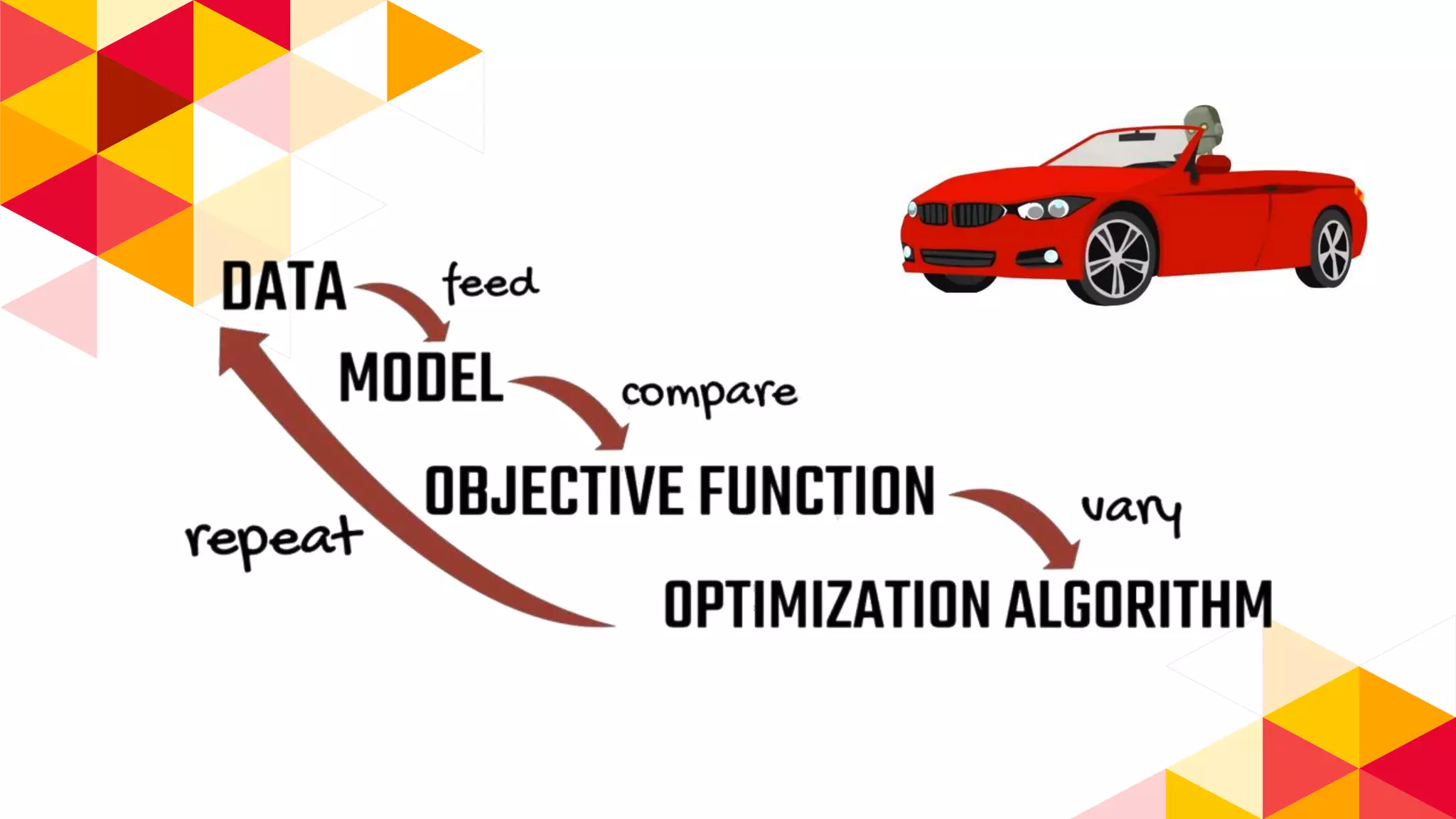

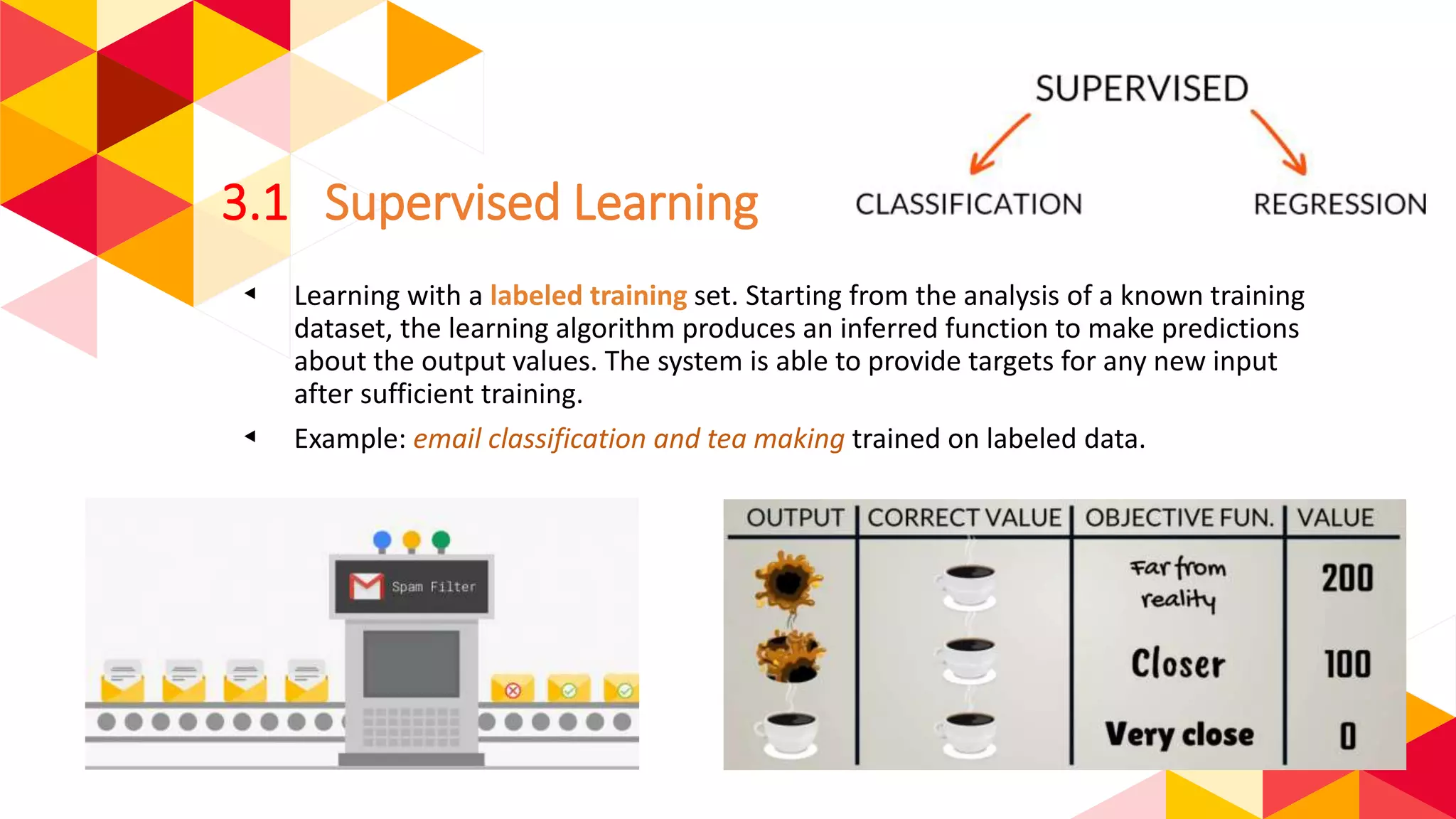

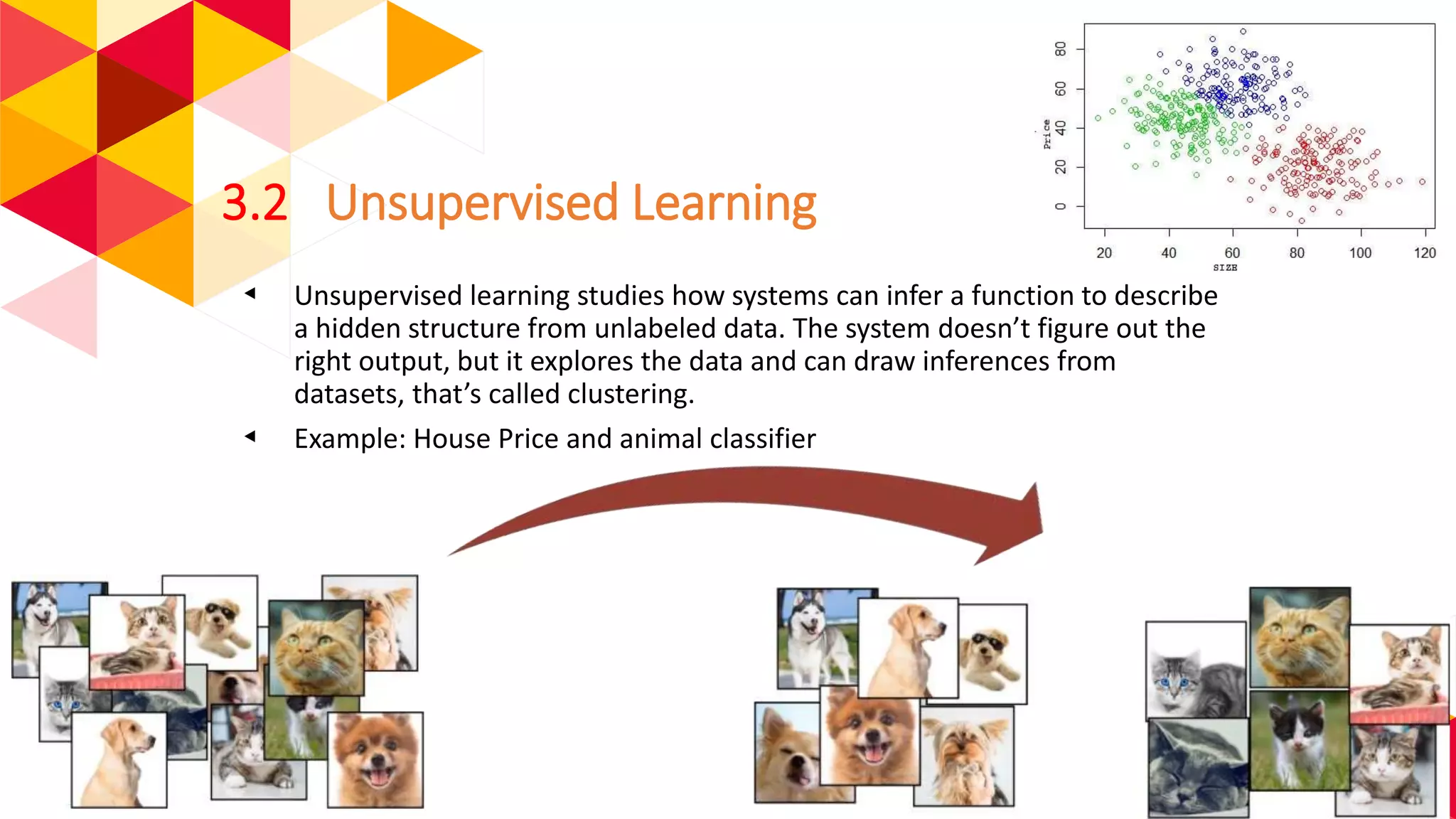

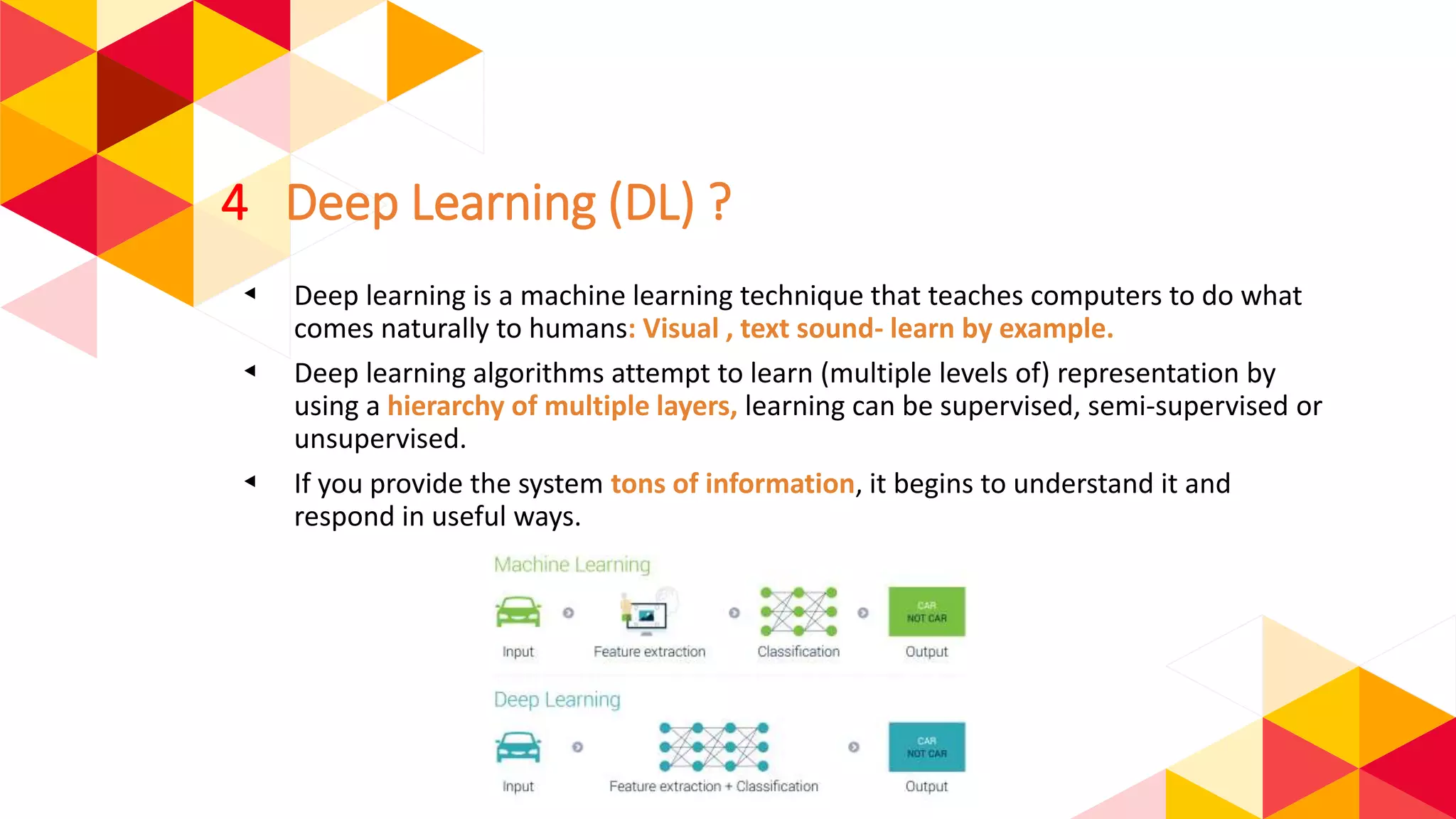

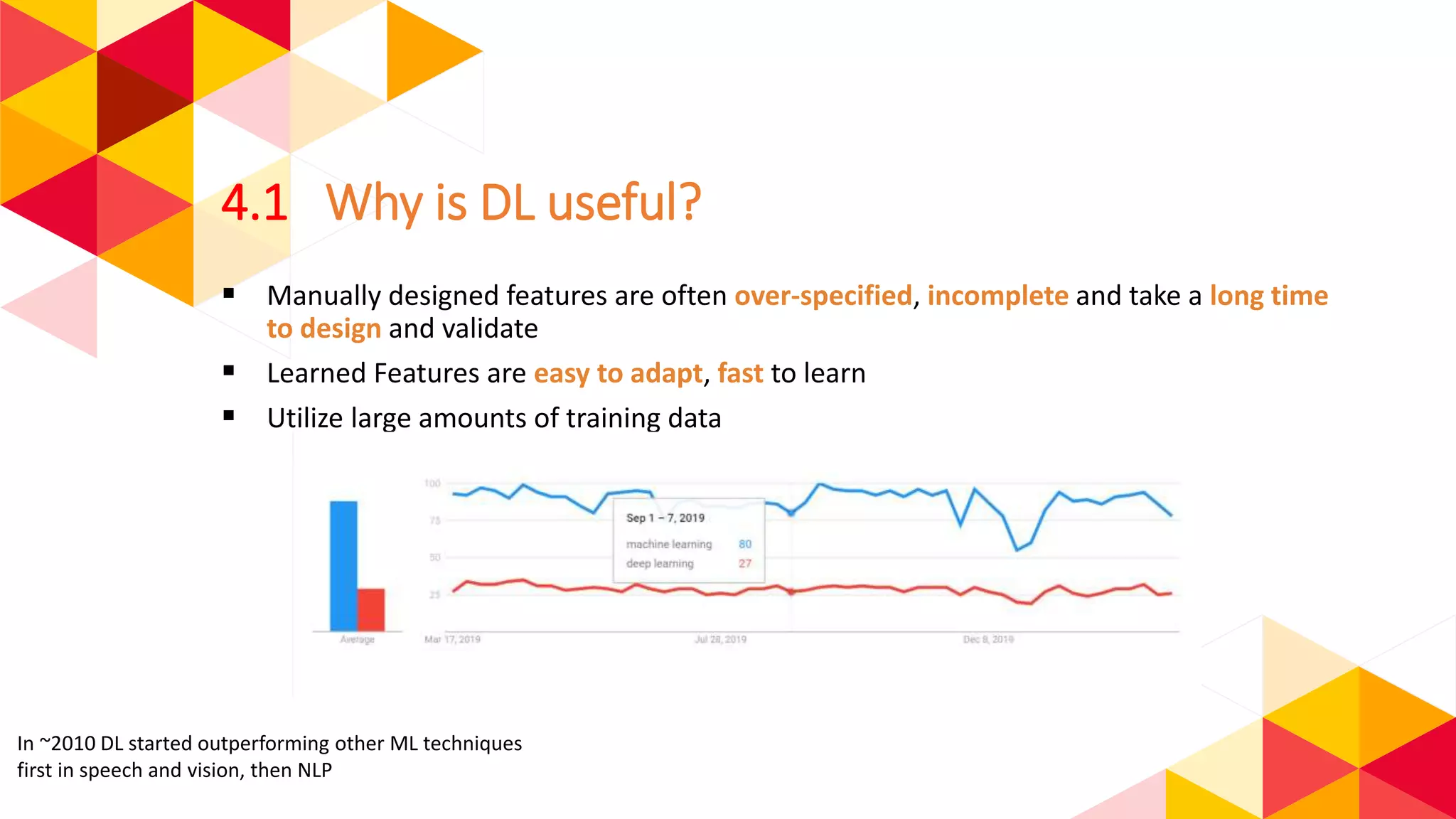

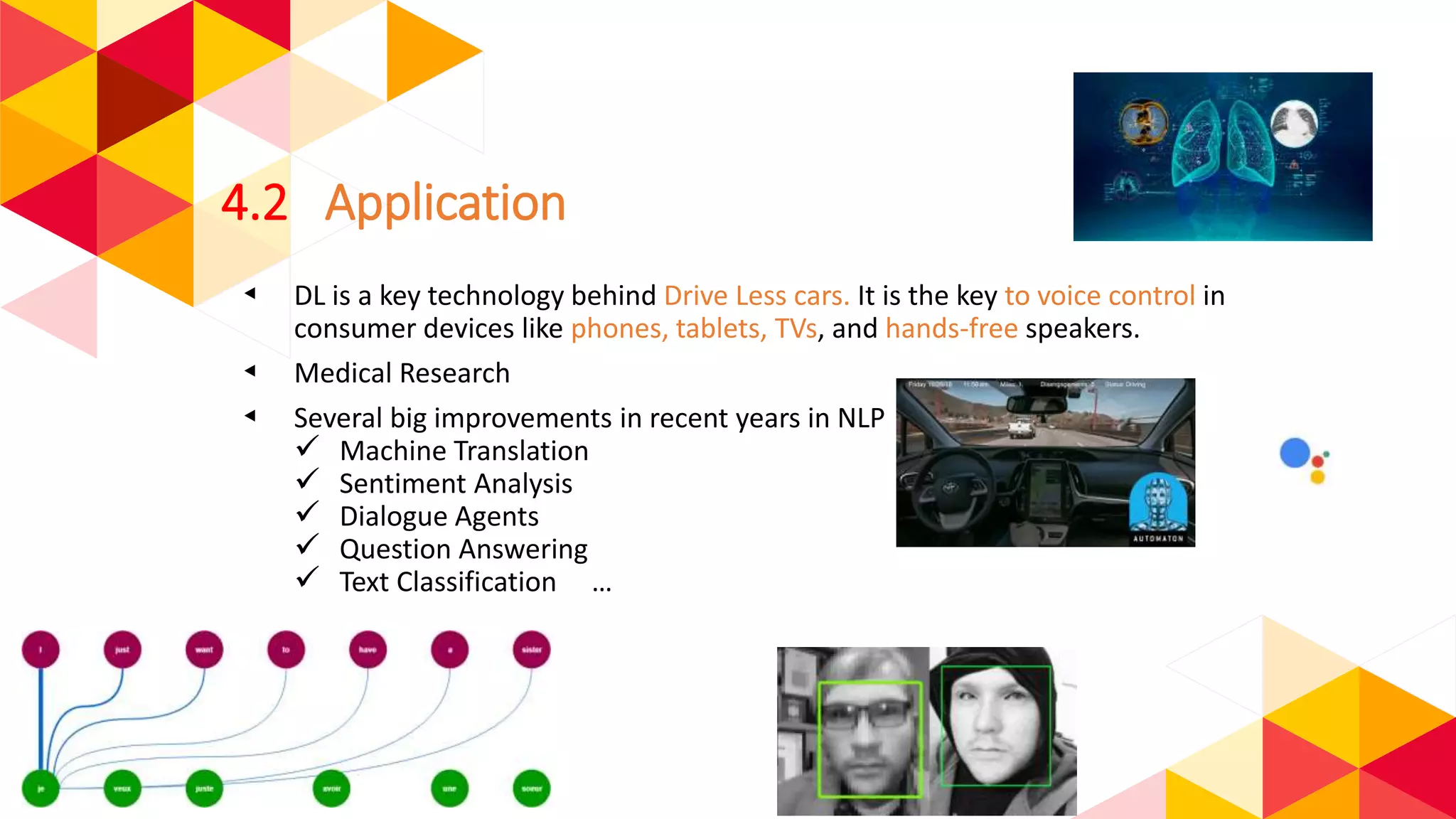

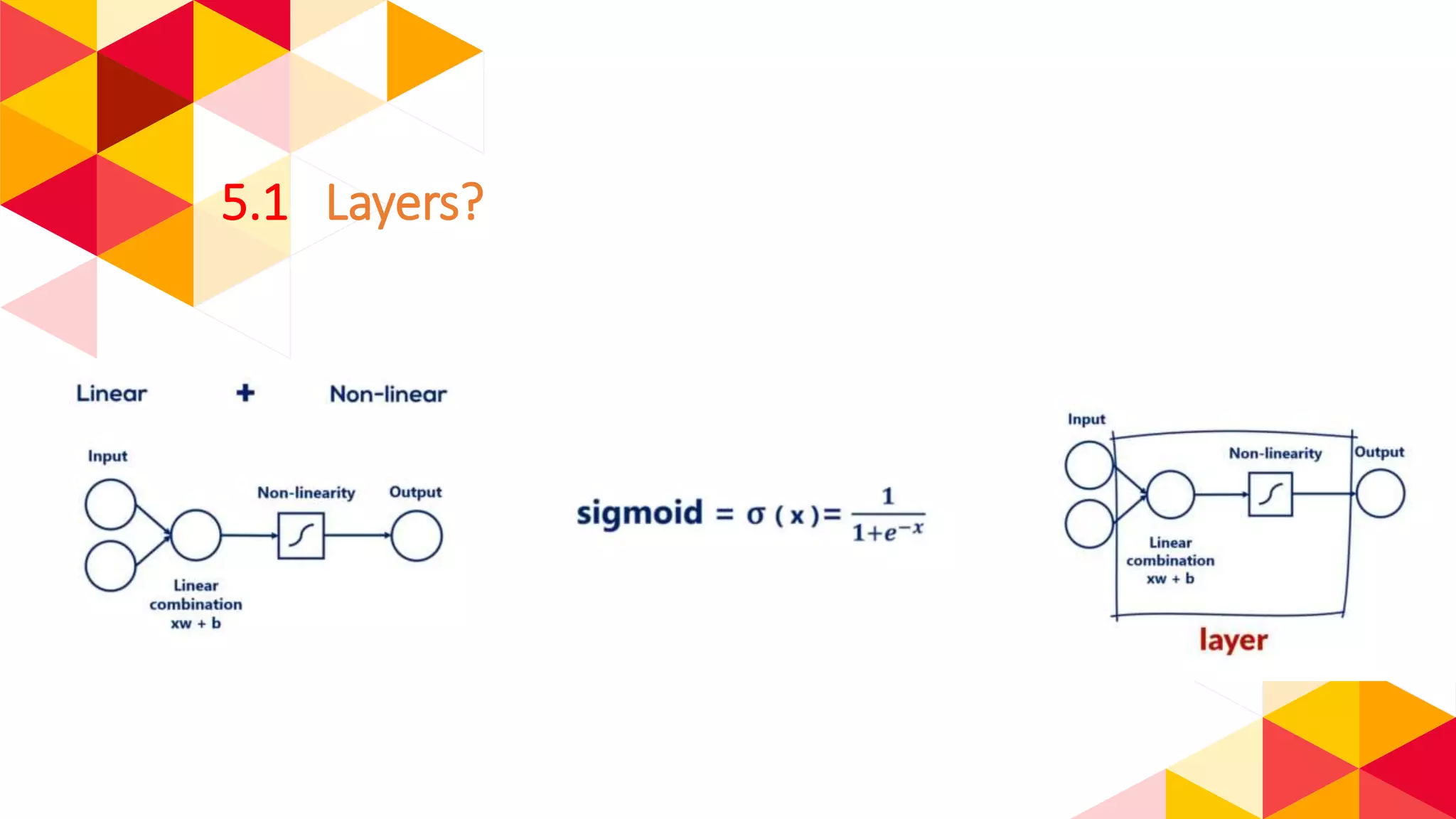

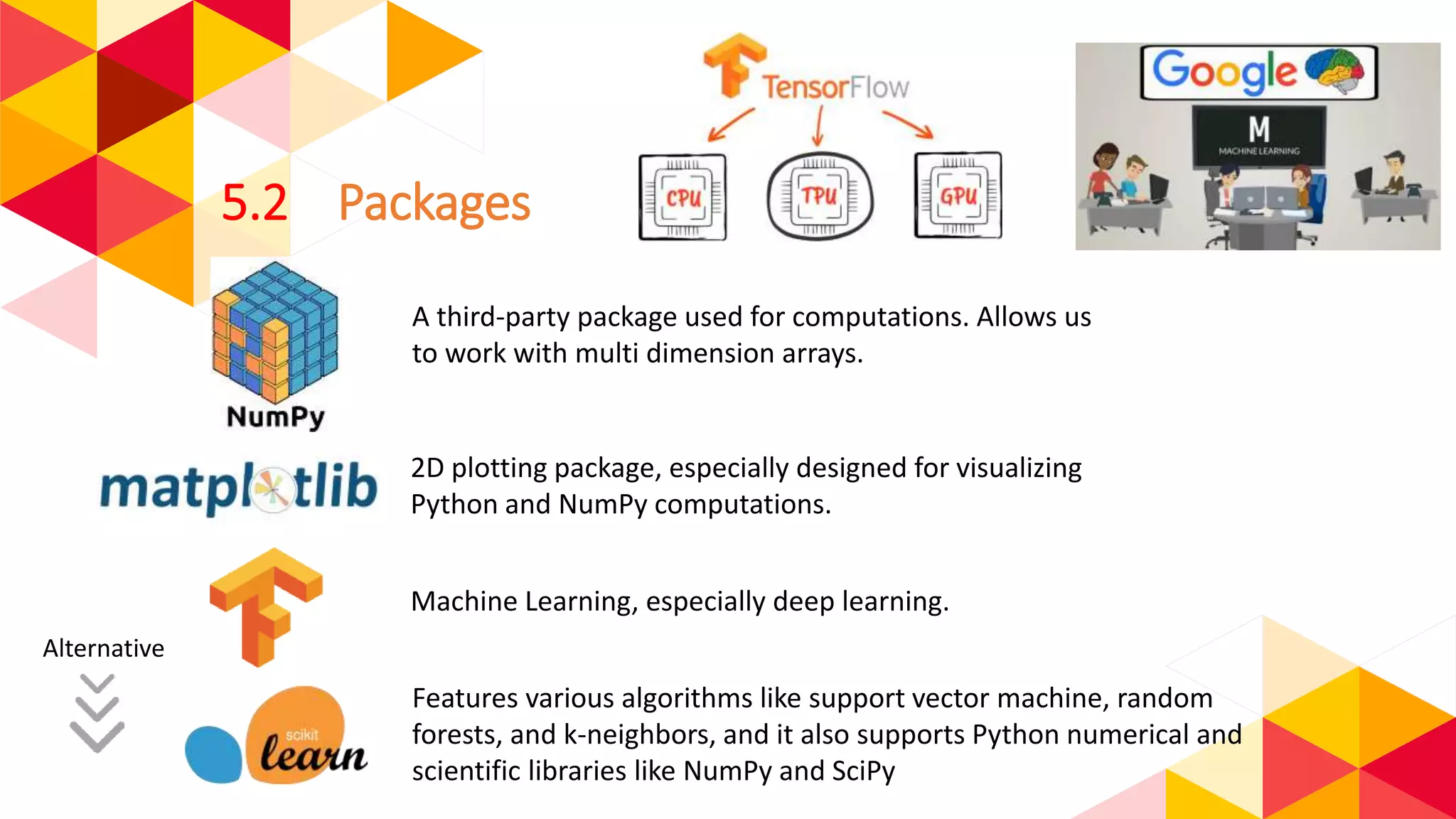

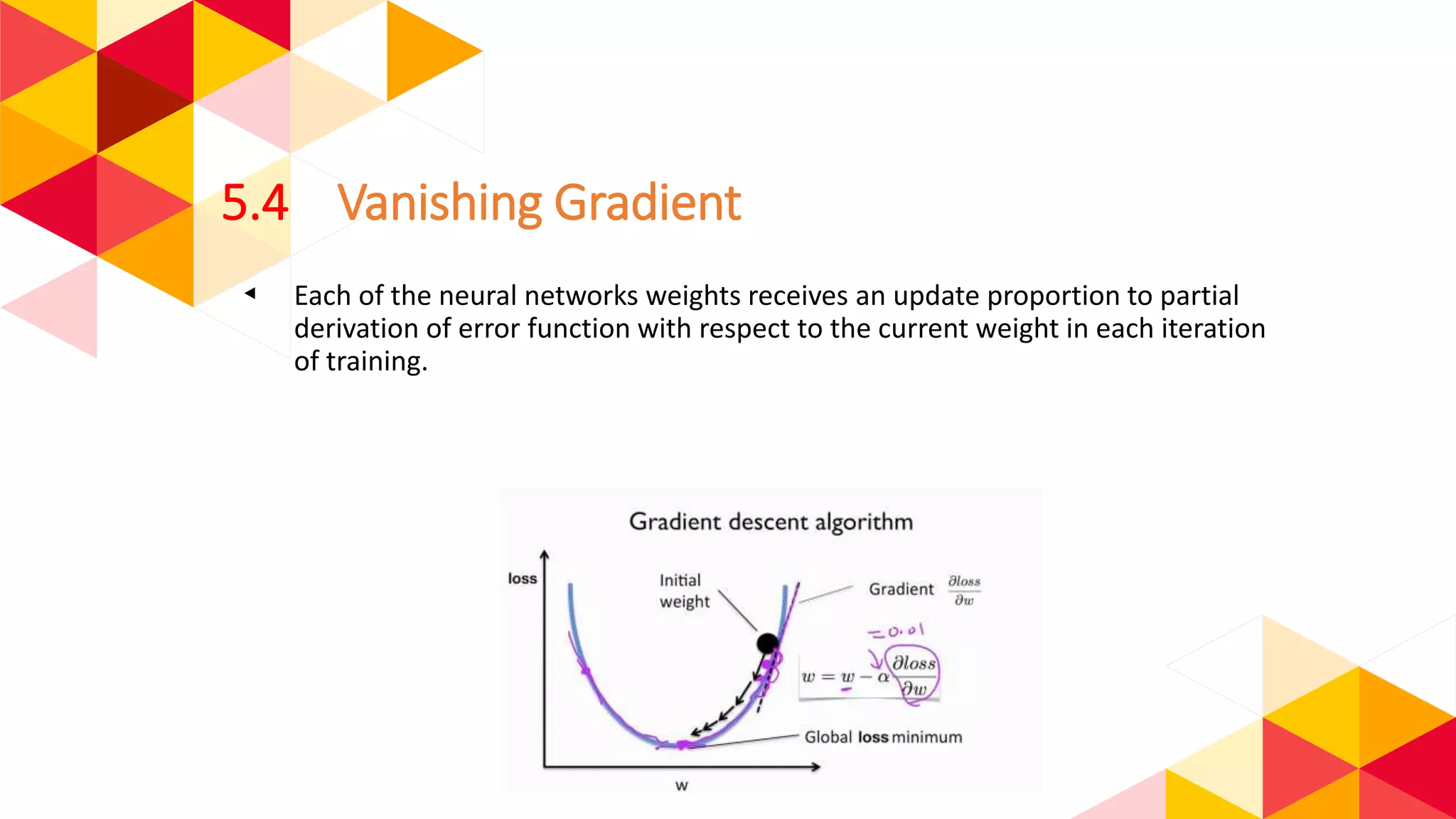

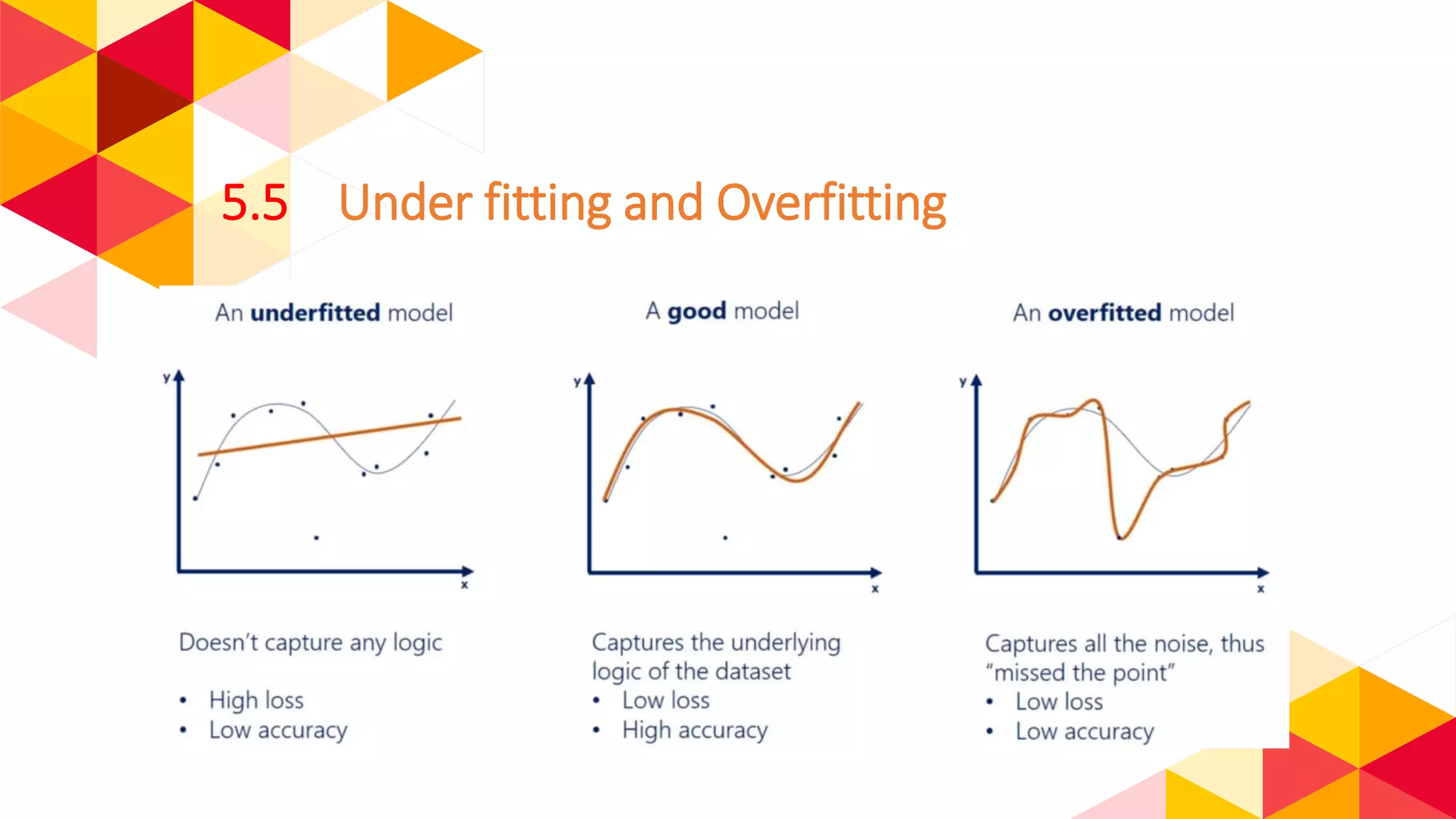

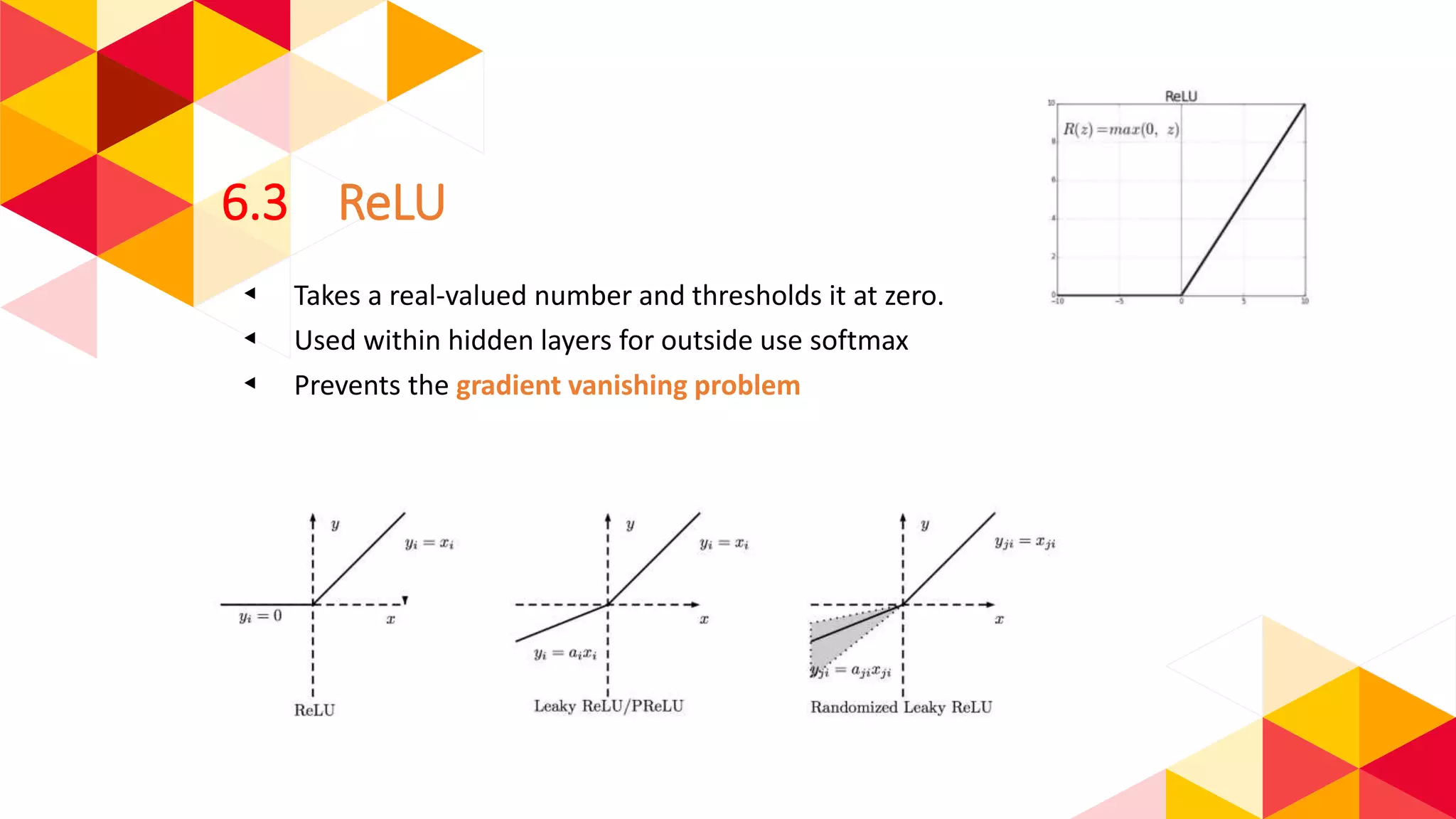

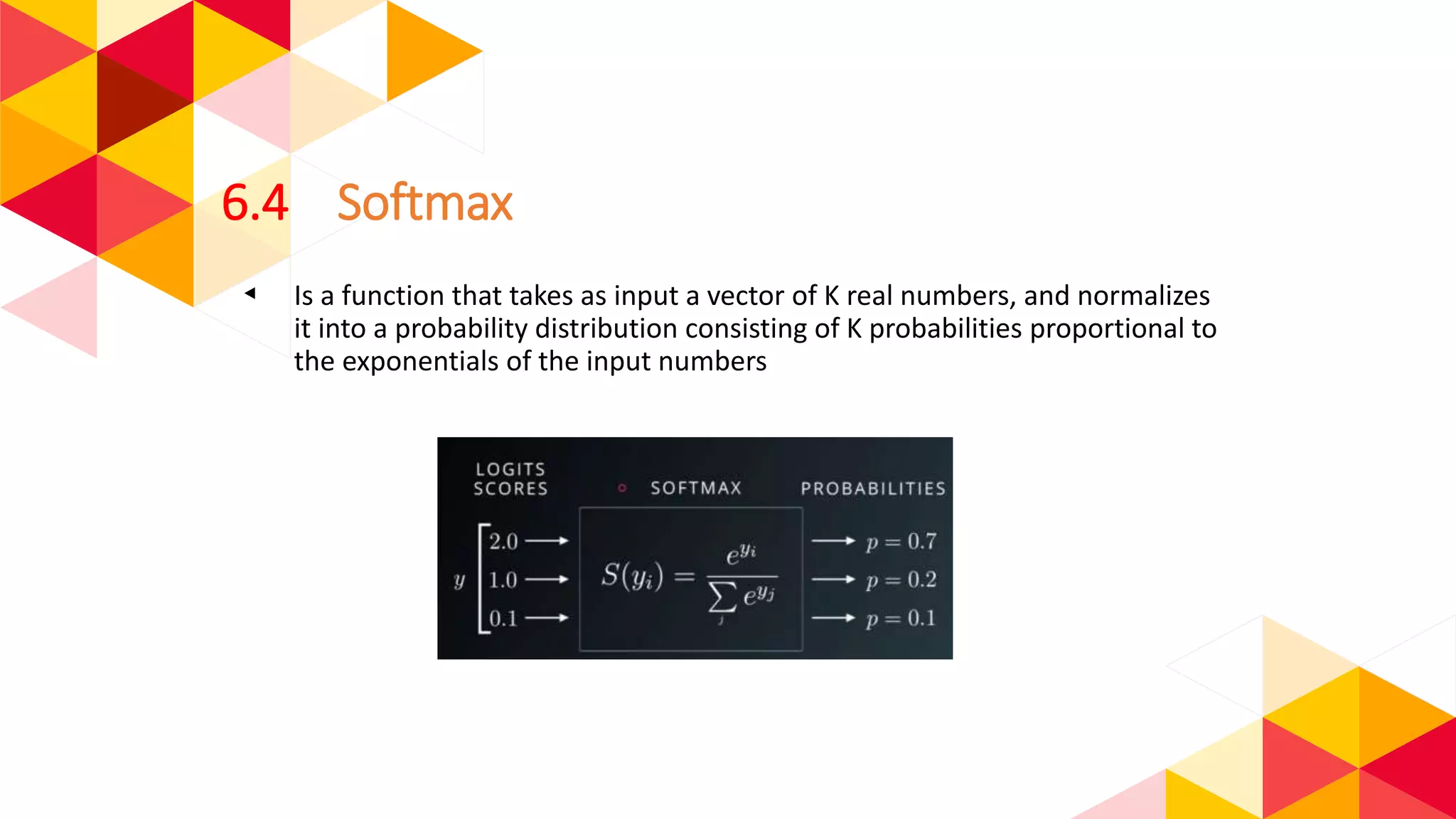

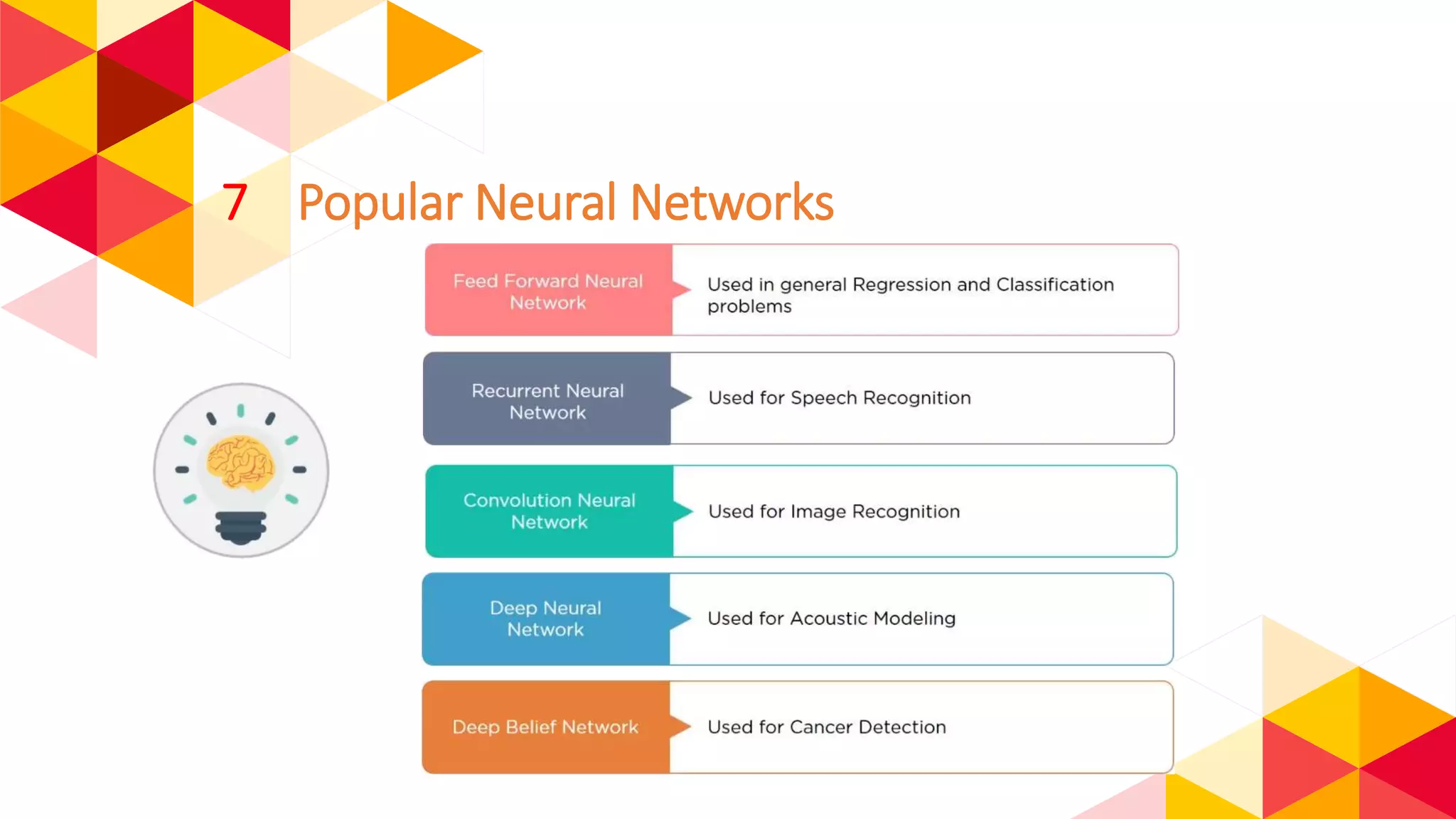

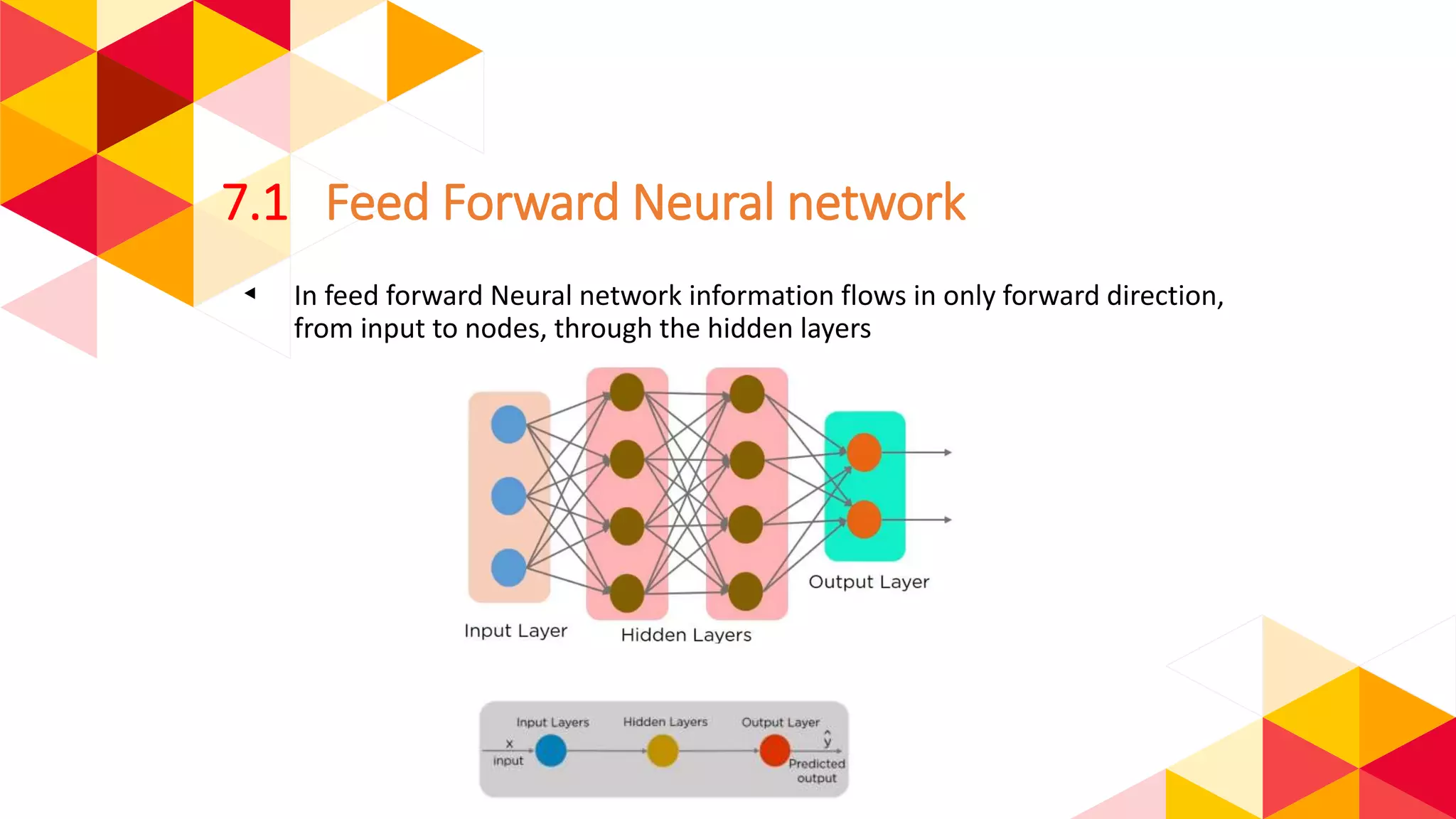

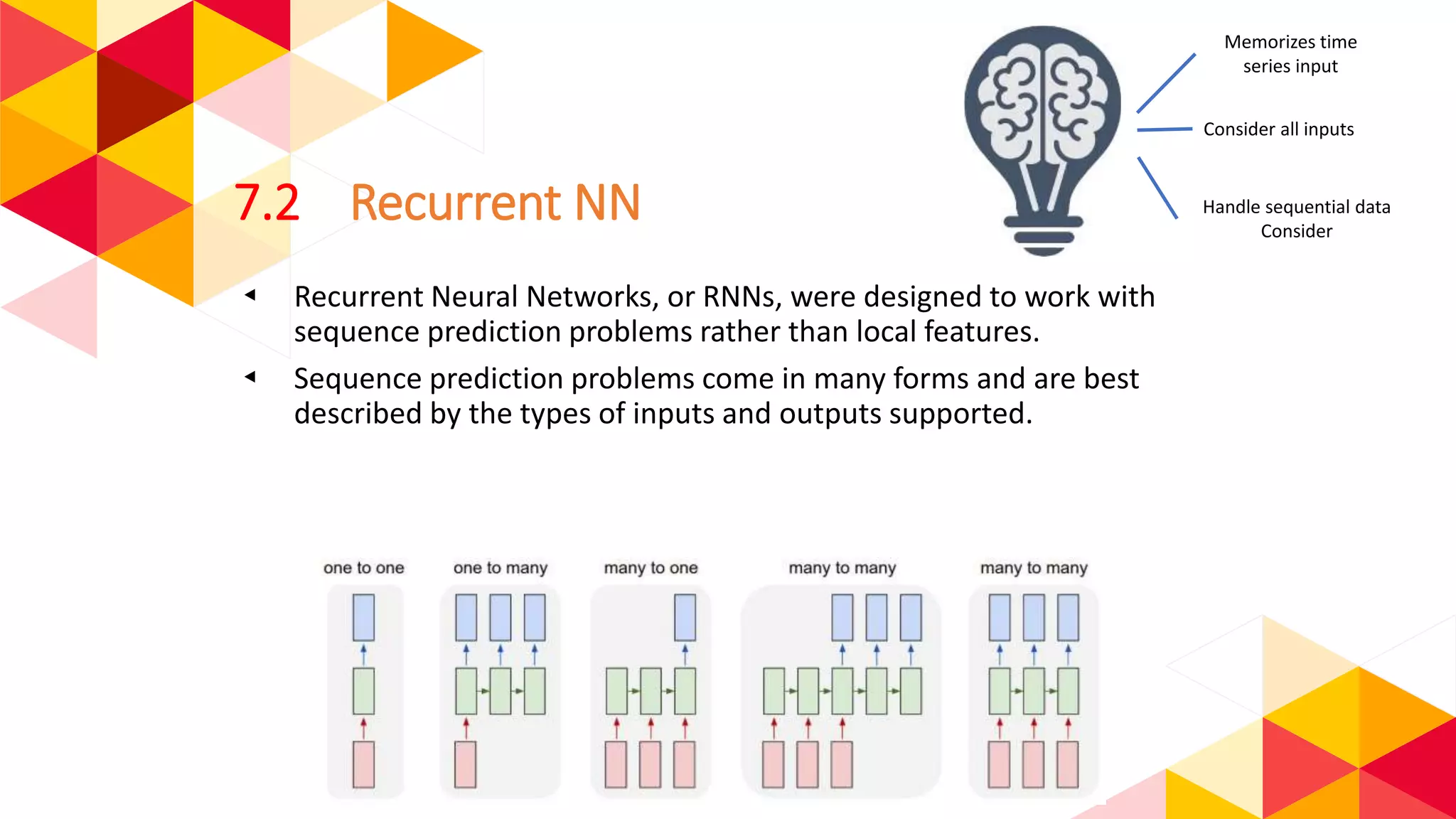

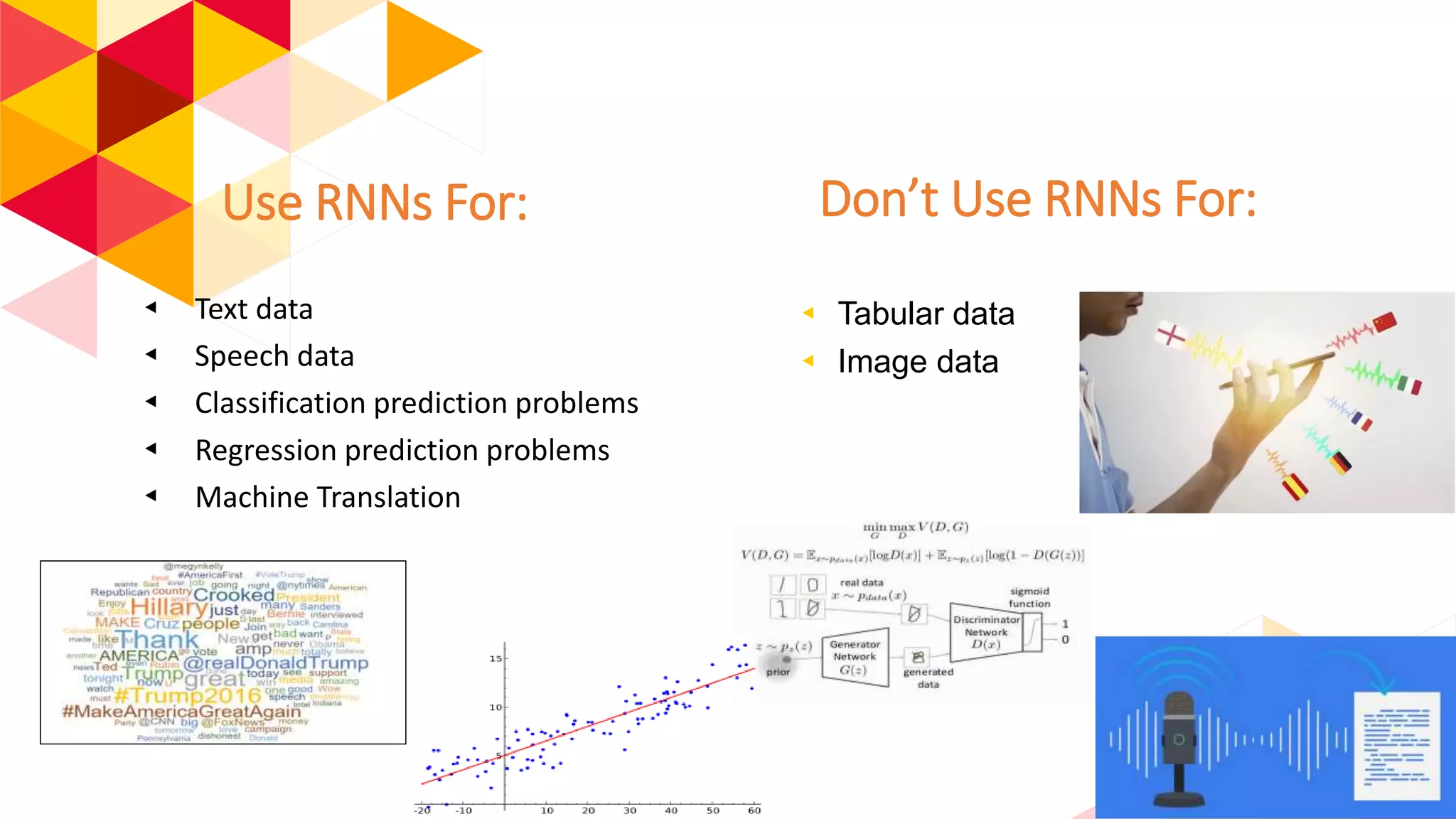

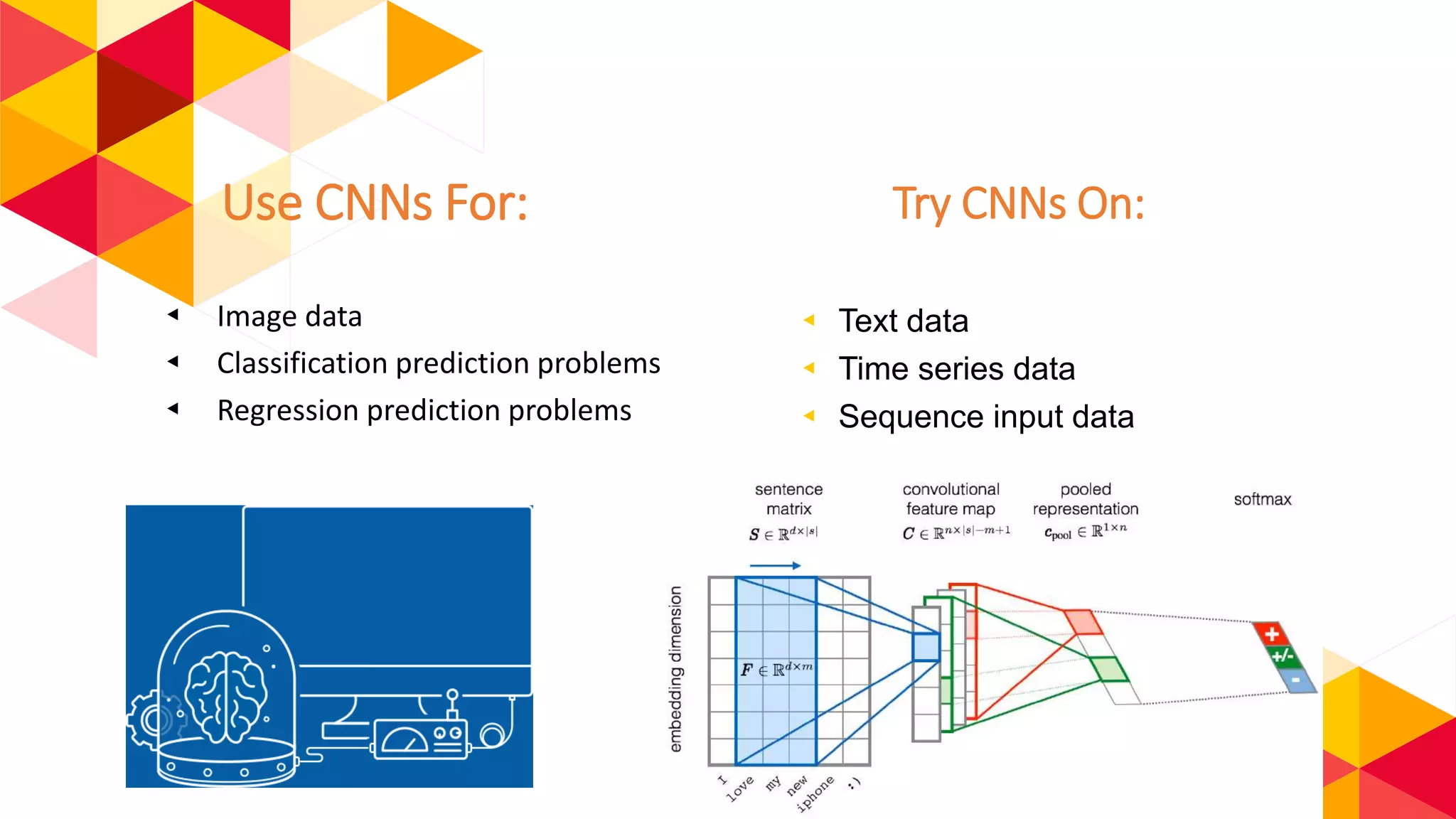

This document outlines the fundamentals of machine learning (ML) and deep learning (DL): definitions, algorithms, and architectures. It covers the main types of ML algorithms (supervised, unsupervised, and reinforcement learning) and several neural network architectures, with real-world applications such as speech recognition and image classification. It also discusses activation functions, optimization algorithms, and common challenges in training models.