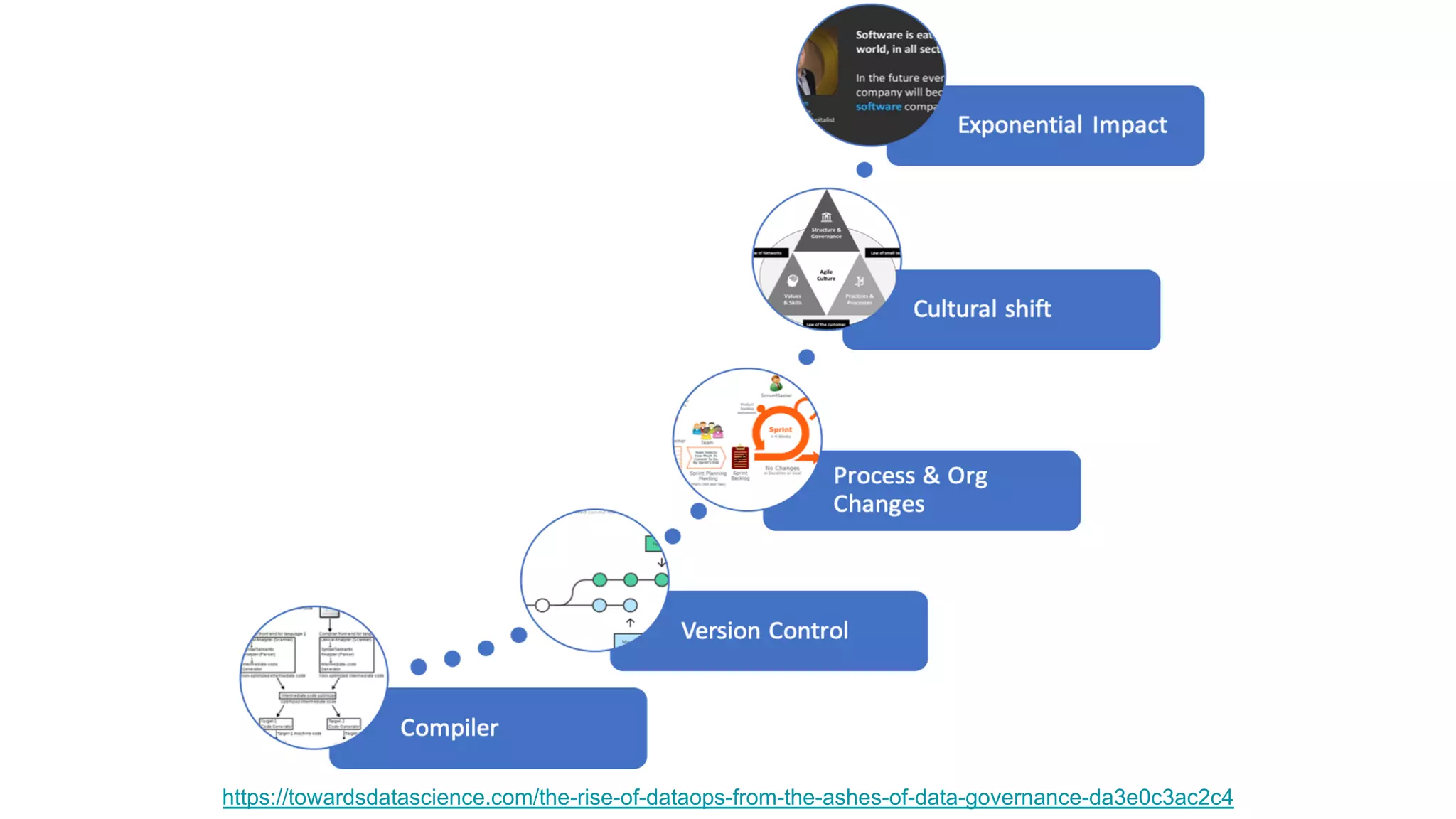

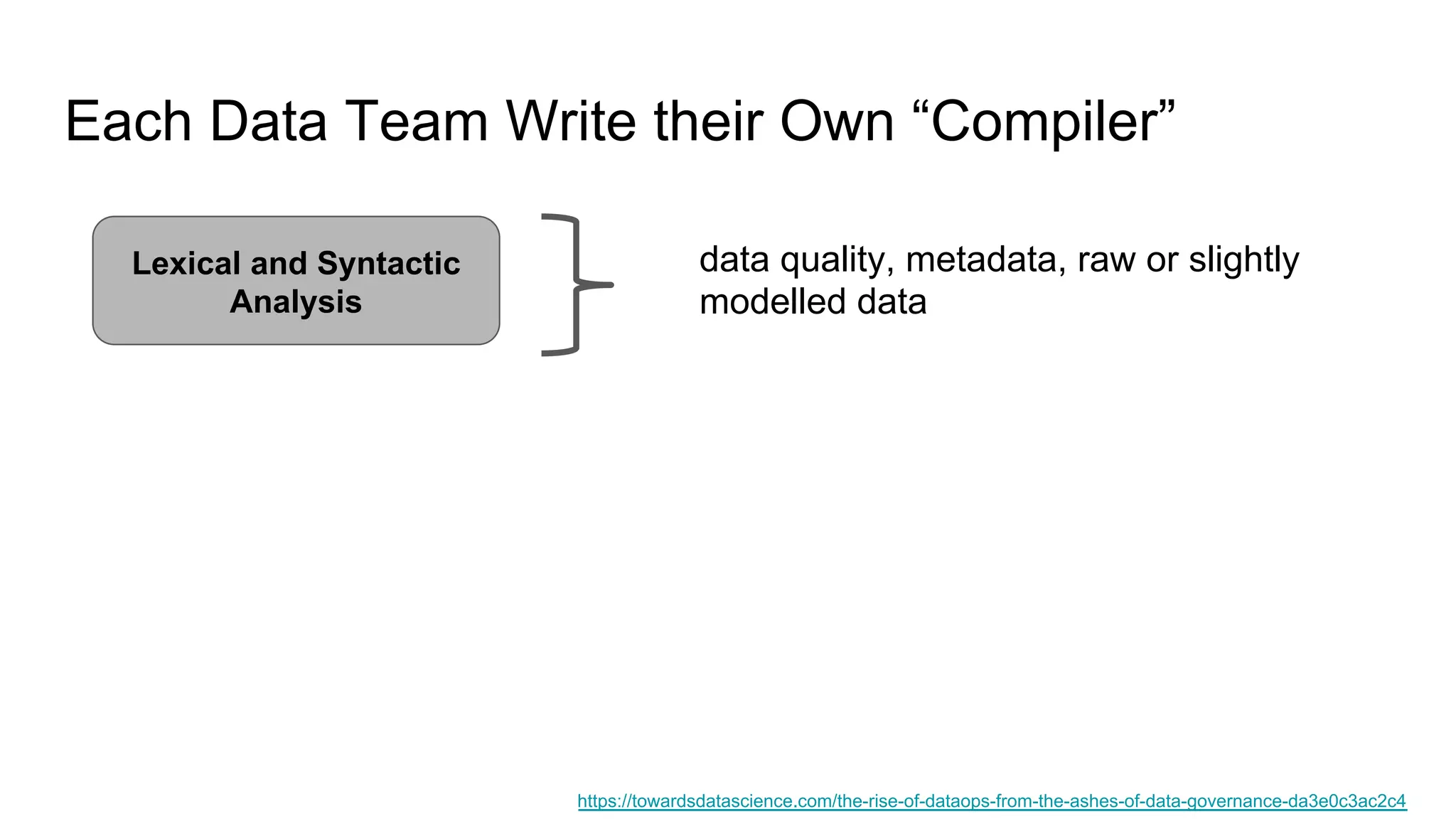

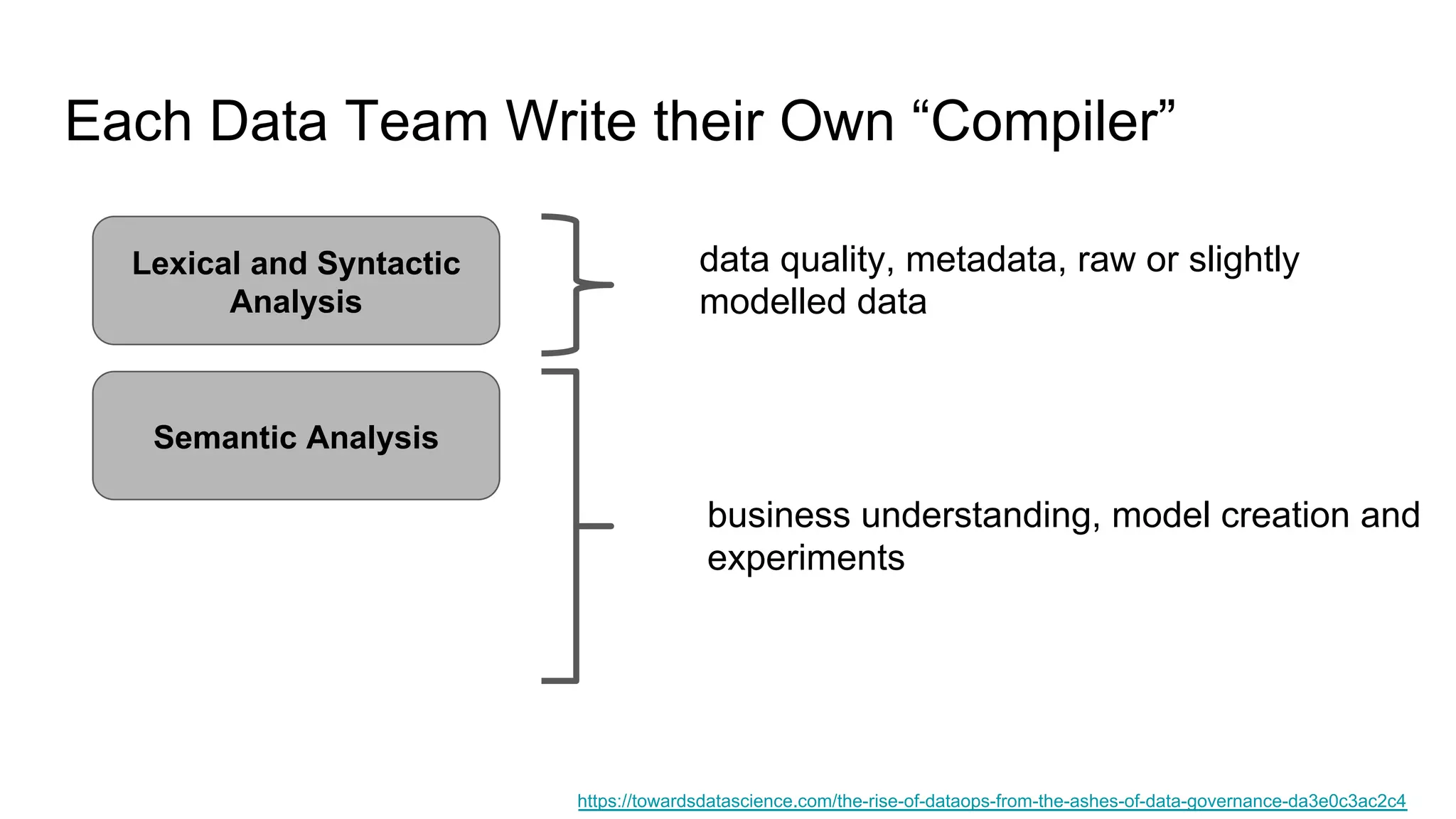

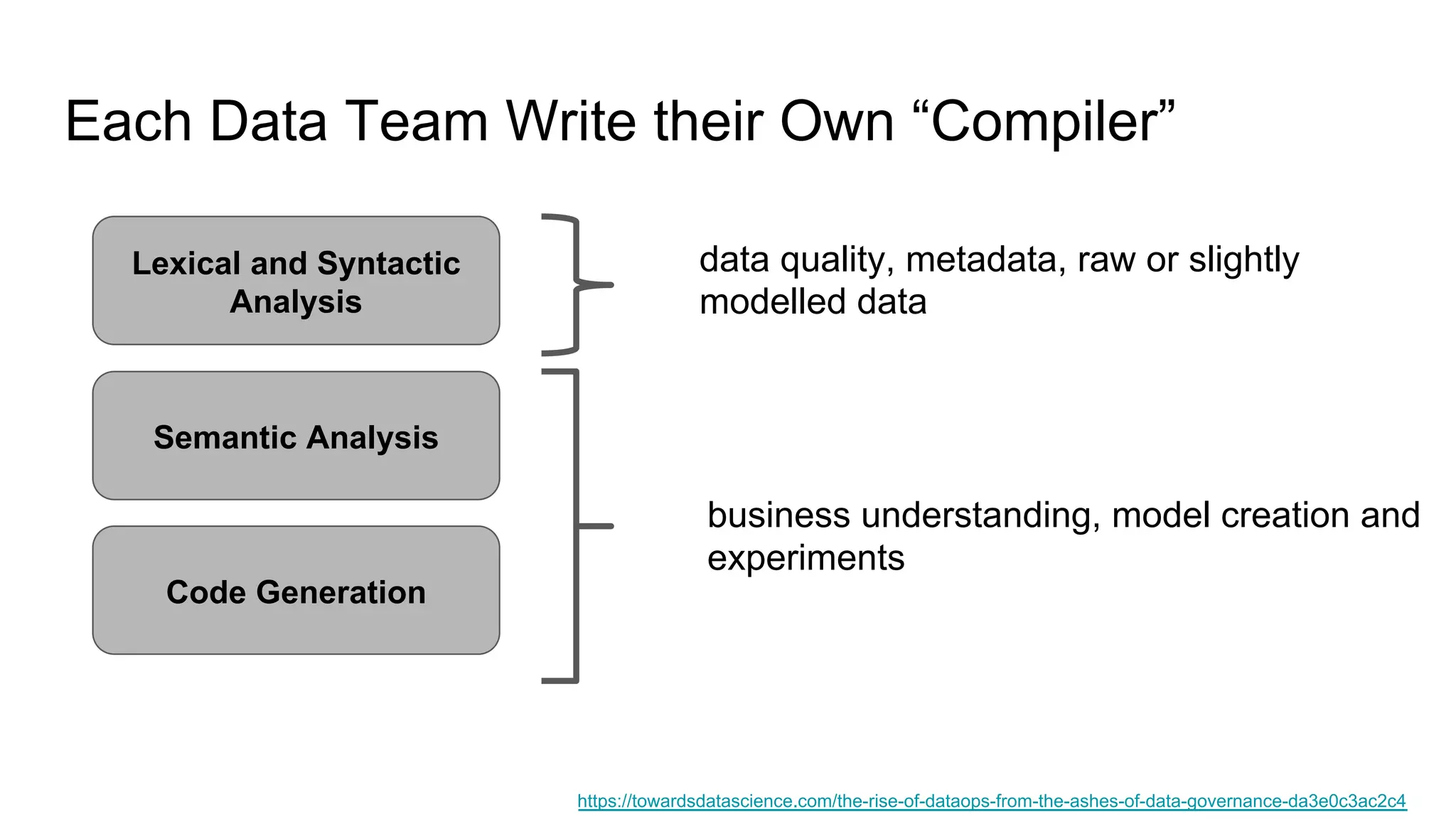

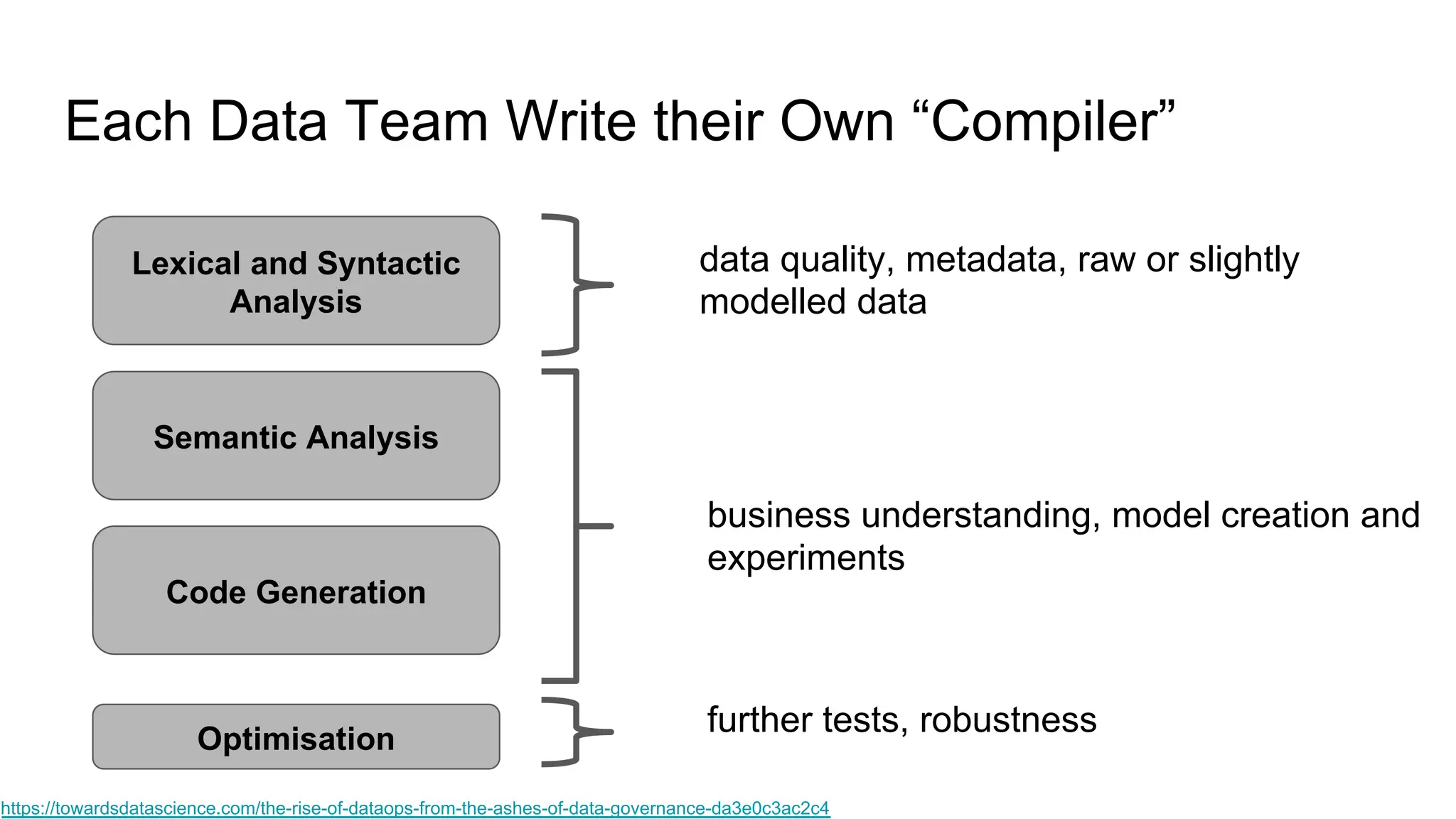

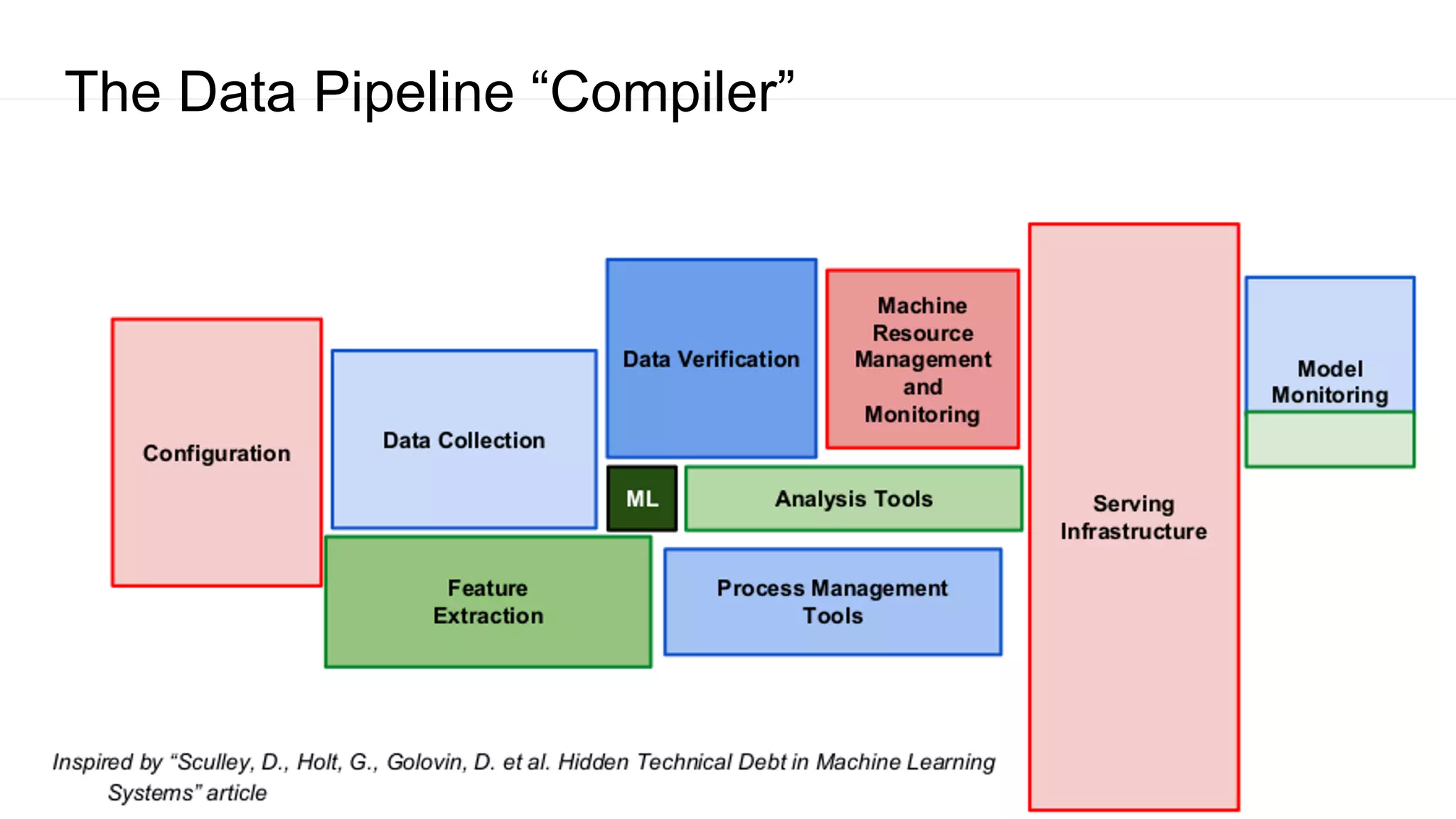

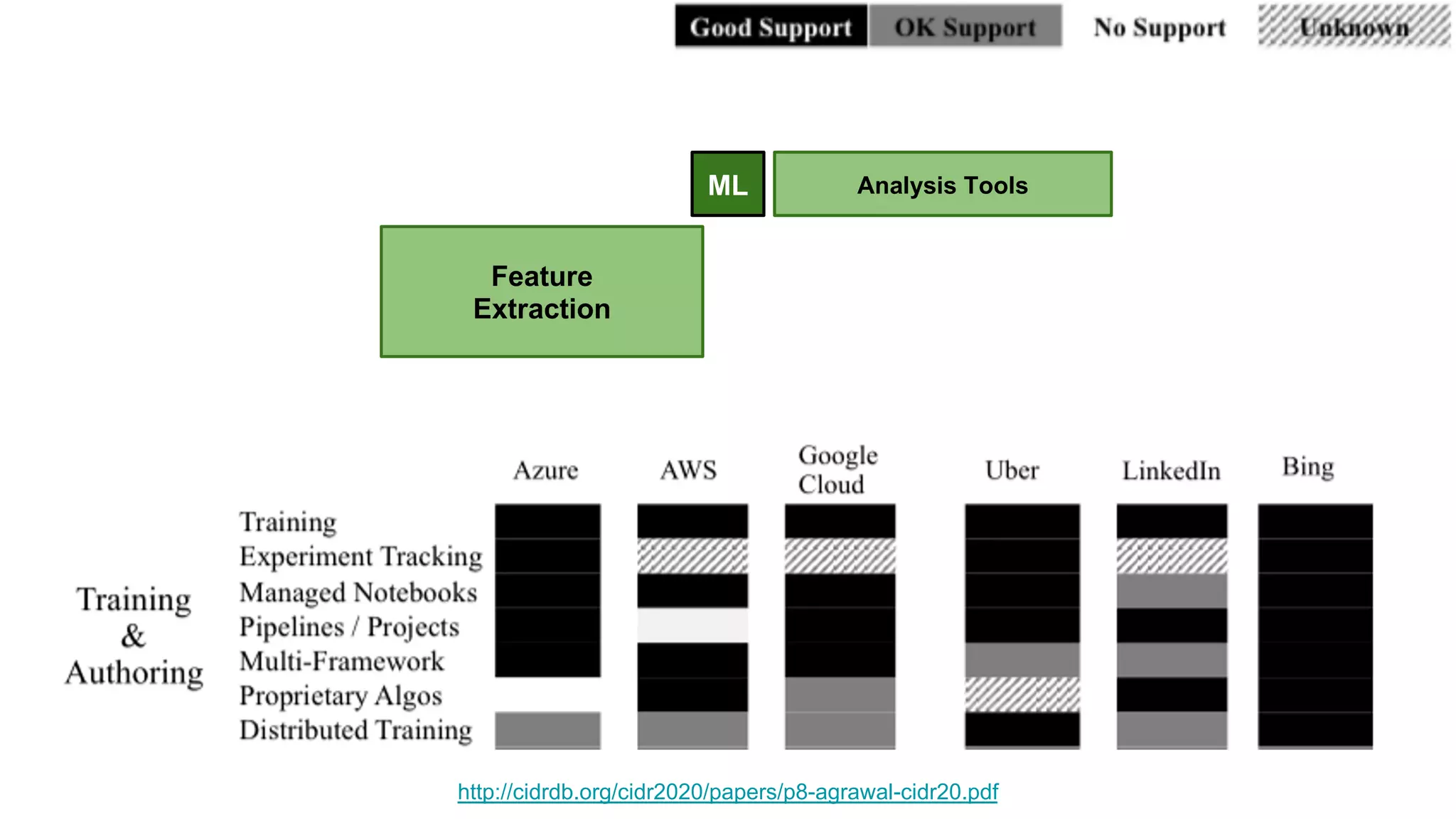

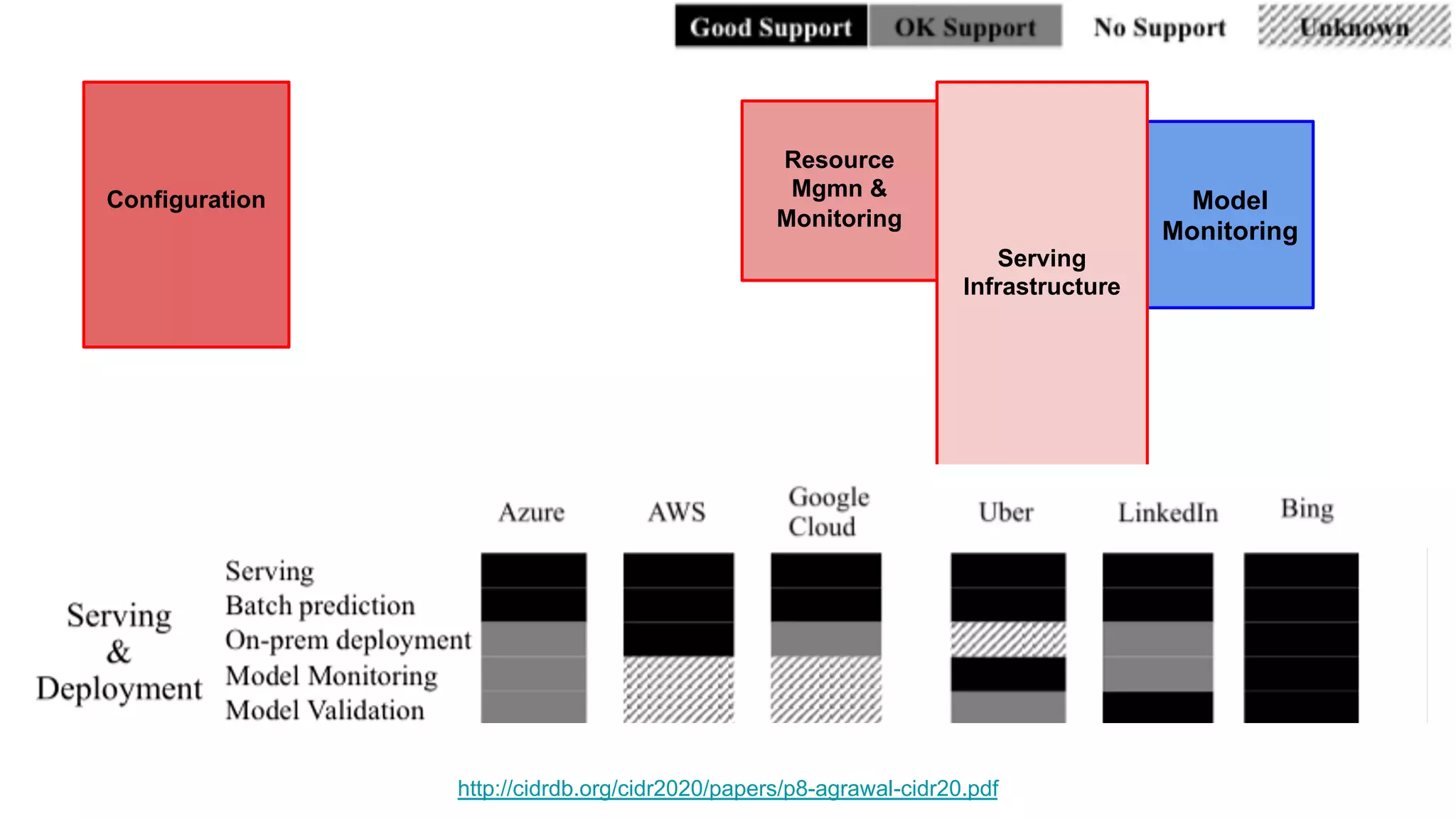

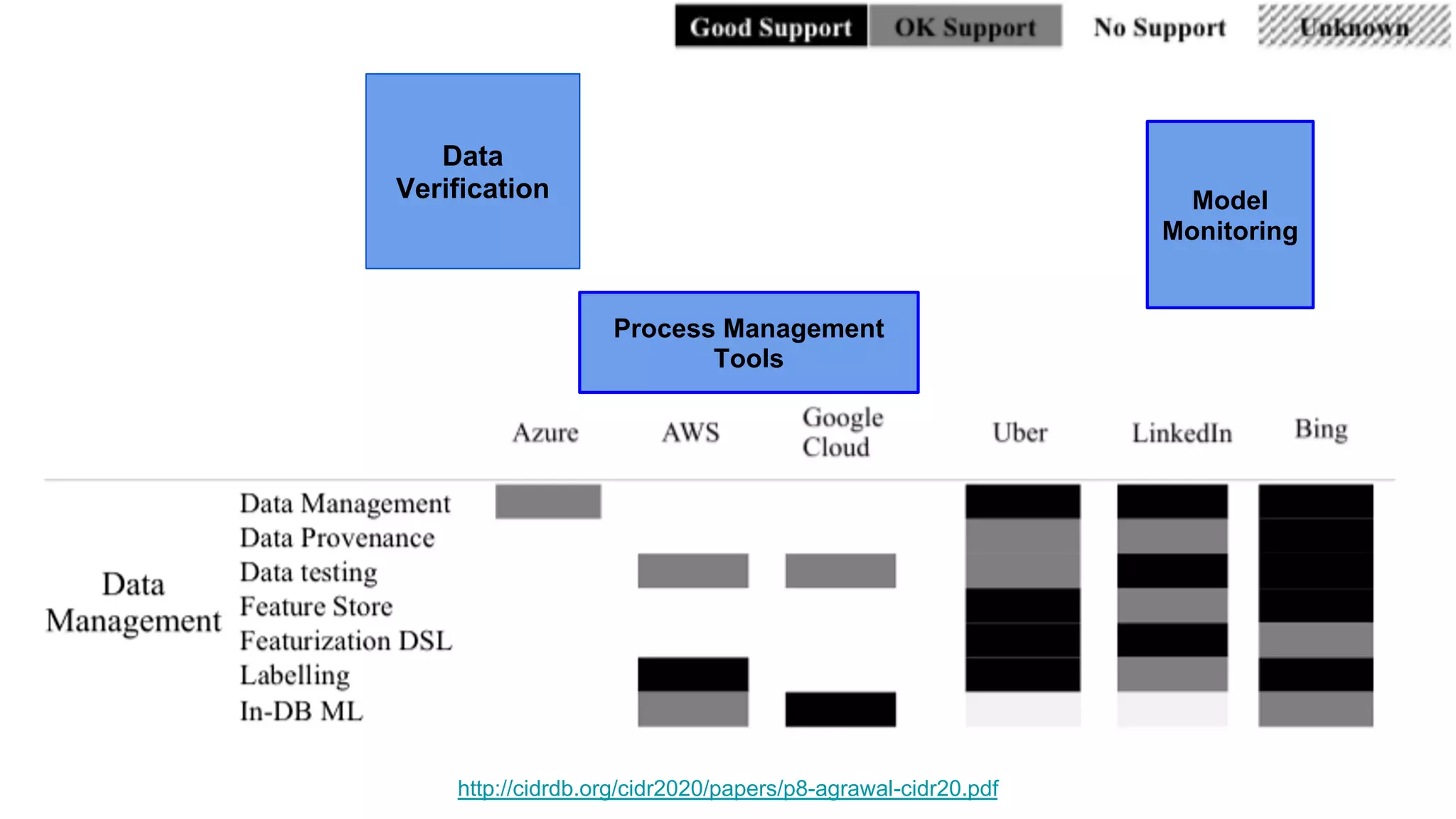

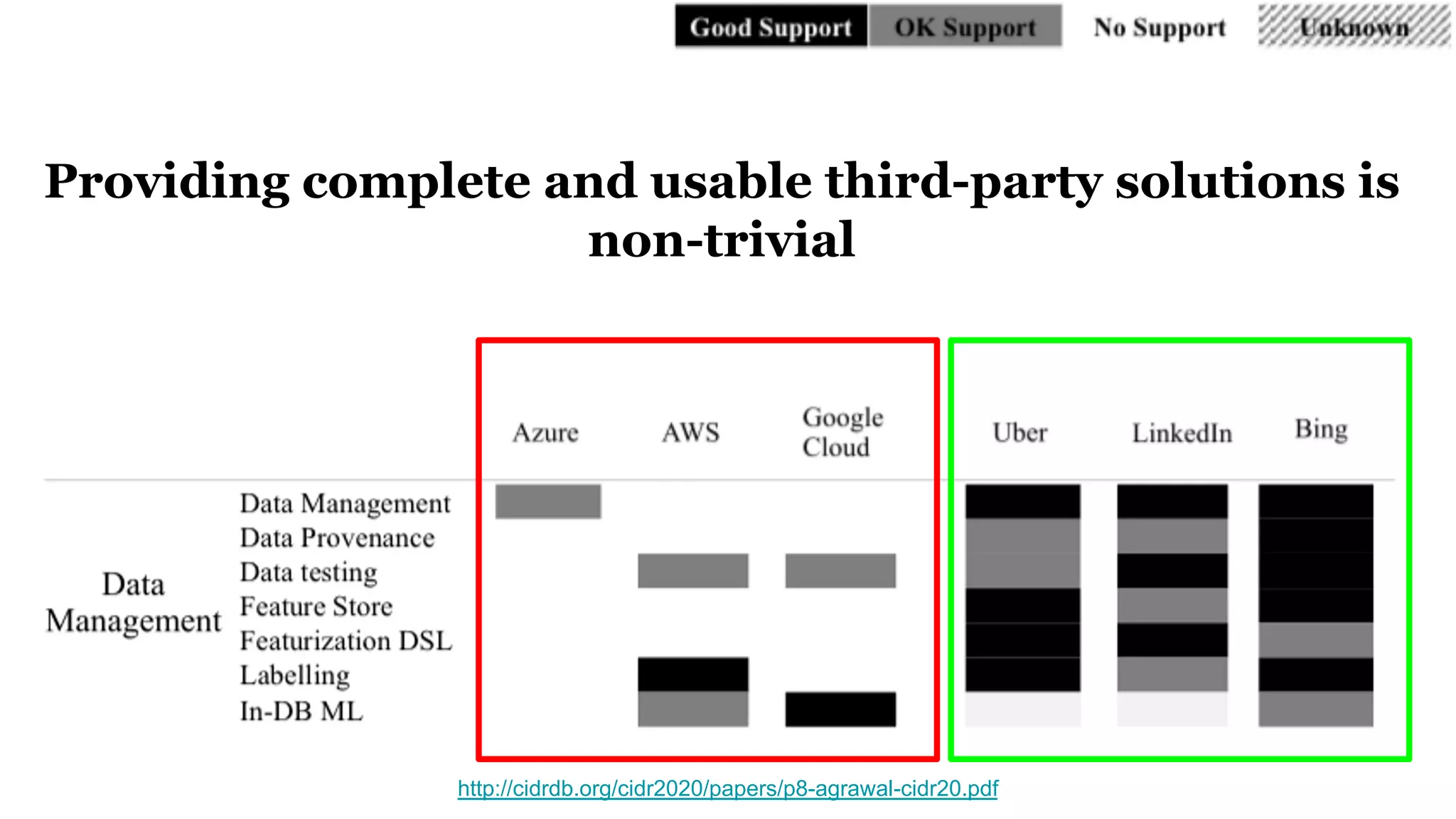

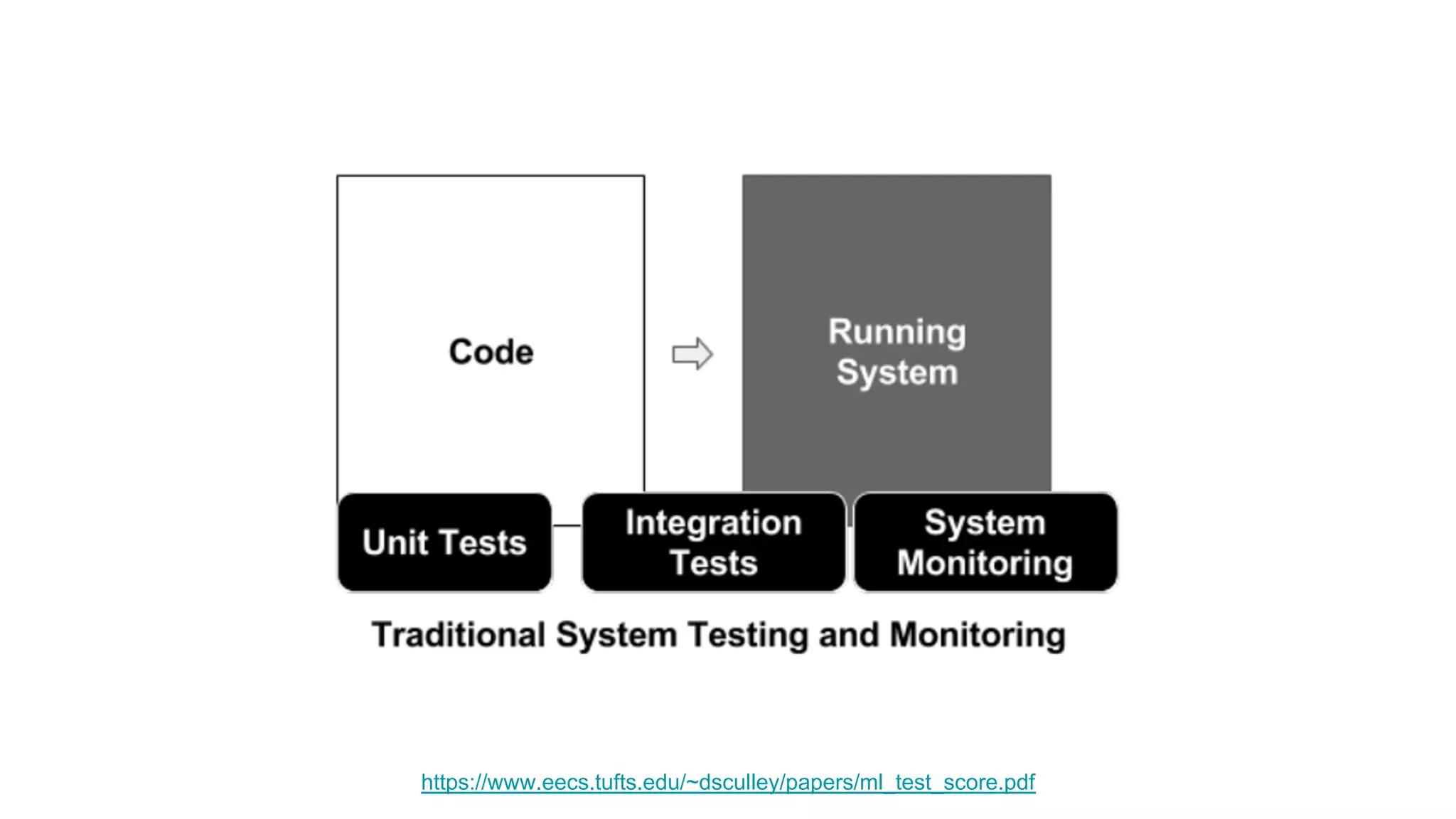

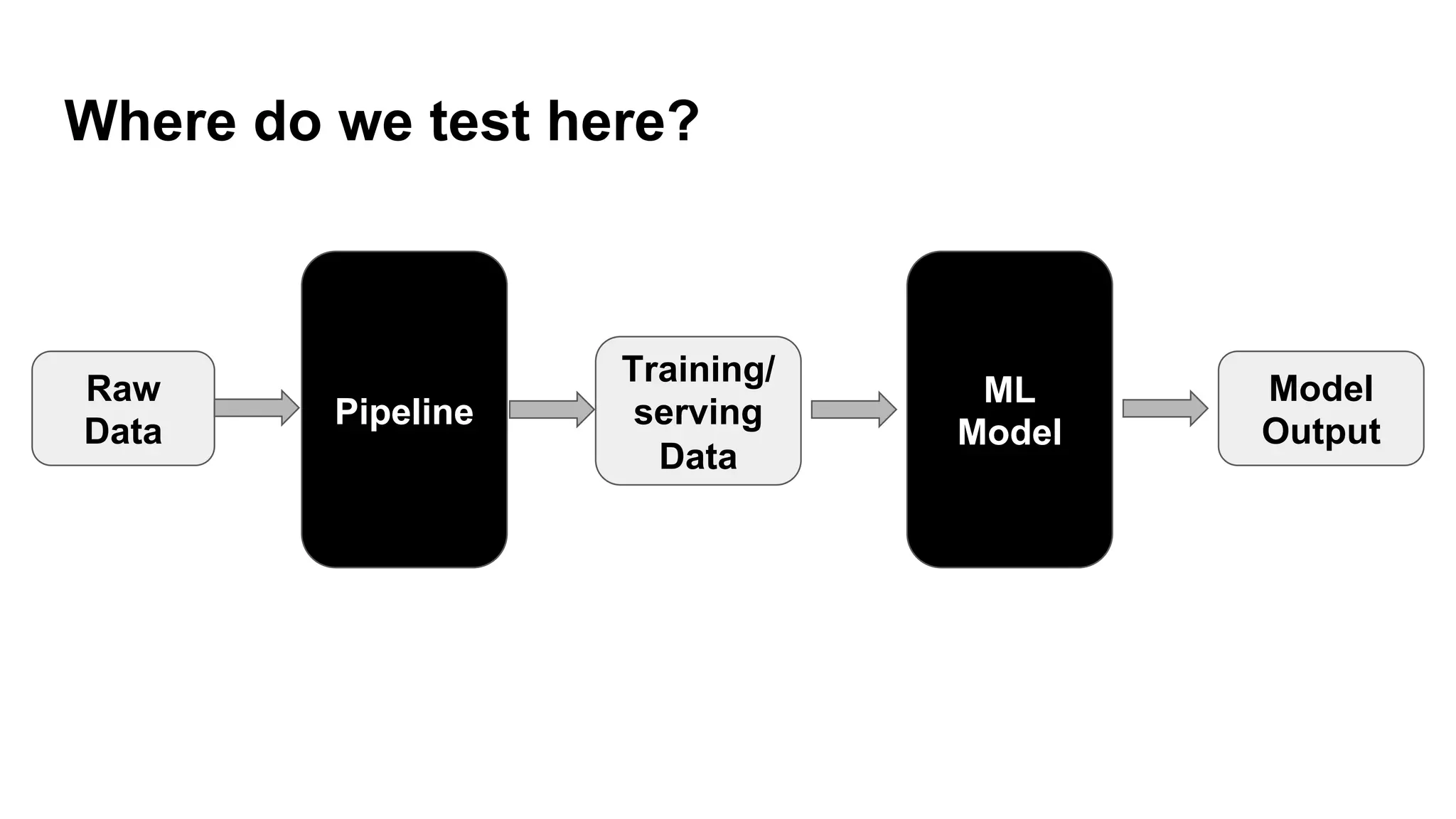

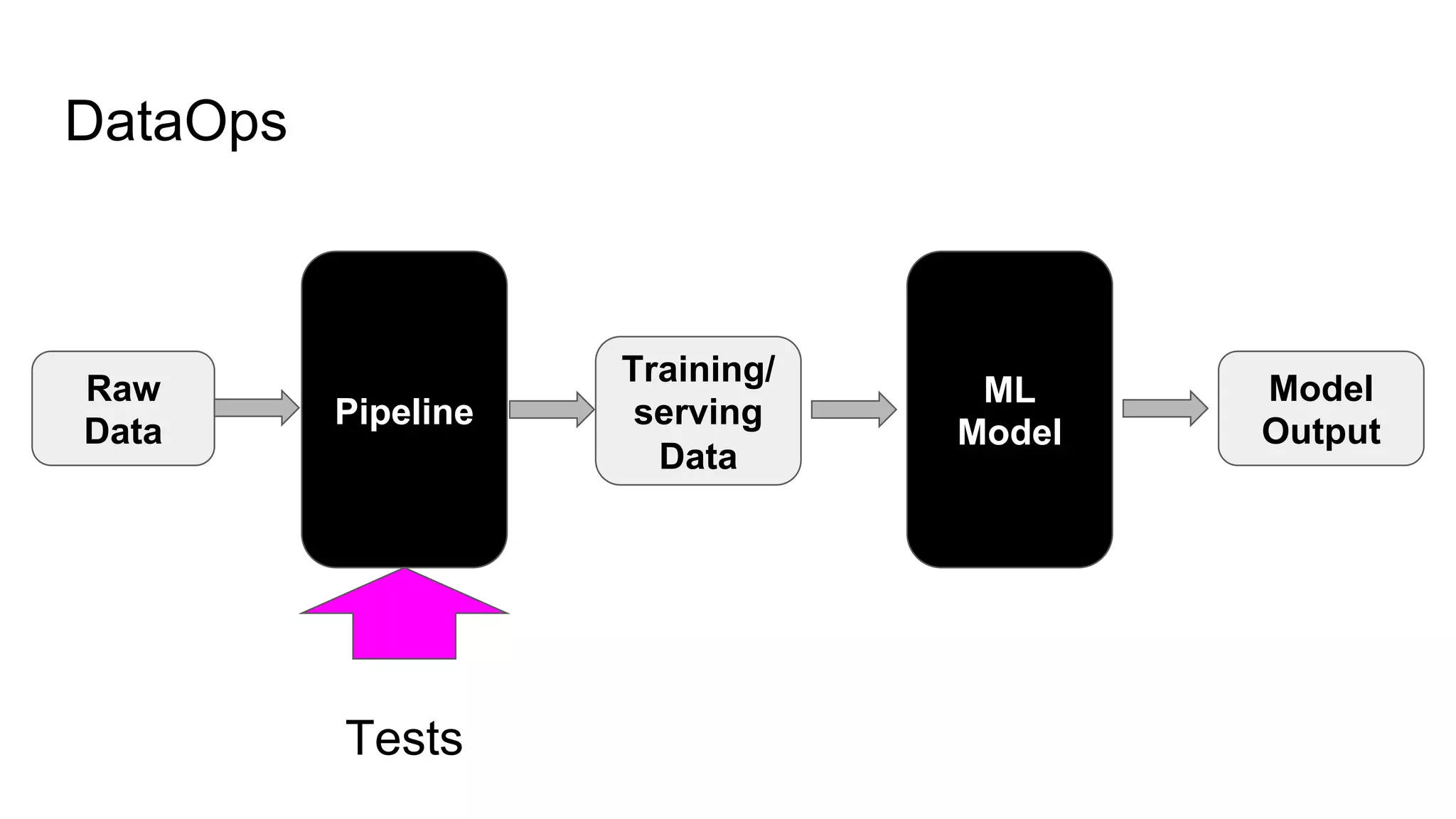

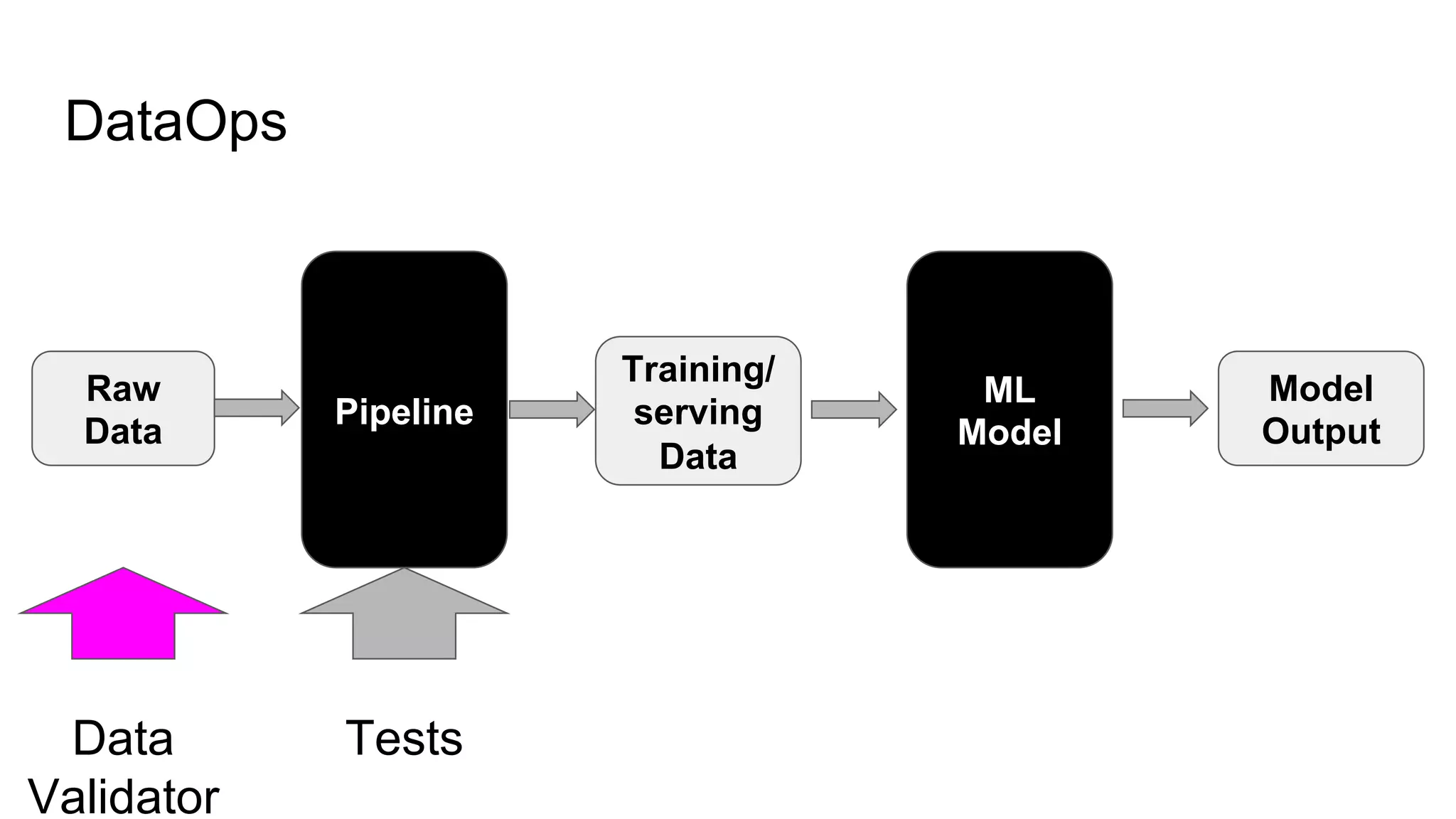

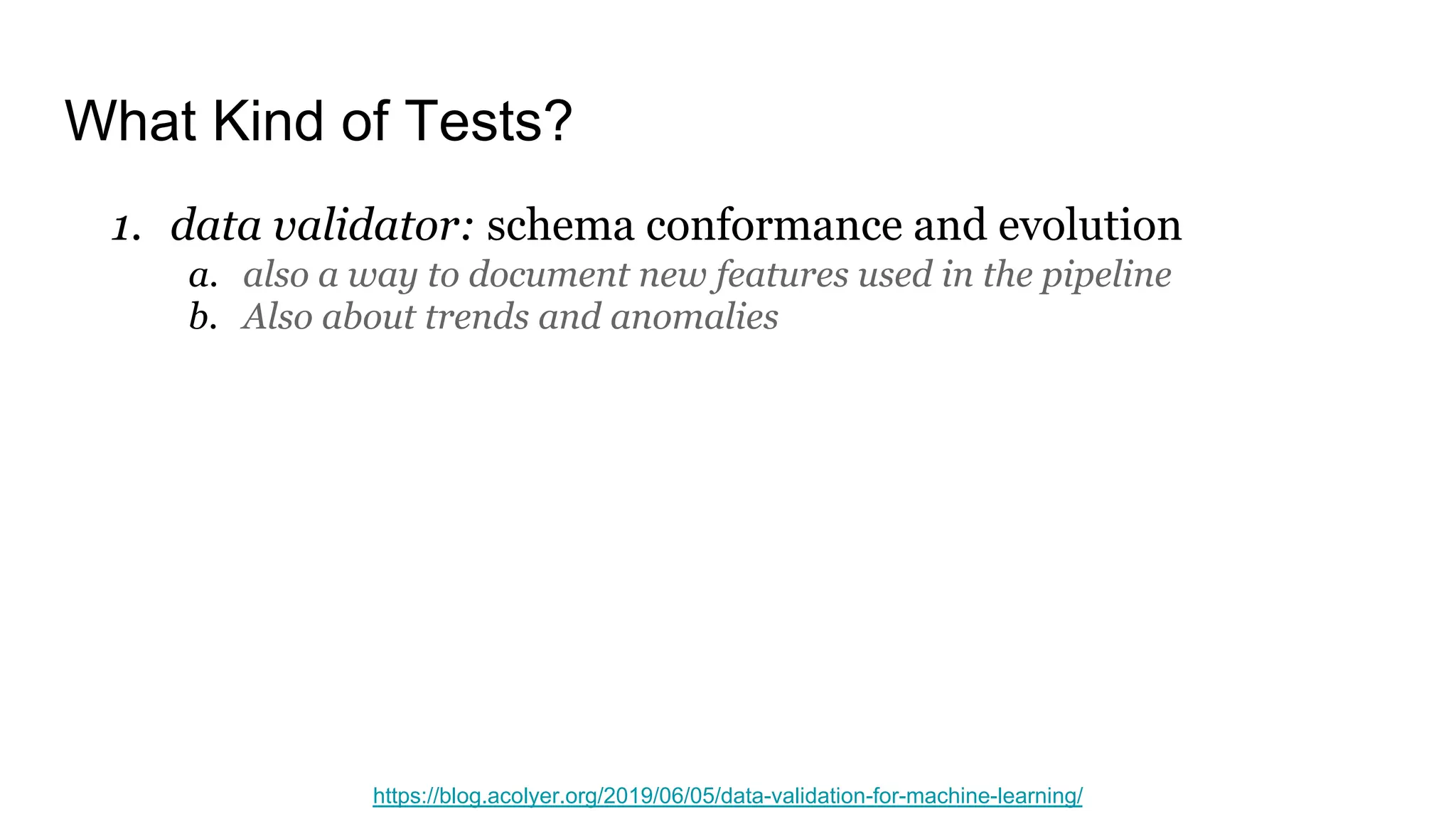

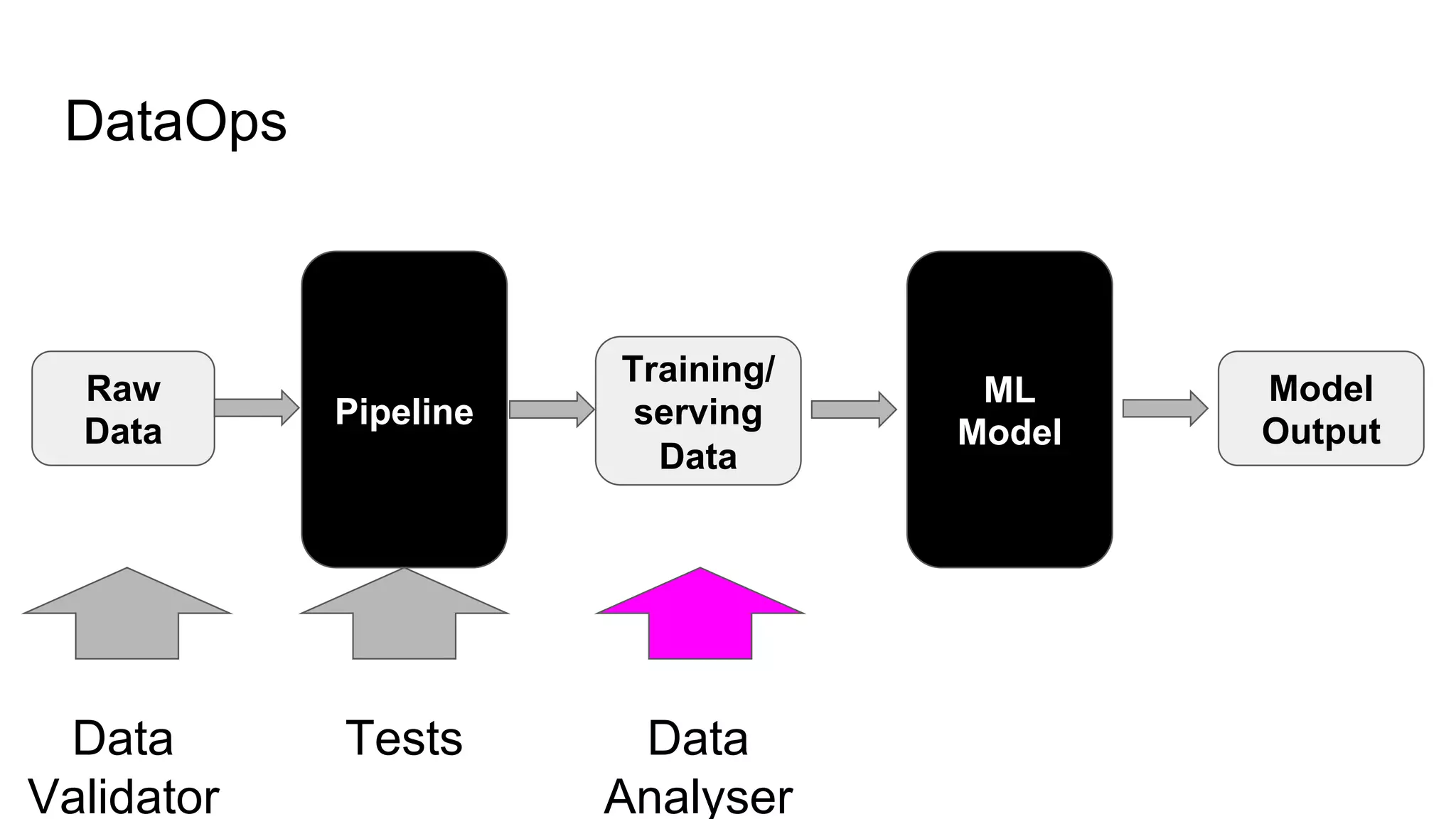

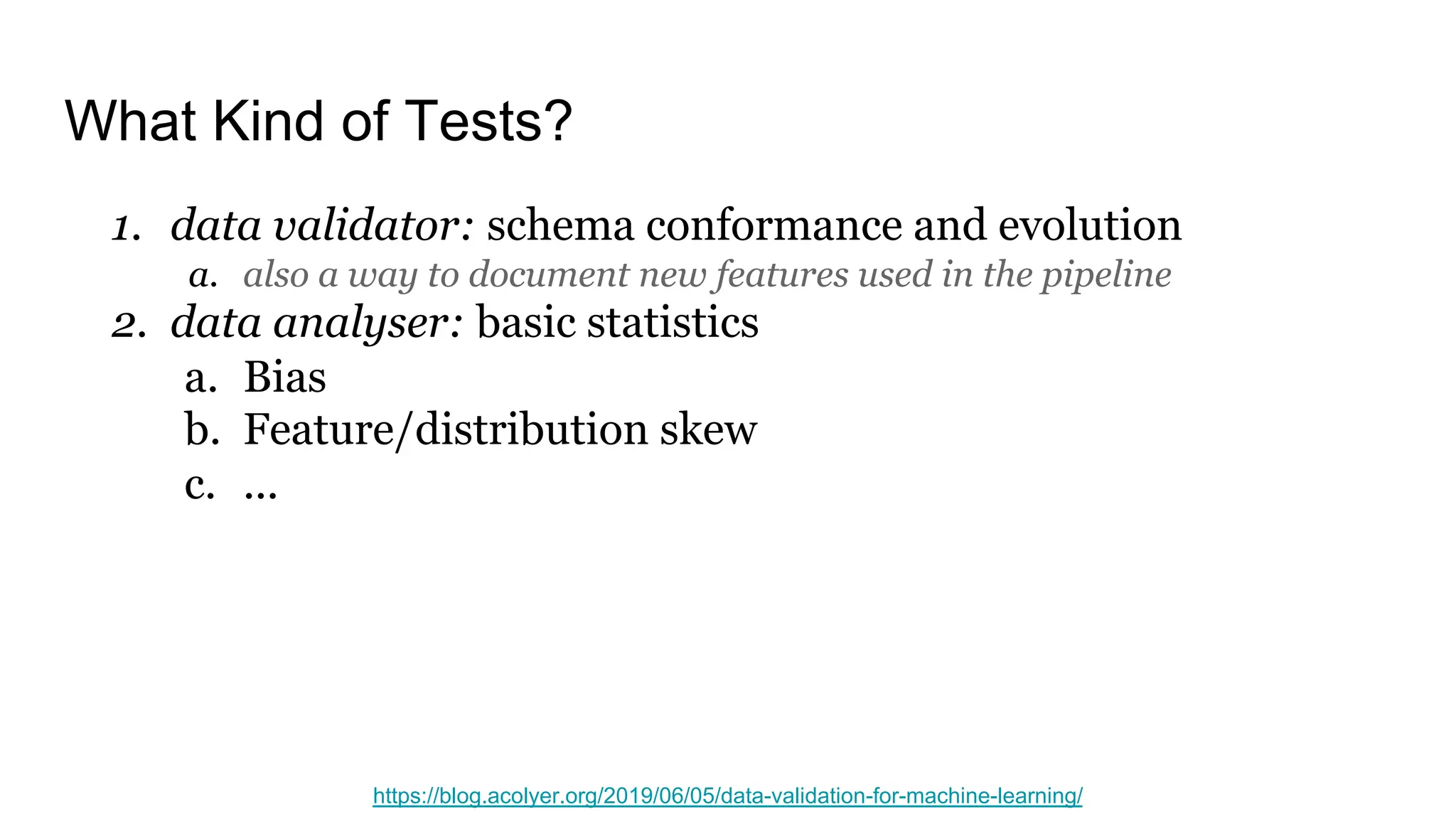

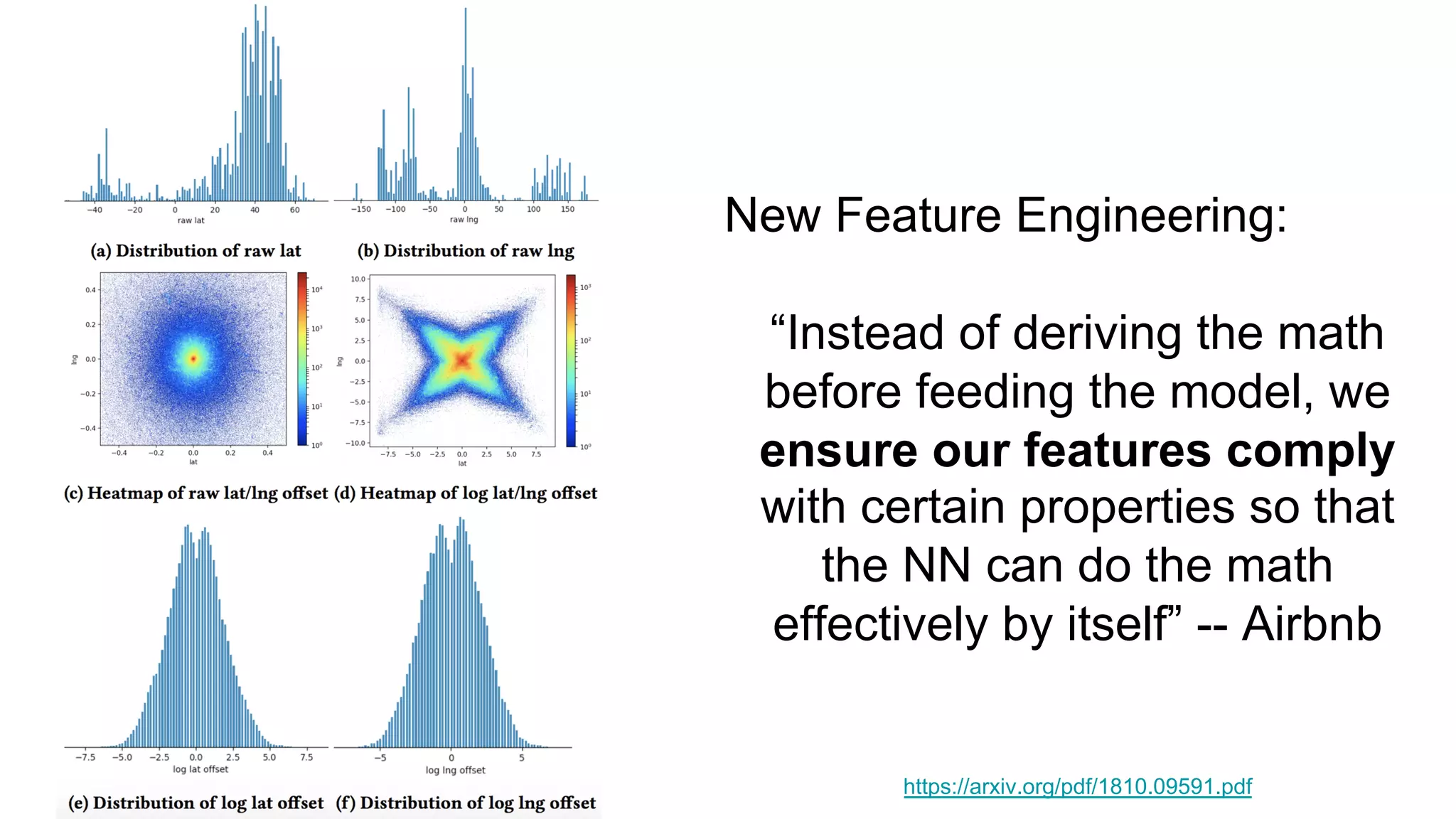

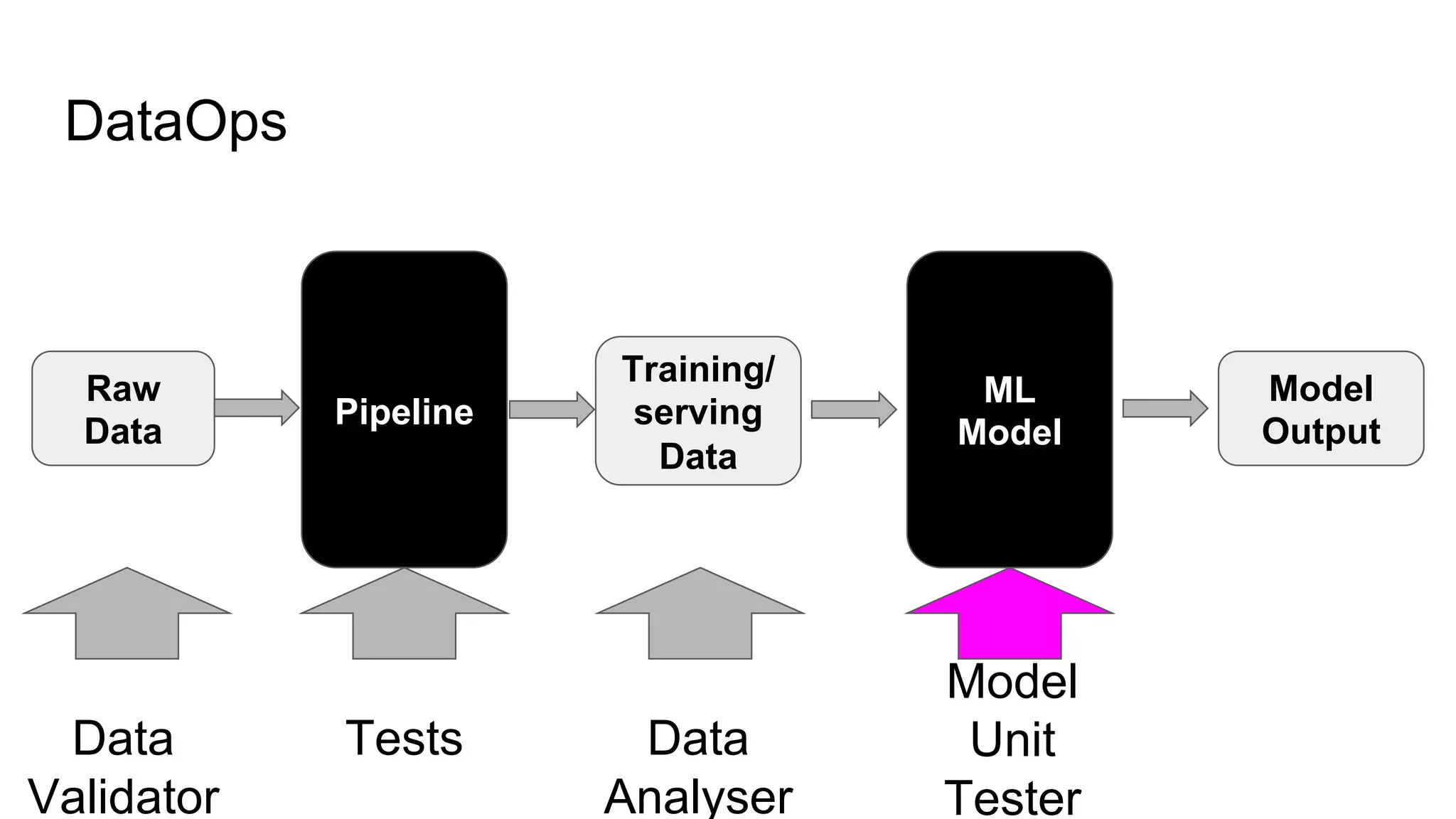

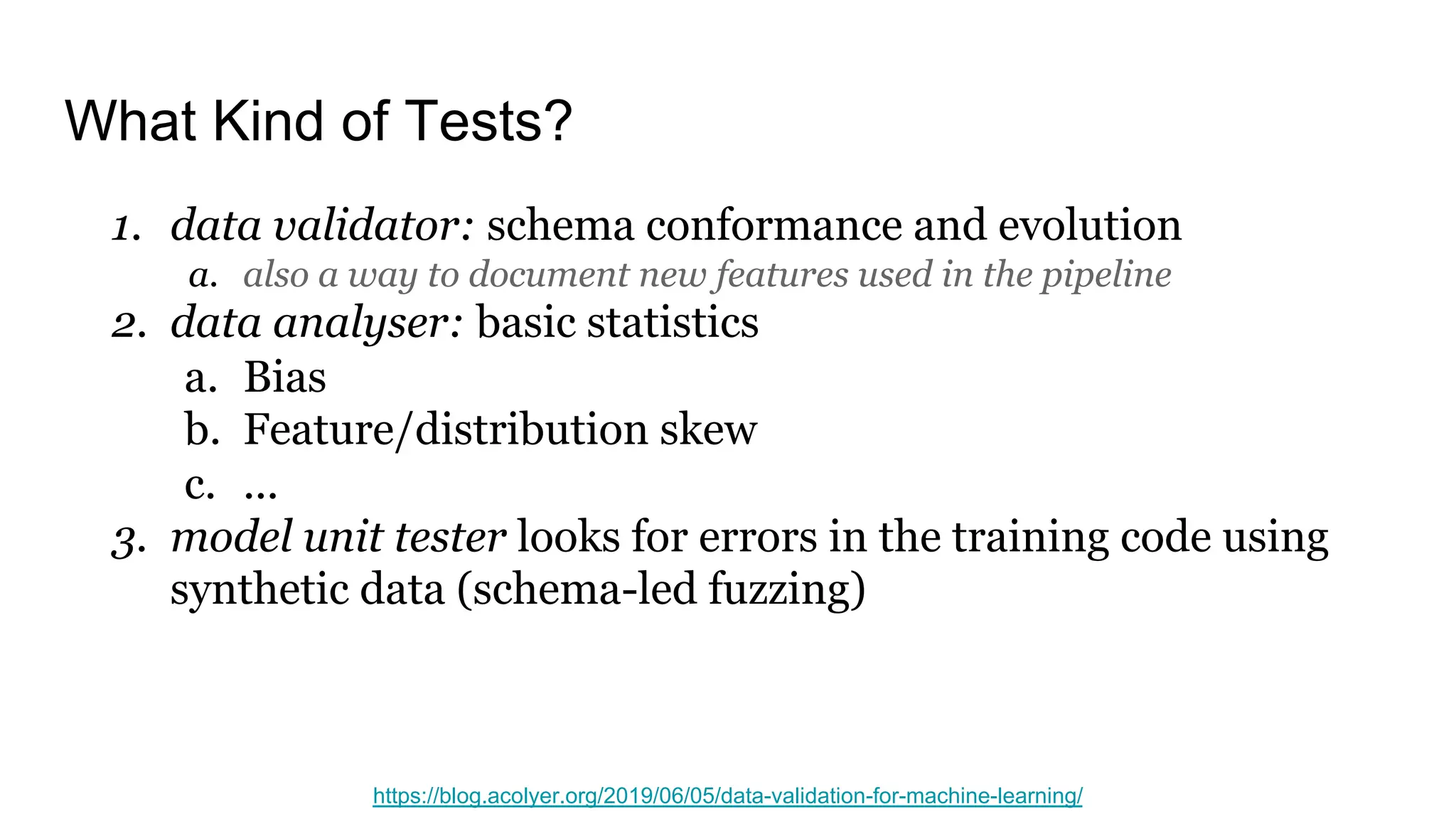

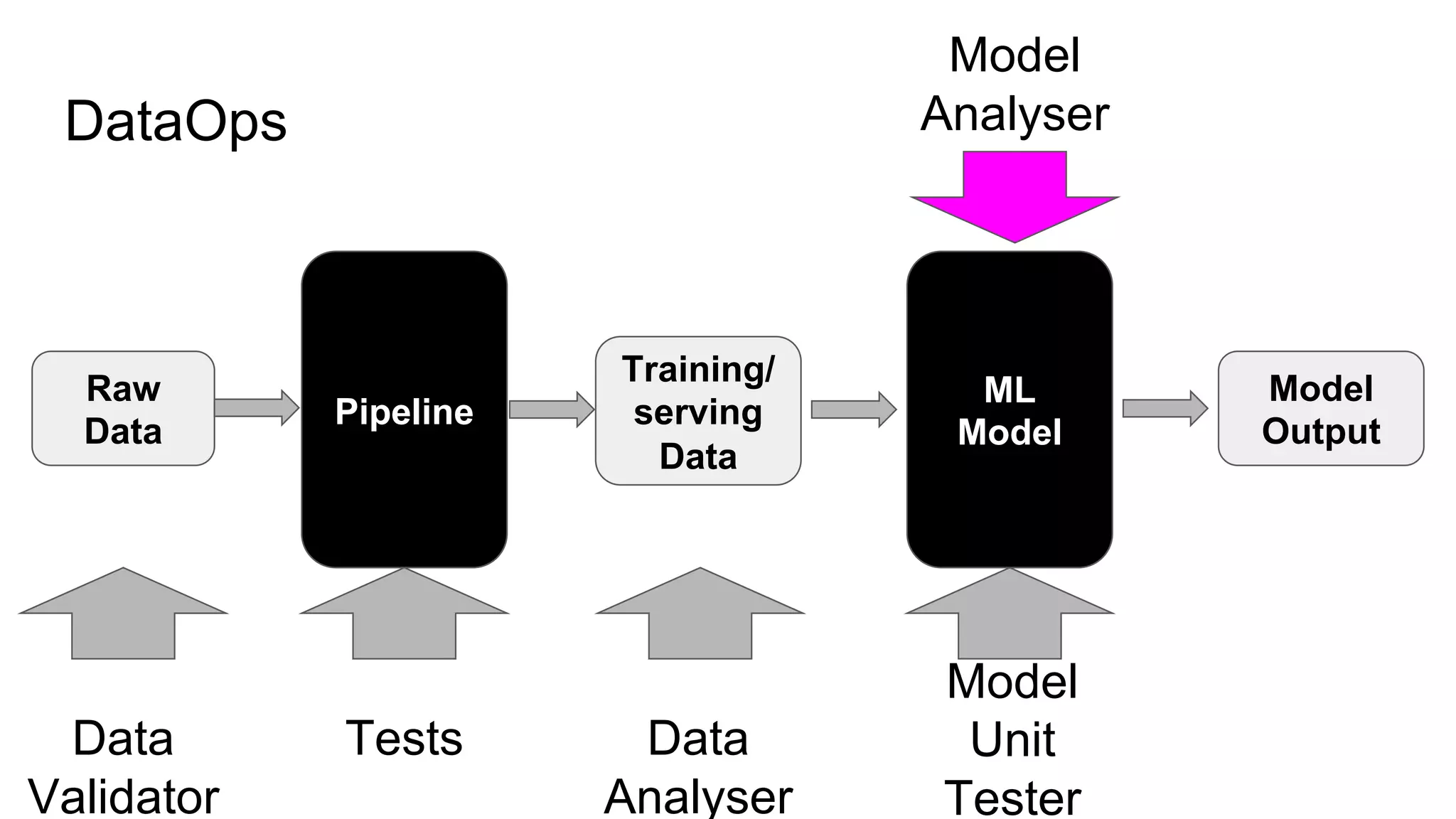

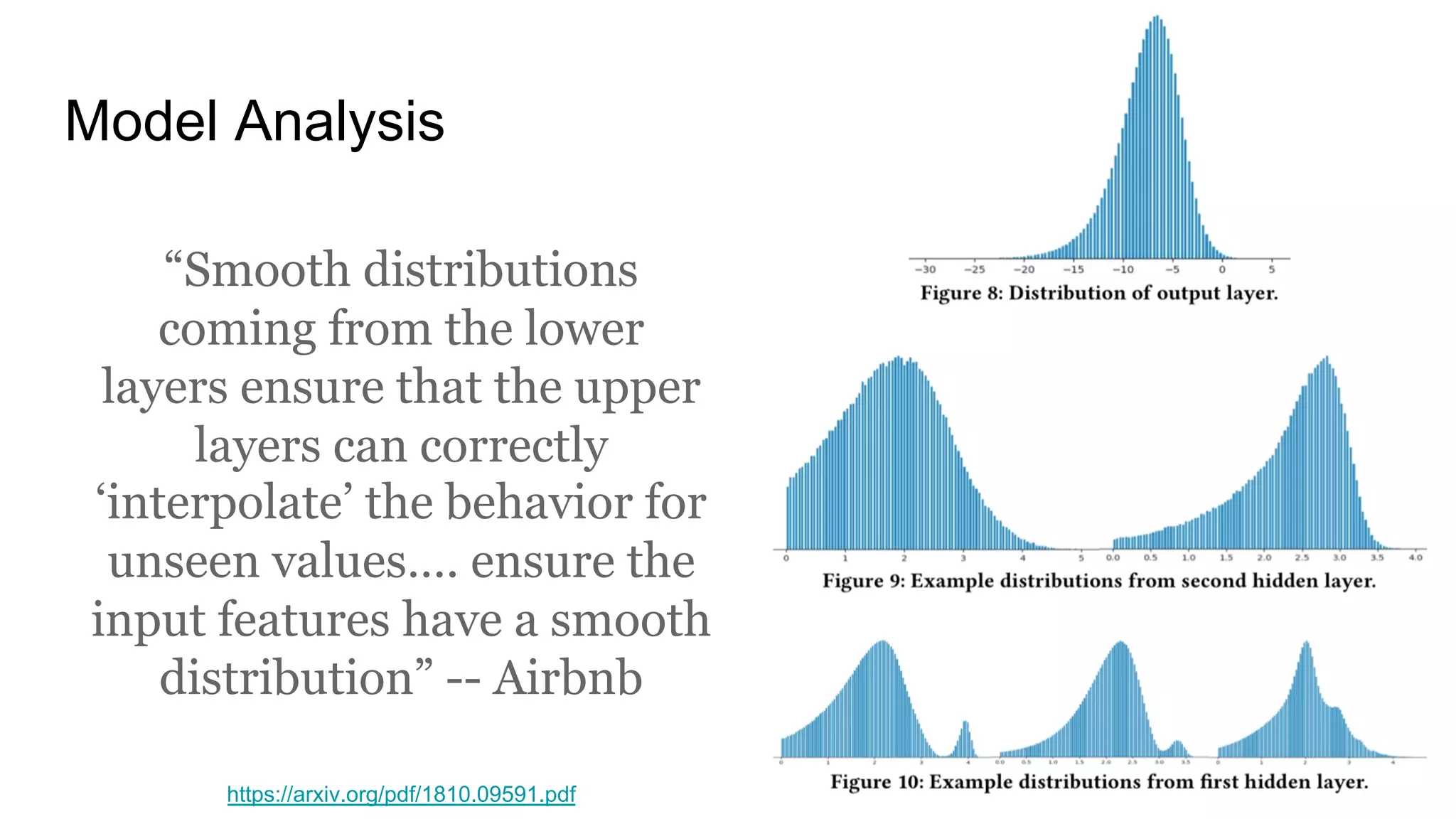

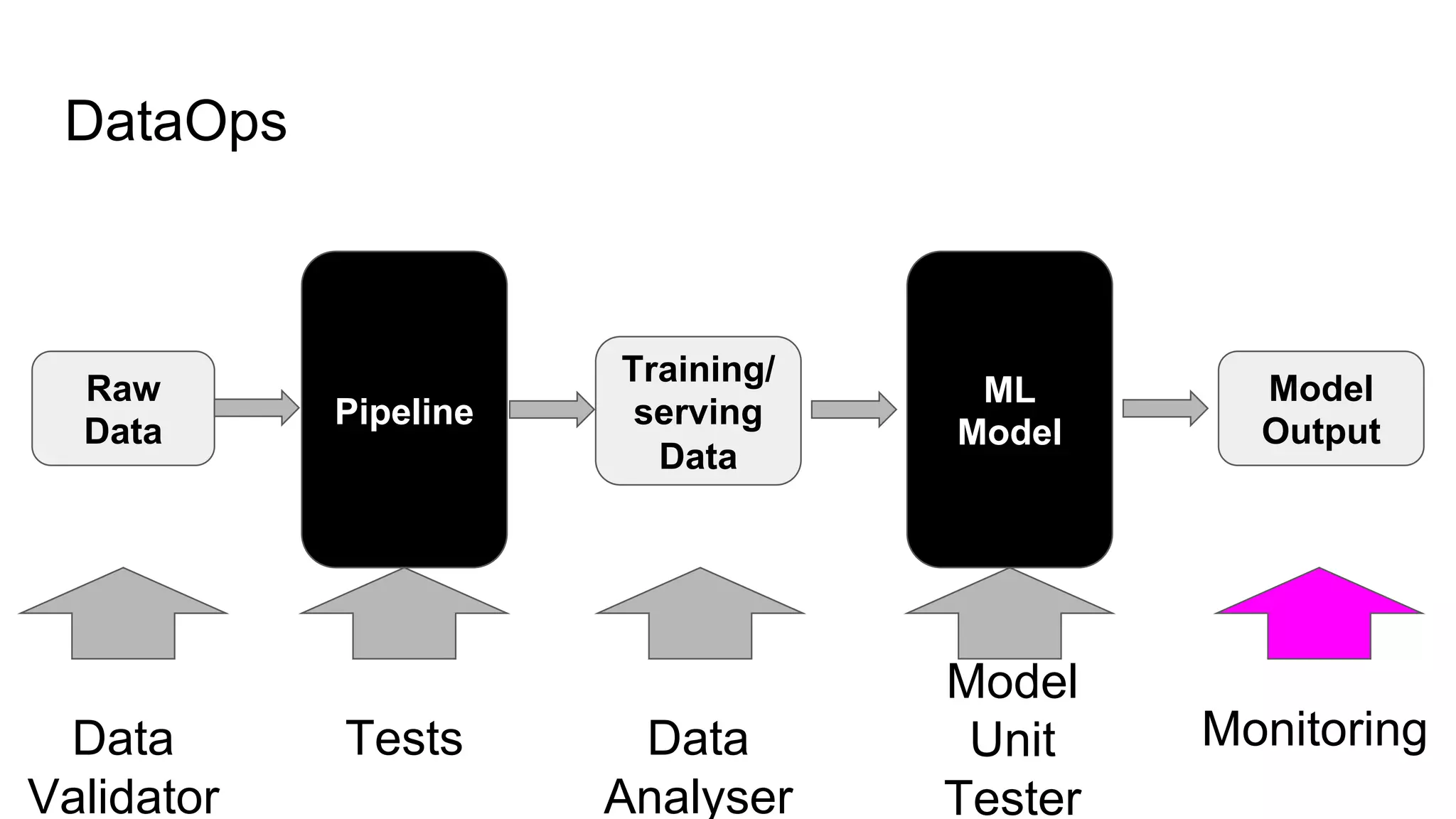

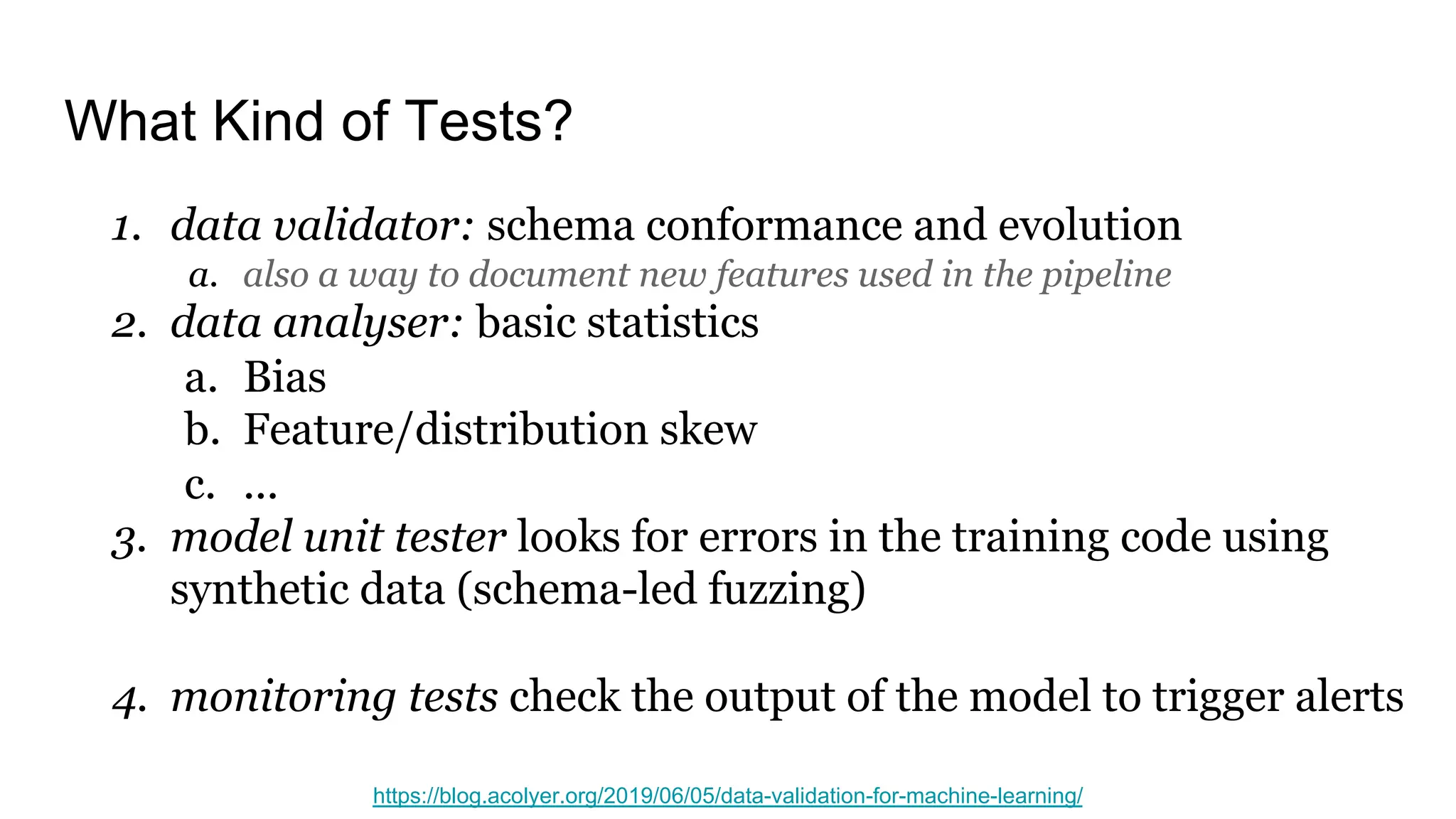

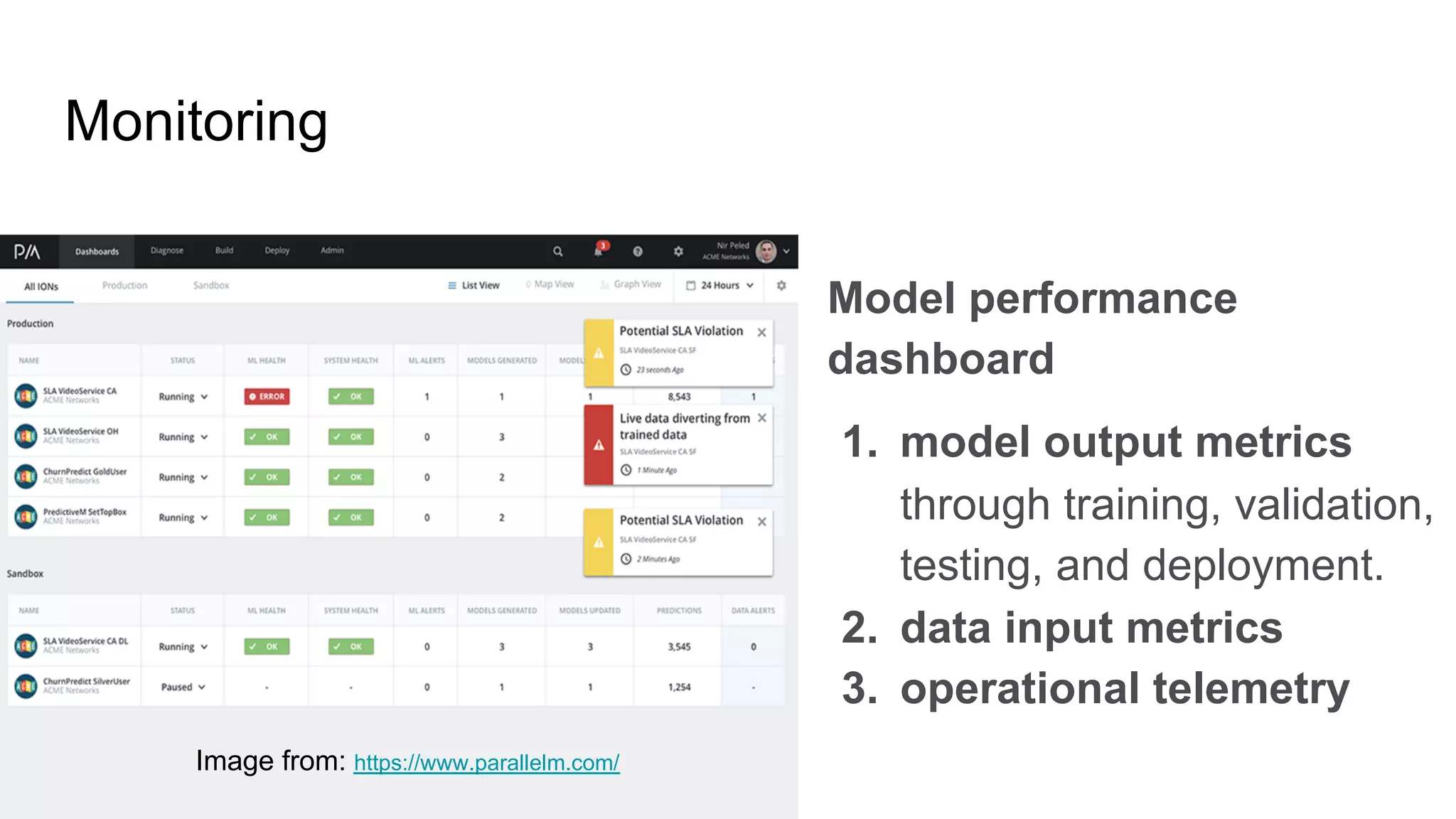

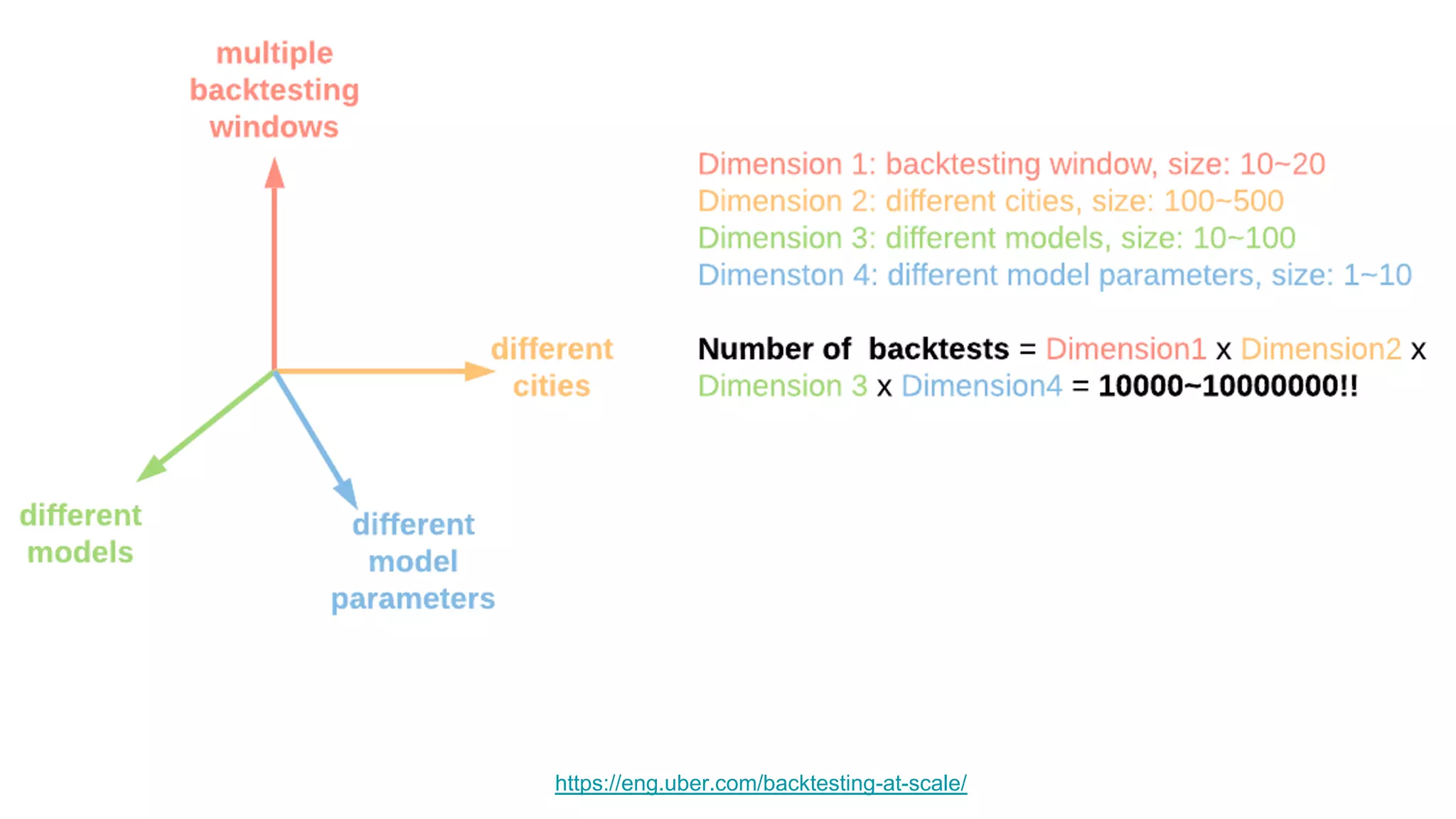

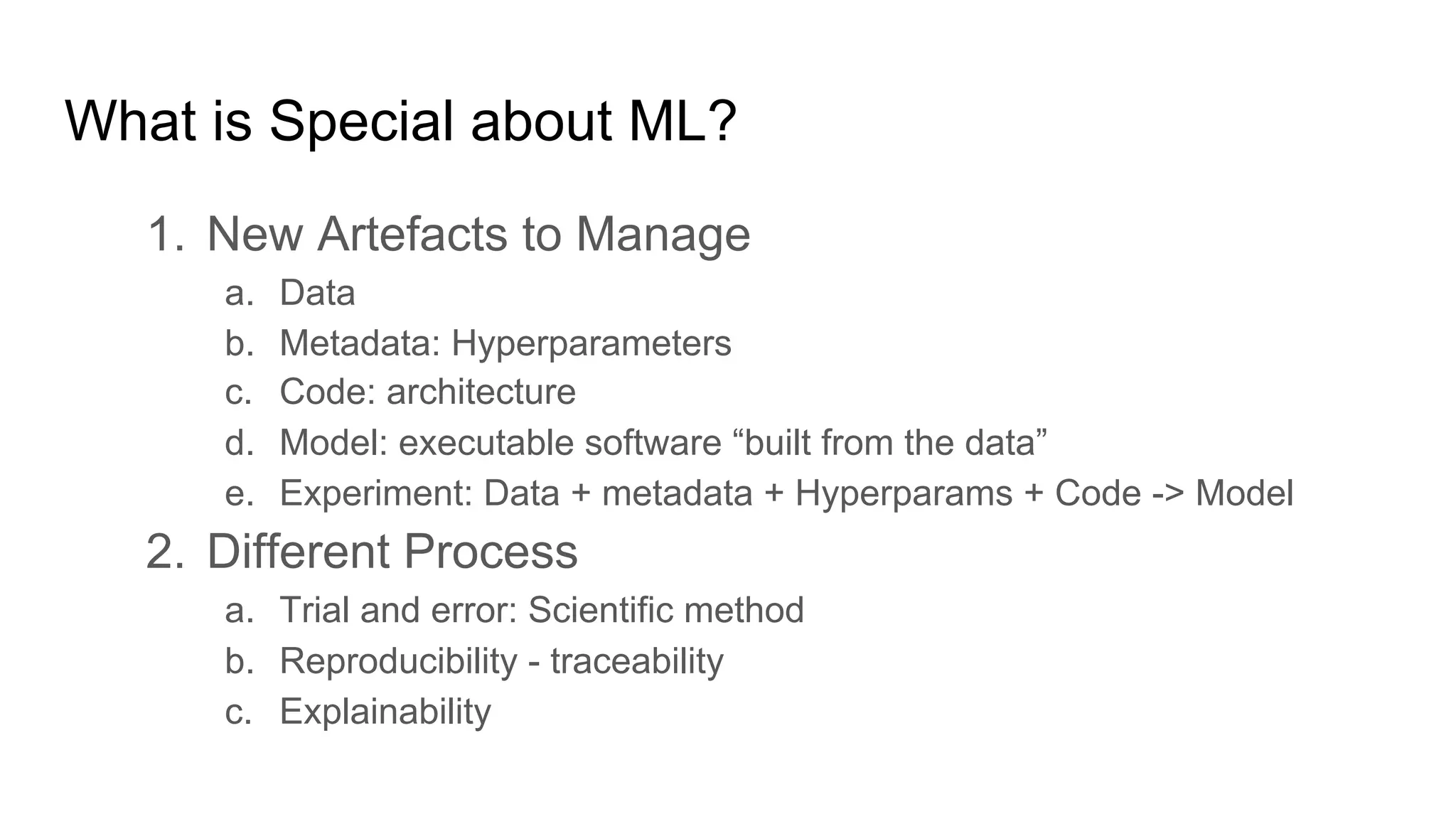

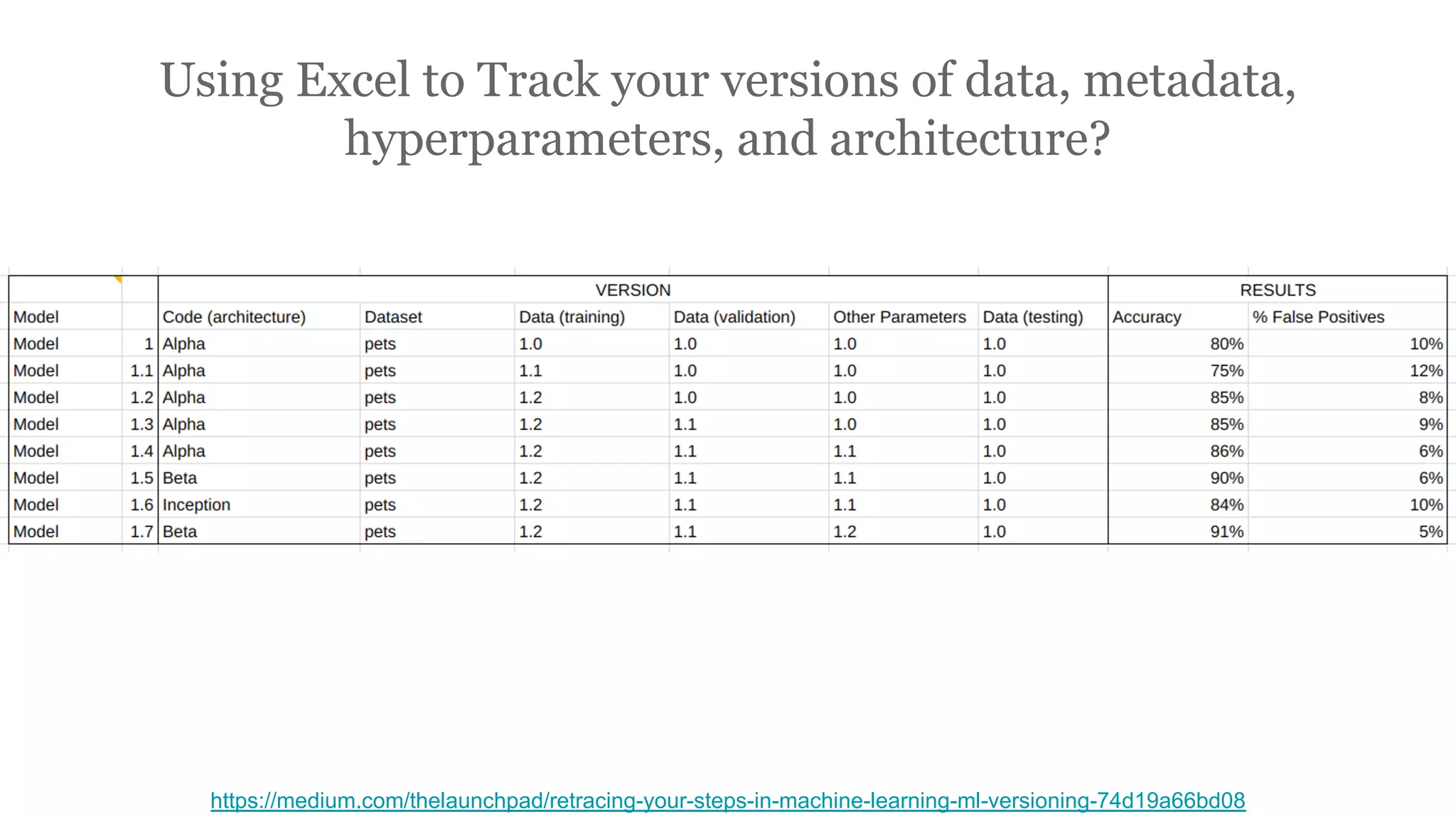

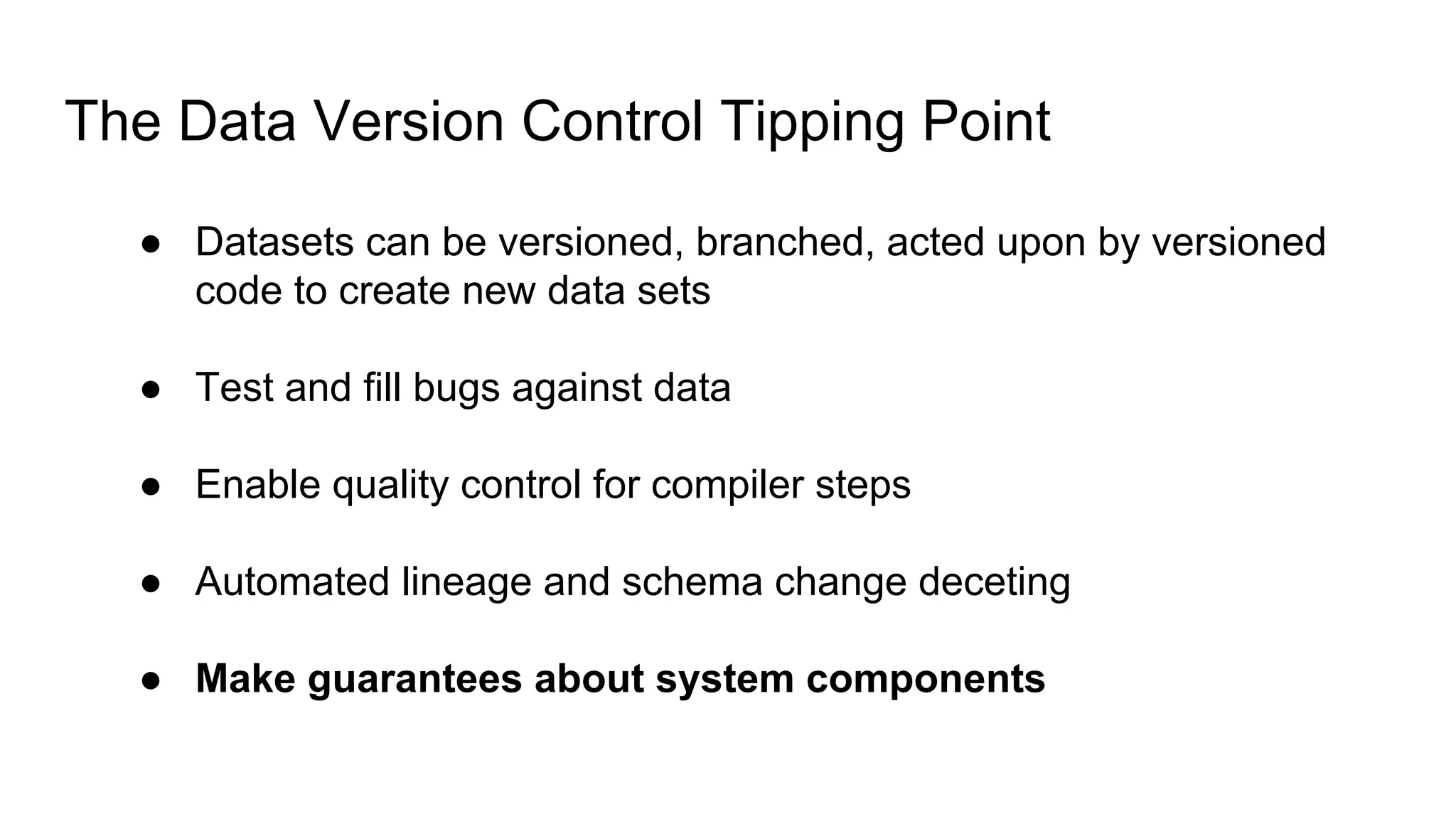

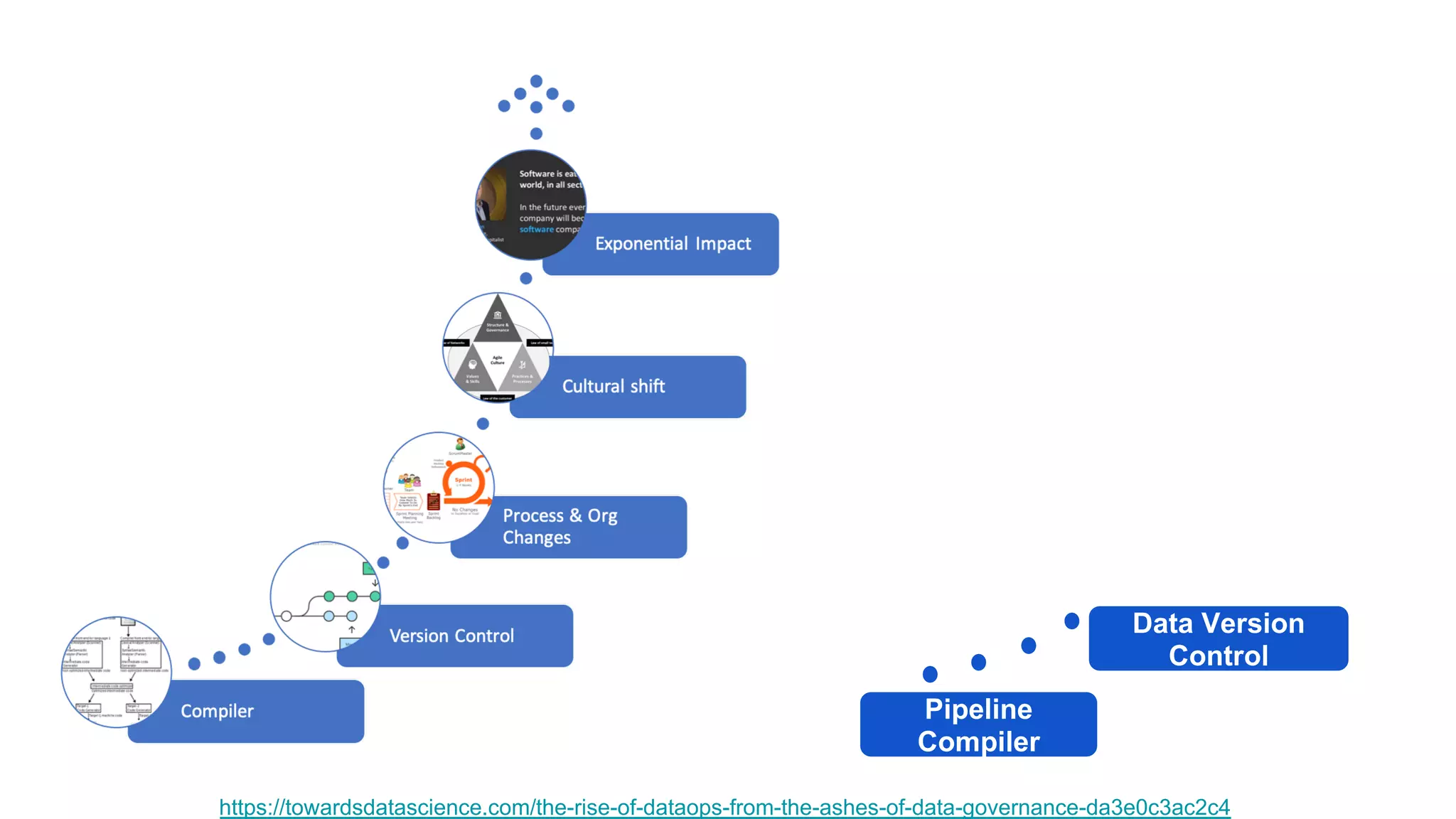

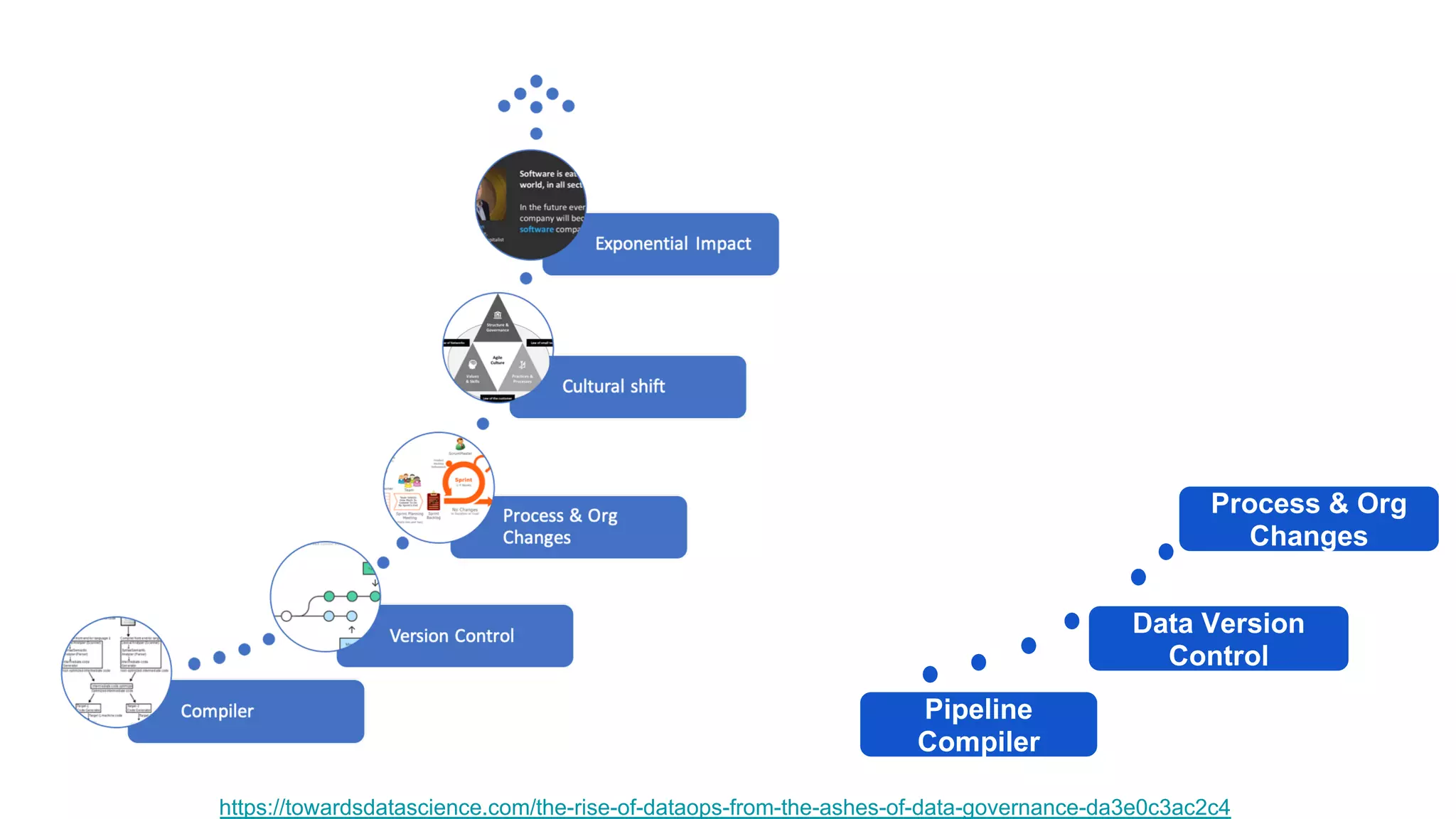

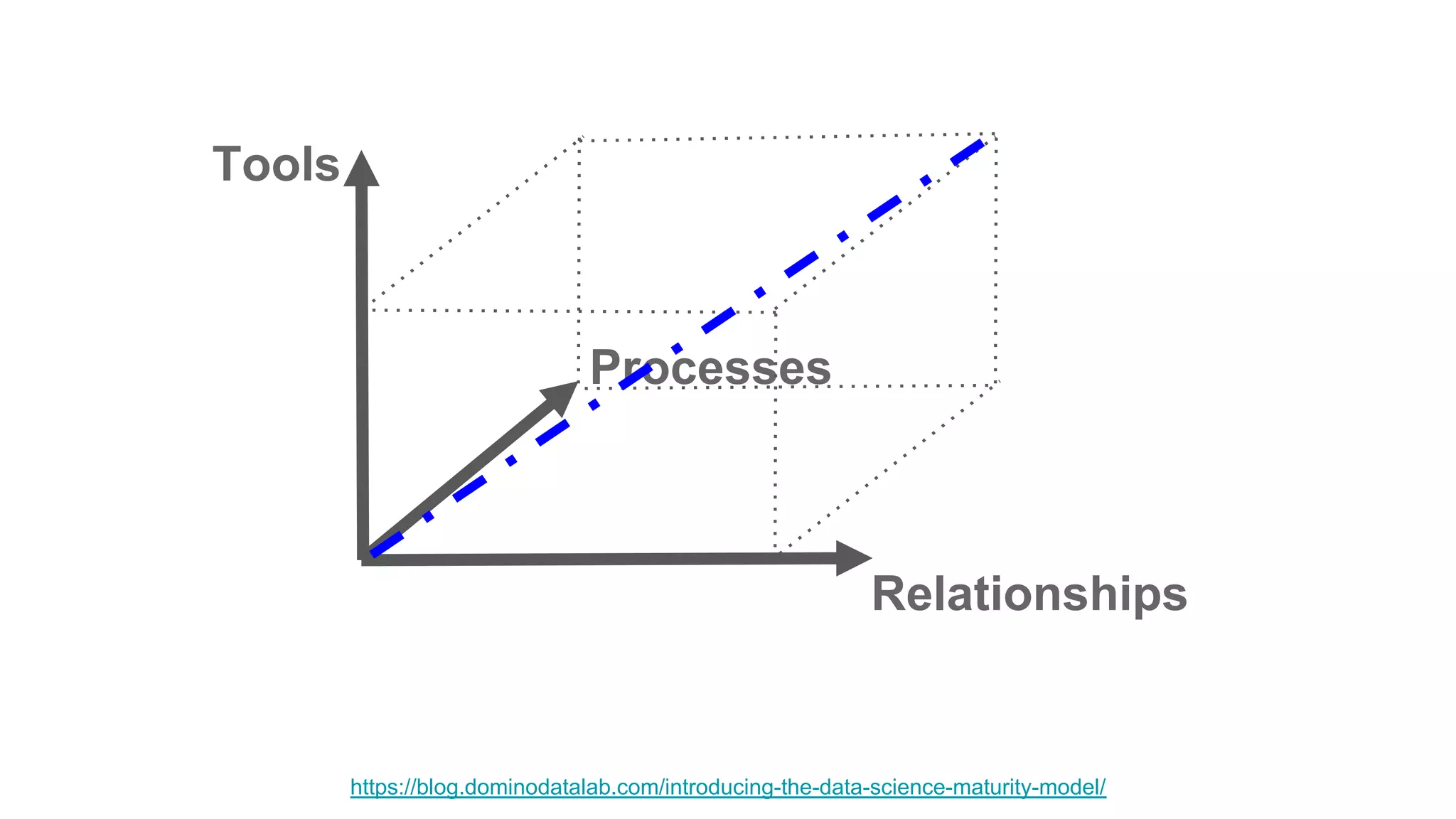

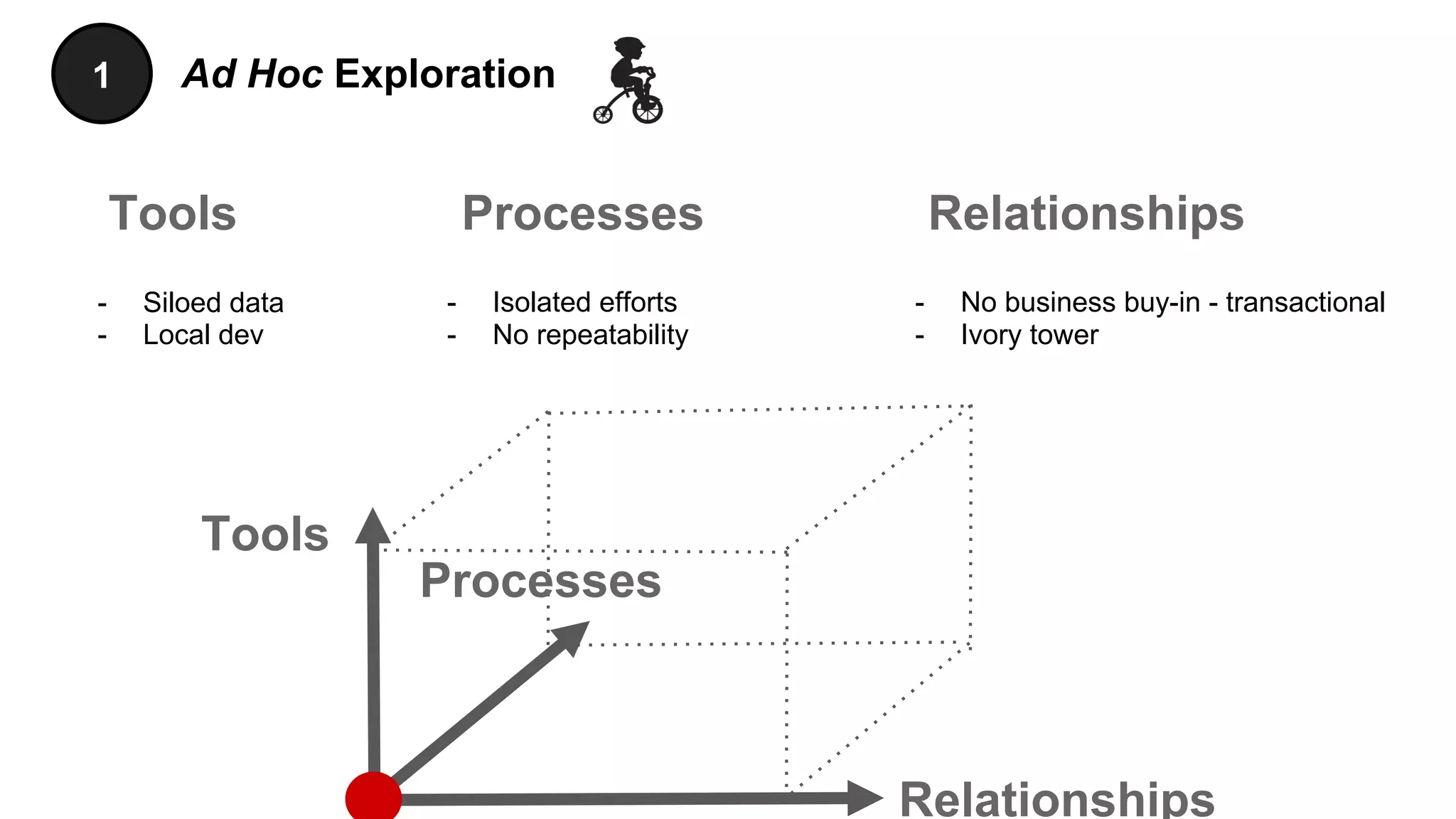

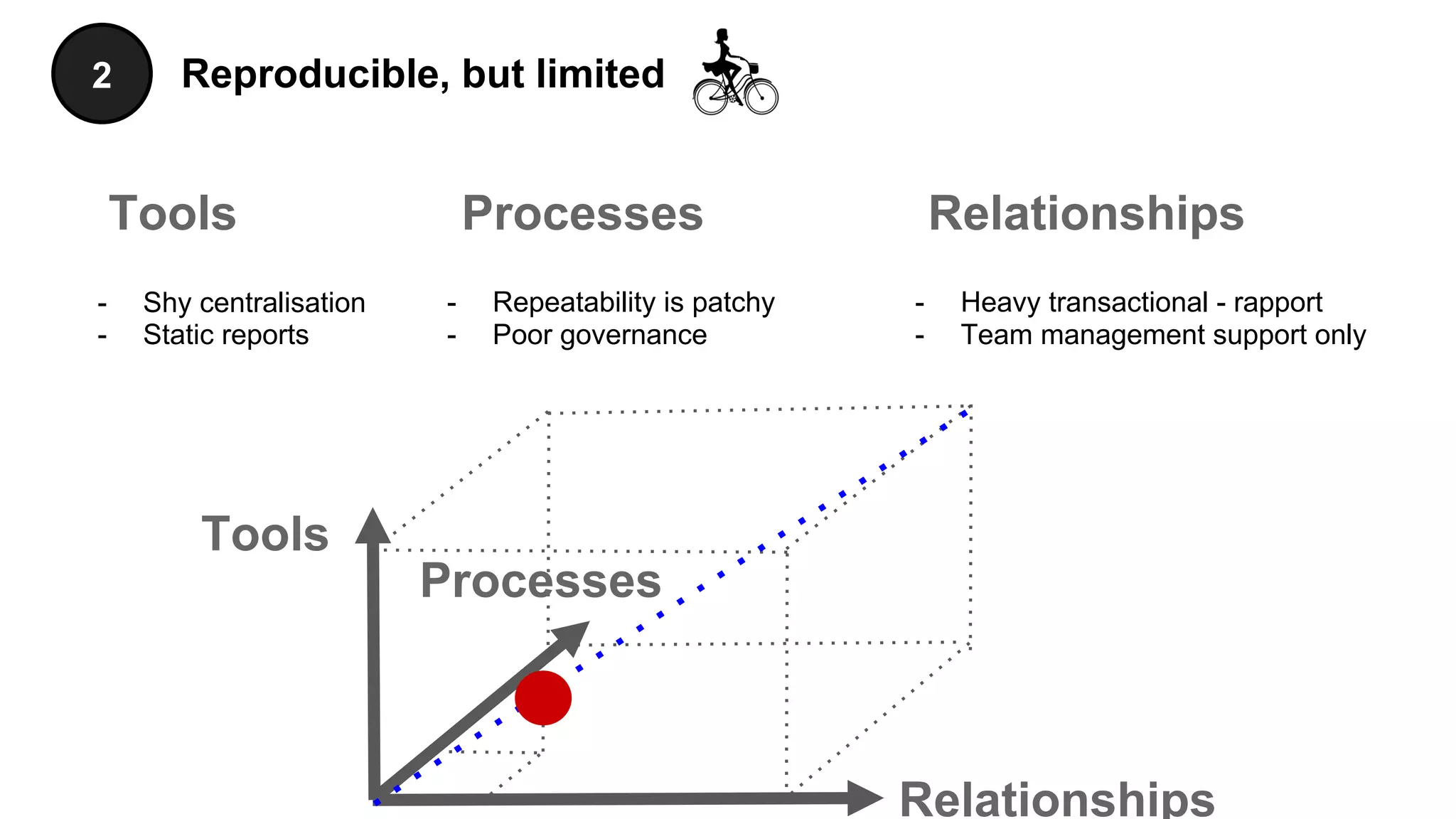

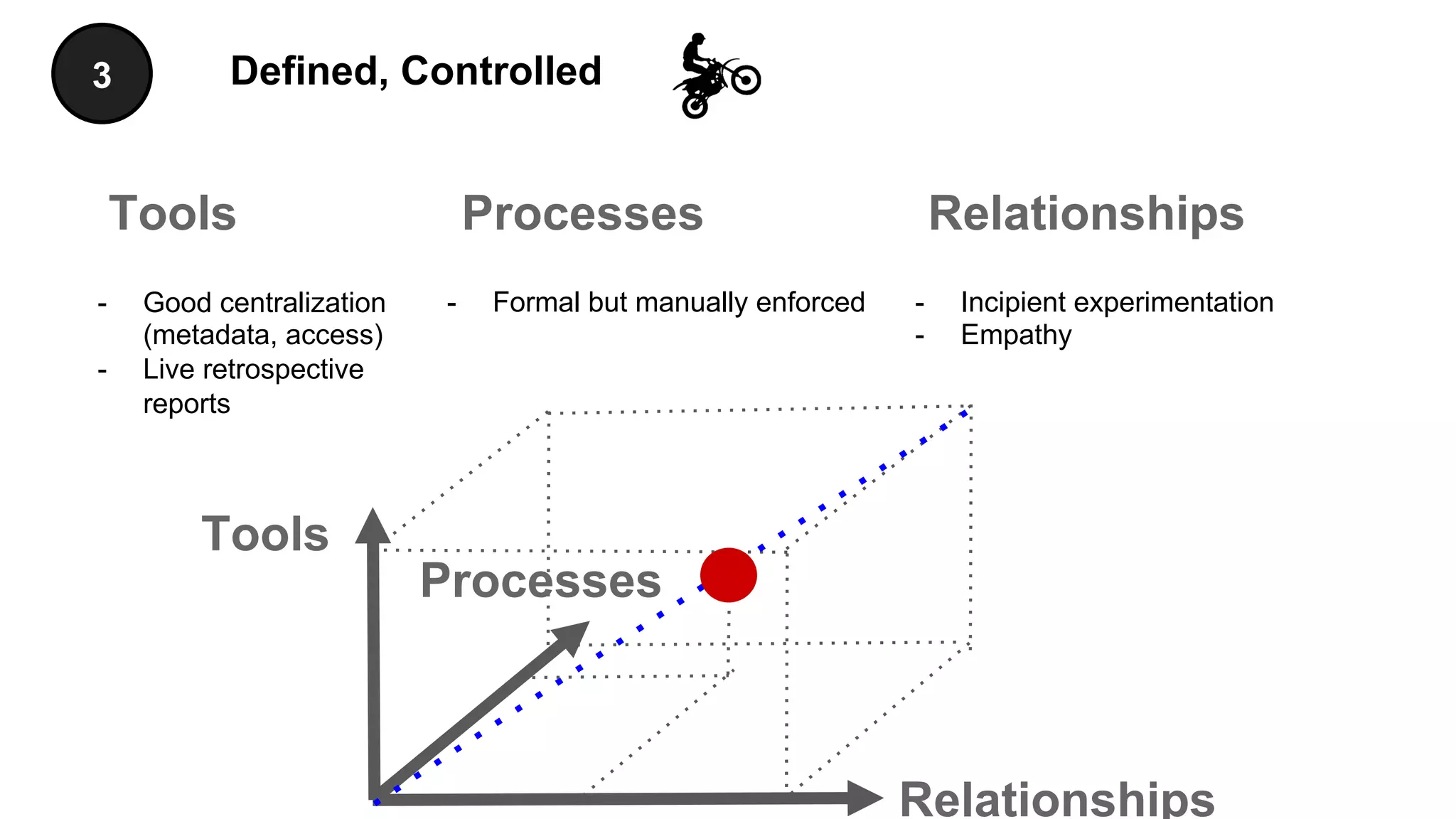

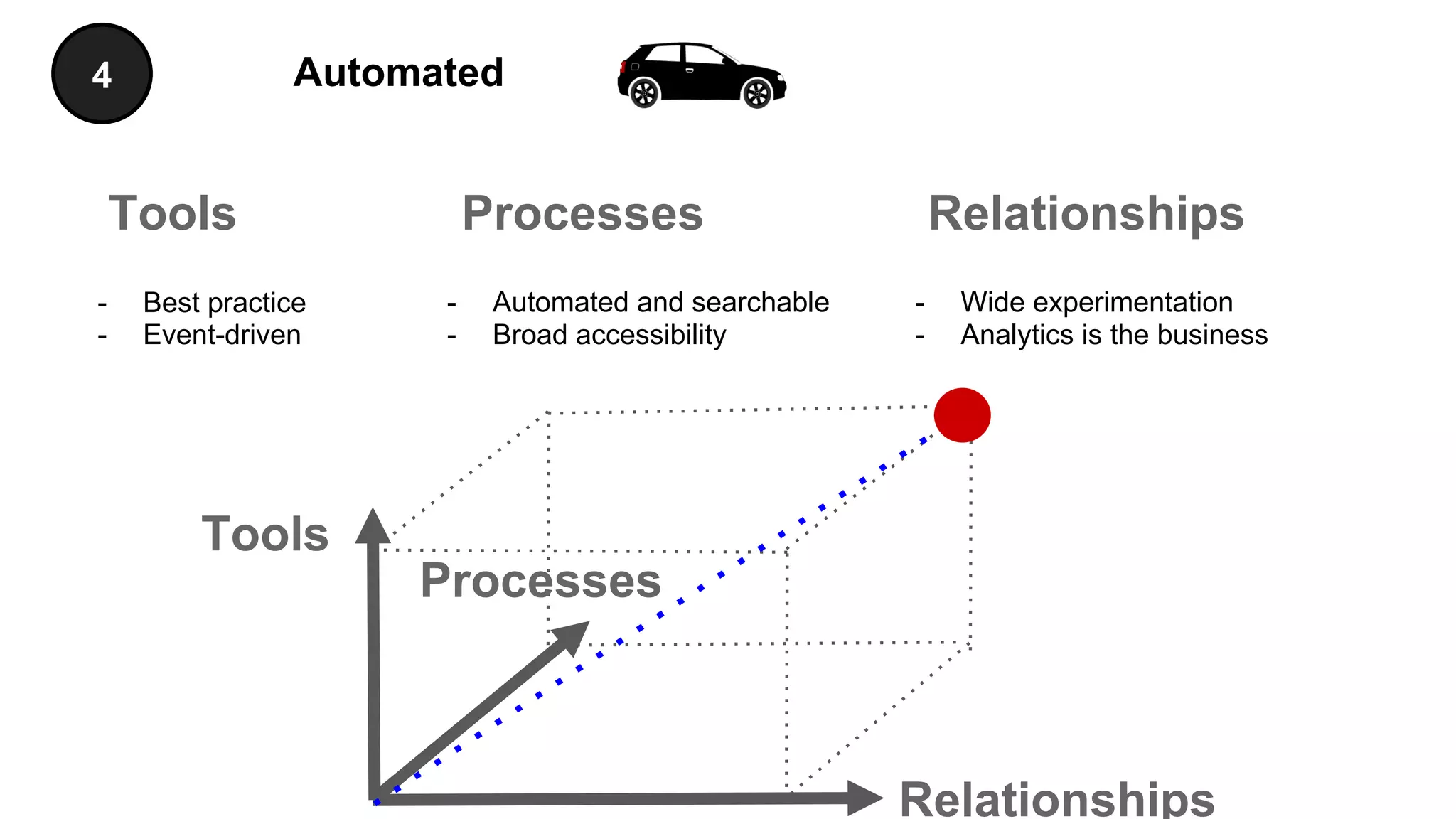

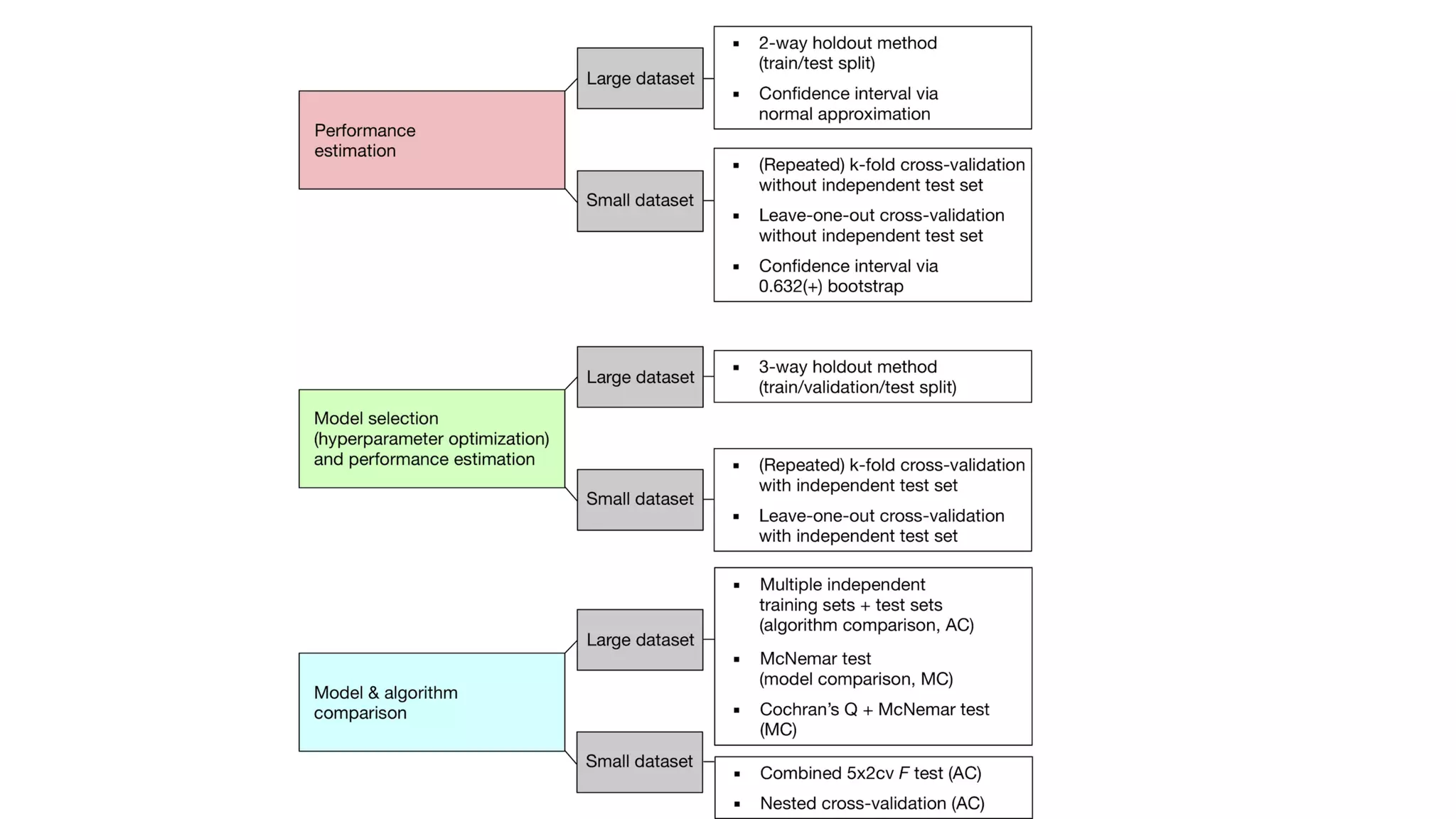

The document discusses how DataOps makes data work within organizations more efficient and more impactful. It highlights the challenges that data scientists and web developers face, and argues for an end-to-end data pipeline with a testing process that ensures quality and reliability during machine learning model development. It also covers why data versioning and automated processes matter for improving governance and collaboration in data management.
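As an illustration of two of the practices named above, automated data testing and data versioning, here is a minimal sketch in plain Python. It is not taken from the slides: the helpers `dataset_version` and `check_quality` are hypothetical, and the sketch assumes the dataset fits in memory as a list of records. The version ID is a content hash, so it changes whenever the data changes. The quality check collects missing required fields so a pipeline can stop before bad rows reach model training.

```python
import hashlib
import json


def dataset_version(records: list[dict]) -> str:
    """Hypothetical helper: derive a short version ID from the data's content."""
    # Deterministic serialization (sorted keys, compact separators) means the
    # same records always hash to the same ID, regardless of dict key order.
    payload = json.dumps(records, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def check_quality(records: list[dict], required: tuple[str, ...]) -> list[str]:
    """Hypothetical helper: list every row that is missing a required field."""
    errors = []
    for i, row in enumerate(records):
        for field in required:
            if row.get(field) is None:
                errors.append(f"row {i}: missing '{field}'")
    return errors


if __name__ == "__main__":
    data = [{"id": 1, "label": "spam"}, {"id": 2, "label": None}]
    print(dataset_version(data))
    print(check_quality(data, ("id", "label")))  # ["row 1: missing 'label'"]
```

In a real pipeline, a step like this would typically run automatically on each new batch. It would record the version ID alongside the trained model and fail the run if `check_quality` returns any errors. Dedicated tools offer richer versions of both ideas.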