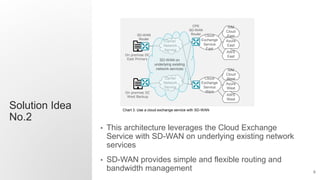

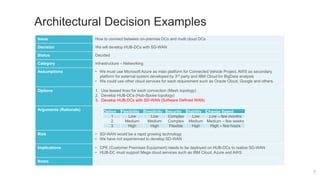

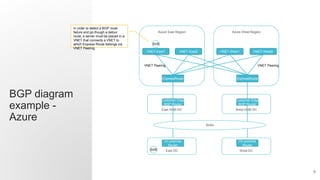

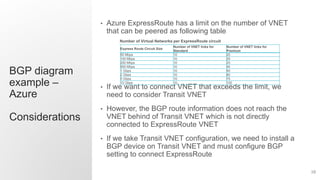

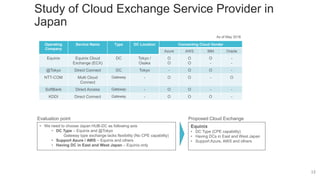

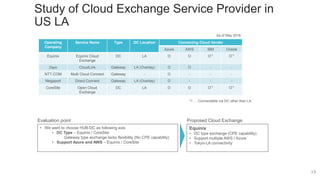

The document presents a multi-cloud networking reference architecture that uses SD-WAN and cloud exchange services to connect on-premises data centers with multiple public clouds flexibly and at low cost. It outlines the problems with traditional mesh topologies and proposes a hub-and-spoke model that uses SD-WAN to improve bandwidth and routing management. It also evaluates cloud exchange service providers in Japan and the US that could support this architecture.
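
One general reason a mesh becomes hard to manage is that the number of point-to-point links grows quadratically with the number of sites, while a hub-and-spoke design grows linearly. The sketch below illustrates that arithmetic only. The function names are hypothetical, and it assumes the hub (for example, a cloud exchange) is a separate node to which each data center or cloud attaches with a single link; it is not taken from the document's own sizing.

```python
def full_mesh_links(sites: int) -> int:
    """Links needed to connect every pair of sites directly: n * (n - 1) / 2."""
    if sites < 0:
        raise ValueError("sites must be non-negative")
    return sites * (sites - 1) // 2


def hub_spoke_links(sites: int) -> int:
    """Links needed when each site has one link to a separate central hub (assumption)."""
    if sites < 0:
        raise ValueError("sites must be non-negative")
    return sites


if __name__ == "__main__":
    # Compare link counts for a few illustrative site counts
    # (on-premises data centers plus public clouds).
    for n in (3, 5, 10):
        print(f"sites={n} mesh={full_mesh_links(n)} hub_spoke={hub_spoke_links(n)}")
```

Under these assumptions the two designs need the same number of links at three sites, and the mesh count pulls away quickly as more clouds or data centers are added.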