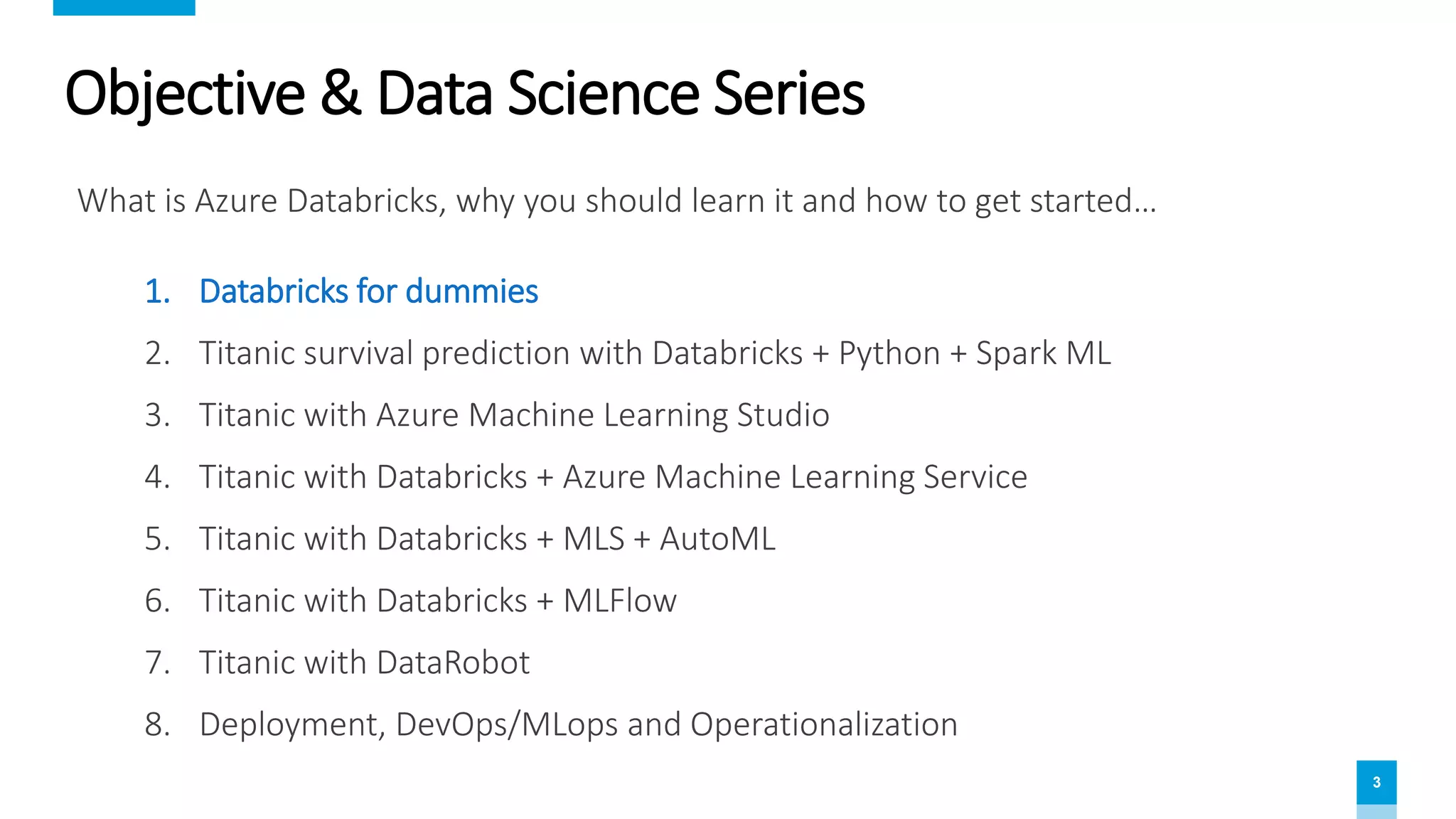

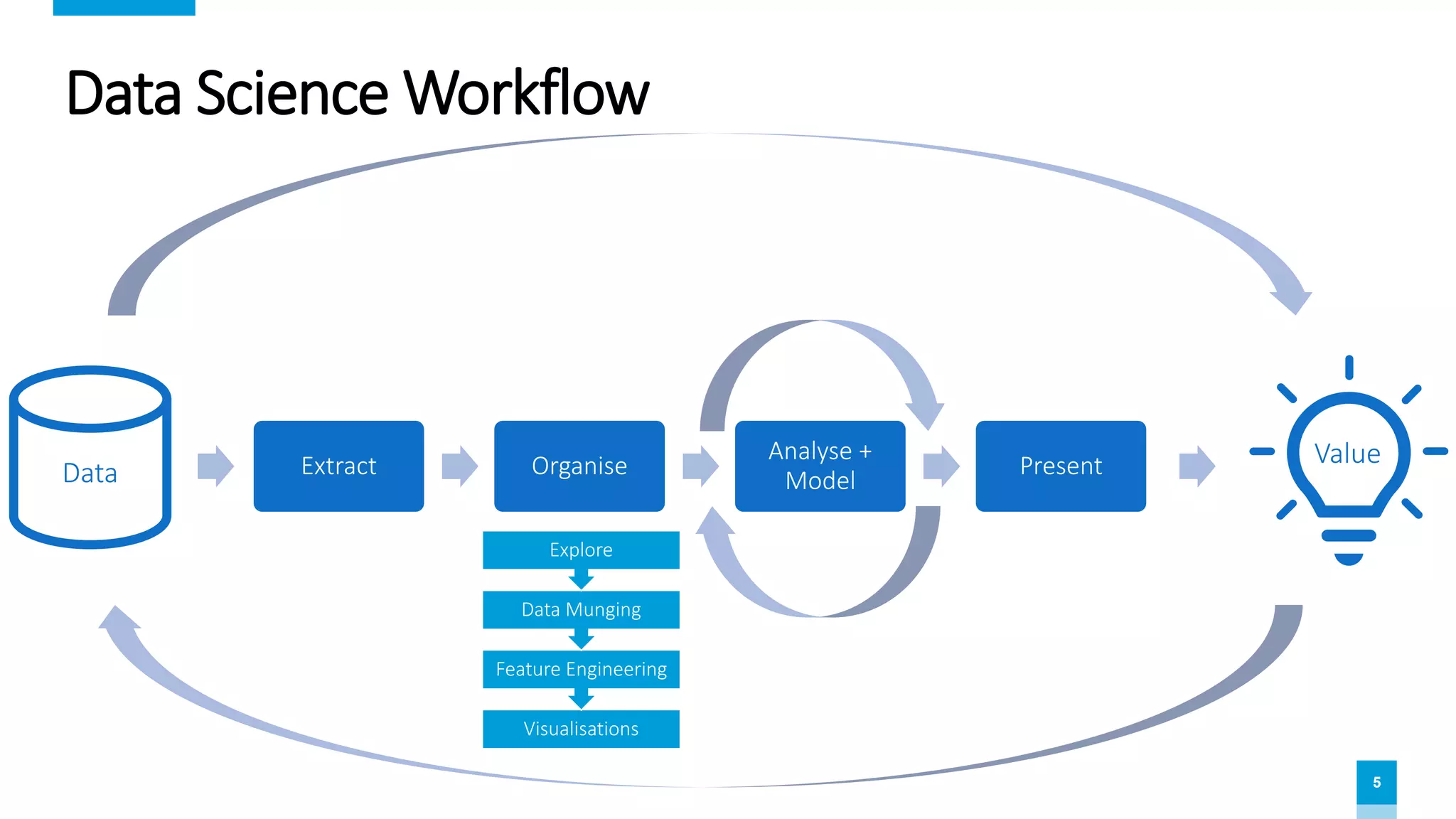

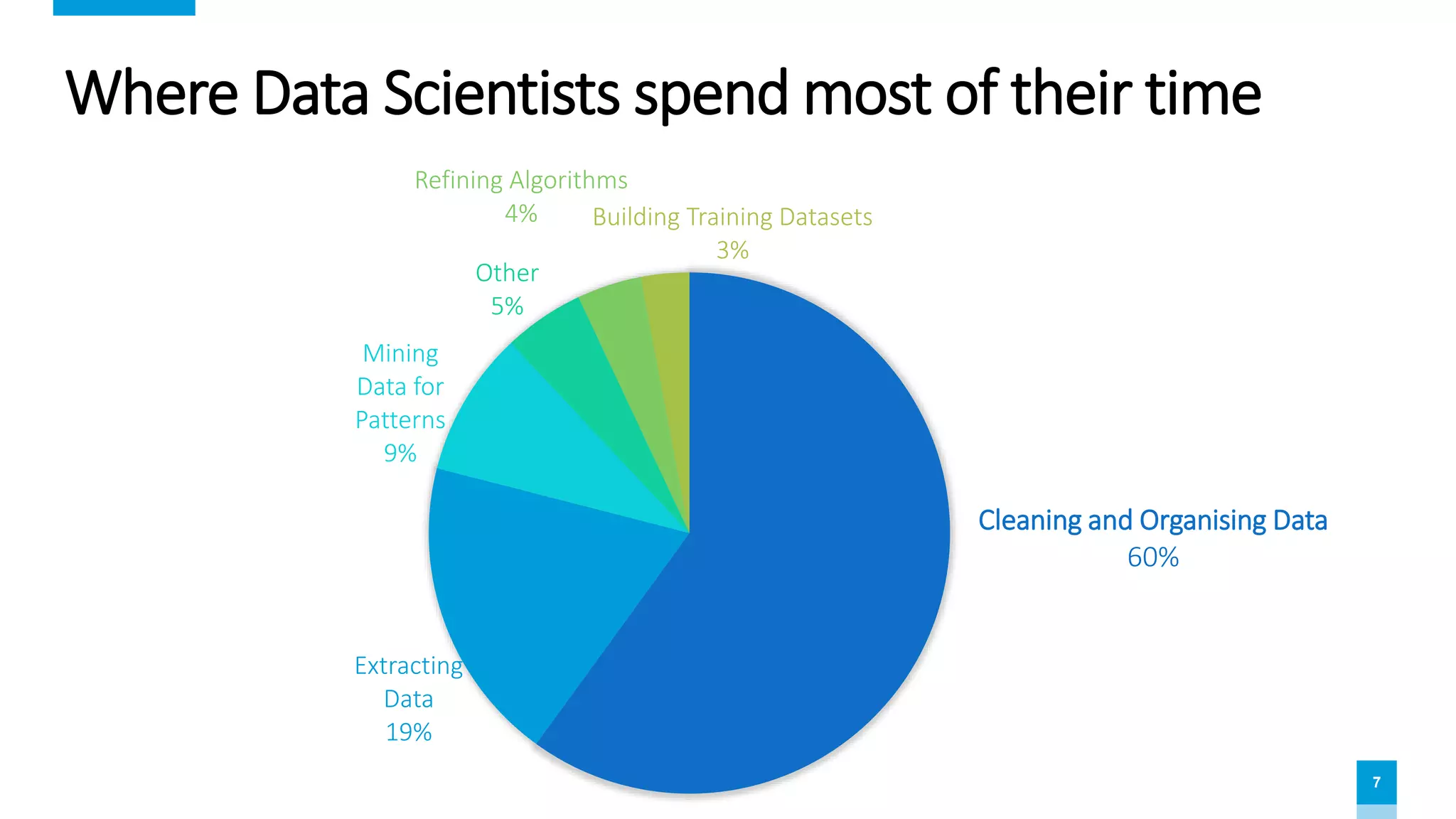

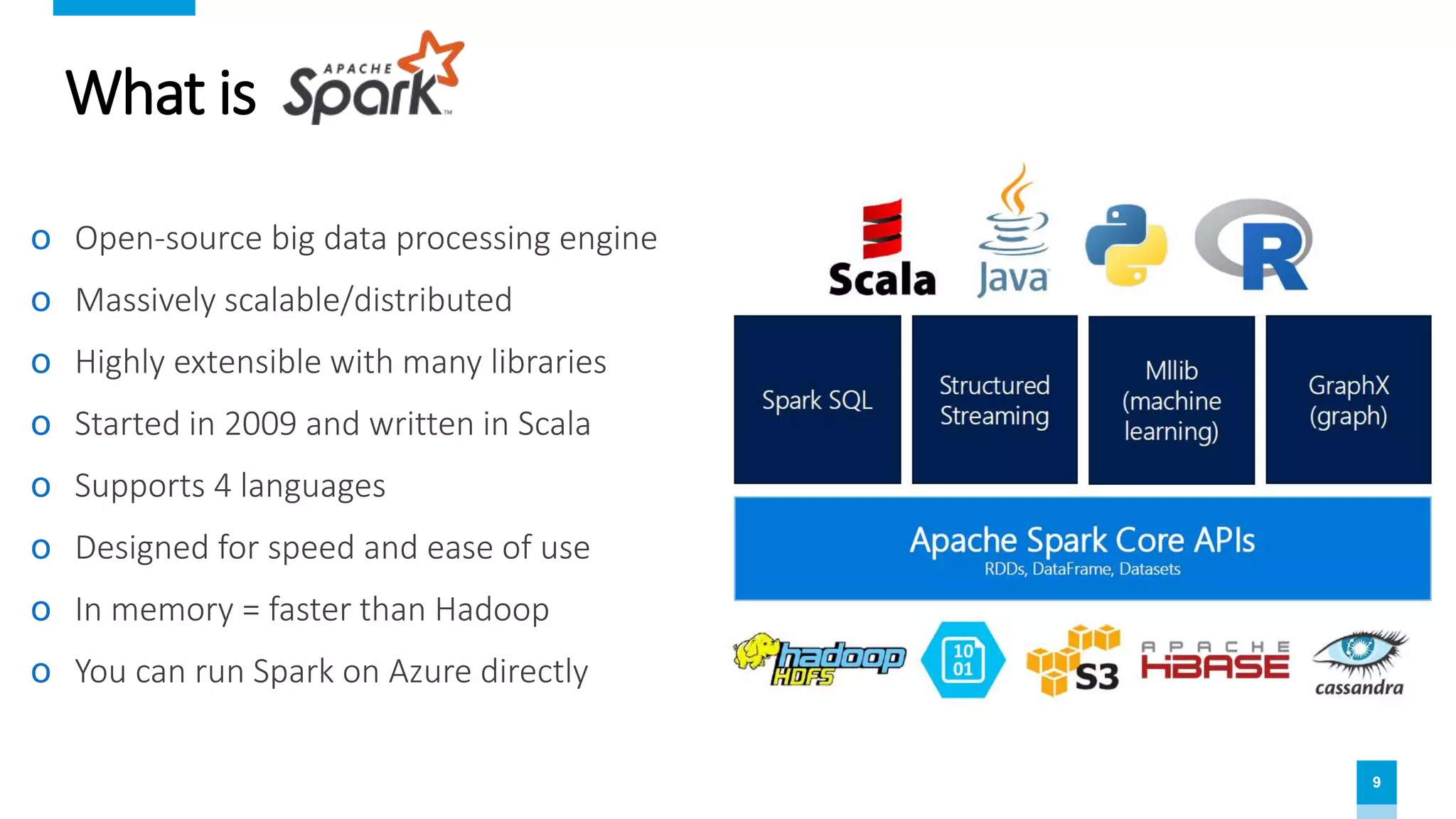

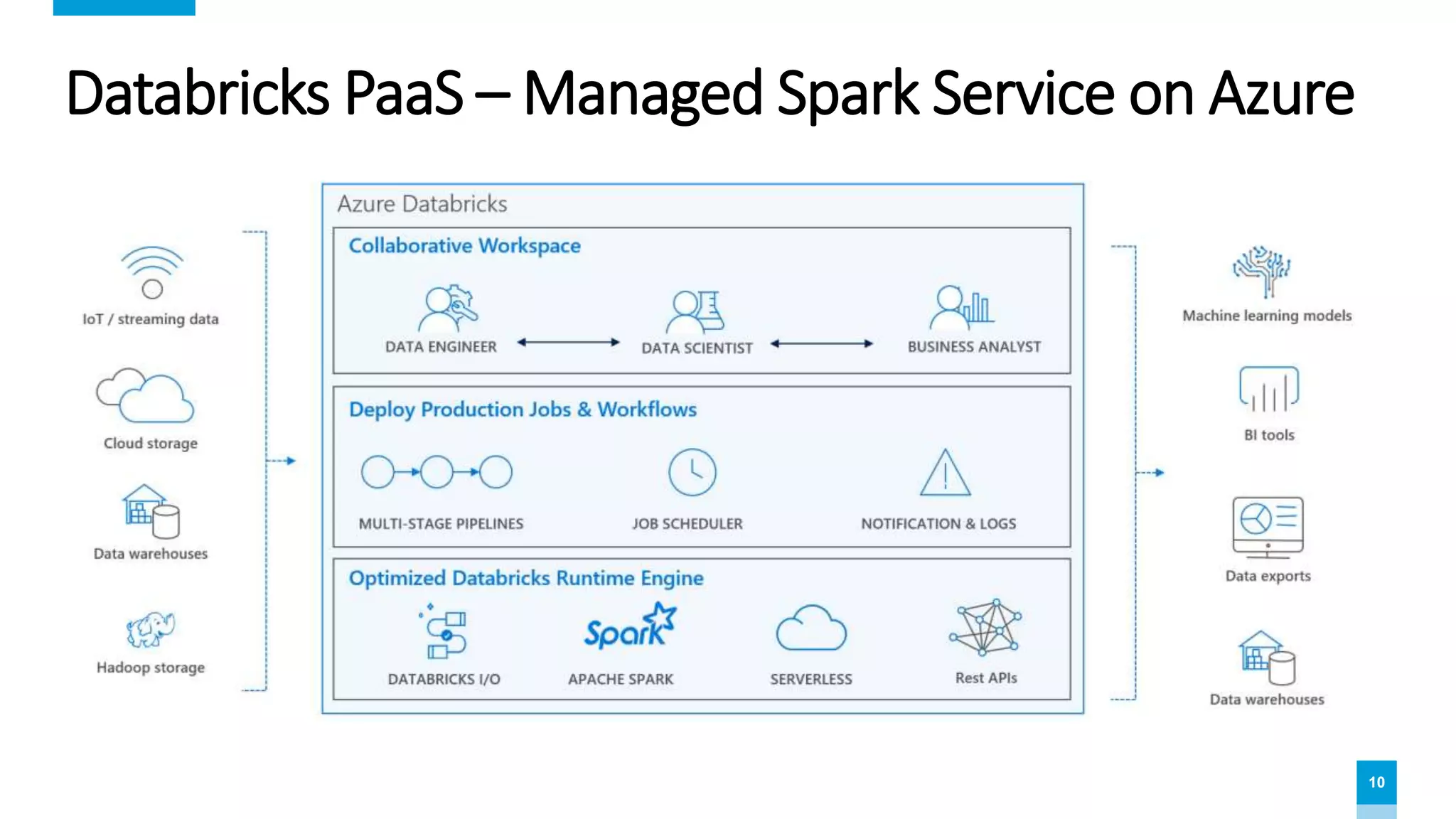

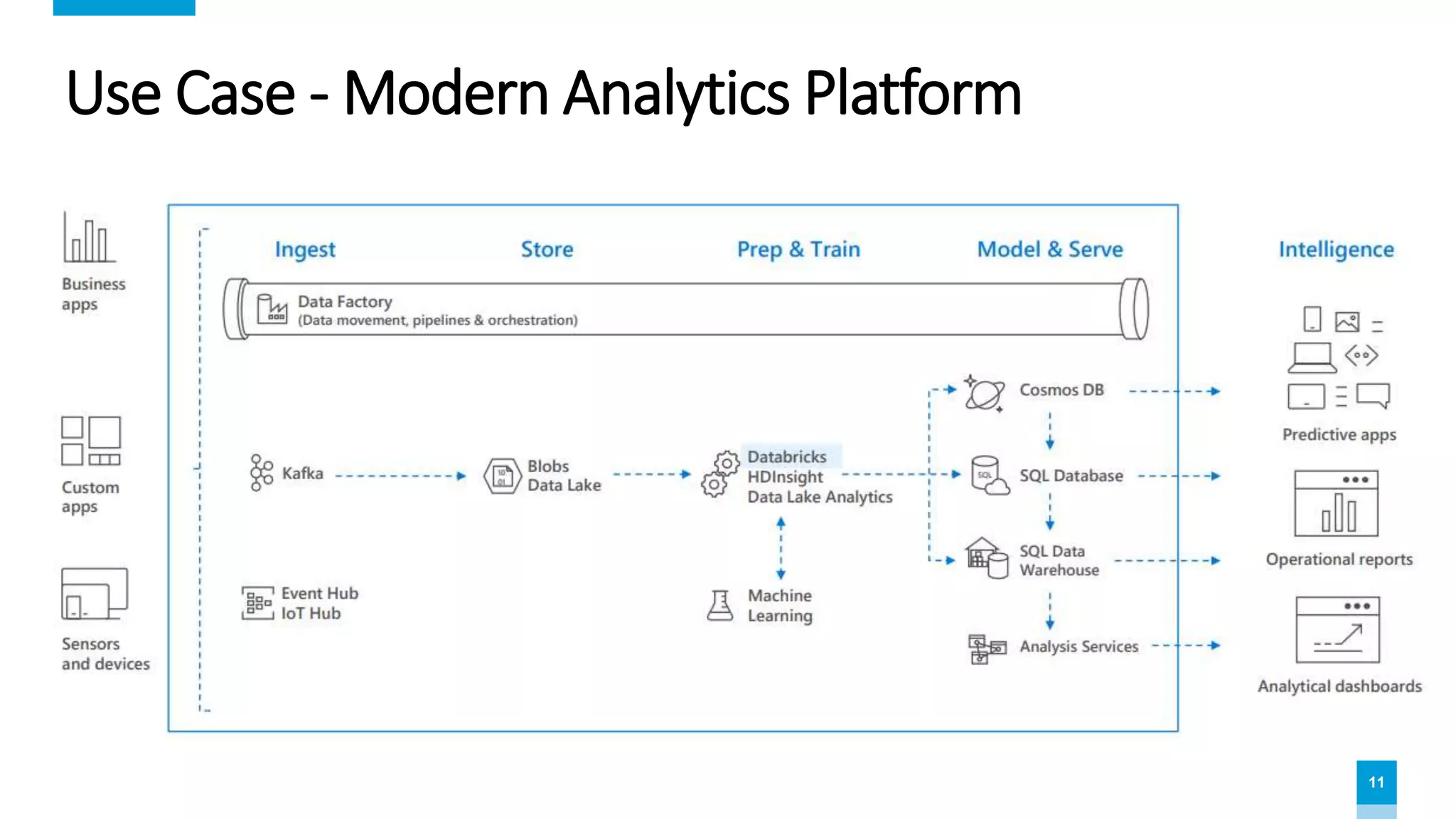

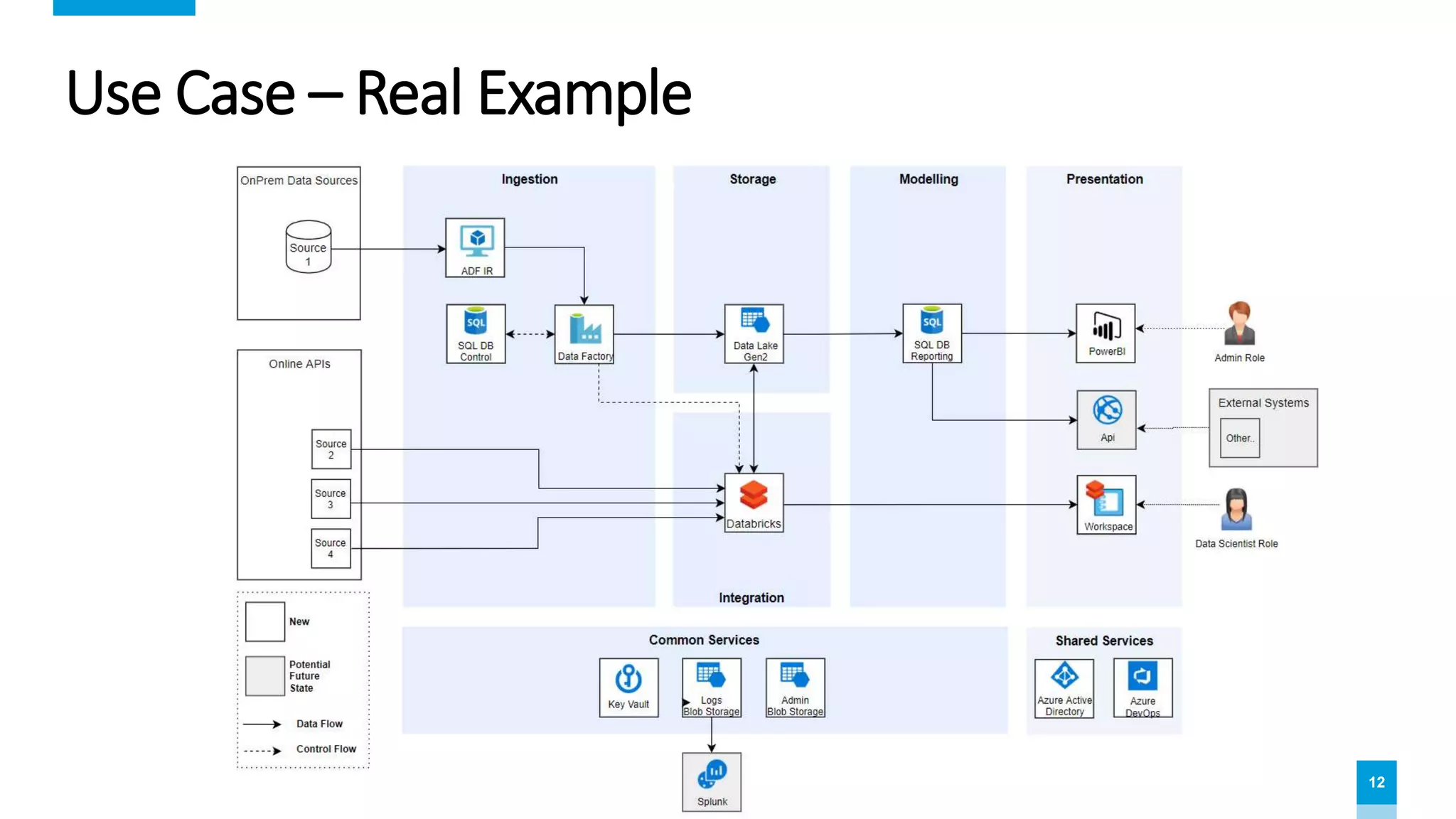

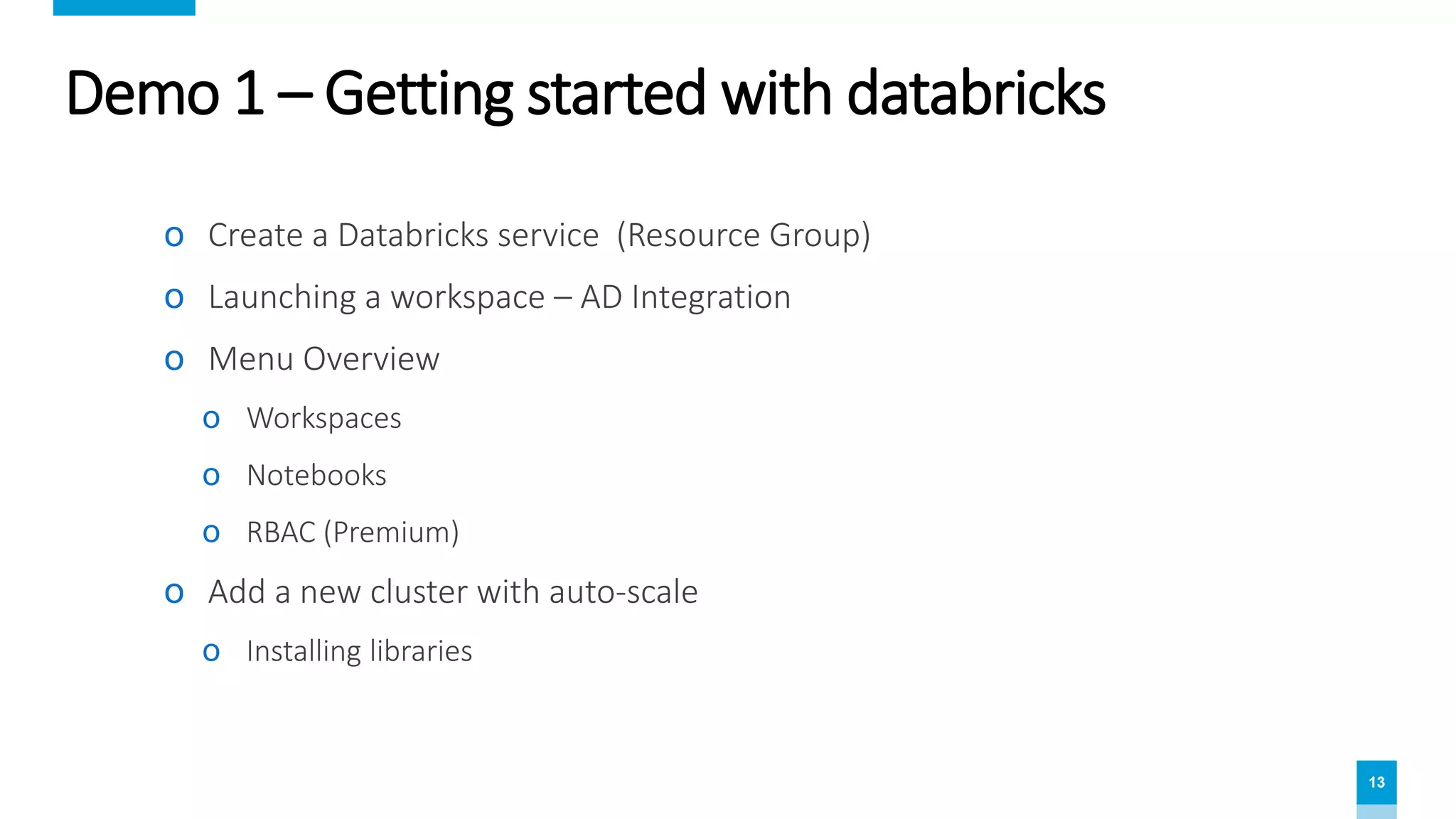

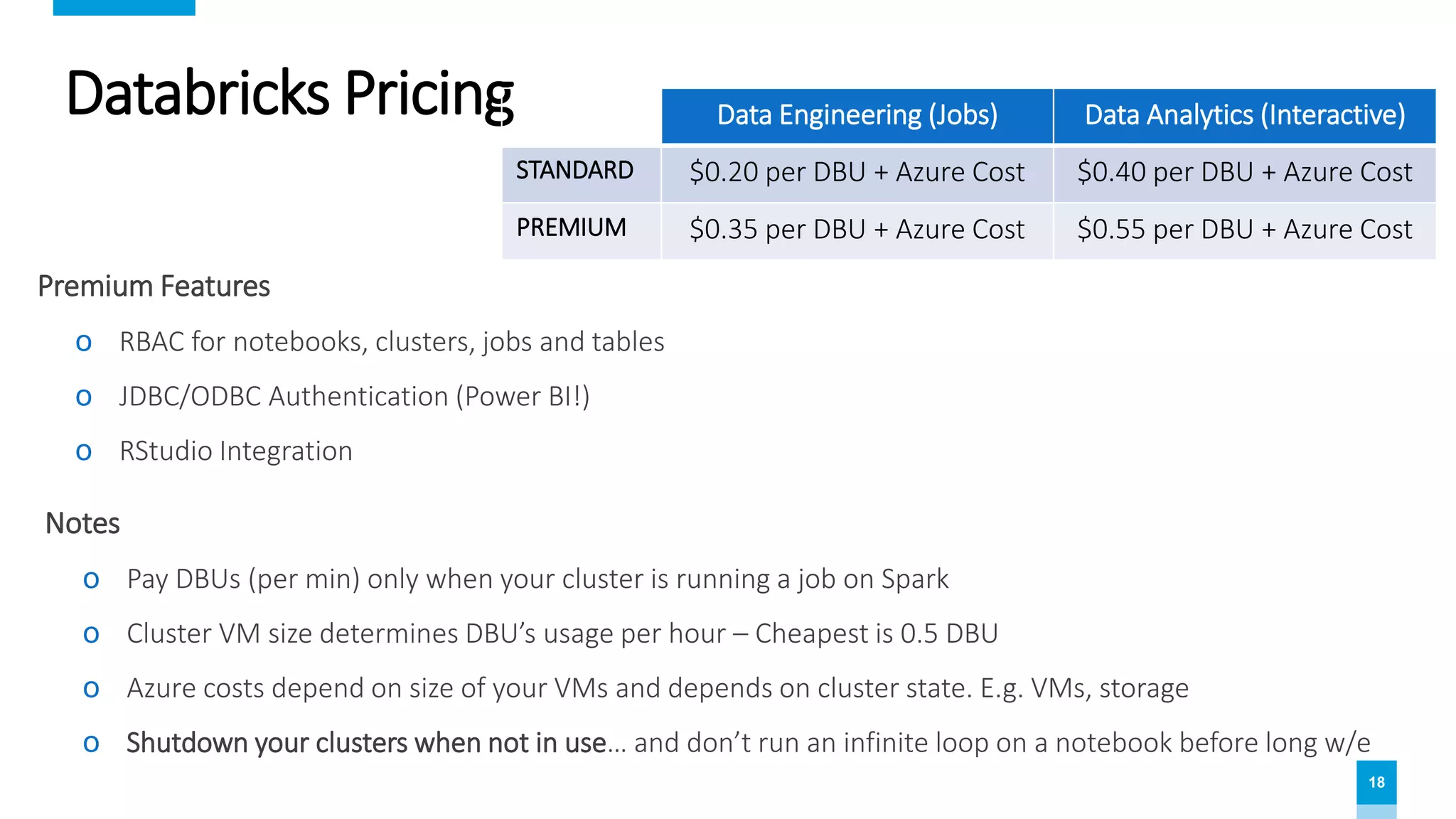

The document provides an overview of data science and analytics using Databricks and Azure, highlighting tools, workflows, and practical use cases such as Titanic survival prediction. It covers the challenges that data engineers and data scientists face, along with solutions to them, and emphasizes the importance of a unified analytics platform. It also includes details about Databricks pricing and features, along with demos for getting started.