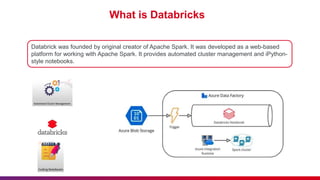

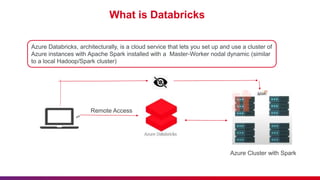

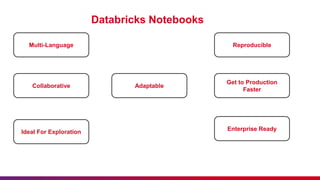

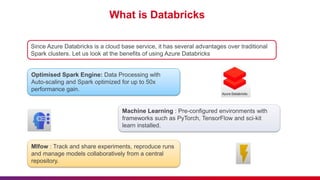

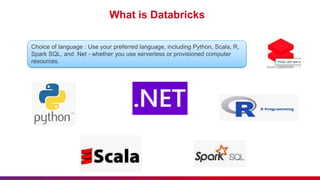

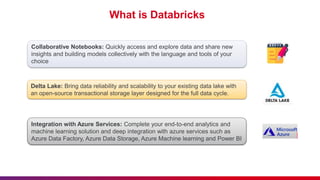

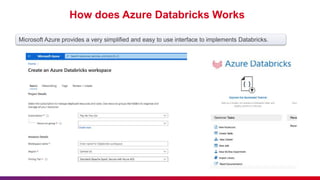

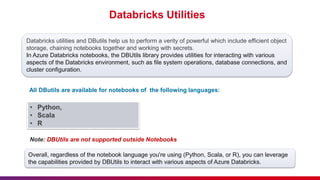

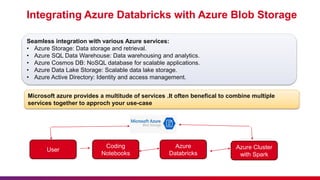

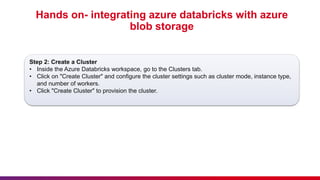

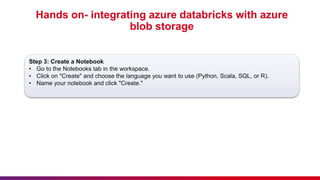

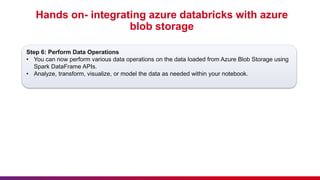

The document provides an overview of Azure Databricks, a cloud-based data and AI service from Microsoft and Databricks for analytics, machine learning, and data engineering. It outlines the service's key features and benefits and the steps for integrating it with other Azure services, especially Azure Blob Storage. It also emphasizes the importance of etiquette during sessions. Finally, it gives practical steps for setting up and using Azure Databricks effectively.