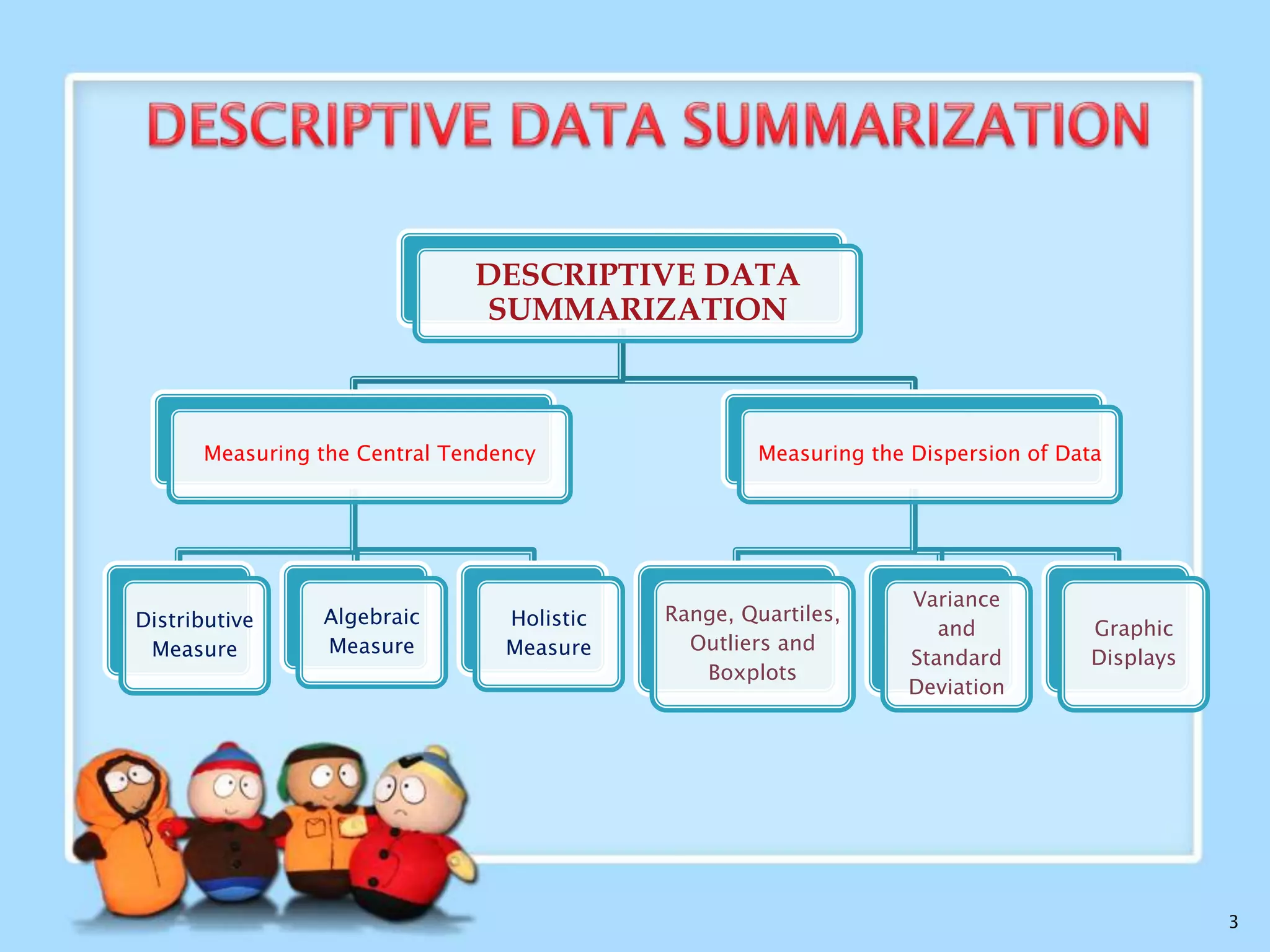

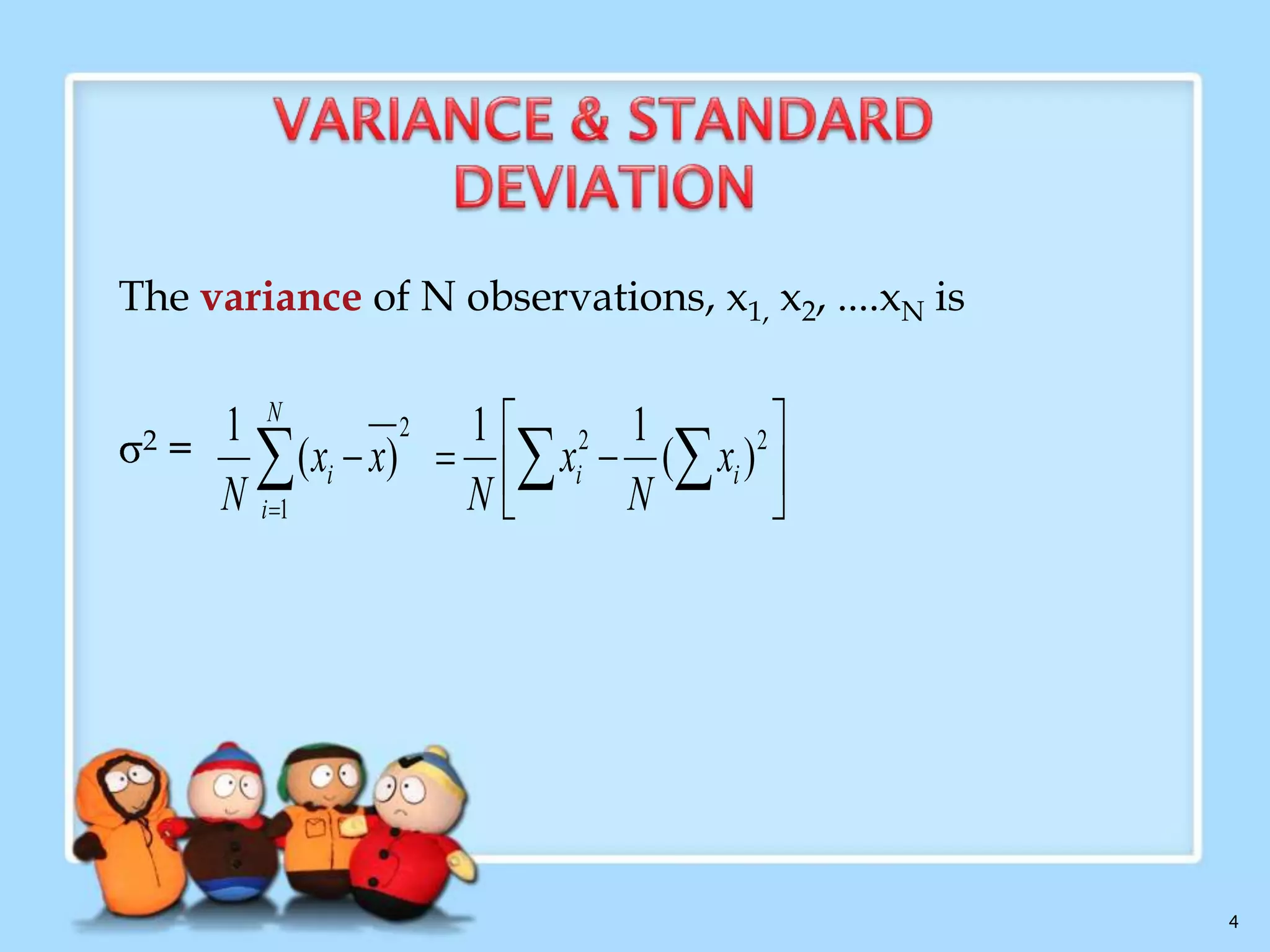

The document discusses descriptive data summarization techniques for data cleaning and preprocessing. It describes common issues with real-world data including missing values, noise, and inconsistencies. Common techniques for data cleaning are then presented, such as data cleaning, integration, transformation, and reduction. Methods for handling missing values, smoothing noisy data, and resolving inconsistencies are outlined. Finally, descriptive statistical techniques for summarizing data distributions are reviewed, including measures of central tendency, dispersion, and graphical displays like histograms and scatter plots.

![

Min-max normalization: to [new_minA, new_maxA]

v'

v minA

(new _ maxA new _ minA) new _ minA

maxA minA

◦ Ex. Let income range $12,000 to $98,000 normalized to [0.0, 1.0].

Then $73,000 is mapped to 73,600 12,000 (1.0 0) 0 0.716

98,000 12 ,000

Z-score normalization (μ: mean, σ: standard deviation):

v

A

v'

73,600 54 ,000

A

1.225

◦ Ex. Let μ = 54,000, σ = 16,000. Then

Normalization by decimal scaling

v

v'

10 j

16 ,000

Where j is the smallest integer such that Max(|ν’|) < 1

31](https://image.slidesharecdn.com/datamining-140224045836-phpapp01/75/Data-mining-31-2048.jpg)