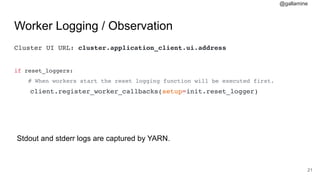

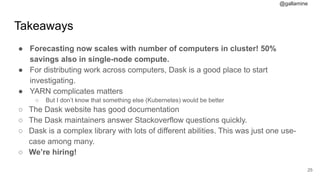

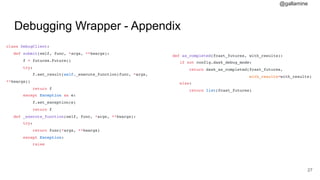

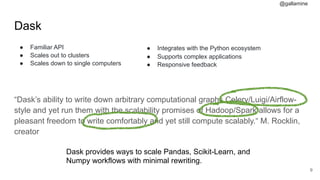

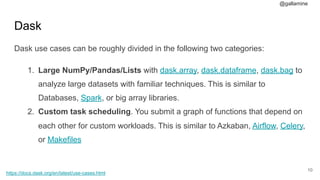

William Cox discusses the challenges in scheduling drivers for food delivery at Grubhub and how his team forecasts order volumes to optimize driver allocation. The use of Dask is highlighted as an effective tool for parallelizing predictions and scaling workflows while integrating well with the Python ecosystem. Key takeaways include significant savings in computation time and the benefits of distributed computing for enhanced forecasting capabilities.

![@gallamine

#13

from dask.distributed import Client, as_completed

import time

import random

if __name__ == "__main__":

client = Client()

predictions = []

for group in ["a", "b", "c", "d"]:

static_parameters = 1

fcast_future = client.submit(_forecast, group, static_parameters, pure=False)

predictions.append(fcast_future)

for future in as_completed(predictions, with_results=False):

try:

print(f"future {future.key} returned {future.result()}")

except ValueError as e:

print(e)

“The concurrent.futures module provides a high-level

interface for asynchronously executing callables.” Dask implements

this interface

Arbitrary function we’re scheduling](https://image.slidesharecdn.com/pycolorado2019daskml-190918144821/85/Dask-and-Machine-Learning-Models-in-Production-PyColorado-2019-13-320.jpg)

![@gallamine

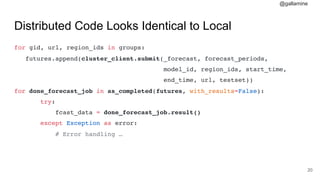

worker = skein.Service(

instances=config.dask_worker_instances,

max_restarts=10,

resources=skein.Resources(

memory=config.dask_worker_memory,

vcores=config.dask_worker_vcores

),

files={

'./cachedvolume': skein.File(

source=config.volume_sqlite3_filename, type='file'

)

},

env={'THEANO_FLAGS': 'base_compiledir=/tmp/.theano/',

'WORKER_VOL_LOCATION': './cachedvolume',},

script='dask-yarn services worker',

depends=['dask.scheduler']

)

Program-

matically

Describe

Service

#18](https://image.slidesharecdn.com/pycolorado2019daskml-190918144821/85/Dask-and-Machine-Learning-Models-in-Production-PyColorado-2019-18-320.jpg)