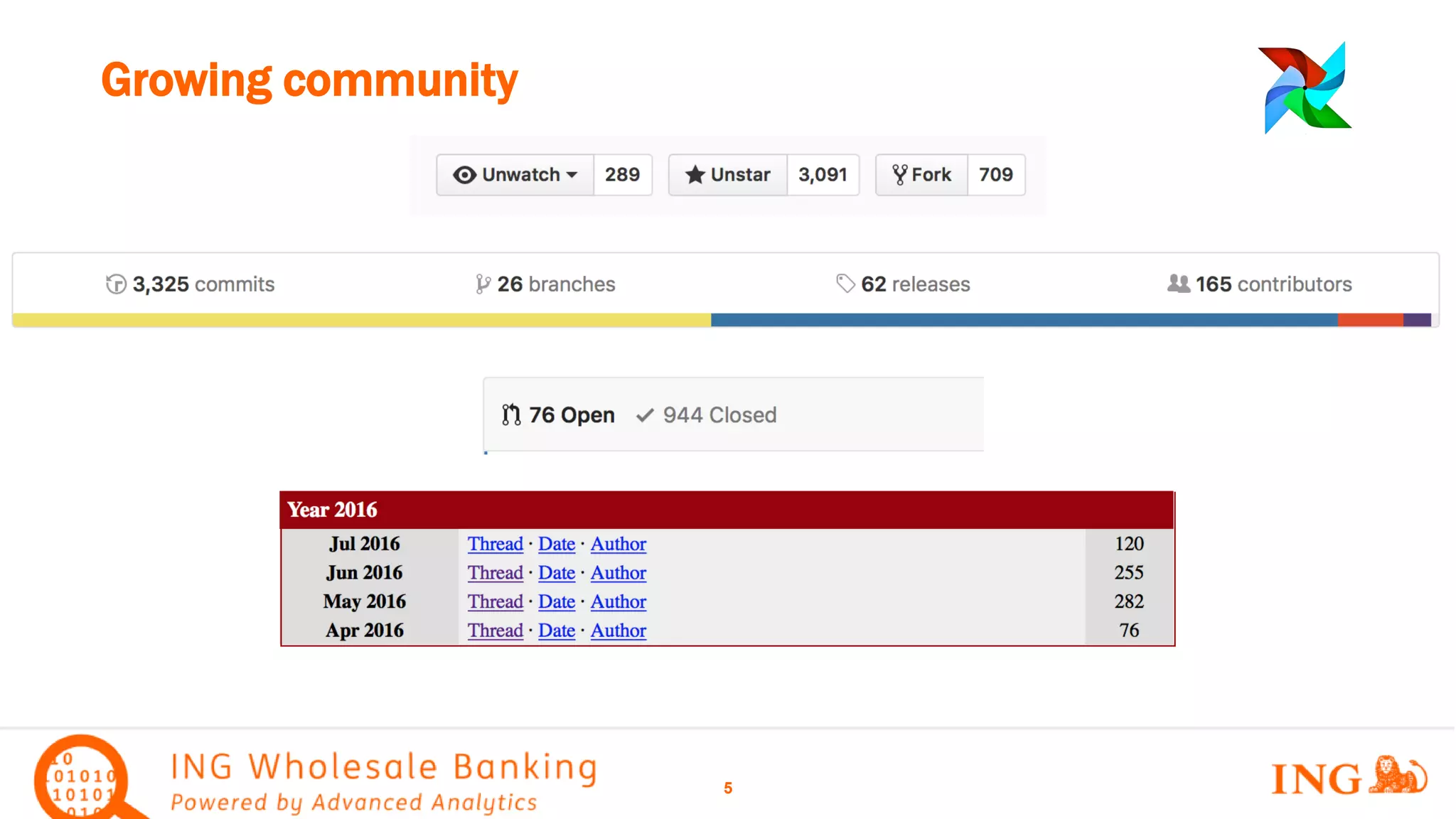

The document discusses Apache Airflow, at the time an Apache Incubator project, which is designed to orchestrate complex workflows, particularly in the banking and financial services sector. It highlights features such as fault tolerance and scalability, stresses the importance of idempotent tasks, and outlines the available execution models and their configuration. It also touches on future roadmap initiatives and community engagement resources.
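To make "idempotent task" concrete: a task is idempotent when running it several times for the same execution date leaves the target in the same state as running it once, which is what makes retries and backfills safe. The sketch below is plain Python, not Airflow's API; the in-memory `warehouse` dict, the `load_daily_balances` function and the account data are all hypothetical. It uses the common pattern of overwriting the partition keyed by the execution date instead of appending to it.

```python
from datetime import date

# Hypothetical target table: one partition of (account, balance) rows per execution date.
Rows = list[tuple[str, float]]
warehouse: dict[date, Rows] = {}


def load_daily_balances(execution_date: date, rows: Rows) -> None:
    """Idempotent load: replace the whole partition for execution_date.

    Retries and backfills for the same date leave the target exactly as a
    single successful run would. Appending instead (for example with
    warehouse.setdefault(execution_date, []).extend(rows)) would duplicate
    rows every time the task is re-run.
    """
    warehouse[execution_date] = list(rows)


run_date = date(2016, 7, 19)
balances = [("acct-1", 100.0), ("acct-2", 250.5)]
load_daily_balances(run_date, balances)
load_daily_balances(run_date, balances)  # simulated retry of the same task instance
print(warehouse[run_date])  # [('acct-1', 100.0), ('acct-2', 250.5)]
```

In a real pipeline the same idea usually means a delete-then-insert or overwrite of the partition for the run's date inside the task.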

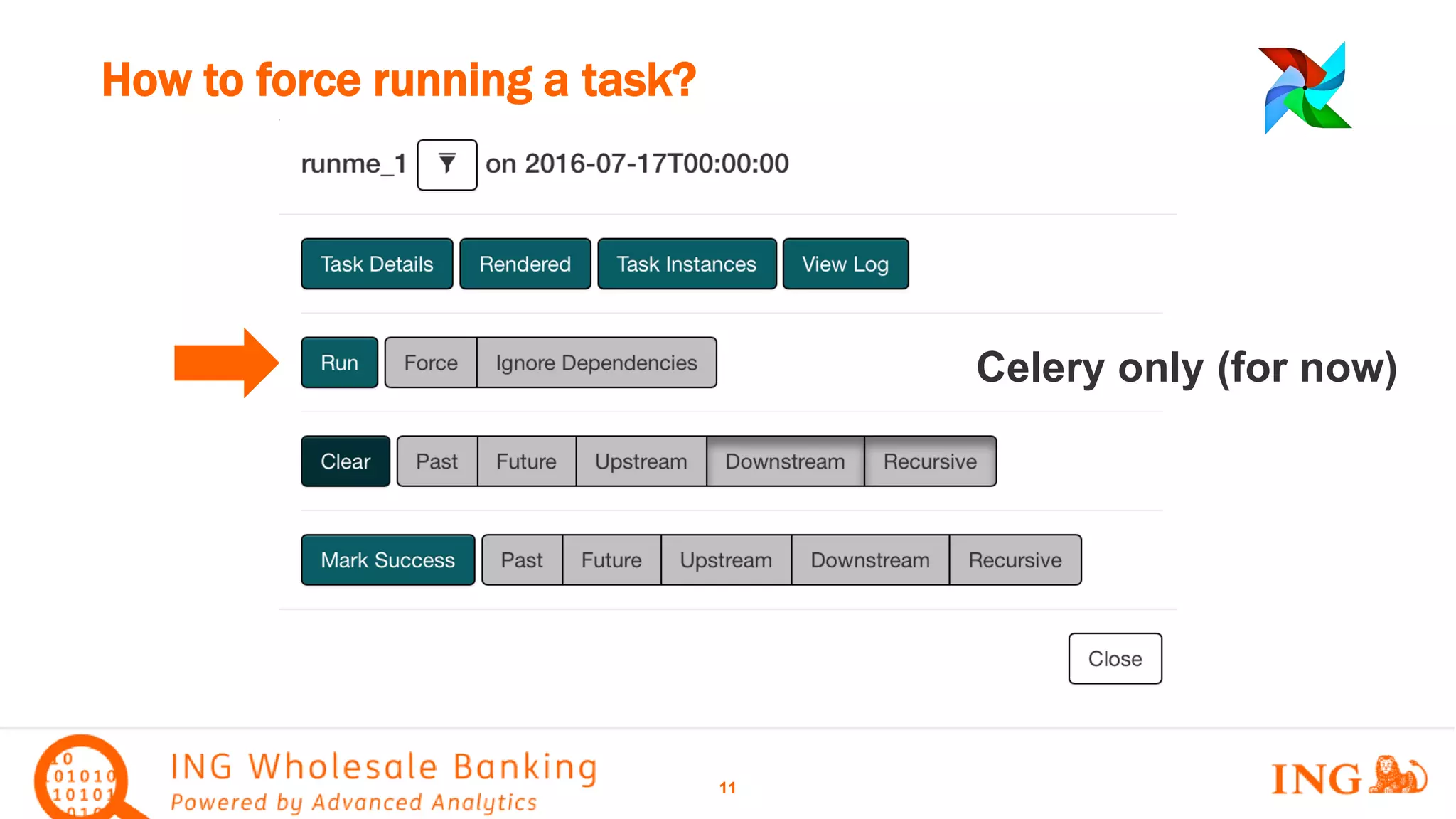

![Choose an executor that fits your environment](https://image.slidesharecdn.com/airflowmeetup20160719-160720071253/75/Apache-Airflow-incubating-NL-HUG-Meetup-2016-07-19-7-2048.jpg)

| | SequentialExecutor | LocalExecutor | CeleryExecutor |
| --- | --- | --- | --- |
| Use case | Mainly testing | Production (~50% of installed base) | Production (~50% of installed base) |
| Scalability | n/a | Vertical | Horizontal and vertical |
| Complexity | Low | Medium | Medium/High |
| DAG files | Local | Local | Need to be synced to the workers or pickled |
| Configuration | `[core]` `executor = SequentialExecutor` | `[core]` `executor = LocalExecutor`, `parallelism = 32` | `[core]` `executor = CeleryExecutor`; `[celery]` `celeryd_concurrency = 32`, `broker_url = rabbitmq`, `celery_result_backend`, `default_queue =` |

Remark: don't use `num_runs`.
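The configuration settings on this slide are entries in the INI-style `airflow.cfg`. As a minimal sketch, using Python's standard `configparser` rather than Airflow's own config loader, the hypothetical helper `executor_summary` reads such a fragment and reports how many task instances one machine may run at once: one for `SequentialExecutor`, `parallelism` for `LocalExecutor`, and `celeryd_concurrency` per worker for `CeleryExecutor`. Falling back to `SequentialExecutor` when no executor is set is an assumption about the default configuration.

```python
import configparser


def executor_summary(cfg_text: str) -> dict:
    """Hypothetical helper: report the executor an airflow.cfg-style fragment
    selects and the per-machine task concurrency implied by the slide's settings."""
    # interpolation=None keeps values containing '%' literal; option names are
    # case-insensitive in configparser, section names are not.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    # Assumption: fall back to SequentialExecutor when [core] executor is unset.
    executor = parser.get("core", "executor", fallback="SequentialExecutor")
    if executor == "SequentialExecutor":
        per_node = 1  # runs one task instance at a time
    elif executor == "LocalExecutor":
        # parallelism caps concurrently running task instances on the single machine
        per_node = parser.getint("core", "parallelism")
    elif executor == "CeleryExecutor":
        # celeryd_concurrency caps task instances per Celery worker; add workers to scale out
        per_node = parser.getint("celery", "celeryd_concurrency")
    else:
        raise ValueError(f"unrecognized executor: {executor}")
    return {"executor": executor, "per_node_concurrency": per_node}


local_cfg = """
[core]
executor = LocalExecutor
parallelism = 32
"""

celery_cfg = """
[core]
executor = CeleryExecutor

[celery]
celeryd_concurrency = 32
"""

print(executor_summary(local_cfg))
# {'executor': 'LocalExecutor', 'per_node_concurrency': 32}
print(executor_summary(celery_cfg))
# {'executor': 'CeleryExecutor', 'per_node_concurrency': 32}
```

With `CeleryExecutor`, total capacity grows with the number of workers, which is the horizontal scaling the slide refers to; `LocalExecutor` can only grow by giving its one machine more resources and raising `parallelism`.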