This document discusses the evolution of cluster computing and resource management. It describes how:

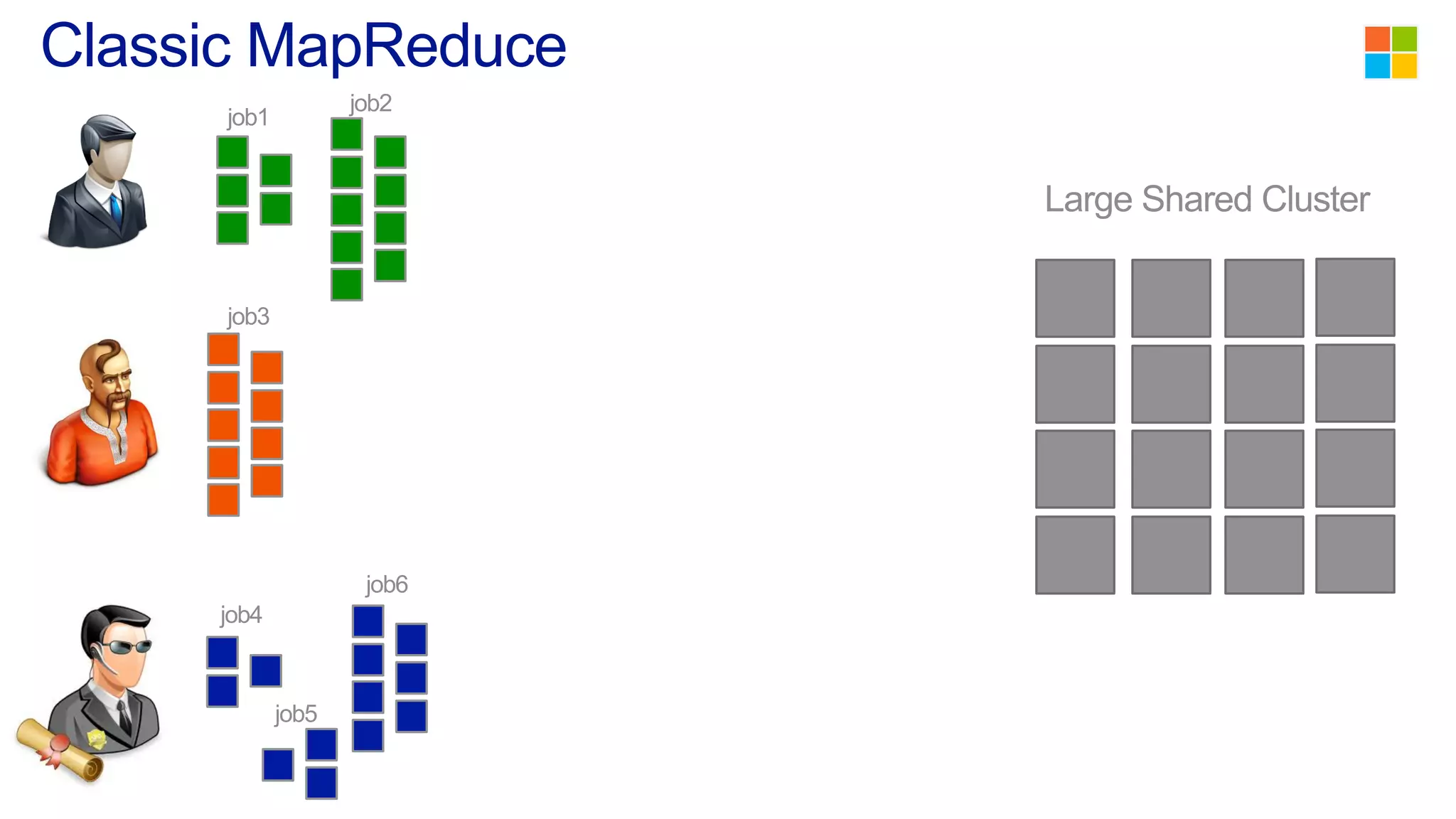

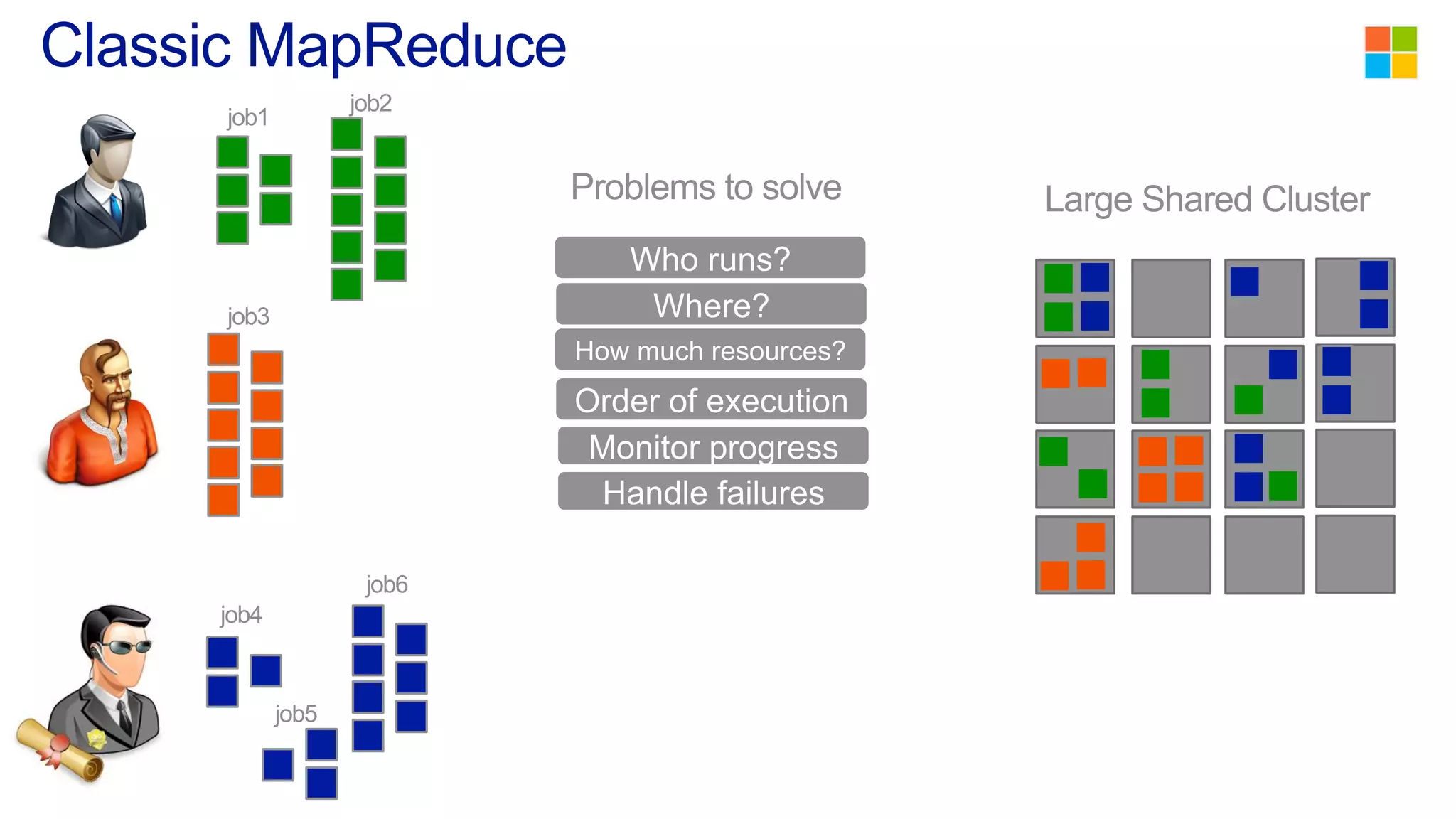

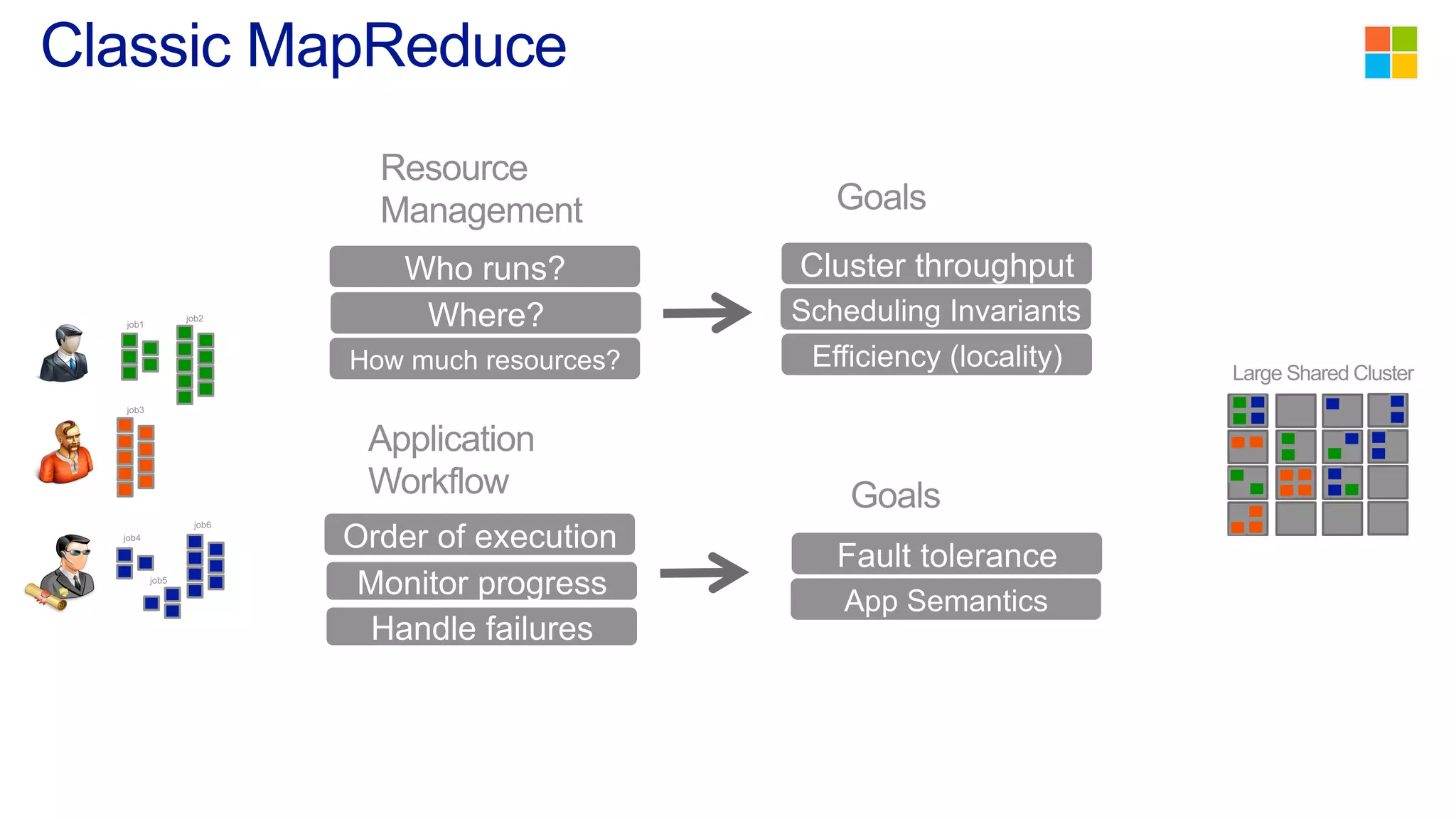

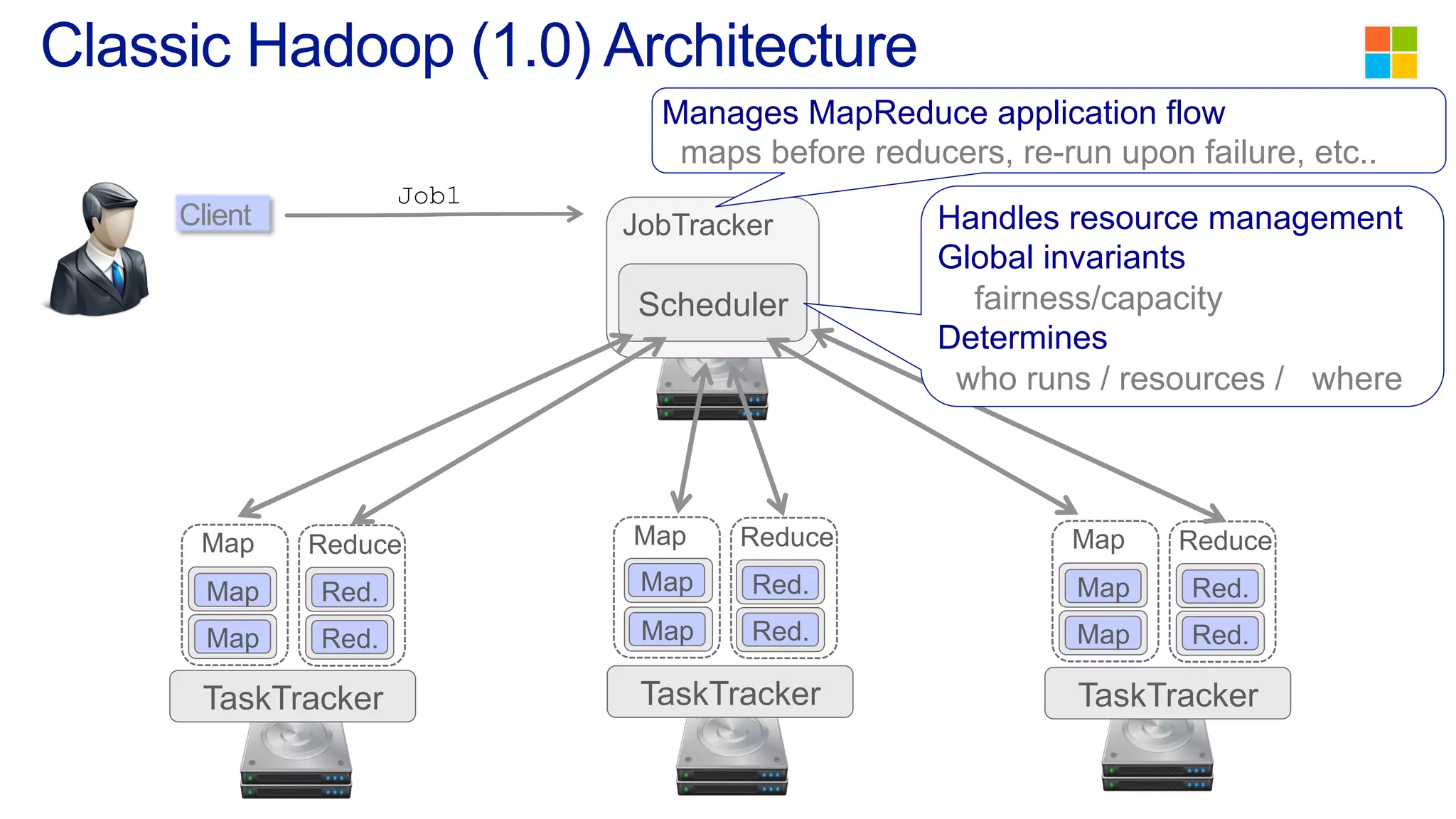

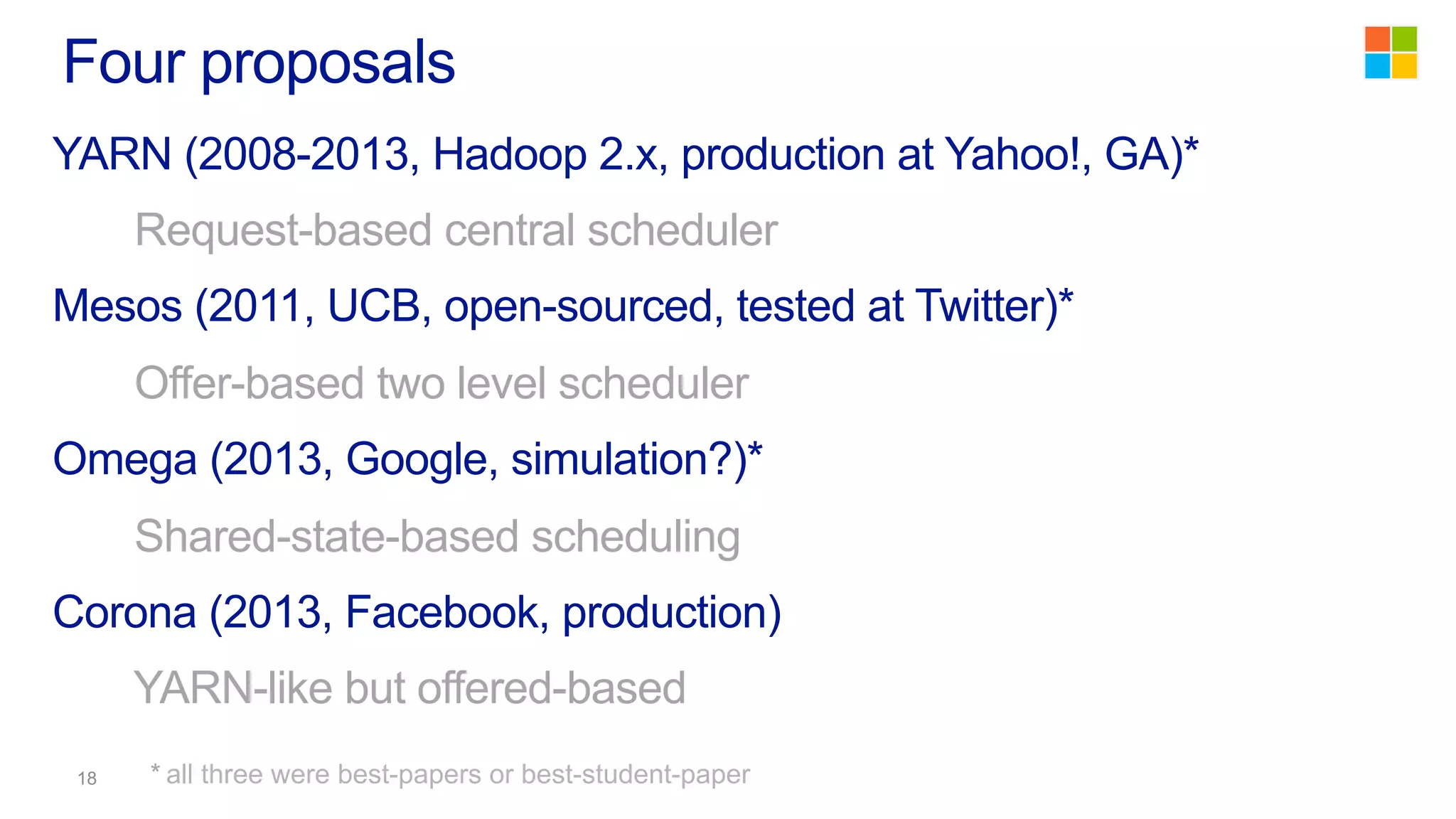

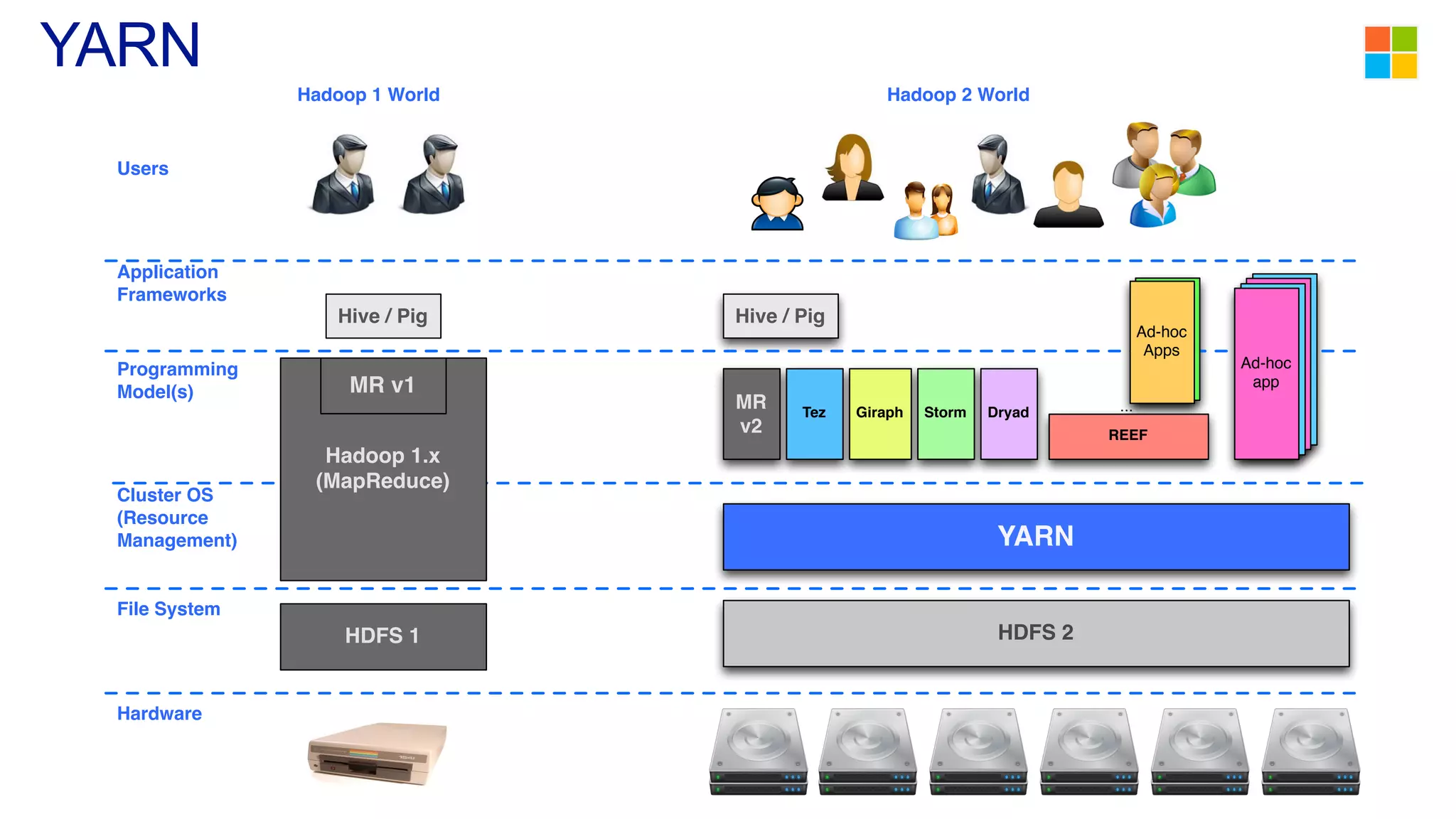

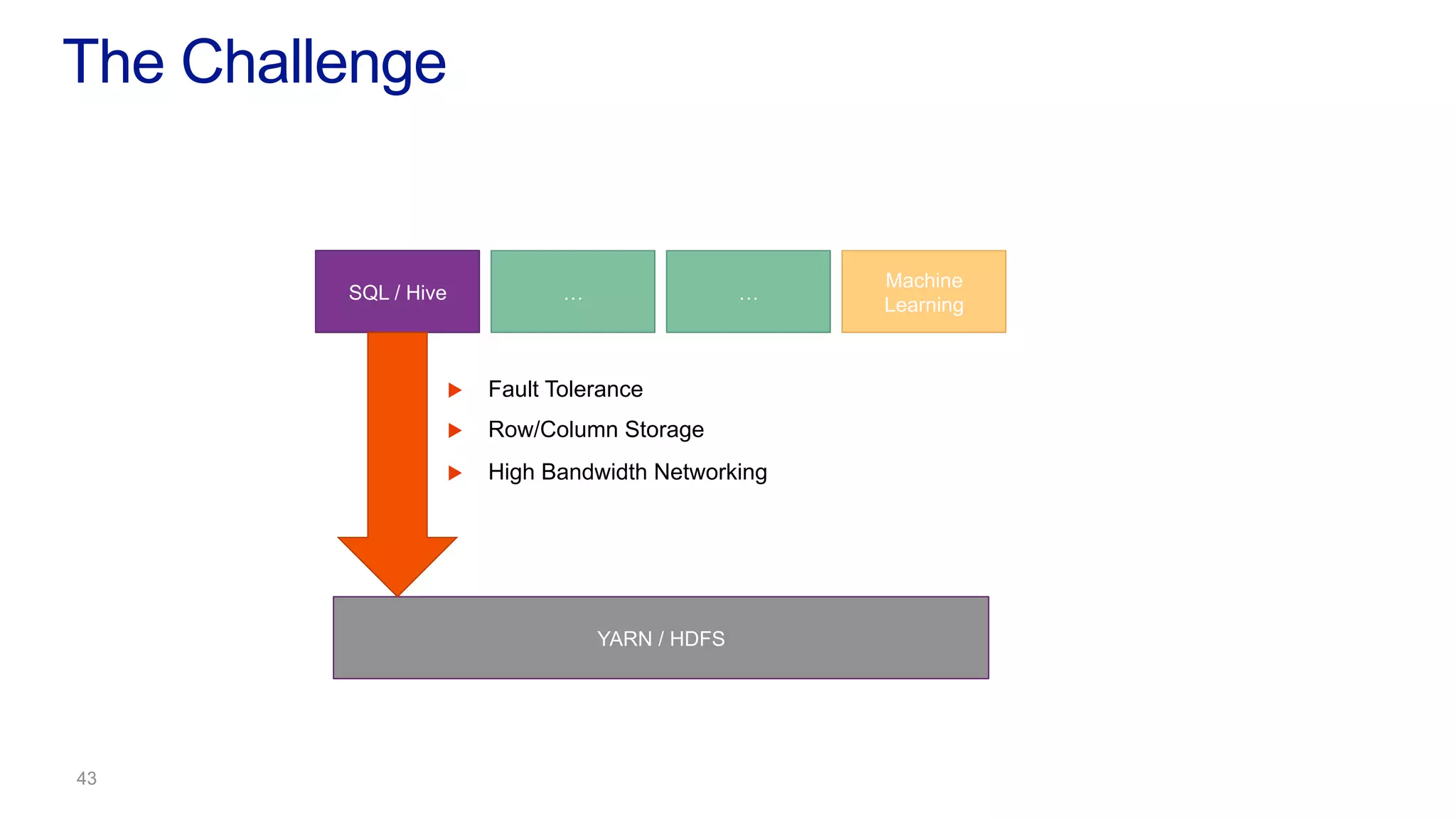

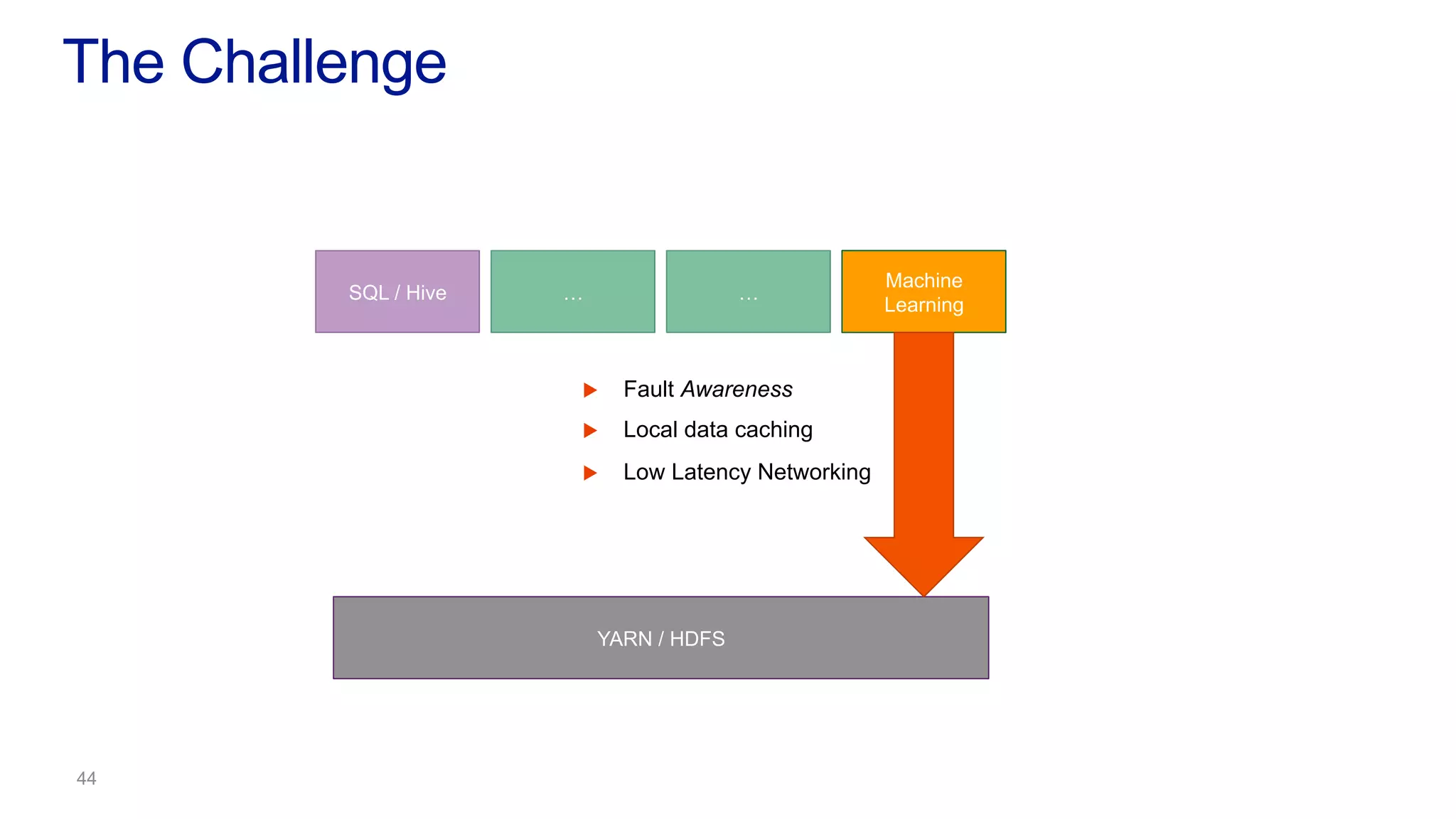

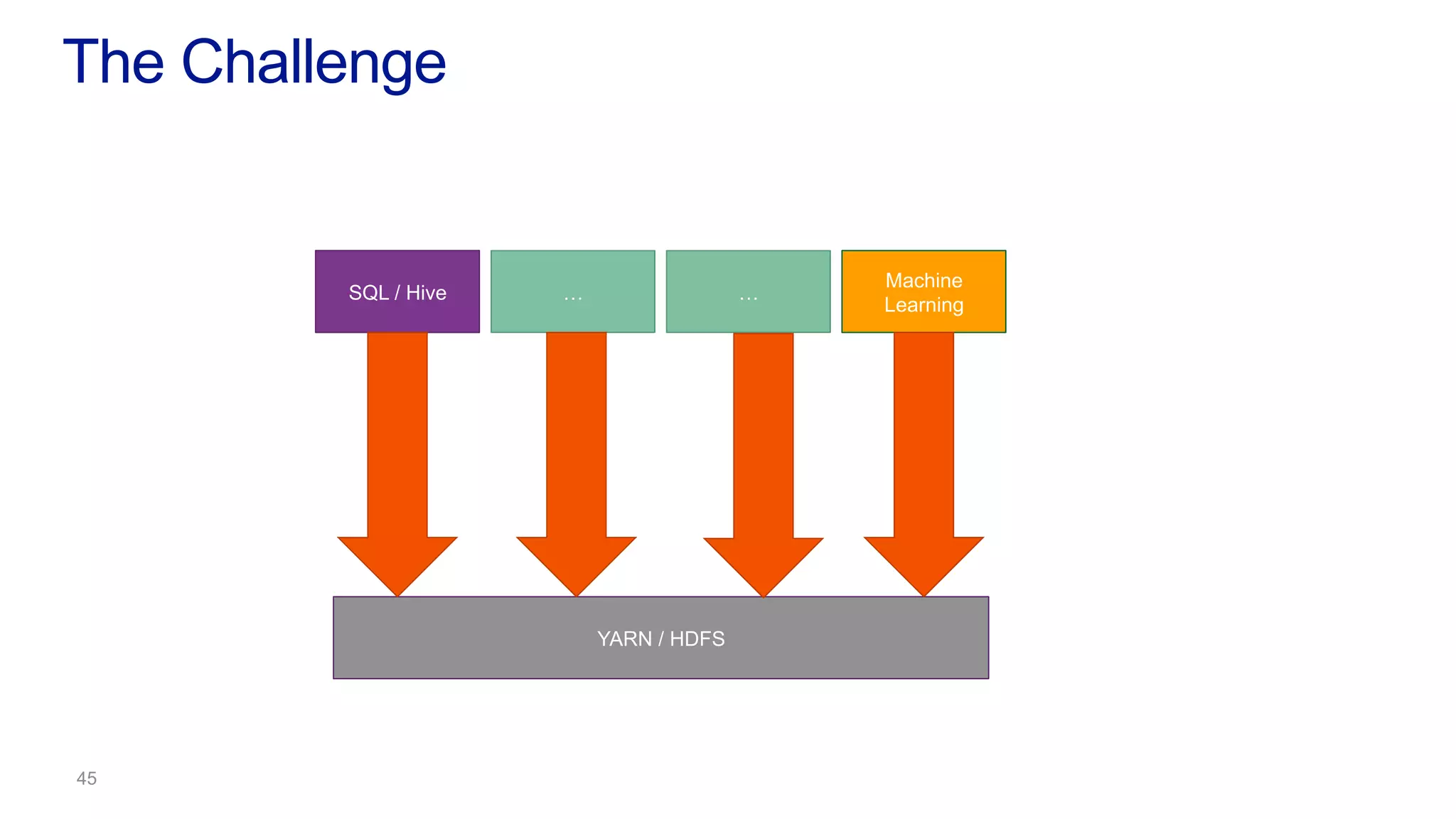

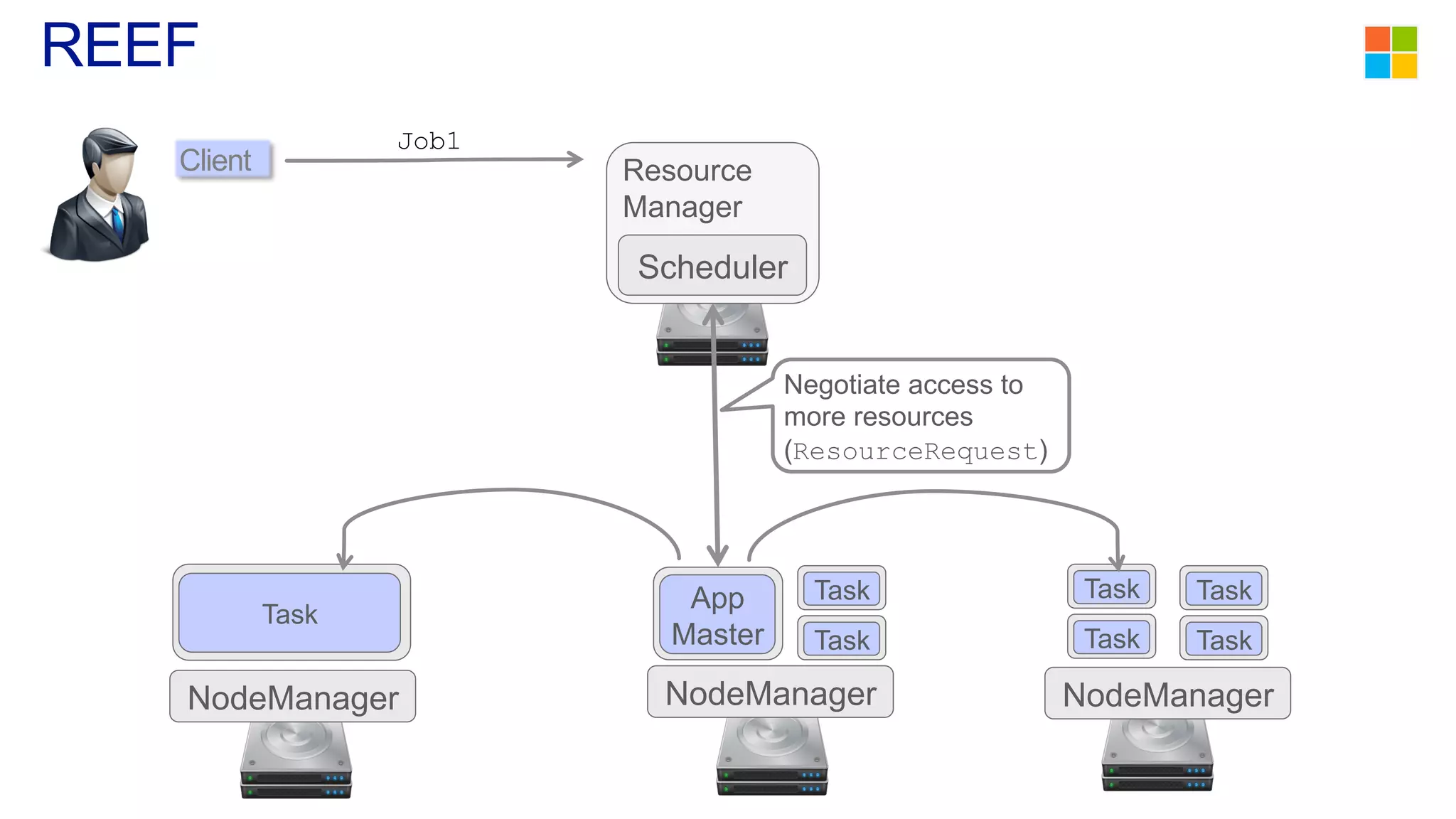

1) Early clusters were single-purpose and used technologies like MapReduce. General purpose cluster OSes like YARN emerged to allow multiple applications on a cluster.

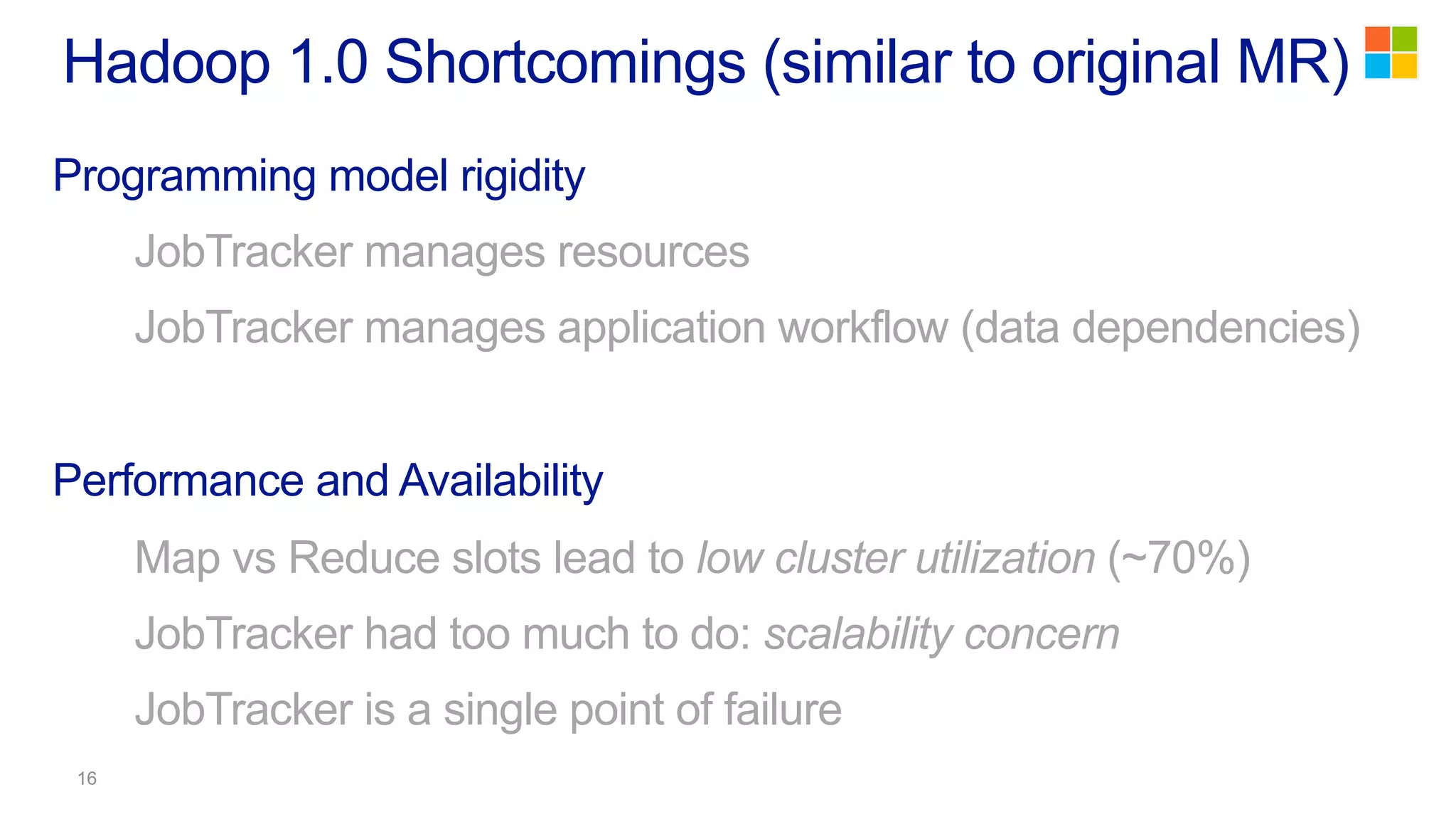

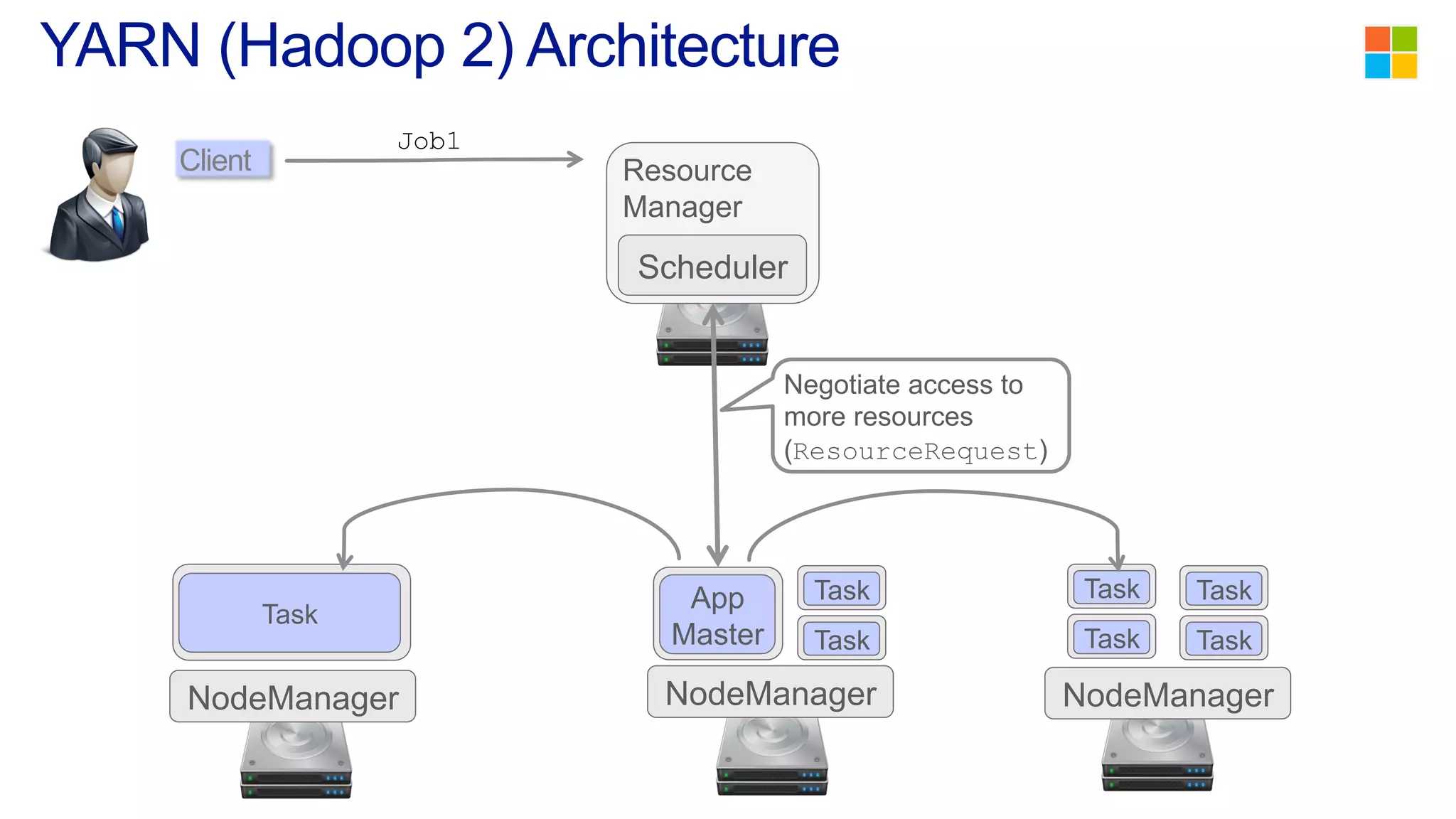

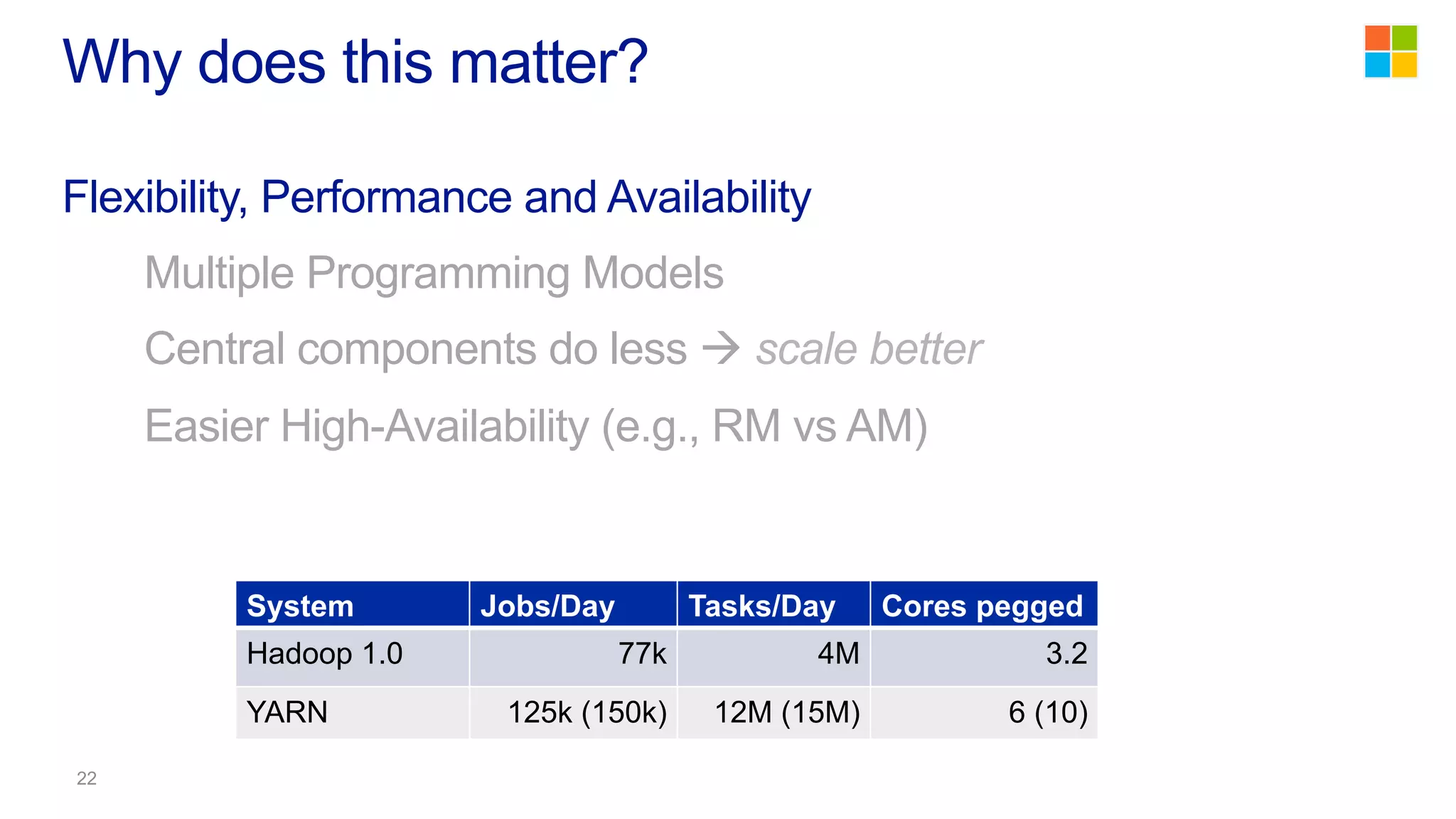

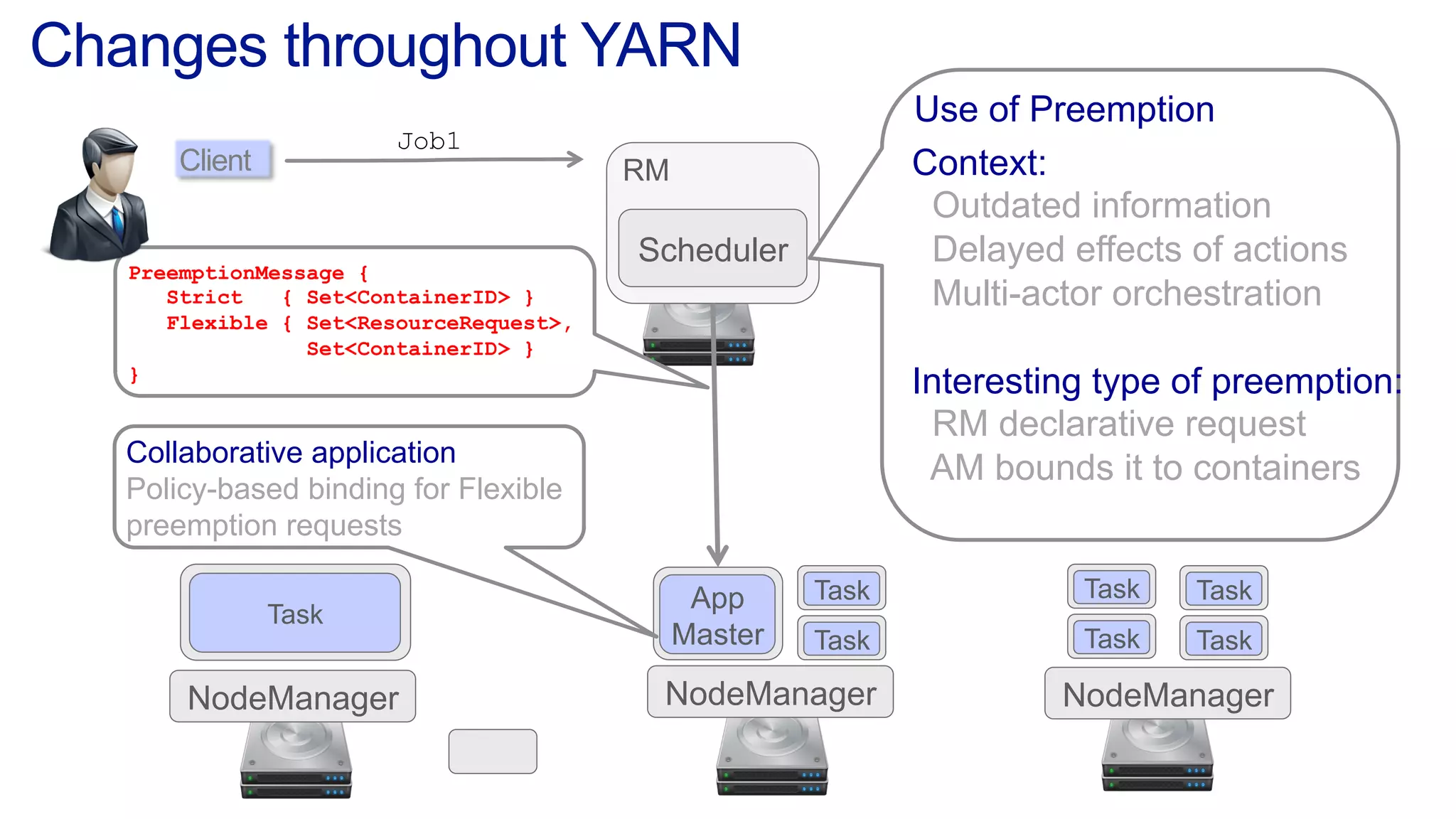

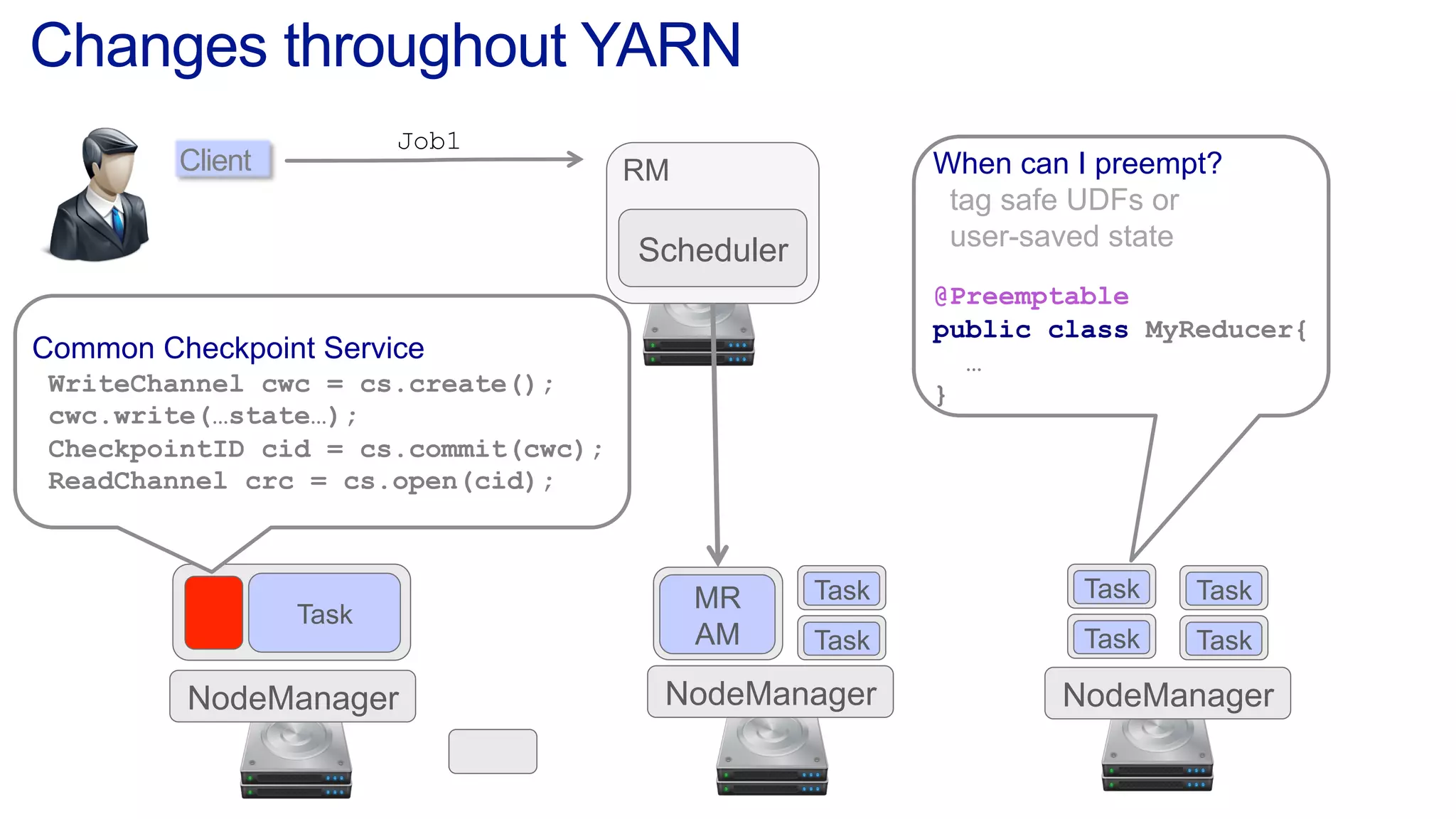

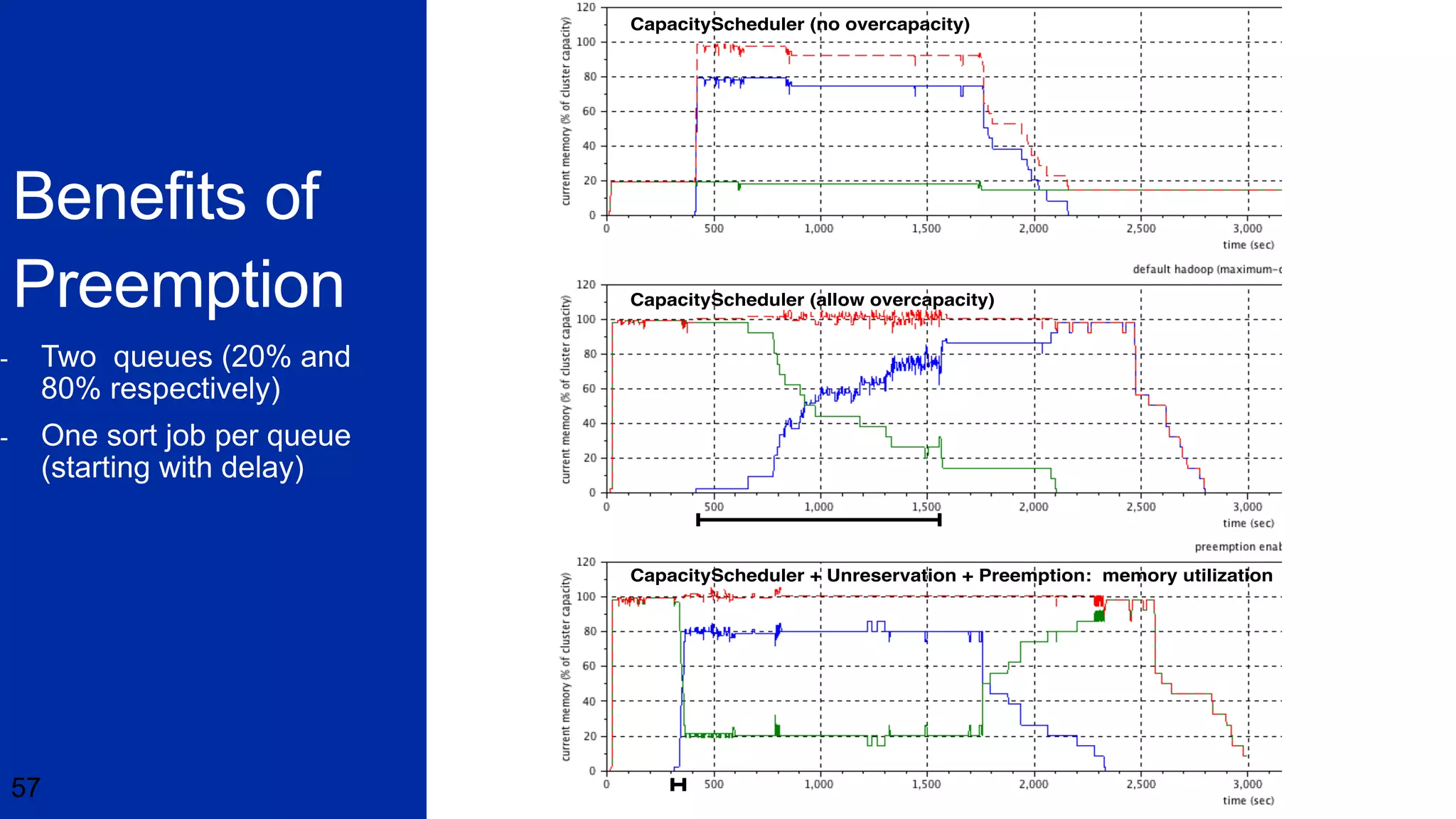

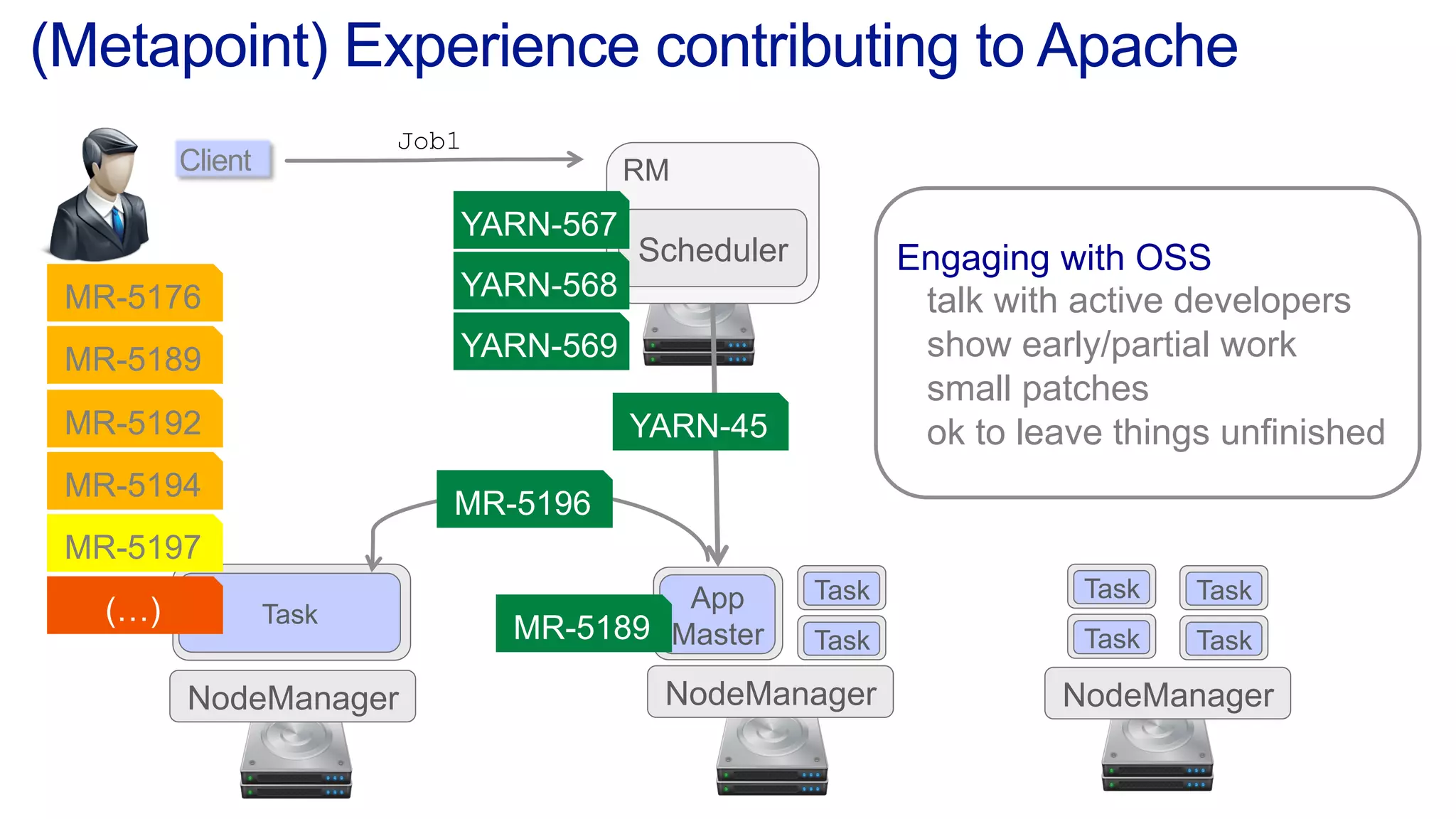

2) YARN improved on Hadoop by decoupling the programming model from resource management, allowing more flexibility and better performance/availability.

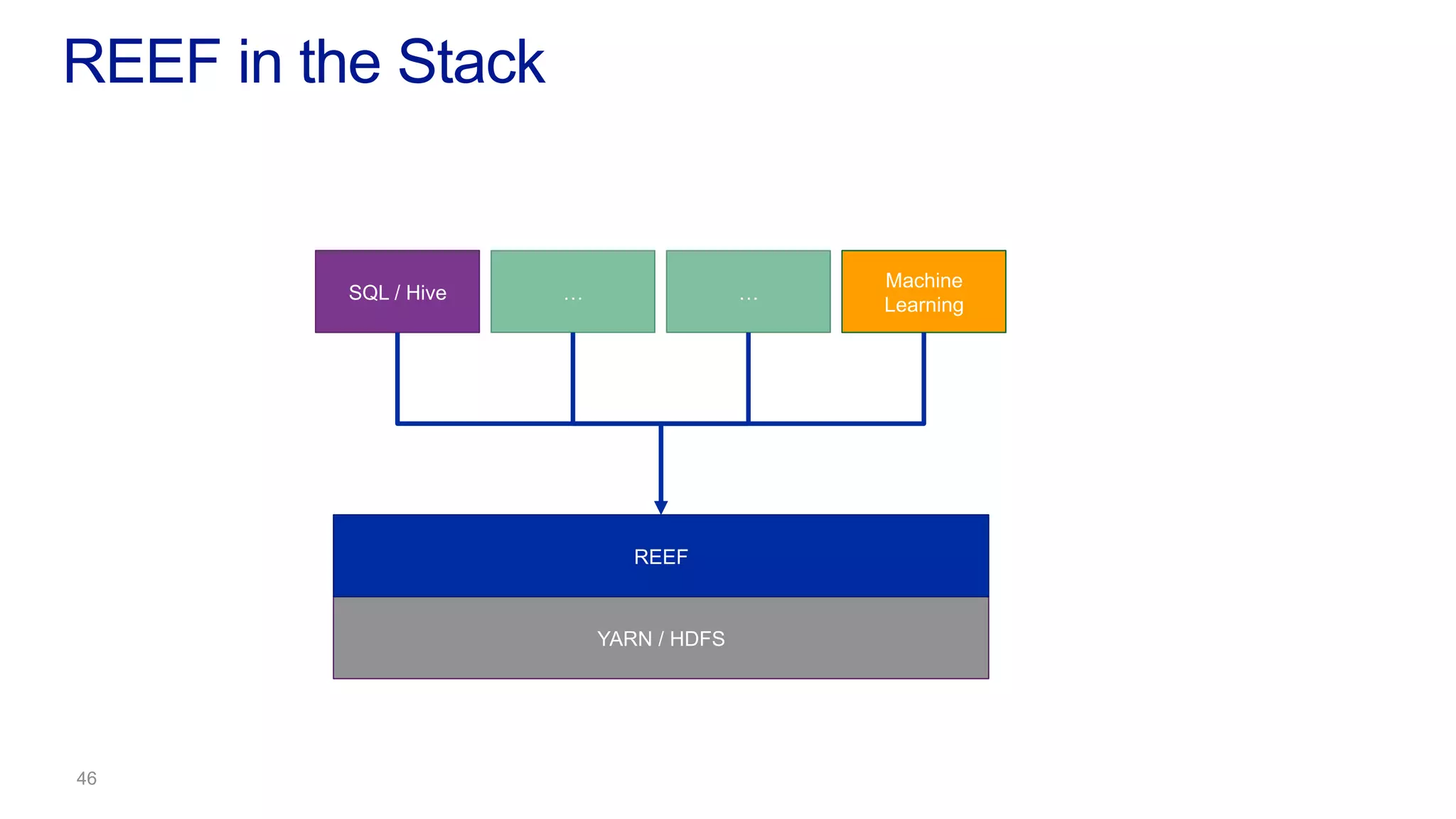

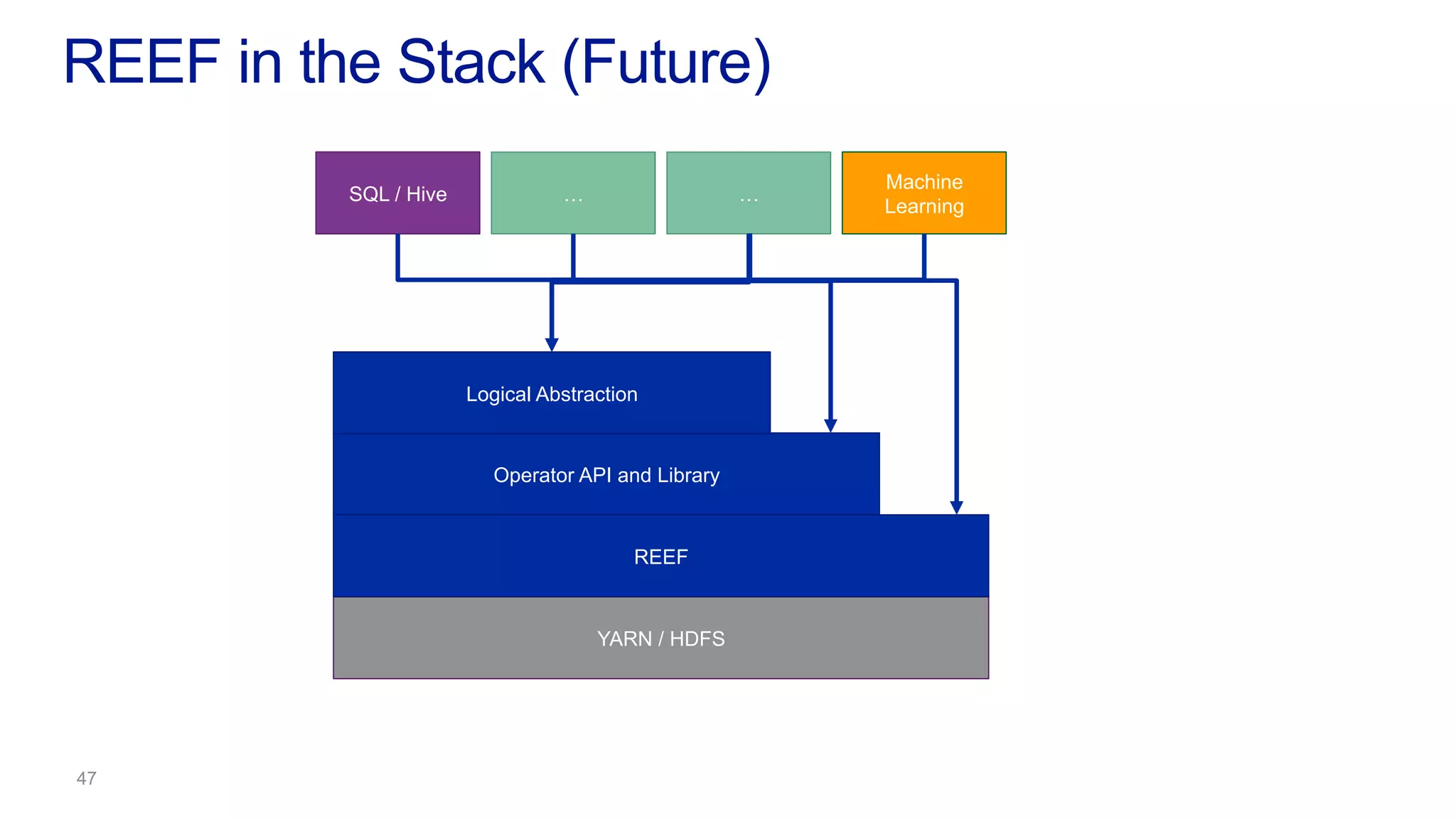

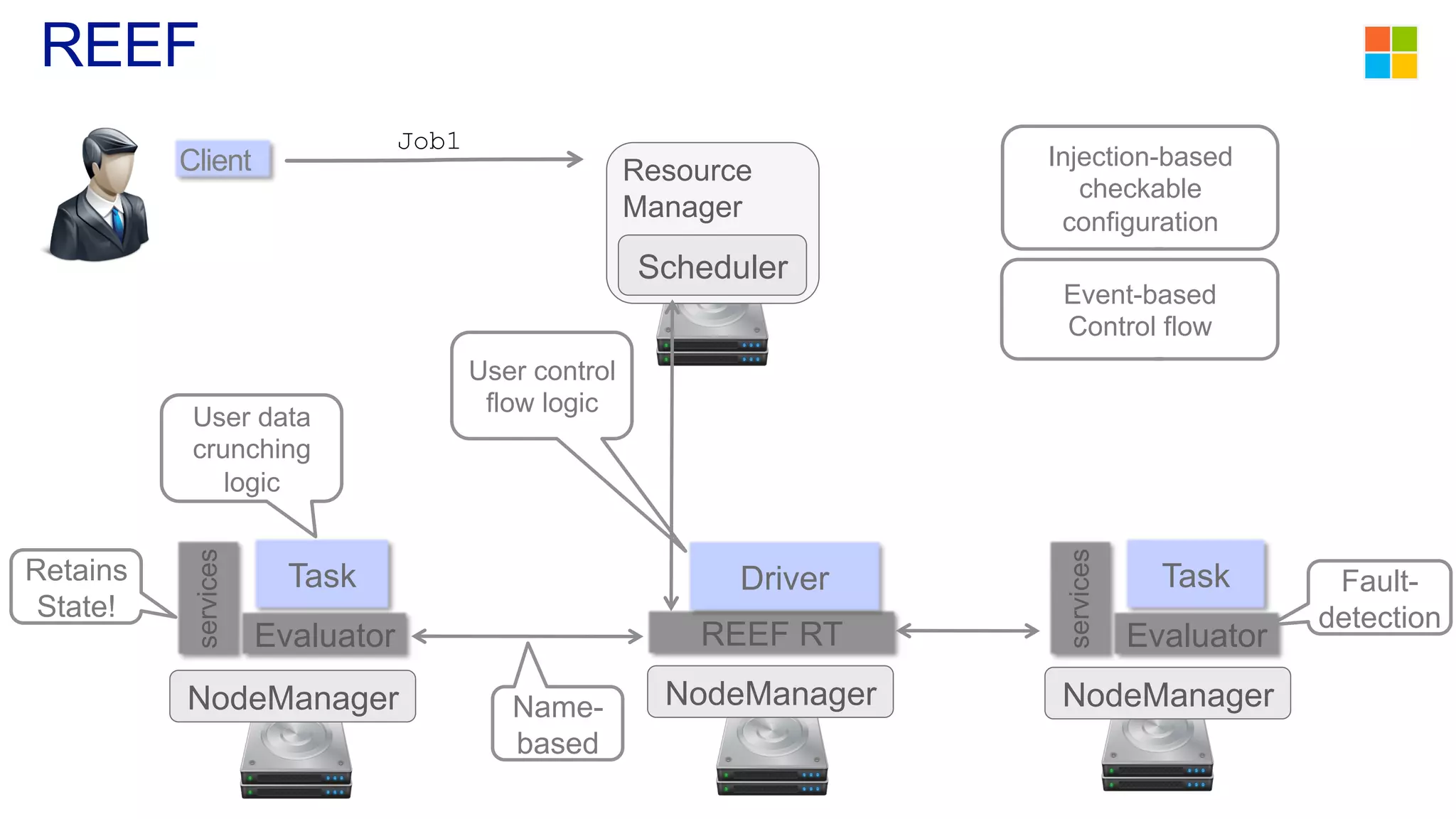

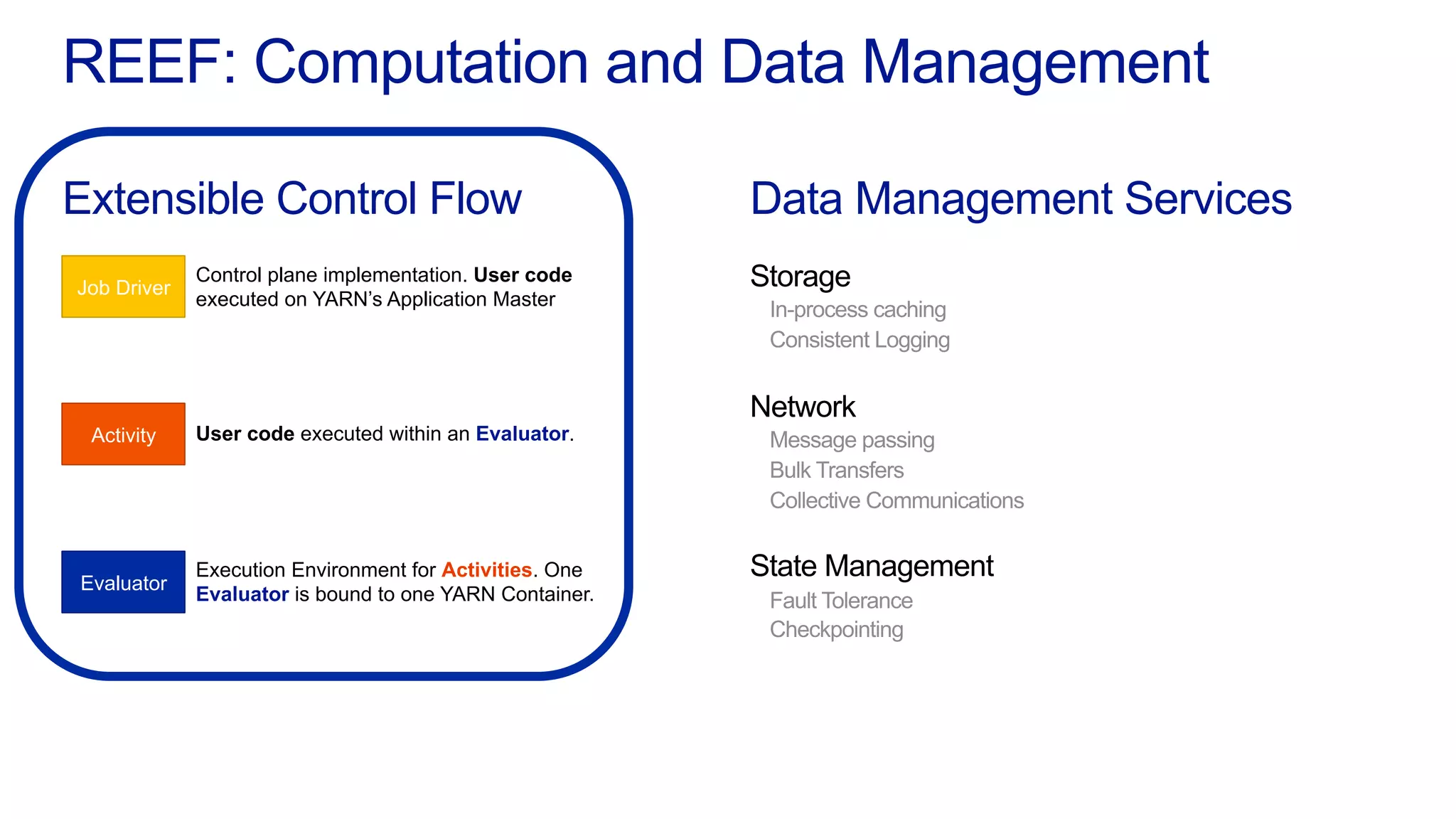

3) REEF aims to further improve frameworks by factoring out common functionalities around communication, configuration, and fault tolerance.