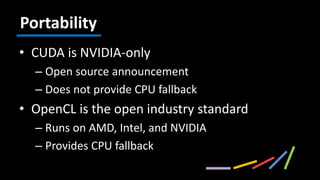

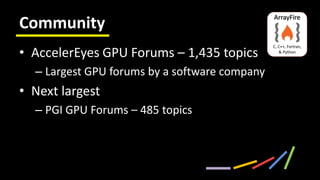

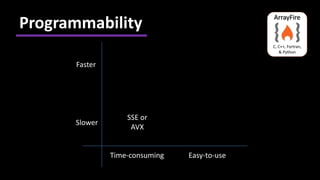

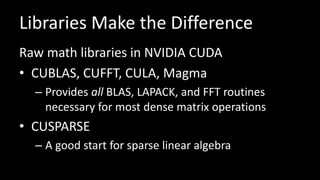

This document summarizes a webinar comparing OpenCL and CUDA for GPU computing. It discusses key factors like programmability, portability, scalability, performance, and community support. Both OpenCL and CUDA can fully utilize hardware but performance depends on details. CUDA only runs on NVIDIA while OpenCL runs on multiple vendors but with less community support. Libraries help improve programmability over low-level kernels. ArrayFire provides a portable interface across CUDA and OpenCL with optimizations for different hardware.