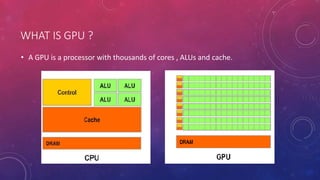

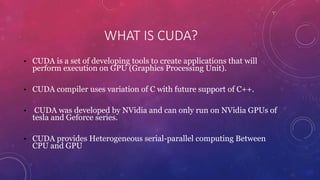

CUDA is NVIDIA's parallel computing platform and programming model for general-purpose processing on GPUs. It lets developers write C/C++ applications that run on the parallel compute engines of NVIDIA GPUs. CUDA applications follow a data-parallel model in which the GPU runs many lightweight threads concurrently, typically with each thread working on a different element of the data. The programming model also exposes a memory hierarchy: registers private to each thread, shared memory visible to the threads of one block, and global memory accessible to all threads. A typical CUDA program copies input data from CPU (host) memory to GPU (device) memory, launches one or more kernels on the GPU, and copies the results back to the CPU.
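The transfer, launch, and copy-back flow can be sketched with a small element-wise vector addition. This is a minimal illustration rather than a reference implementation: the names `add_kernel`, `add_on_gpu`, and `check` are hypothetical, the block size of 256 threads is an arbitrary choice, and the sketch assumes a CUDA-capable GPU and compilation with `nvcc`.

```cuda
#include <cuda_runtime.h>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper: turn a failed CUDA runtime call into an exception.
static void check(cudaError_t err, const char* what) {
    if (err != cudaSuccess) {
        throw std::runtime_error(std::string(what) + ": " + cudaGetErrorString(err));
    }
}

// Kernel: each lightweight thread handles one element (data-parallel model).
// a, b, and out point to global memory; the index i is a per-thread local
// variable, which the compiler normally keeps in a register.
__global__ void add_kernel(const float* a, const float* b, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {  // guard: the last block may have more threads than elements
        out[i] = a[i] + b[i];
    }
}

// Hypothetical host wrapper: copy inputs to the GPU, launch the kernel,
// and copy the result back. For brevity, device buffers are not released
// if an error is thrown part-way through.
std::vector<float> add_on_gpu(const std::vector<float>& a,
                              const std::vector<float>& b) {
    if (a.size() != b.size()) {
        throw std::invalid_argument("input sizes differ");
    }
    const int n = static_cast<int>(a.size());
    std::vector<float> out(a.size());
    if (n == 0) {
        return out;
    }
    const size_t bytes = a.size() * sizeof(float);

    // 1. Allocate global memory on the device.
    float* d_a = nullptr;
    float* d_b = nullptr;
    float* d_out = nullptr;
    check(cudaMalloc(&d_a, bytes), "cudaMalloc d_a");
    check(cudaMalloc(&d_b, bytes), "cudaMalloc d_b");
    check(cudaMalloc(&d_out, bytes), "cudaMalloc d_out");

    // 2. Transfer the inputs from CPU (host) memory to GPU (device) memory.
    check(cudaMemcpy(d_a, a.data(), bytes, cudaMemcpyHostToDevice), "copy a");
    check(cudaMemcpy(d_b, b.data(), bytes, cudaMemcpyHostToDevice), "copy b");

    // 3. Launch enough blocks of 256 threads to cover all n elements.
    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    add_kernel<<<blocks, threads>>>(d_a, d_b, d_out, n);
    check(cudaGetLastError(), "kernel launch");

    // 4. Copy the result back to the host. This blocking copy also waits
    //    for the kernel to finish.
    check(cudaMemcpy(out.data(), d_out, bytes, cudaMemcpyDeviceToHost), "copy out");

    // 5. Release device memory.
    cudaFree(d_a);
    cudaFree(d_b);
    cudaFree(d_out);
    return out;
}
```

Calling `add_on_gpu(a, b)` from ordinary host code returns the element-wise sum as a `std::vector<float>`. Shared memory is not used here because each thread touches its own elements only once; it becomes useful when the threads of a block reuse the same data.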