This document describes a hybrid modeling approach that combines decision trees and logistic regression to build a credit scoring model for retail loans, and presents a case study in which the approach was tested on real loan data. Key points:

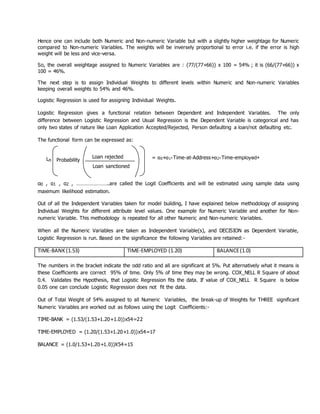

- A decision tree was first used to identify important borrower characteristics and assign initial weights. Logistic regression was then used to compute odds ratios and assign refined weights within each characteristic.

- This iterative process determined weights for both numeric factors, such as time at bank, and non-numeric factors, such as occupation. Numeric variables were binned automatically using additional decision trees.

- When tested on loan application data, the hybrid model achieved a classification accuracy of around 80%, higher than any single model achieved on its own. This approach provides an
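
The two steps summarized above (tree-based binning of a numeric characteristic, then odds ratios per bin) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the toy data, the names `best_split` and `odds_ratios`, the single-split "stump" standing in for a full decision tree, the 0.5 smoothing constant, and the use of log odds ratios as weights. It is not the scheme from the case study. The odds ratios are computed directly from counts rather than by fitting a logistic regression; for a single categorical predictor, the fitted logistic regression coefficients equal the logs of the unsmoothed odds ratios relative to the reference category, so the two agree up to the smoothing.

```python
from collections import Counter
from math import log


def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 2 * p * (1 - p)


def best_split(values, labels):
    """One-level decision tree: the threshold that minimises weighted Gini."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best_threshold, best_score = None, float("inf")
    for i in range(1, n):
        if pairs[i][0] == pairs[i - 1][0]:
            continue  # no threshold fits between equal values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best_score:
            best_threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best_score = score
    return best_threshold


def odds_ratios(bins, labels, reference):
    """Odds of default in each bin, relative to the reference bin."""
    bad, good = Counter(), Counter()
    for b, y in zip(bins, labels):
        if y:
            bad[b] += 1
        else:
            good[b] += 1
    # Adding 0.5 to each count avoids division by zero in empty cells
    # (an assumption of this sketch, not something the source specifies).
    odds = {b: (bad[b] + 0.5) / (good[b] + 0.5) for b in set(bins)}
    return {b: odds[b] / odds[reference] for b in odds}


# Hypothetical toy data: years at bank and a default flag (1 = defaulted).
years_at_bank = [0.5, 1, 1.5, 2, 3, 4, 6, 8, 10, 12]
defaulted = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]

# Step 1: let the stump choose the bin boundary for the numeric variable.
threshold = best_split(years_at_bank, defaulted)
bins = ["short" if v <= threshold else "long" for v in years_at_bank]

# Step 2: odds ratios per bin, with the longer-tenure bin as reference.
ratios = odds_ratios(bins, defaulted, reference="long")

# Illustrative weights: log odds ratios, so the reference bin scores 0.
weights = {b: log(r) for b, r in ratios.items()}
print(threshold, ratios, weights)
```

In a fuller version, the same binning step would be repeated for every numeric characteristic, and non-numeric characteristics such as occupation would enter `odds_ratios` directly with their categories as bins.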