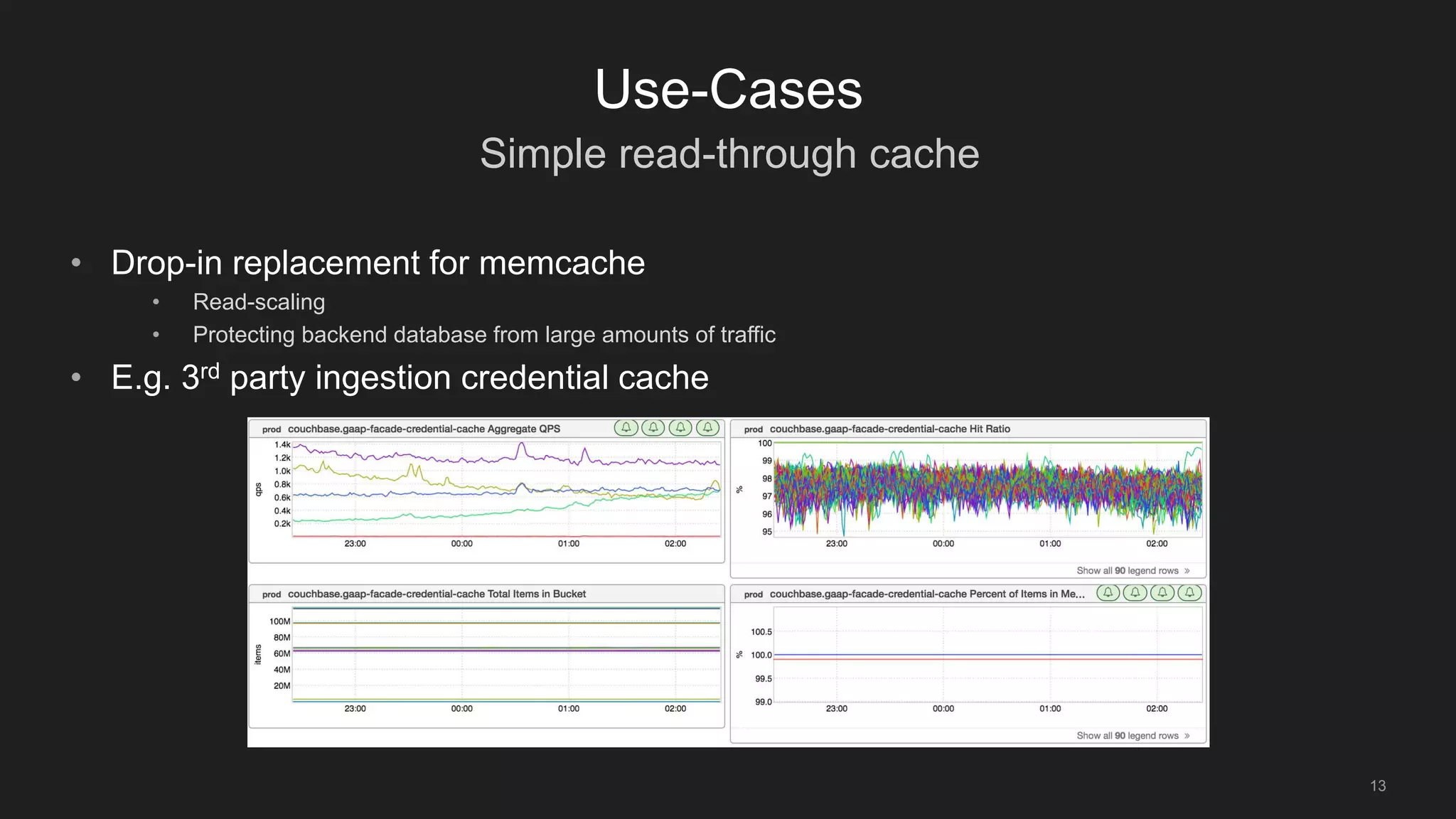

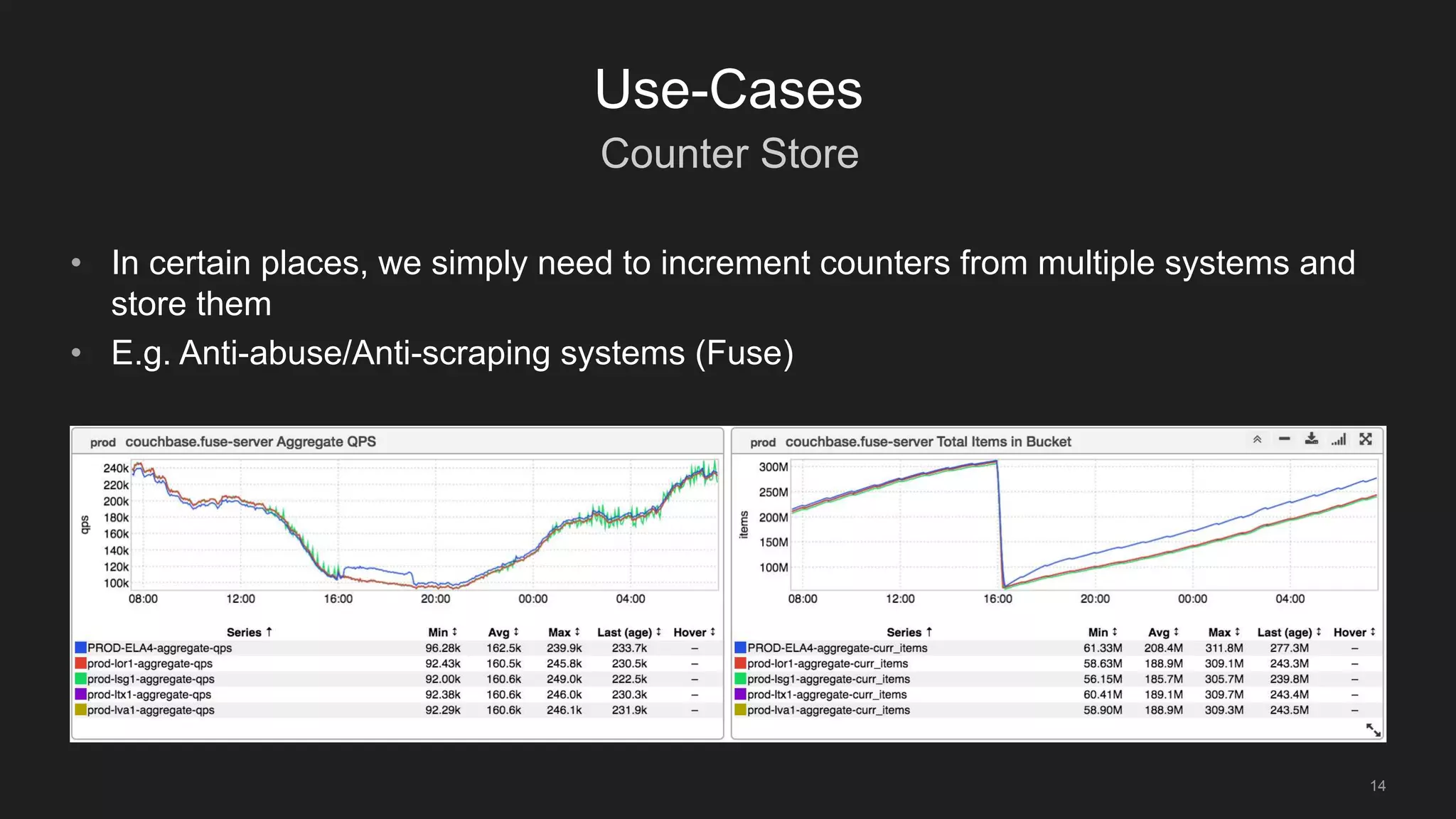

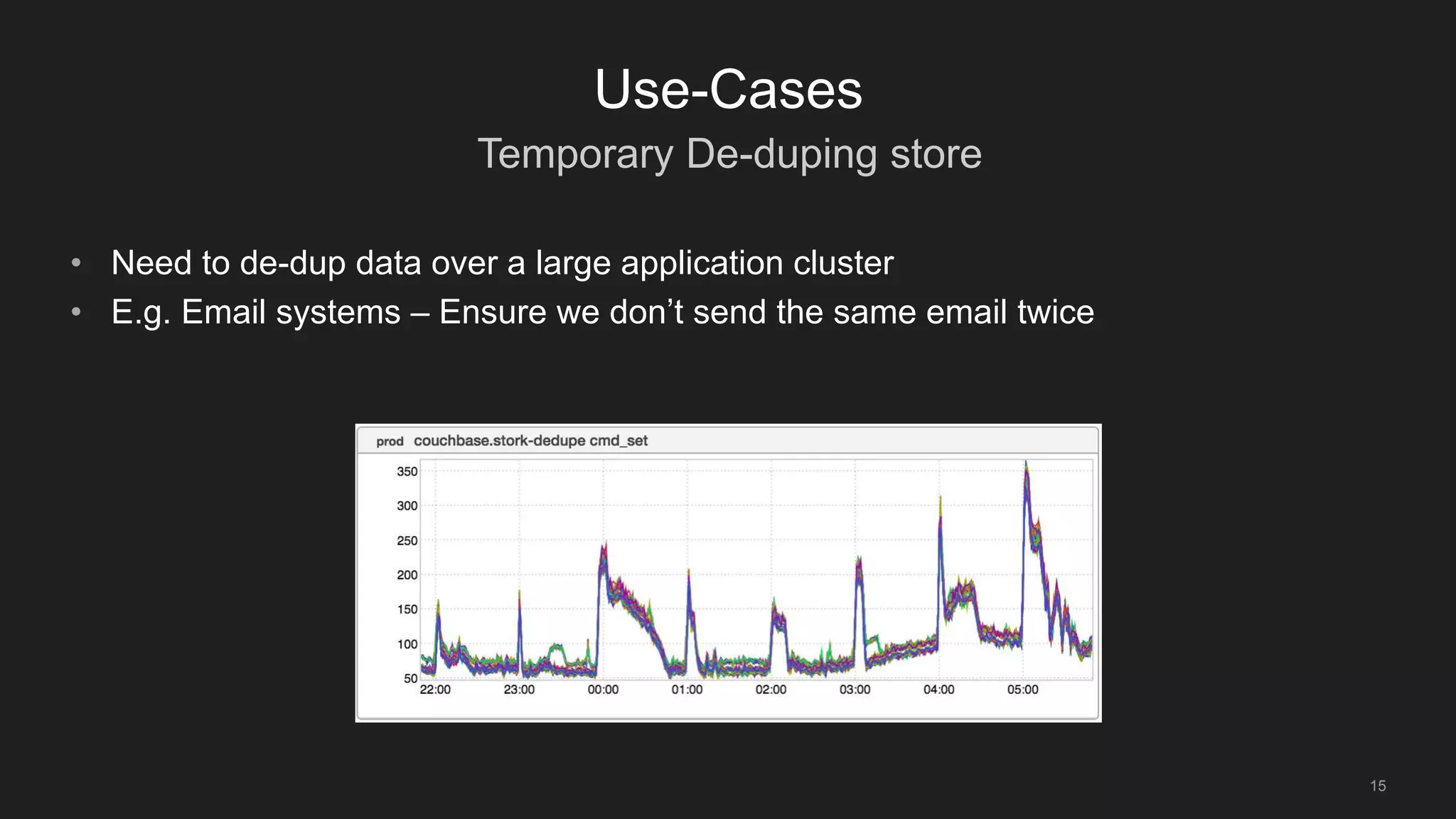

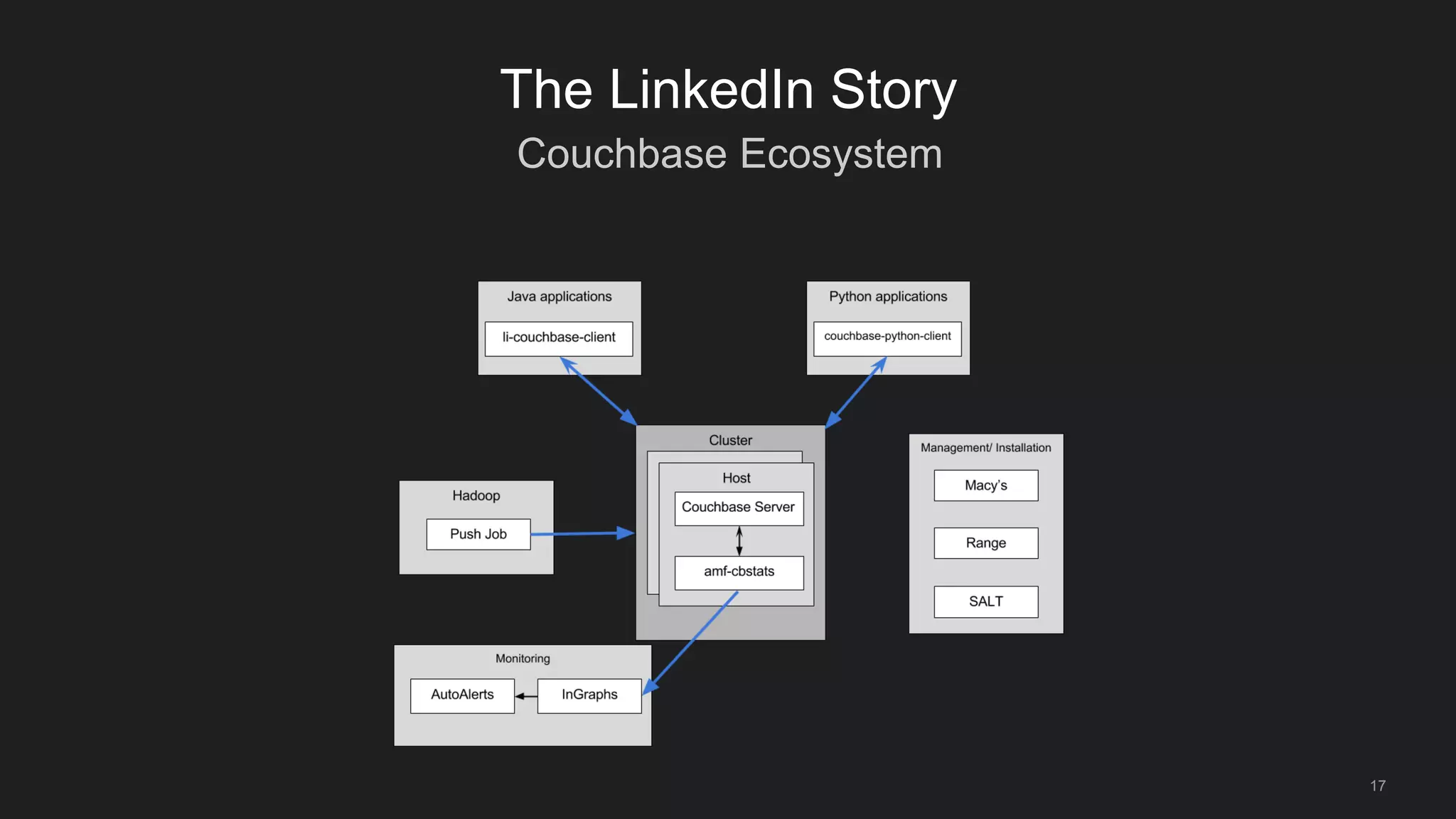

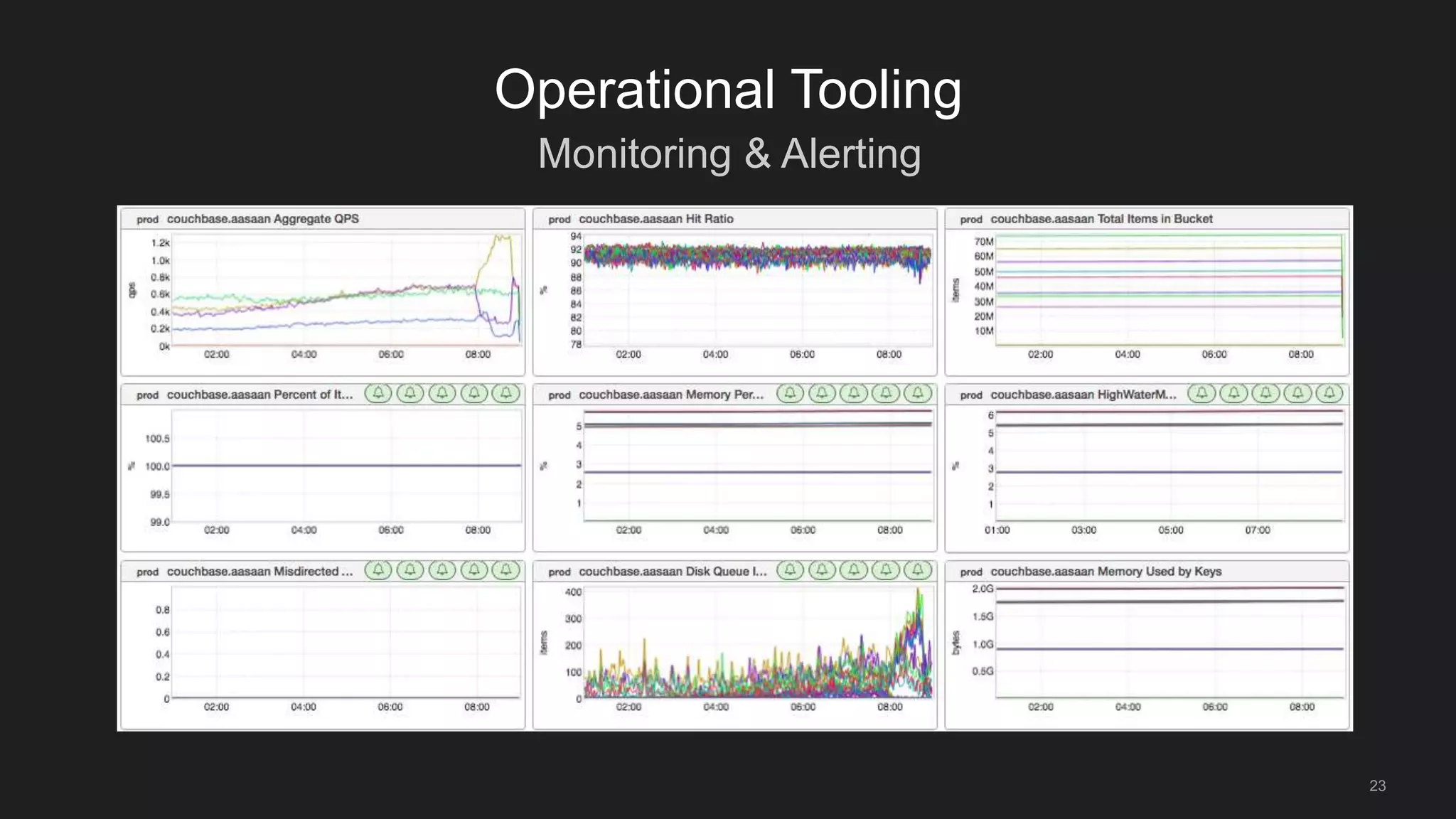

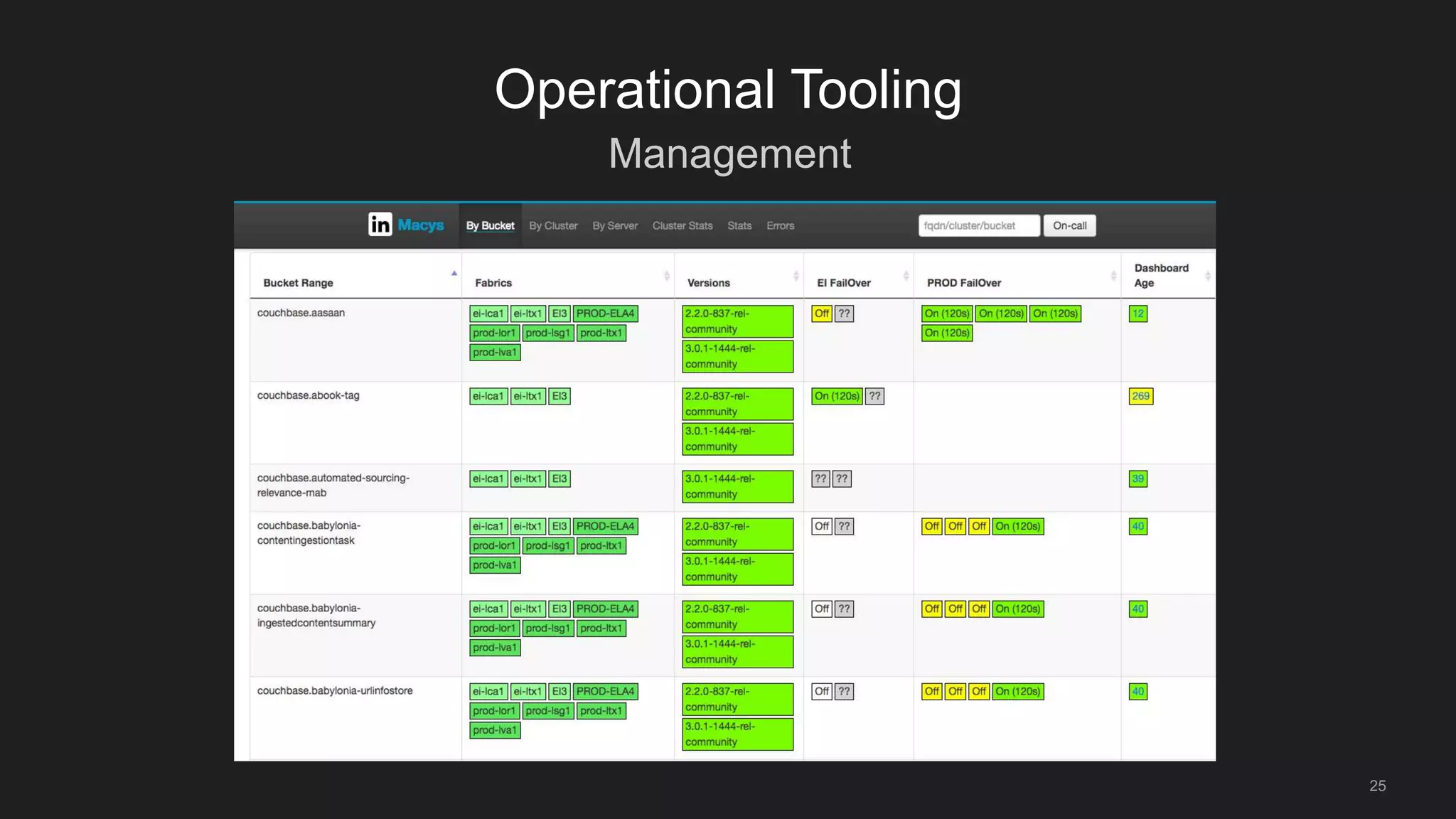

This document discusses LinkedIn's use of Couchbase as an in-memory data store. It describes how LinkedIn has come to rely heavily on Couchbase, which now runs in its production, staging, and corporate environments. It outlines key use cases that Couchbase supports at LinkedIn: serving as a read-through cache, storing counters, and acting as the source-of-truth data store for some internal tools. Finally, it covers the operational tooling and processes LinkedIn has developed to support Couchbase at scale.