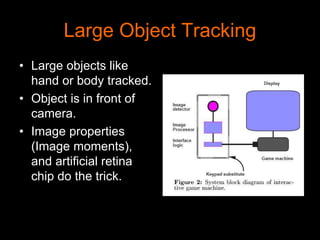

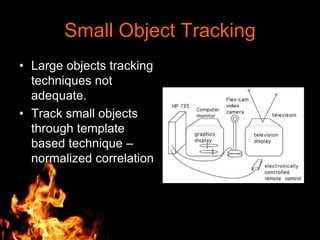

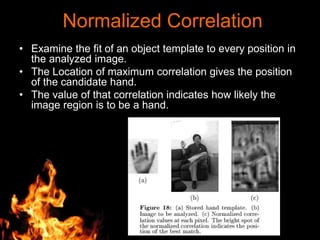

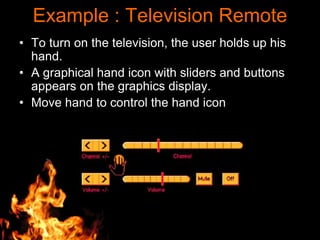

This document discusses computer vision and its uses in interactive computer graphics. Computer vision involves acquiring, processing, analyzing, and understanding real-world images to produce information. Algorithms such as tracking, shape recognition, and motion analysis let a computer interpret a user's movements and gestures. They enable interactive applications in which the computer tracks and responds to both large and small objects in real time, opening new possibilities for human-computer interaction. The examples provided show how computer vision can track a user's hand gestures to control a television interface.
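
As a rough illustration of the motion-analysis idea, the sketch below uses simple frame differencing to locate a moving region and turn its horizontal position into a control value. It is not the method used in the slides: the function names `motion_centroid` and `cursor_fraction`, the threshold of 30, and the list-of-lists frame format are all assumptions chosen to keep the example self-contained.

```python
from typing import List, Optional, Tuple

# Assumed frame format: a grayscale image as rows of 0-255 intensities.
Frame = List[List[int]]


def motion_centroid(
    prev: Frame, curr: Frame, threshold: int = 30
) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of pixels whose intensity changed
    by more than `threshold` between two frames, or None if nothing moved."""
    row_sum = col_sum = count = 0
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (p, q) in enumerate(zip(prev_row, curr_row)):
            if abs(q - p) > threshold:
                row_sum += r
                col_sum += c
                count += 1
    if count == 0:
        return None
    return row_sum / count, col_sum / count


def cursor_fraction(centroid: Tuple[float, float], width: int) -> float:
    """Map the centroid's column to a 0..1 horizontal position, which a
    hypothetical on-screen control (e.g. a slider) could consume."""
    _, col = centroid
    return col / (width - 1) if width > 1 else 0.0


if __name__ == "__main__":
    # Empty background frame, then a frame with a bright 2x2 "hand" blob.
    background = [[0] * 6 for _ in range(4)]
    with_hand = [row[:] for row in background]
    for r in (1, 2):
        for c in (4, 5):
            with_hand[r][c] = 200

    centroid = motion_centroid(background, with_hand)
    if centroid is not None:
        print(centroid, cursor_fraction(centroid, width=6))
```

Differencing against a fixed background, as here, marks only the object's current position. Differencing two consecutive frames would mark both the old and new positions, so a real tracker would need additional logic (and noise handling) beyond this sketch.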
