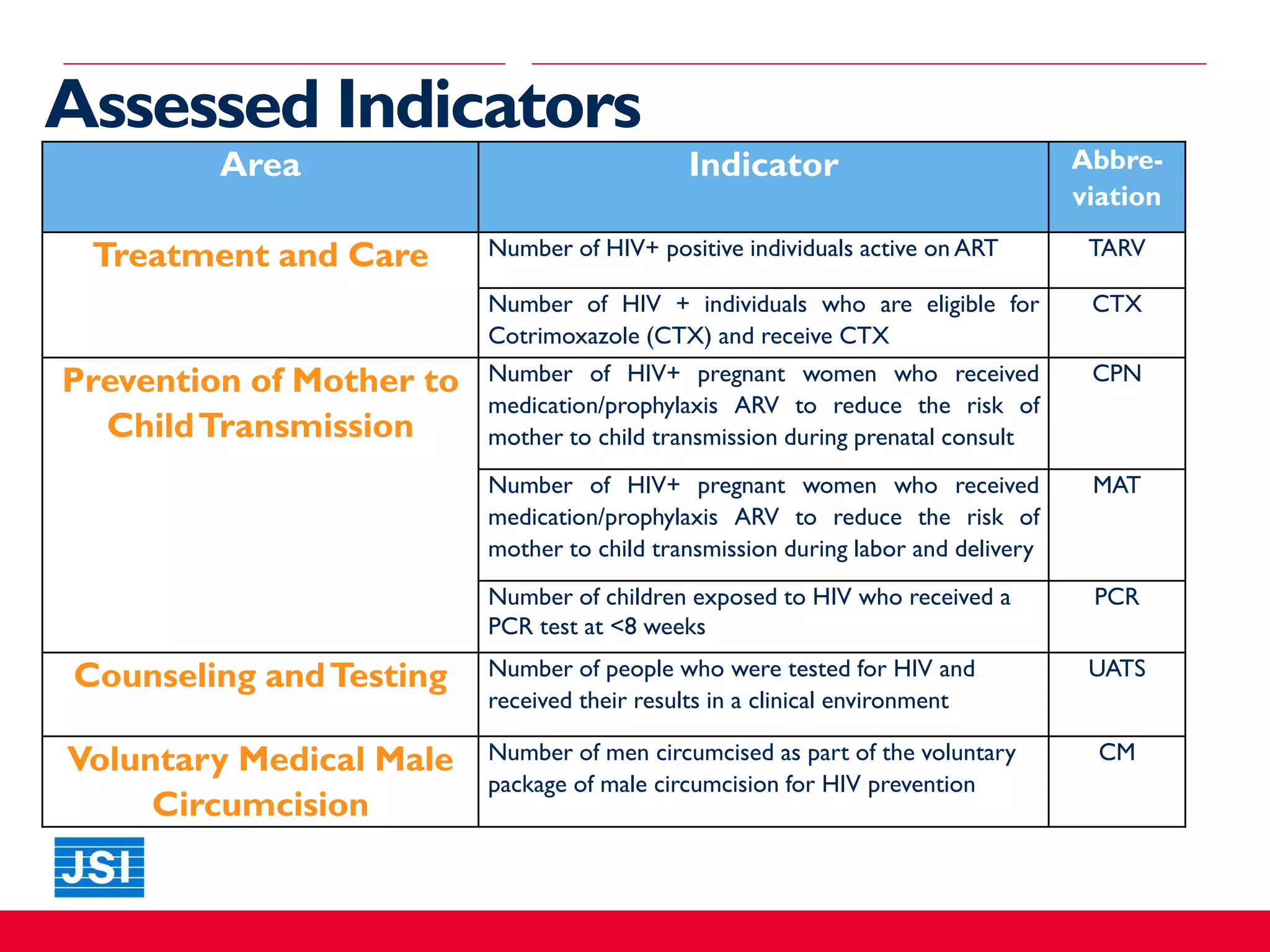

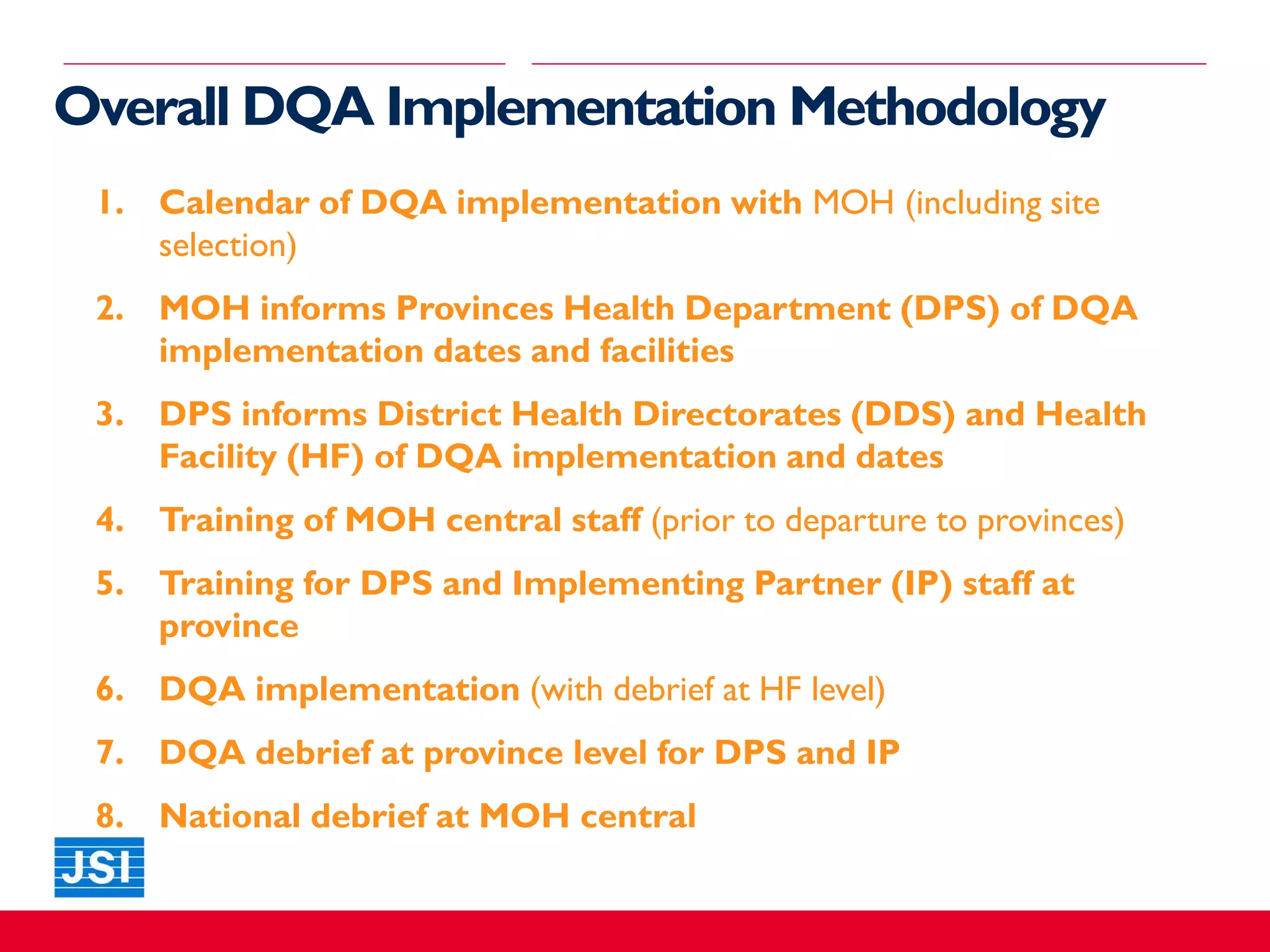

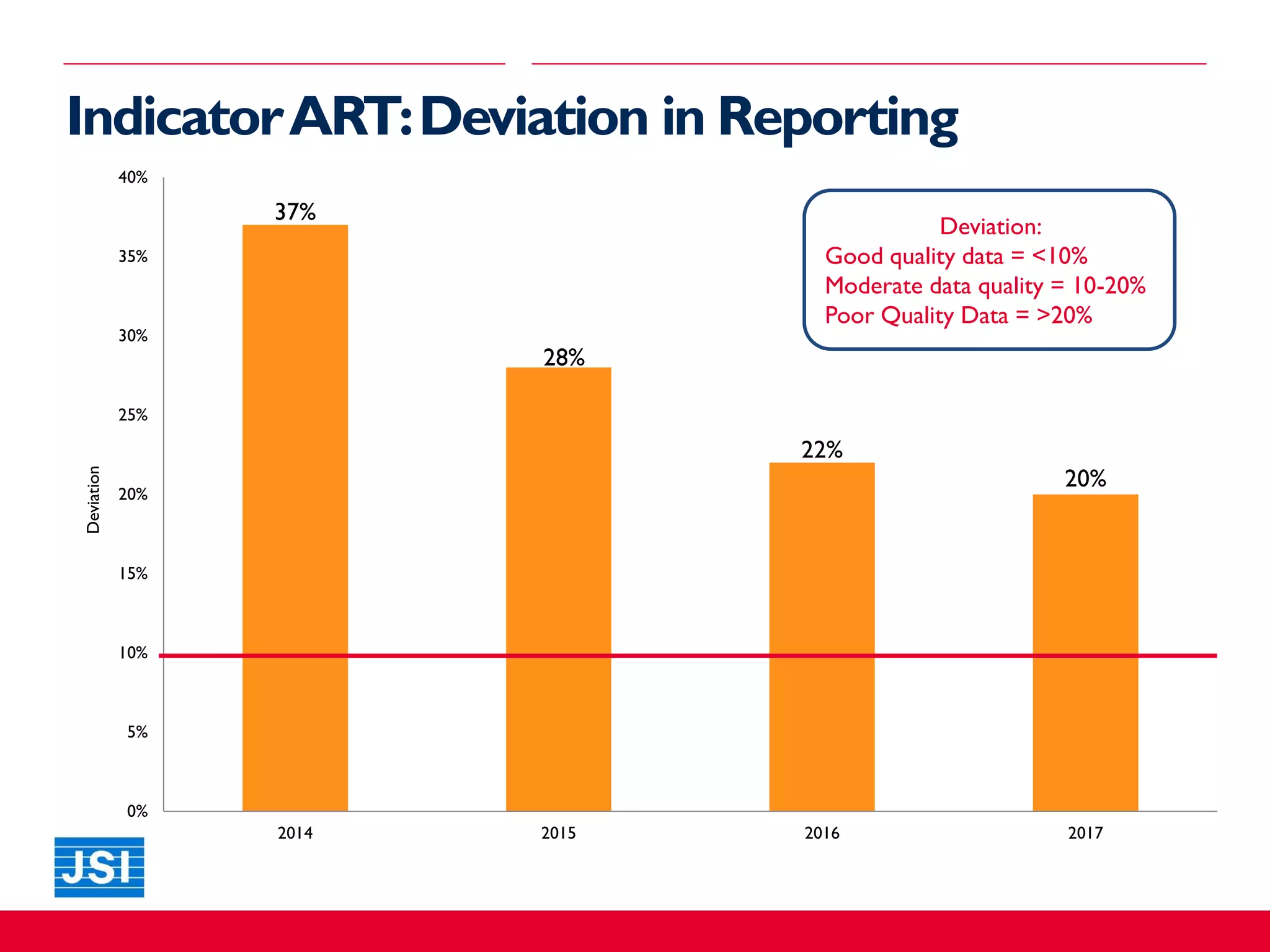

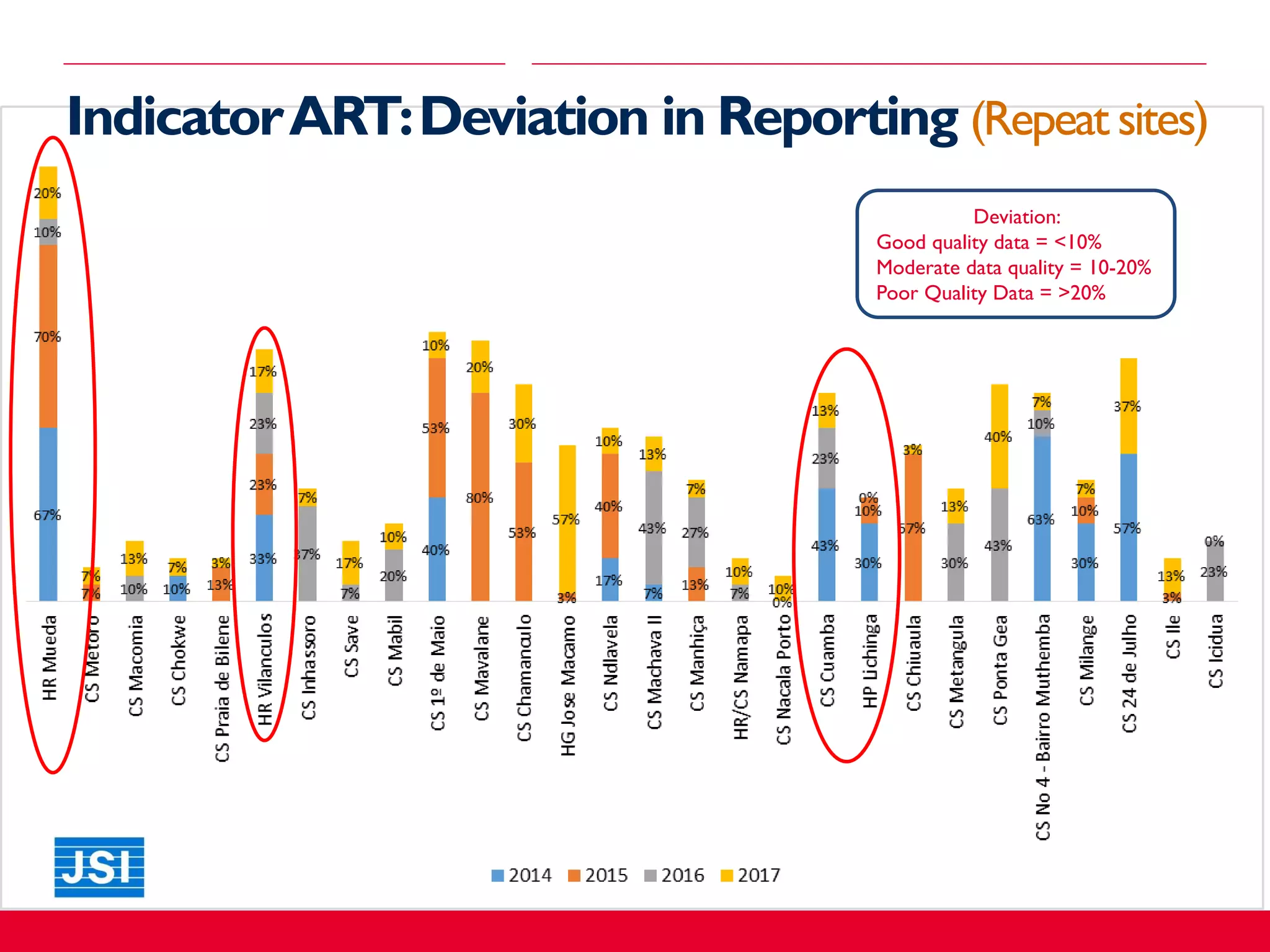

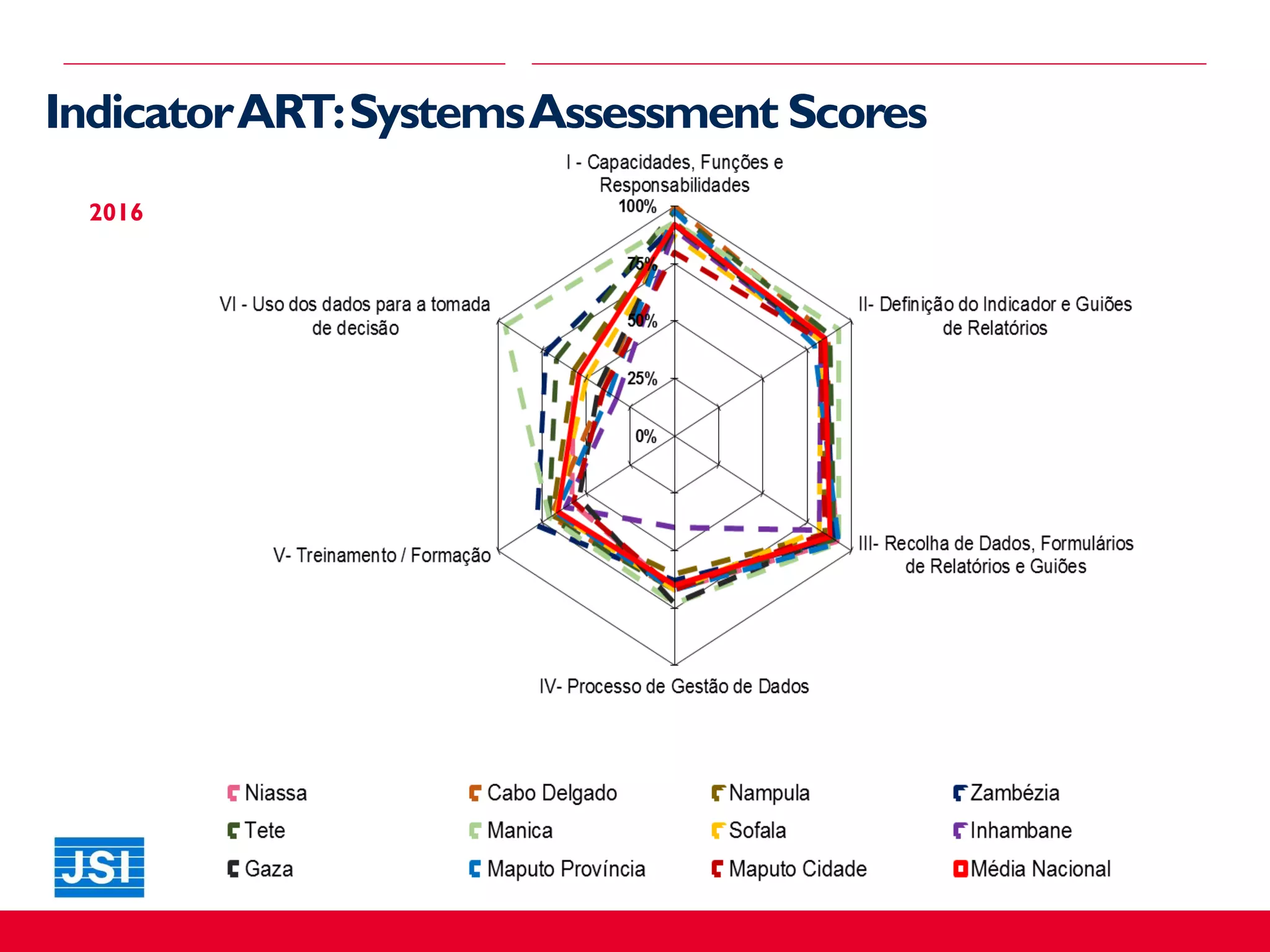

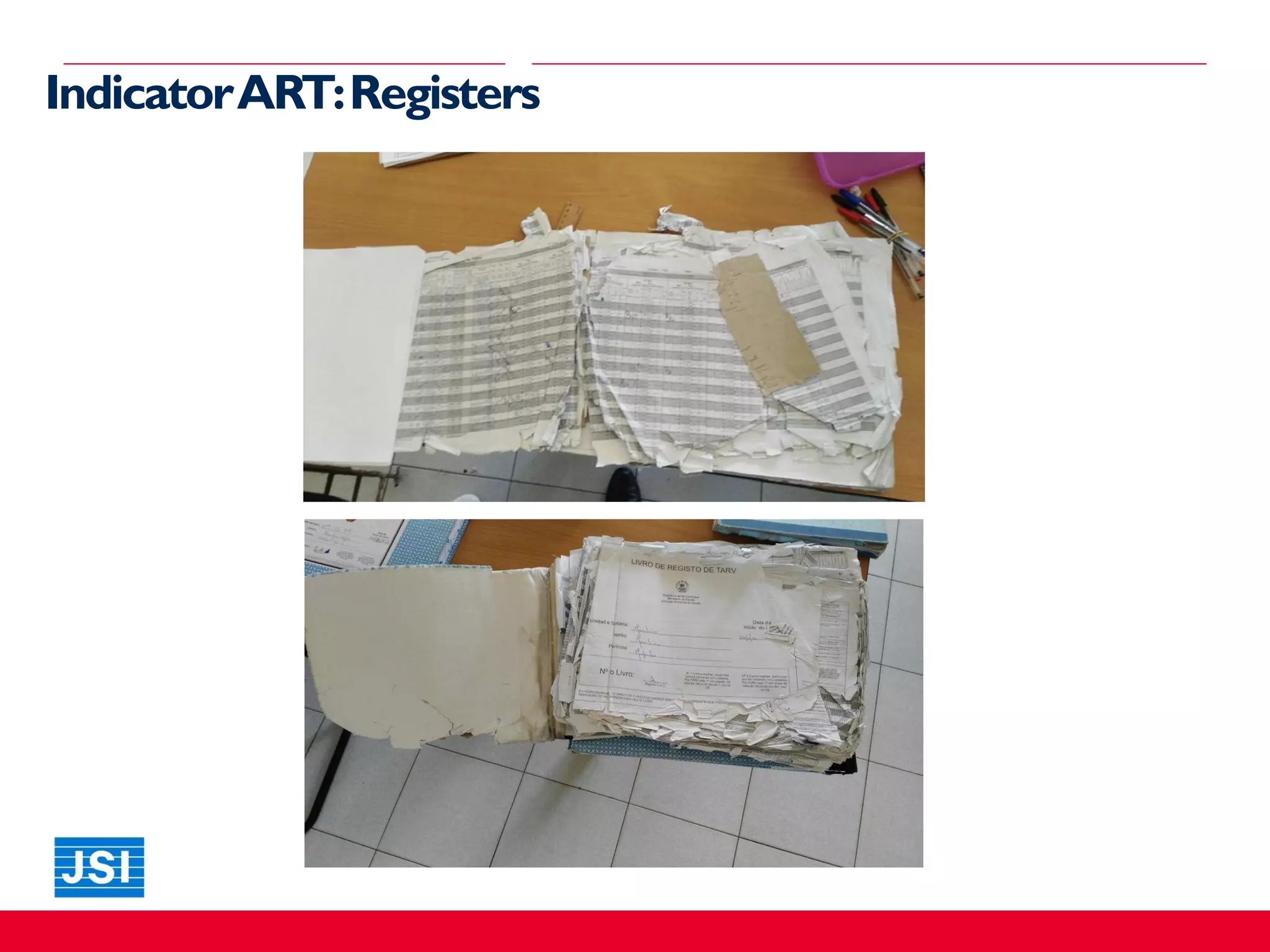

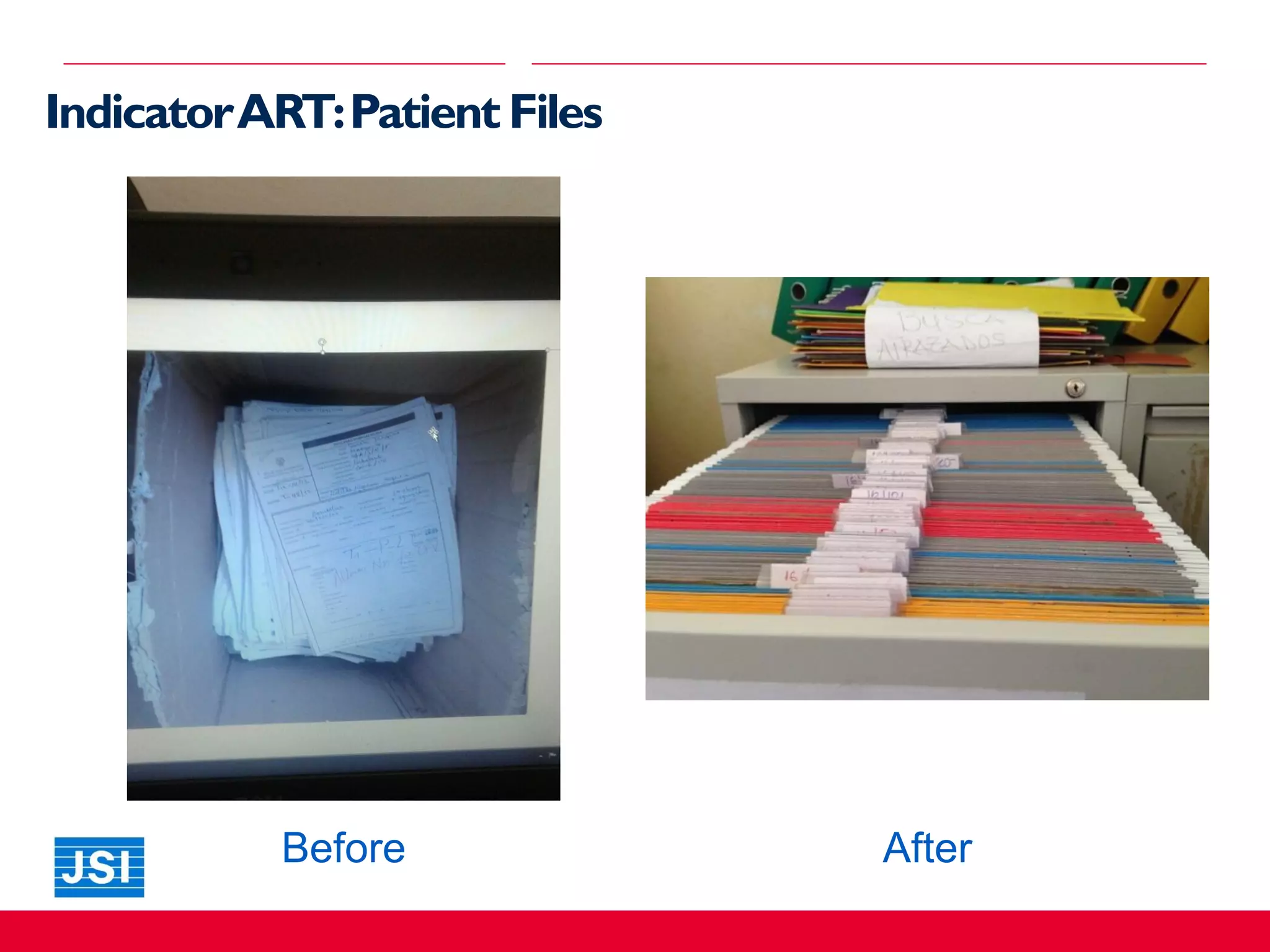

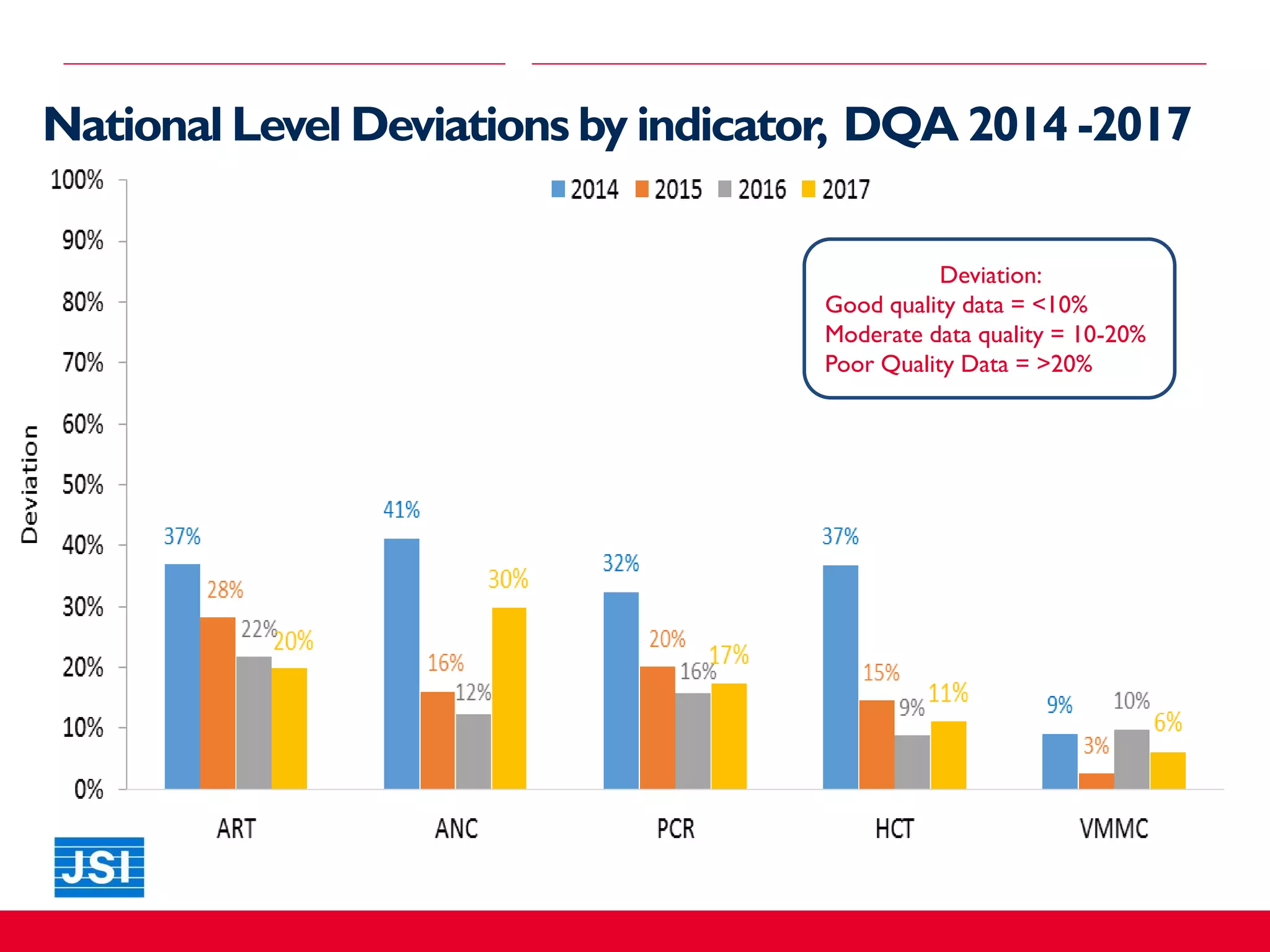

This document describes a strategy for assessing clinical data quality in Mozambique, implemented by the Mozambique Strategic Information Project (MSIP). It outlines the objectives, methodology, and results of the data quality assessment (DQA) over several years, and highlights the importance of Ministry of Health (MOH) ownership and feedback mechanisms for improving data quality. Key findings emphasize the need for non-punitive approaches and the potential to scale DQA methodologies to other health programs.