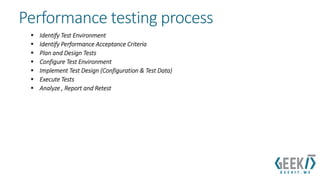

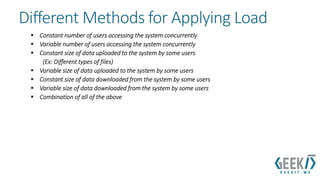

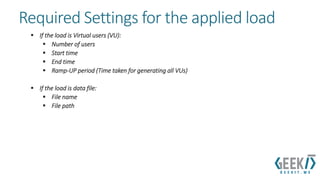

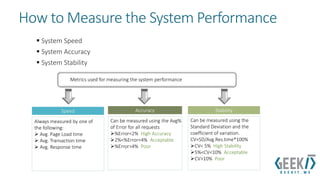

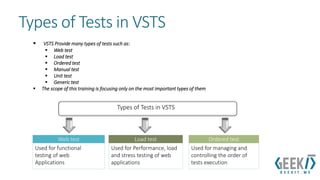

This document provides an overview and agenda for a training session on performance testing with Visual Studio 2010. It explains why functional testing alone is not enough and introduces performance, load, and stress testing. It then walks through the performance testing process in Visual Studio, including creating web tests, load tests, and ordered tests, and covers measuring system performance, applying load, and analyzing test results. It also offers key points on testing methodology and best practices.

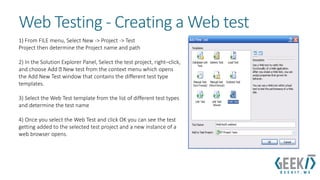

![Web Testing - How It Works?

Web tests are used to test the functionality of web applications, web sites, web services, or a combination of these.

Web tests can be created by recording the interactions performed in the browser, which are normally a series of HTTP requests (GET/POST).

These requests can then be played back to test the web application, with validation points set on the responses so that each response is checked against the expected results.

Step 1: Record the user interactions [test scenario] in the web browser.

Step 2: Play back the recorded scenario after setting validation points on the response.

Step 3: Get the test result [either success or failure] after validating against the expected results.](https://image.slidesharecdn.com/performancetestusingmsvs2010-141026073154-conversion-gate02/85/Performance-Testing-Using-VS-2010-Part-1-25-320.jpg)
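The record, play back, and validate cycle can be modeled in a few lines. This is only a conceptual sketch in Python, not how Visual Studio 2010 stores or runs web tests (Visual Studio records its own web test files and replays them with its own engine). The names `RecordedRequest`, `play_back`, and `fake_send` are hypothetical, and `send` stands in for a real HTTP client so the example runs offline.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A validation point: receives the response body and returns True if it matches expectations.
Validator = Callable[[str], bool]


@dataclass
class RecordedRequest:
    """One HTTP request captured during recording (hypothetical model, Step 1)."""
    method: str                                   # "GET" or "POST"
    url: str
    form: Dict[str, str] = field(default_factory=dict)
    validators: List[Validator] = field(default_factory=list)


def play_back(scenario: List[RecordedRequest],
              send: Callable[[str, str, Dict[str, str]], str]) -> bool:
    """Steps 2 and 3: replay each request and check its validation points.

    Returns True (success) only if every validator passes for every response.
    """
    for request in scenario:
        body = send(request.method, request.url, request.form)
        for check in request.validators:
            if not check(body):
                return False  # the first failed validation point fails the whole test
    return True


# Hypothetical stand-in for the application under test, so no network is needed.
def fake_send(method: str, url: str, form: Dict[str, str]) -> str:
    if method == "POST" and url.endswith("/login"):
        return "<h1>Welcome, %s</h1>" % form.get("user", "")
    return "<form action='/login'></form>"


scenario = [
    RecordedRequest("GET", "http://localhost/", validators=[lambda b: "<form" in b]),
    RecordedRequest("POST", "http://localhost/login", {"user": "alice"},
                    validators=[lambda b: "Welcome, alice" in b]),
]

print(play_back(scenario, fake_send))  # True
```

Keeping the transport as a parameter separates the recorded scenario from how it is replayed, which mirrors the idea that the same recording can be played back many times.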

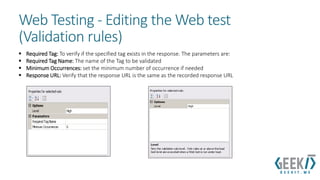

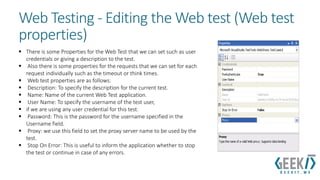

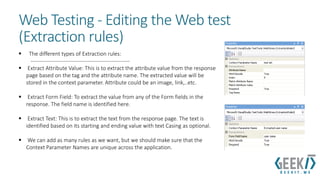

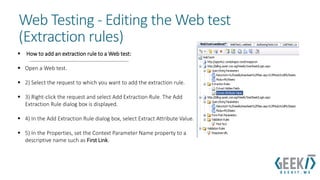

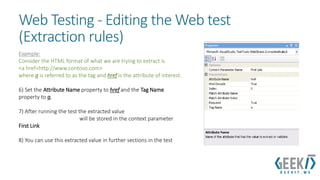

![Web Testing - Editing the Web Test

(Validation Rules)

The different types of validation rules:

Form Field: Verifies that a form field with a certain value exists. The parameters are Form Field Name and Expected Value.

Find Text: Verifies that the specified text exists in the response. Its parameters are:

Find Text: The text to search for.

Ignore Case: Determines whether the search is case-sensitive [True: ignore case / False: don't ignore case].

Pass If Text Found: Determines the acceptance criterion [True: the test passes if the text is found / False: the test passes if the text is not found].

Use Regular Expression: Used if you need to search for a regular expression (i.e., a pattern describing a special sequence of characters) rather than literal text.](https://image.slidesharecdn.com/performancetestusingmsvs2010-141026073154-conversion-gate02/85/Performance-Testing-Using-VS-2010-Part-1-37-320.jpg)
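To make the parameters of the two rules concrete, the sketch below reimplements their described behavior in Python. It is an illustration under stated assumptions, not Visual Studio's implementation: the function names `form_field_rule` and `find_text_rule` are hypothetical, the form field check only looks at `<input>` elements, and the sample page is invented.

```python
import re
from html.parser import HTMLParser
from typing import Dict


class _FormFieldCollector(HTMLParser):
    """Collects name -> value for <input> elements (a simplification of 'form field')."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: Dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        if tag == "input":
            attributes = dict(attrs)
            if attributes.get("name"):
                # Attributes written without a value come back as None.
                self.fields[attributes["name"]] = attributes.get("value") or ""


def form_field_rule(response: str, form_field_name: str, expected_value: str) -> bool:
    """Passes if a field named form_field_name exists with exactly expected_value."""
    collector = _FormFieldCollector()
    collector.feed(response)
    return collector.fields.get(form_field_name) == expected_value


def find_text_rule(response: str, find_text: str, ignore_case: bool = False,
                   pass_if_text_found: bool = True,
                   use_regular_expression: bool = False) -> bool:
    """Mimics the Find Text parameters described on the slide."""
    flags = re.IGNORECASE if ignore_case else 0
    # Literal text is escaped so that characters like '.' or '(' are not treated as regex syntax.
    pattern = find_text if use_regular_expression else re.escape(find_text)
    found = re.search(pattern, response, flags) is not None
    # Pass If Text Found = False inverts the acceptance criterion.
    return found == pass_if_text_found


# Hypothetical response page used for the examples.
page = ('<form><input type="hidden" name="__VIEWSTATE" value="abc123"/></form>'
        '<p>Order Confirmed</p>')

print(form_field_rule(page, "__VIEWSTATE", "abc123"))                     # True
print(find_text_rule(page, "order confirmed", ignore_case=True))          # True
print(find_text_rule(page, "Error", pass_if_text_found=False))            # True
print(find_text_rule(page, r"Order\s+\w+", use_regular_expression=True))  # True
```

Note how Pass If Text Found = False turns Find Text into a "must not appear" check, which is a common way to fail a test when an error message shows up in the response.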