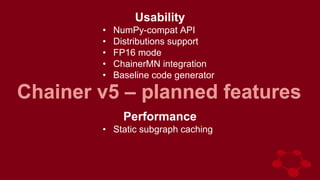

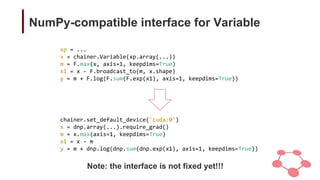

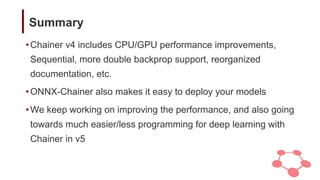

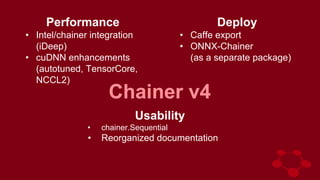

Chainer v4 includes performance improvements like Intel integration and cuDNN enhancements. It also introduces usability features like Sequential chains and reorganized documentation. Chainer v4 allows exporting models to Caffe and ONNX formats. Chainer v5 is planned to improve usability with NumPy compatibility, distributions support, and code generation. It also aims to enhance performance through static subgraph caching.

![Caffe export / ONNX-Chainer

model = L.VGG16Layers()

# Pseudo input

x = np.zeros((1, 3, 224, 224), dtype=np.float32)

# Caffe export

caffe.export(model, [x], directory='.',

graph_name='VGG16')

# ONNX-Chainer

chainer.config.train = False

onnx_chainer.export(model, x, filename='VGG16.onnx')](https://image.slidesharecdn.com/chainer-v4-and-v5-180426021815/85/Chainer-v4-and-v5-9-320.jpg)