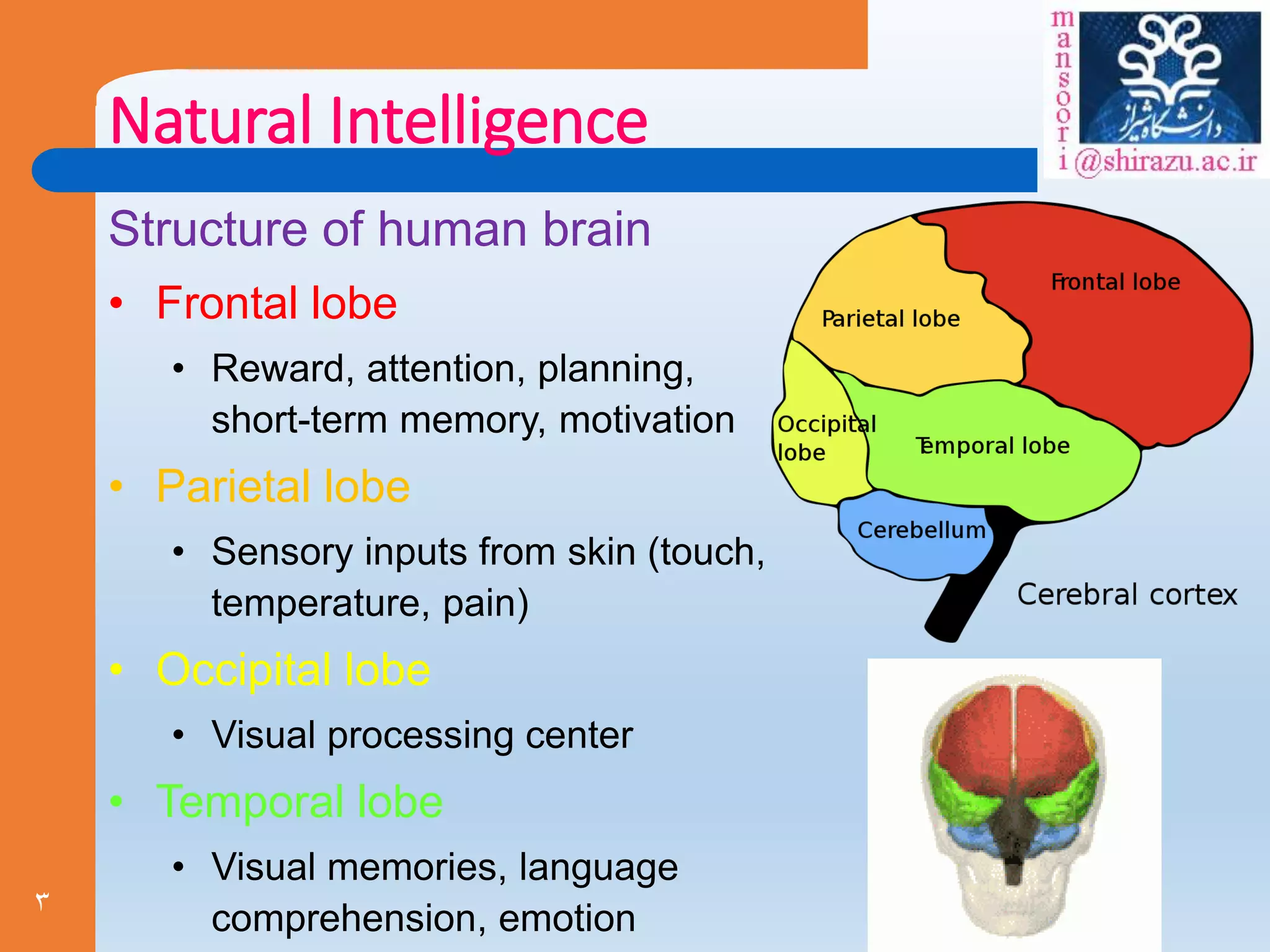

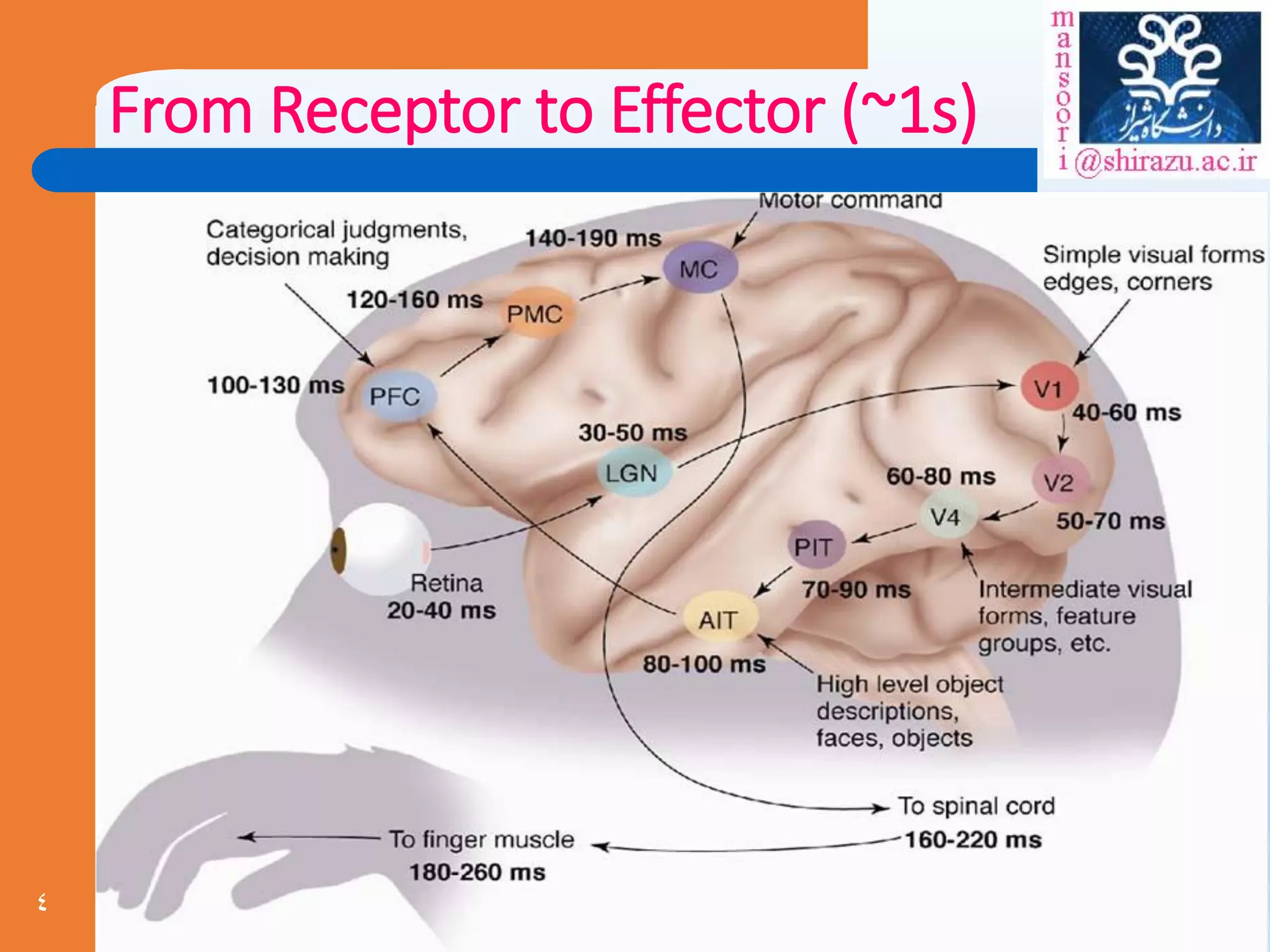

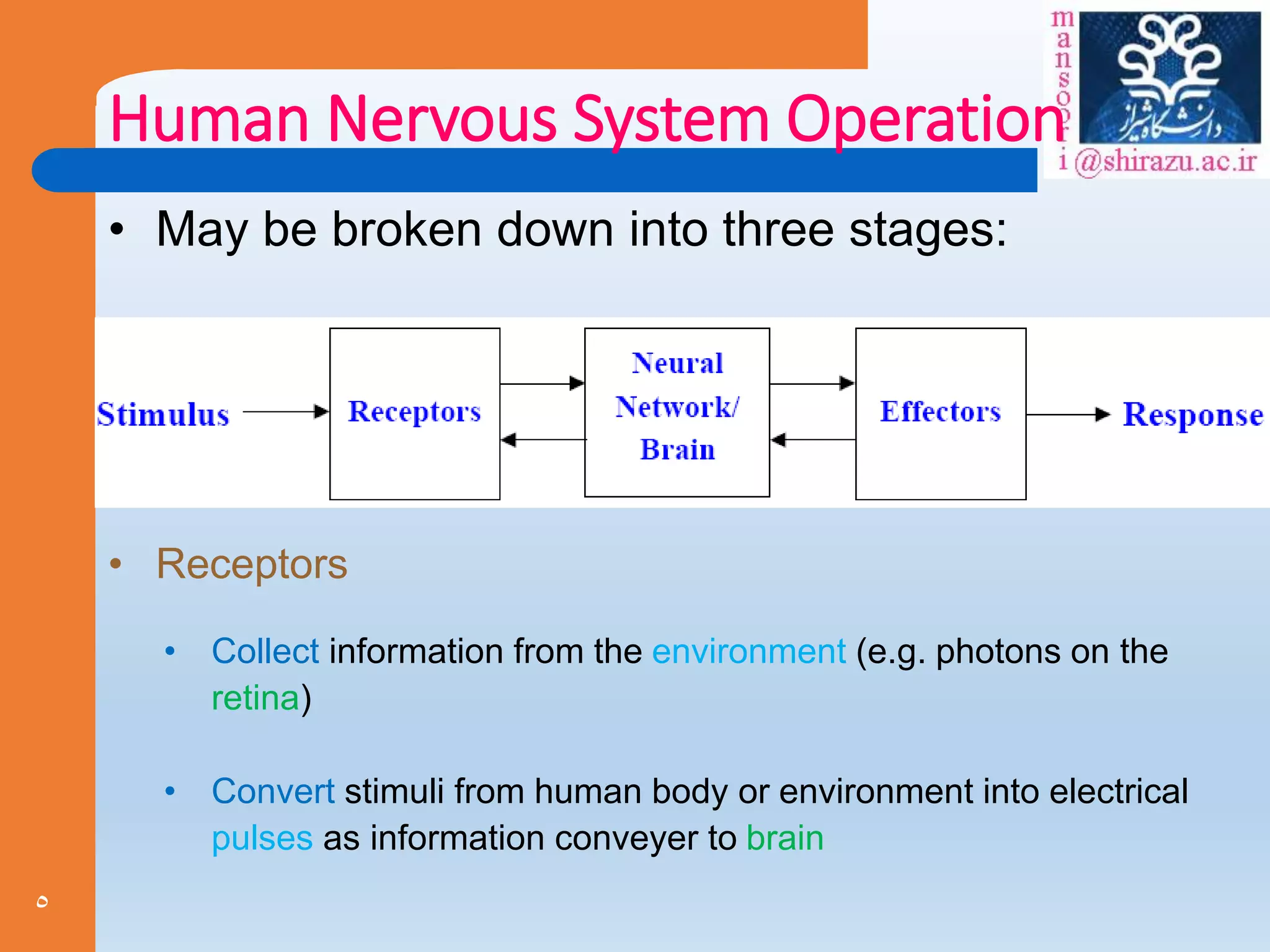

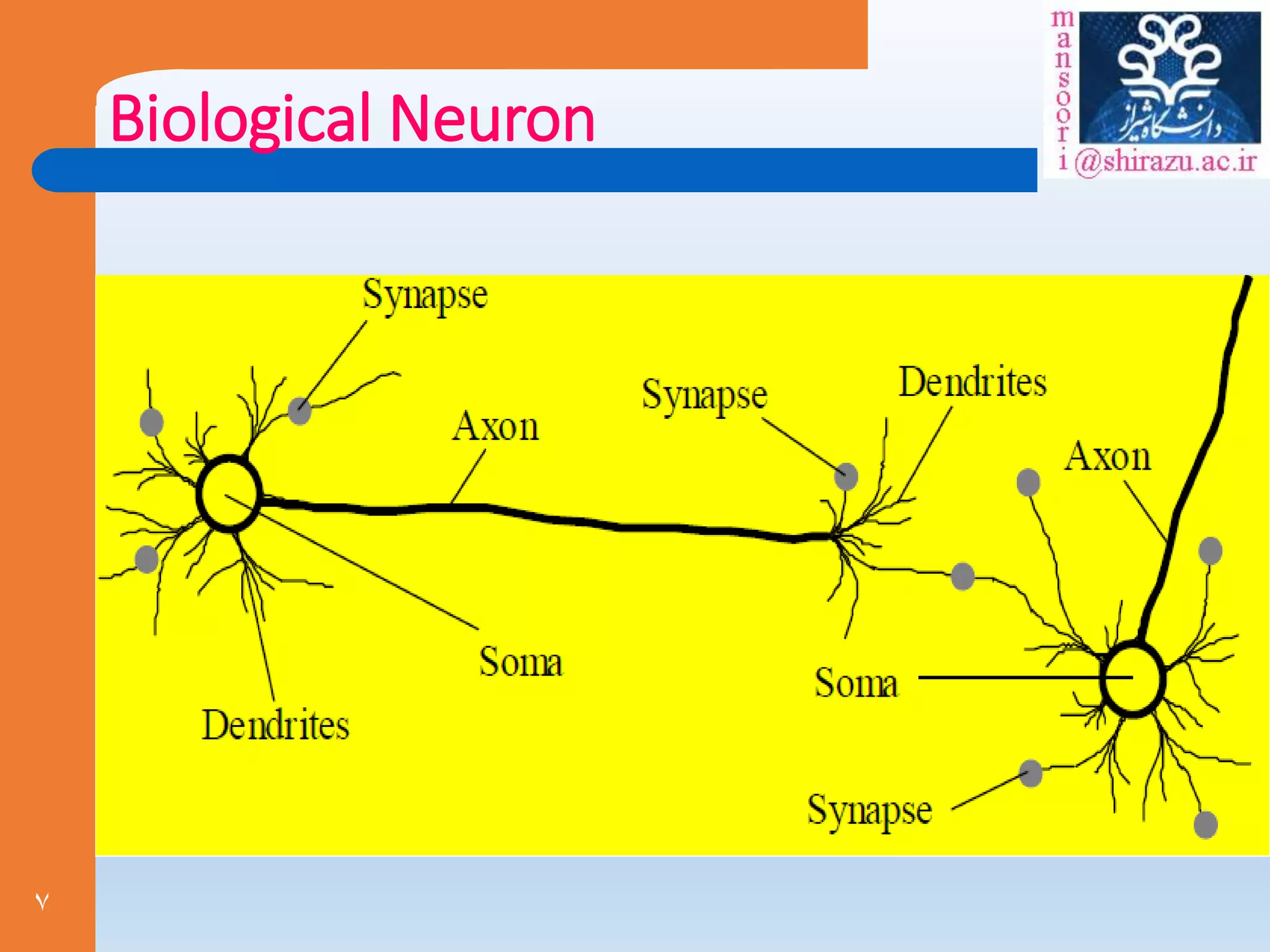

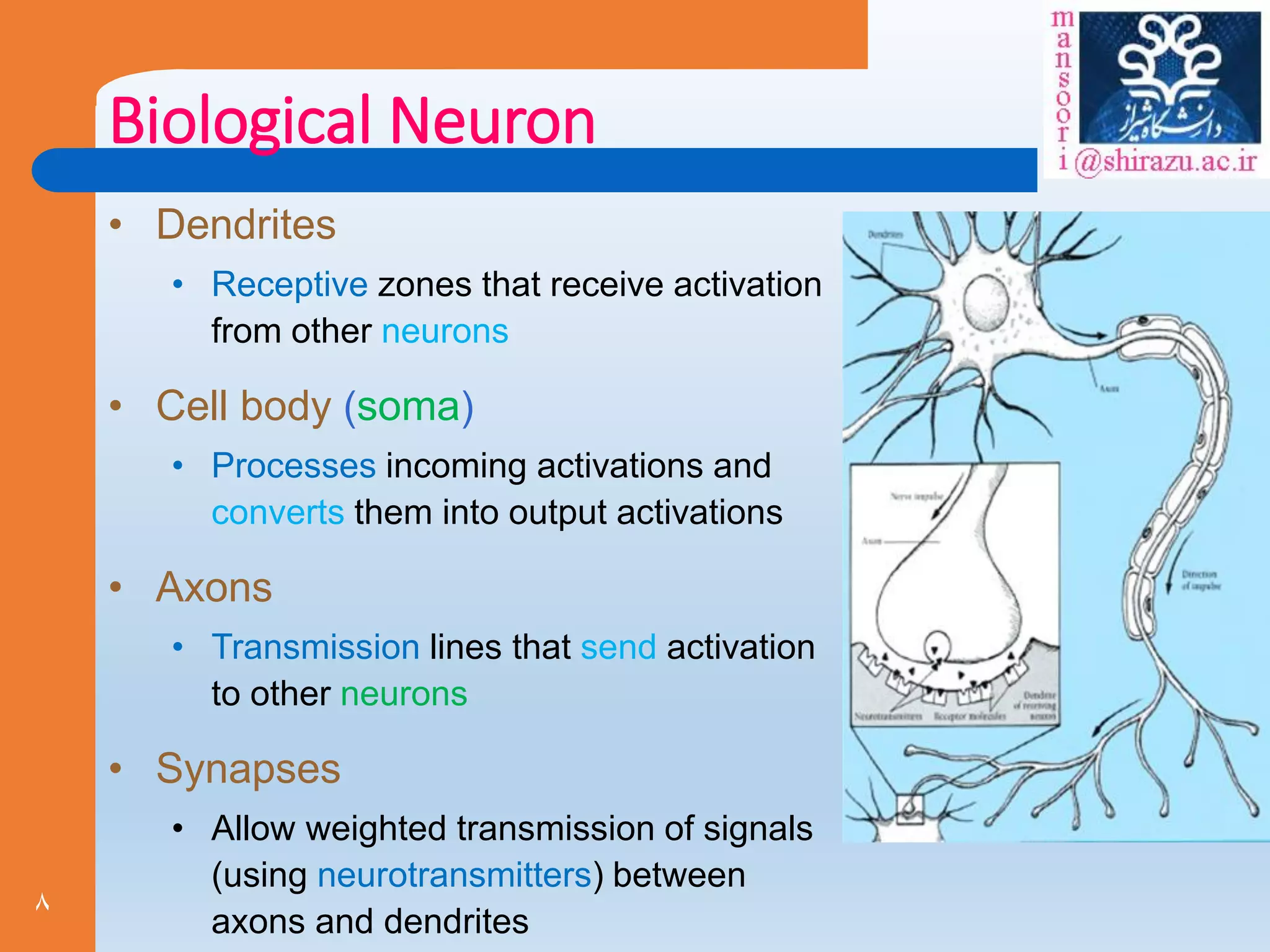

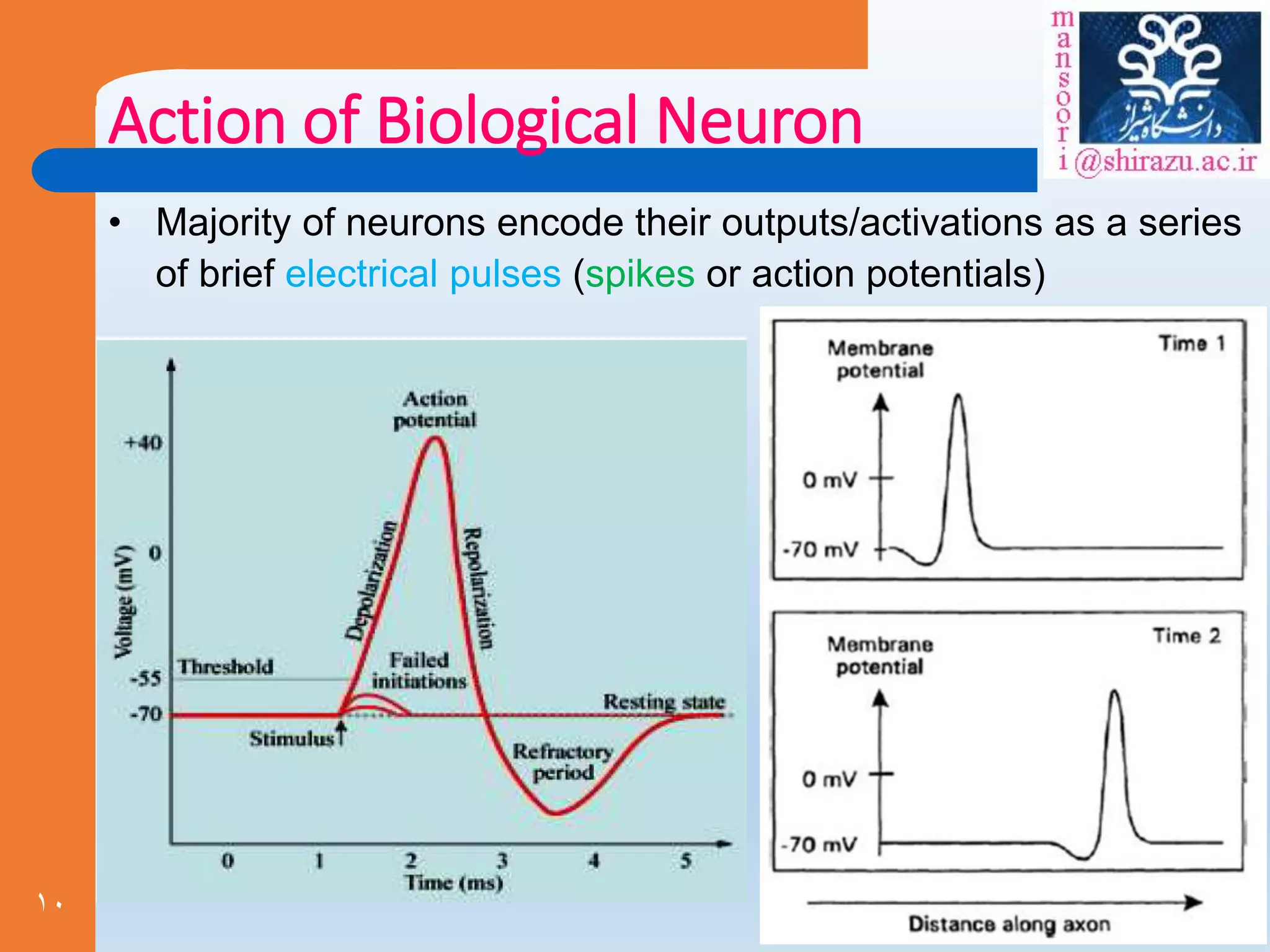

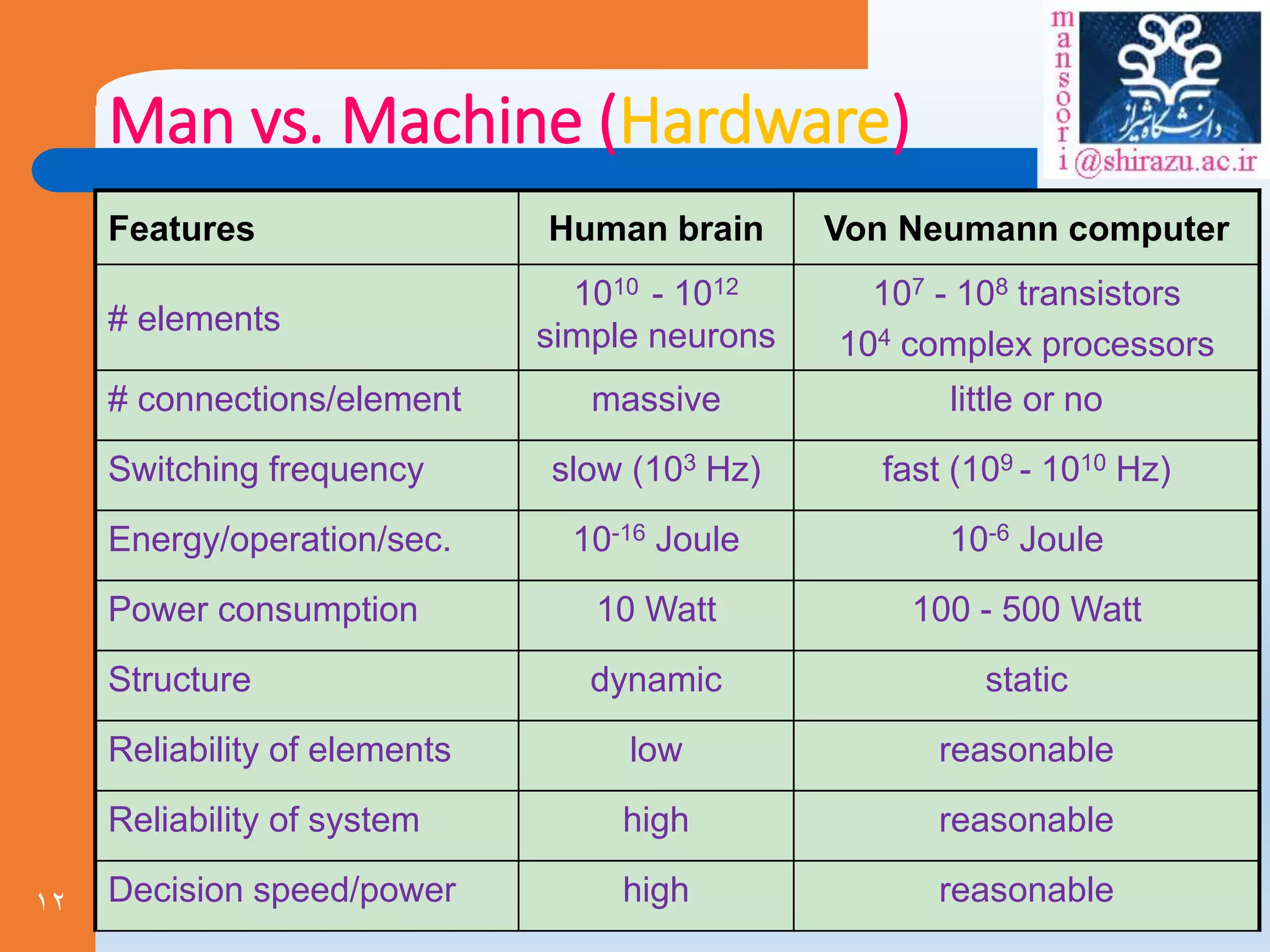

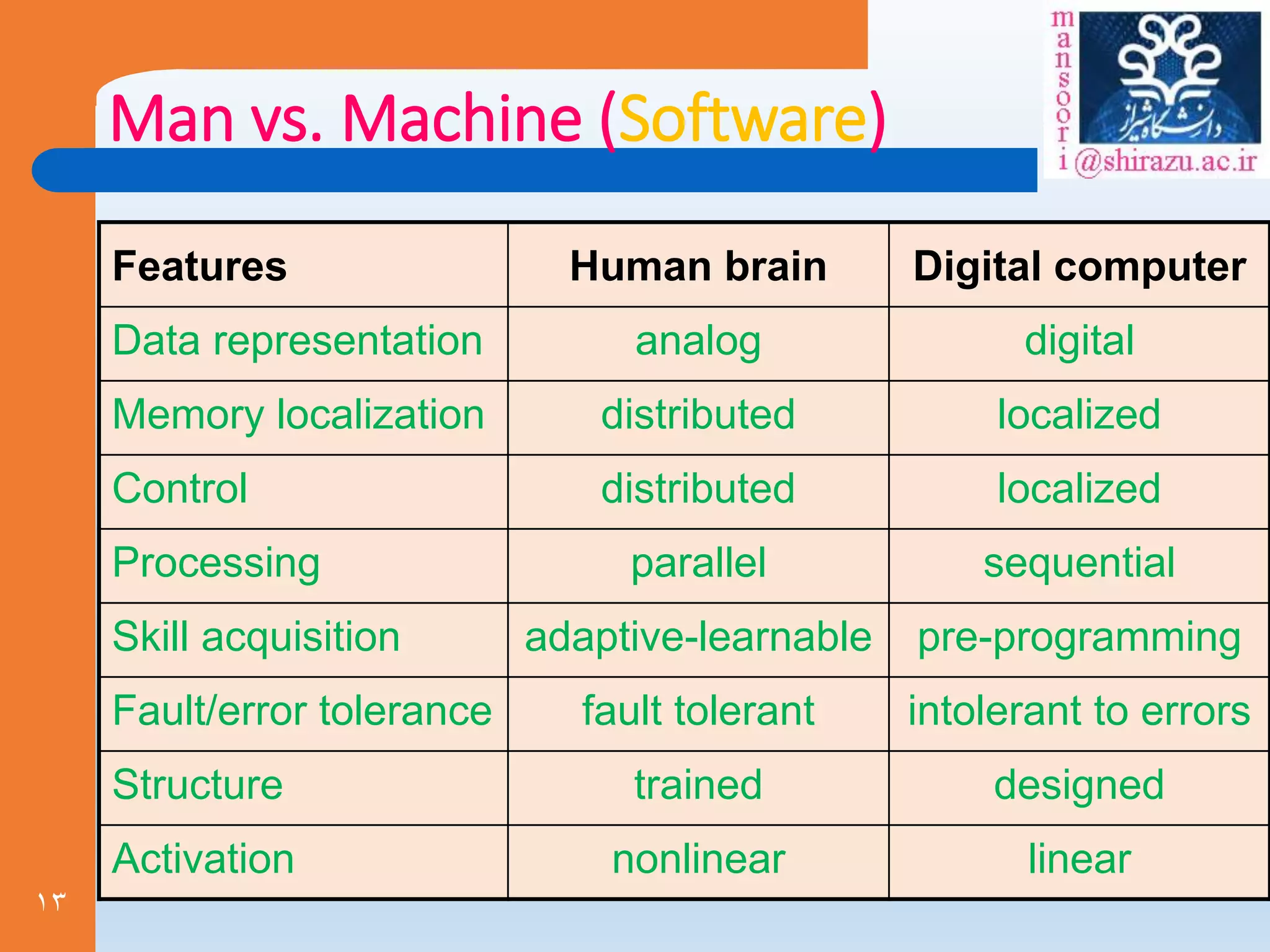

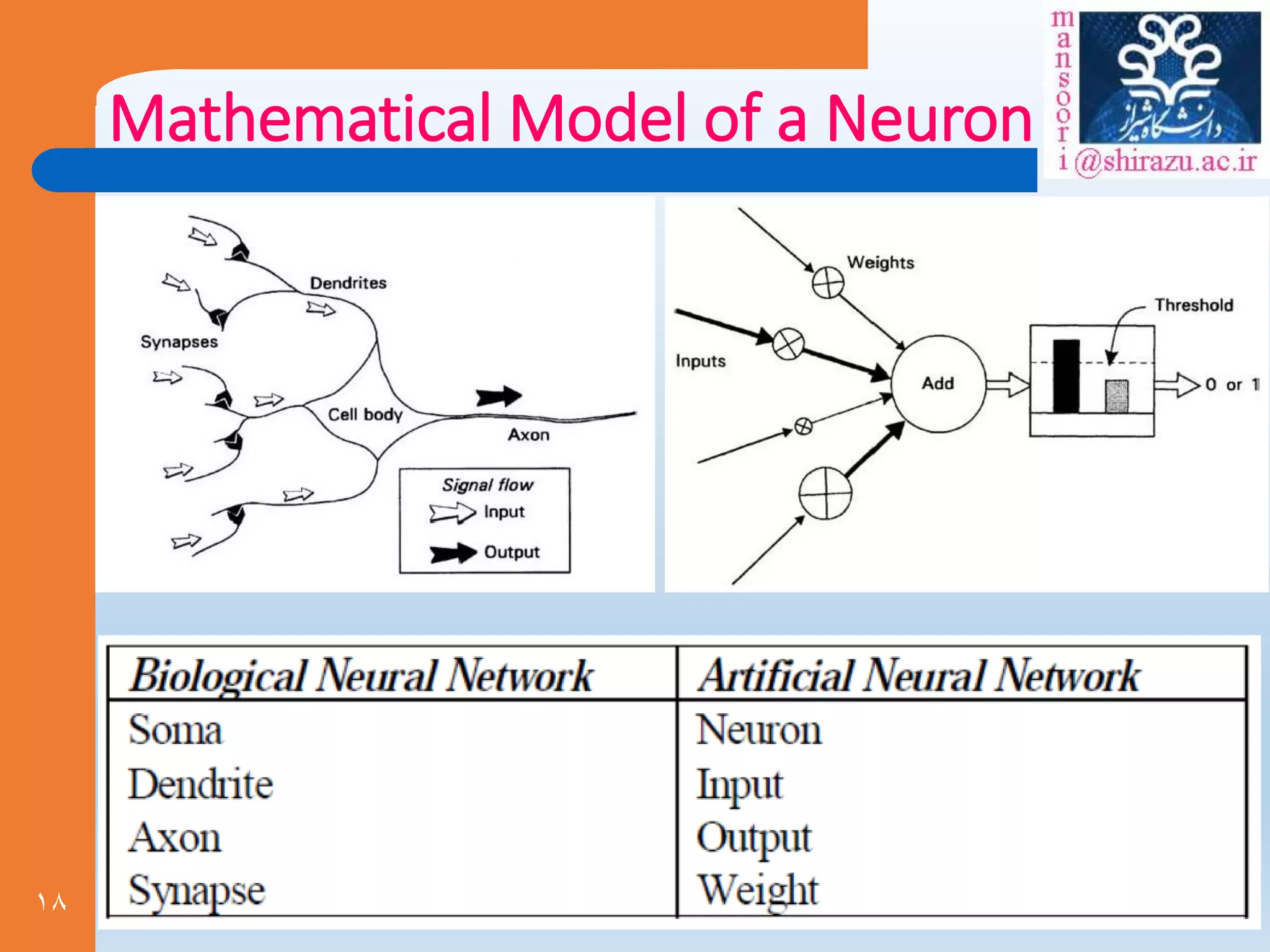

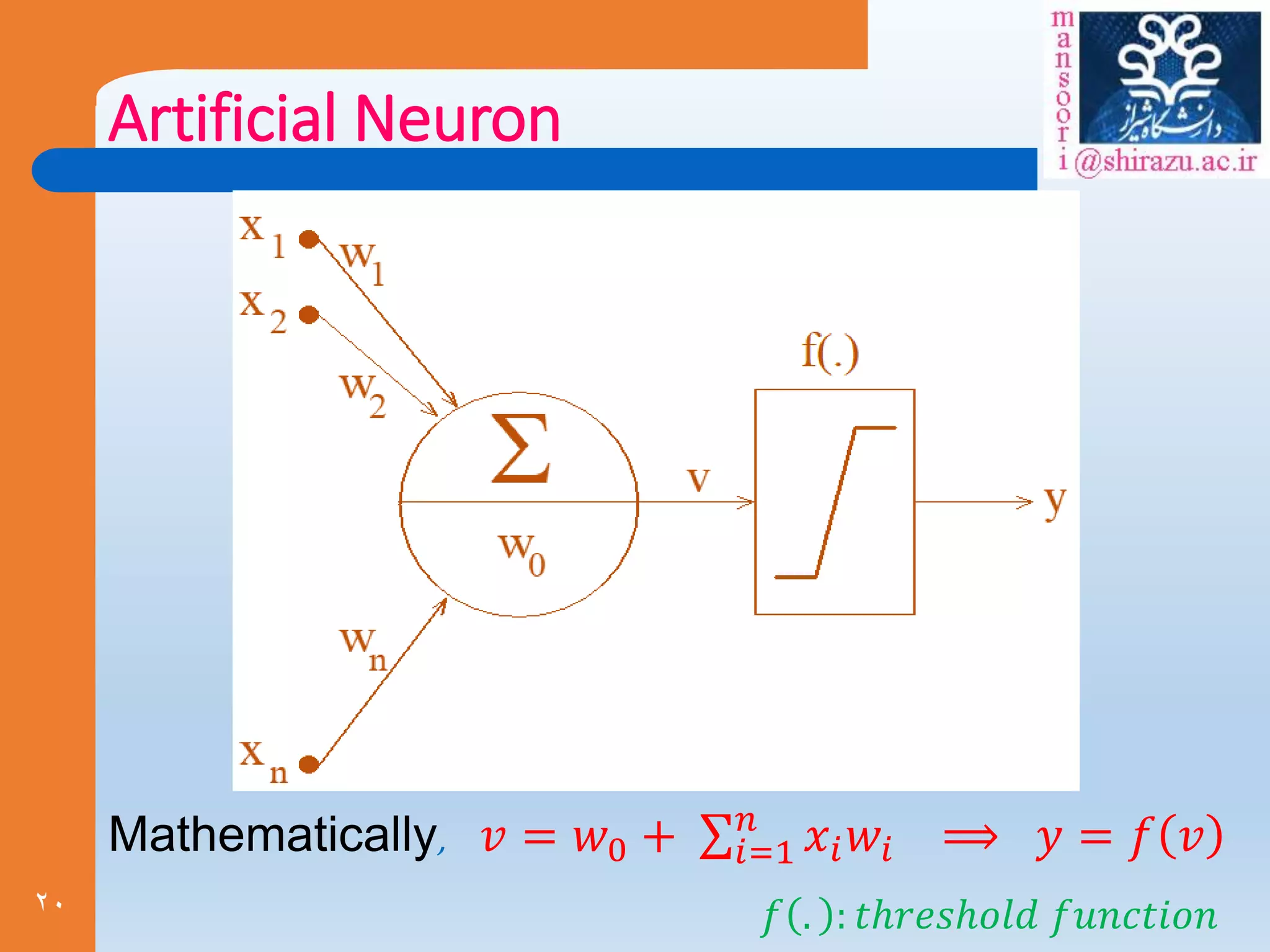

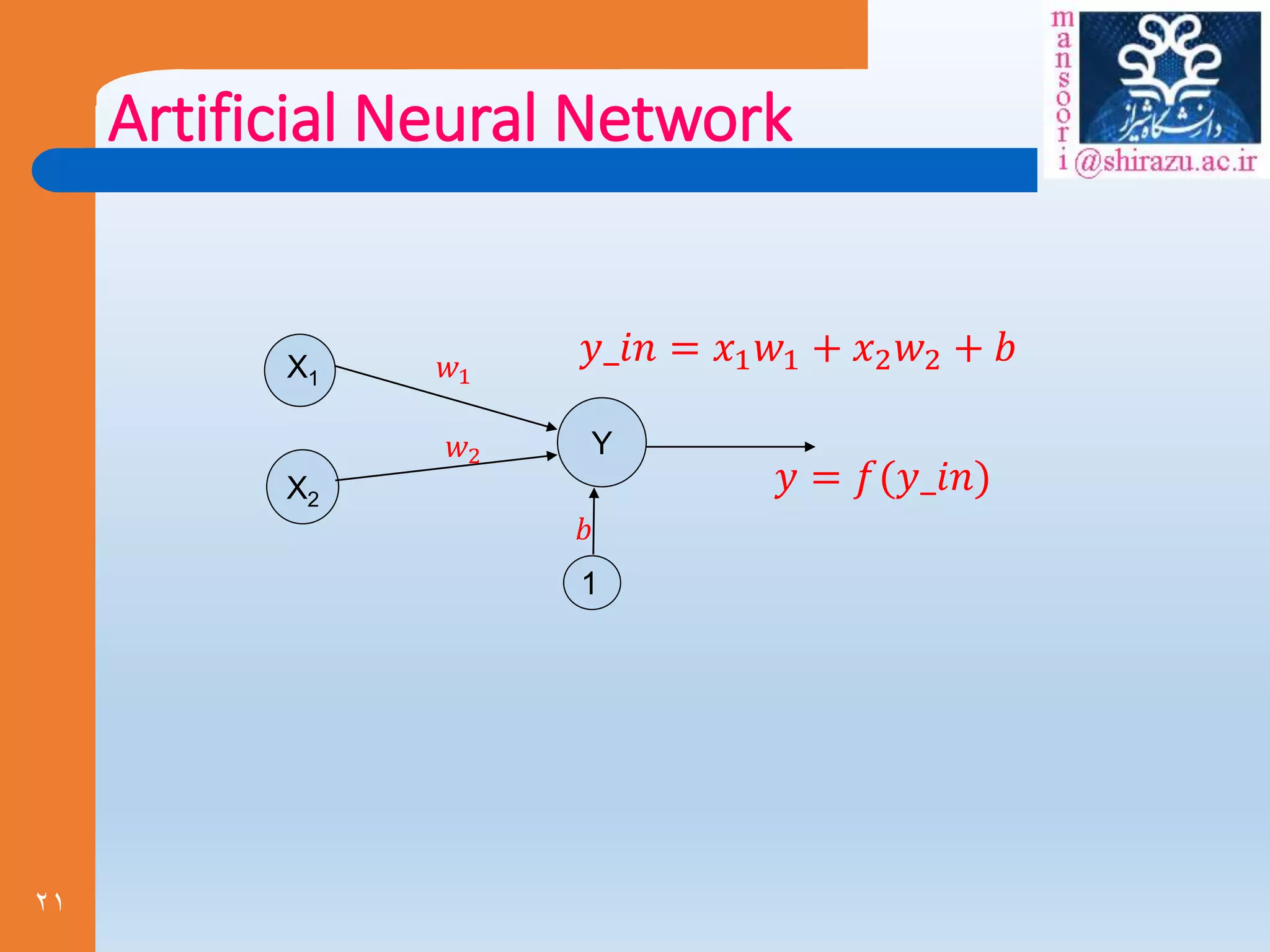

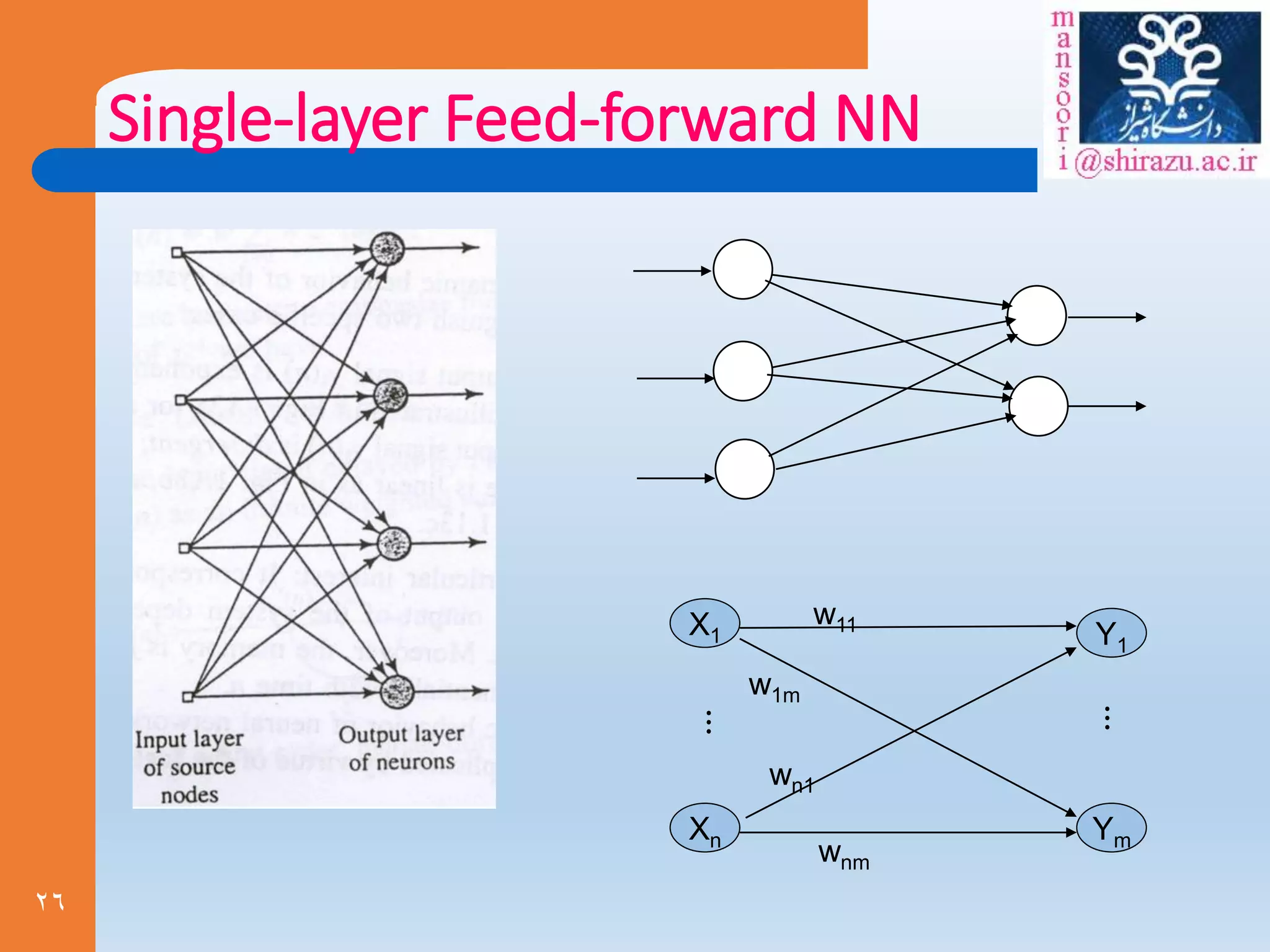

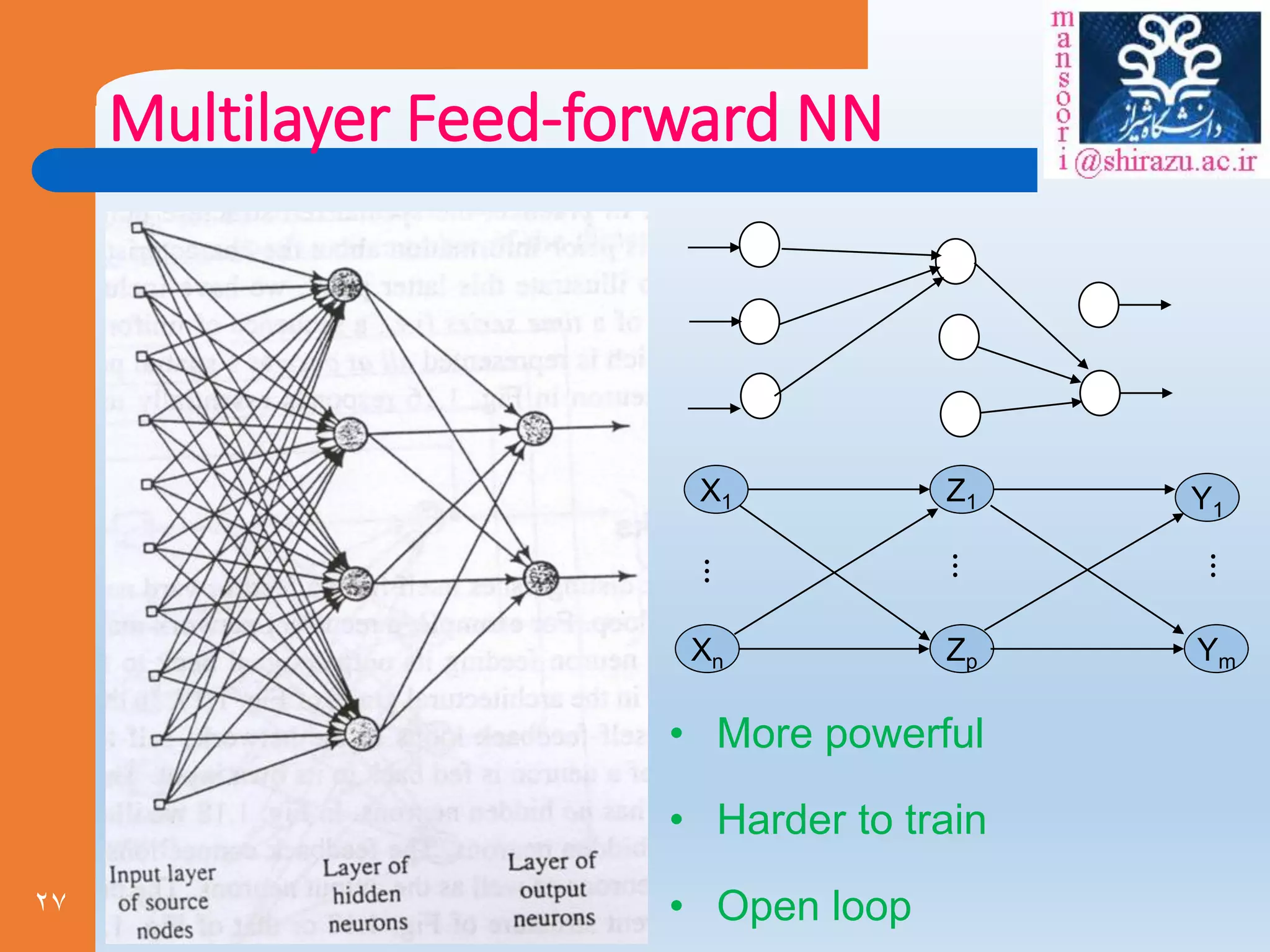

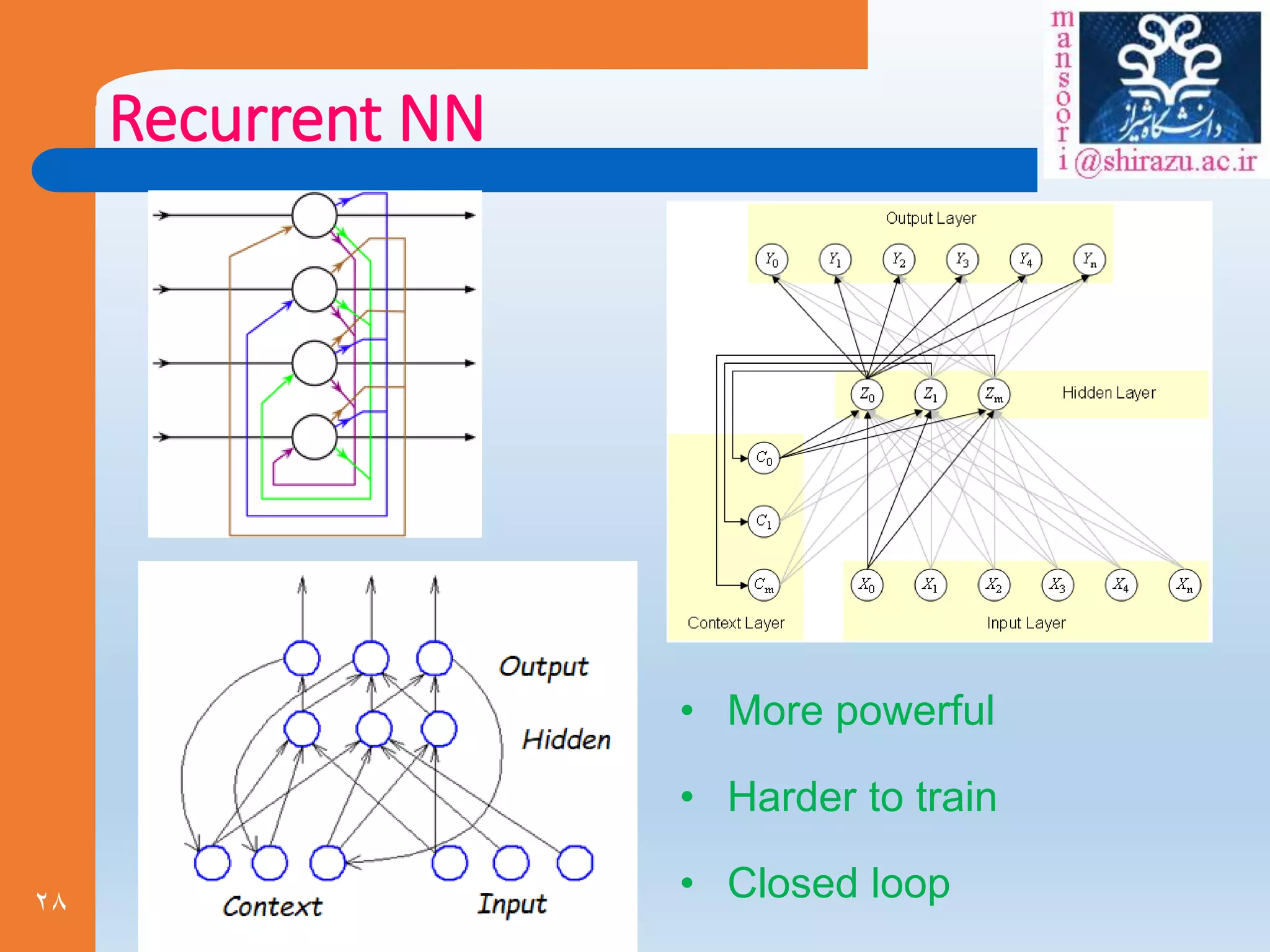

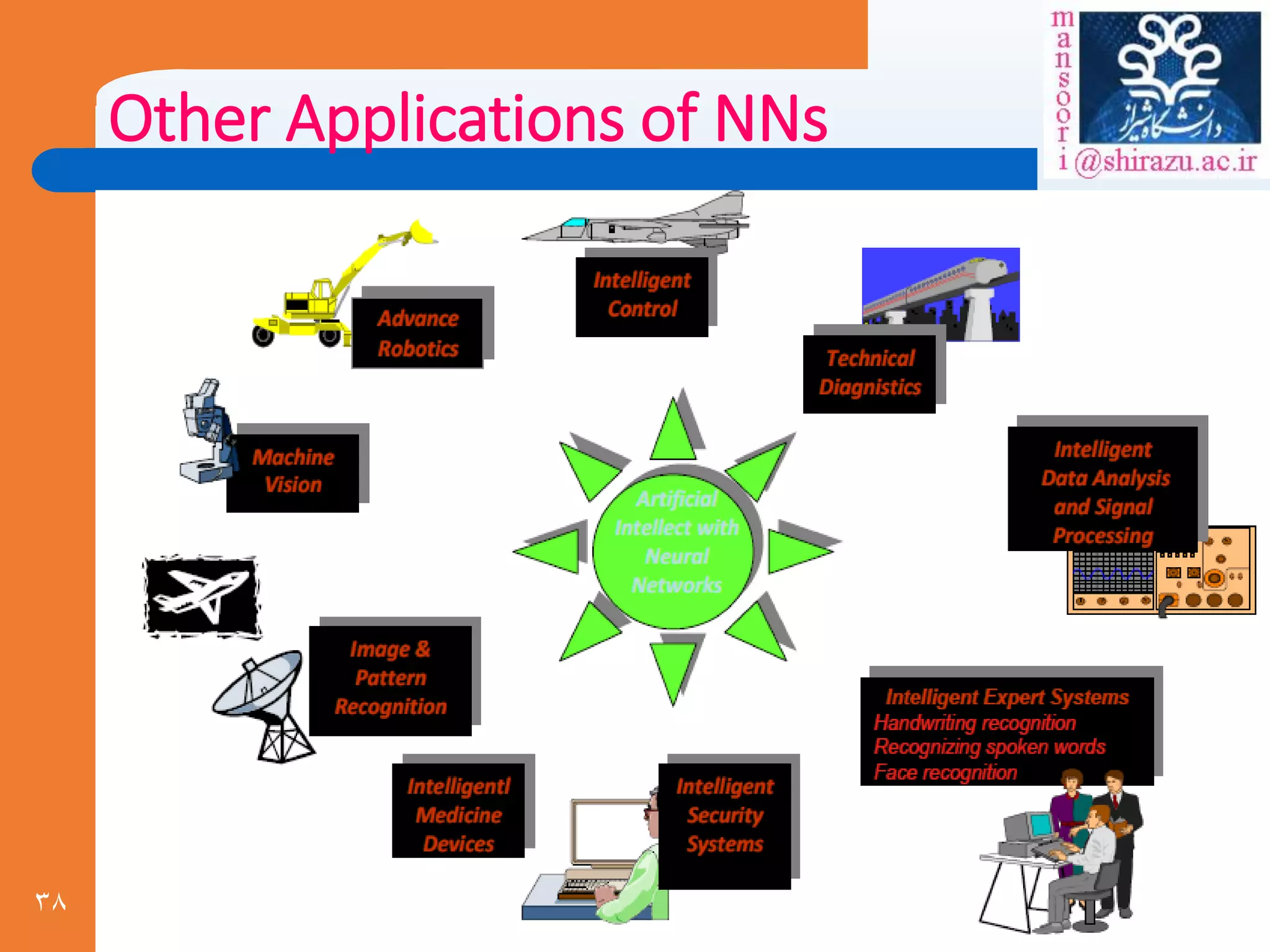

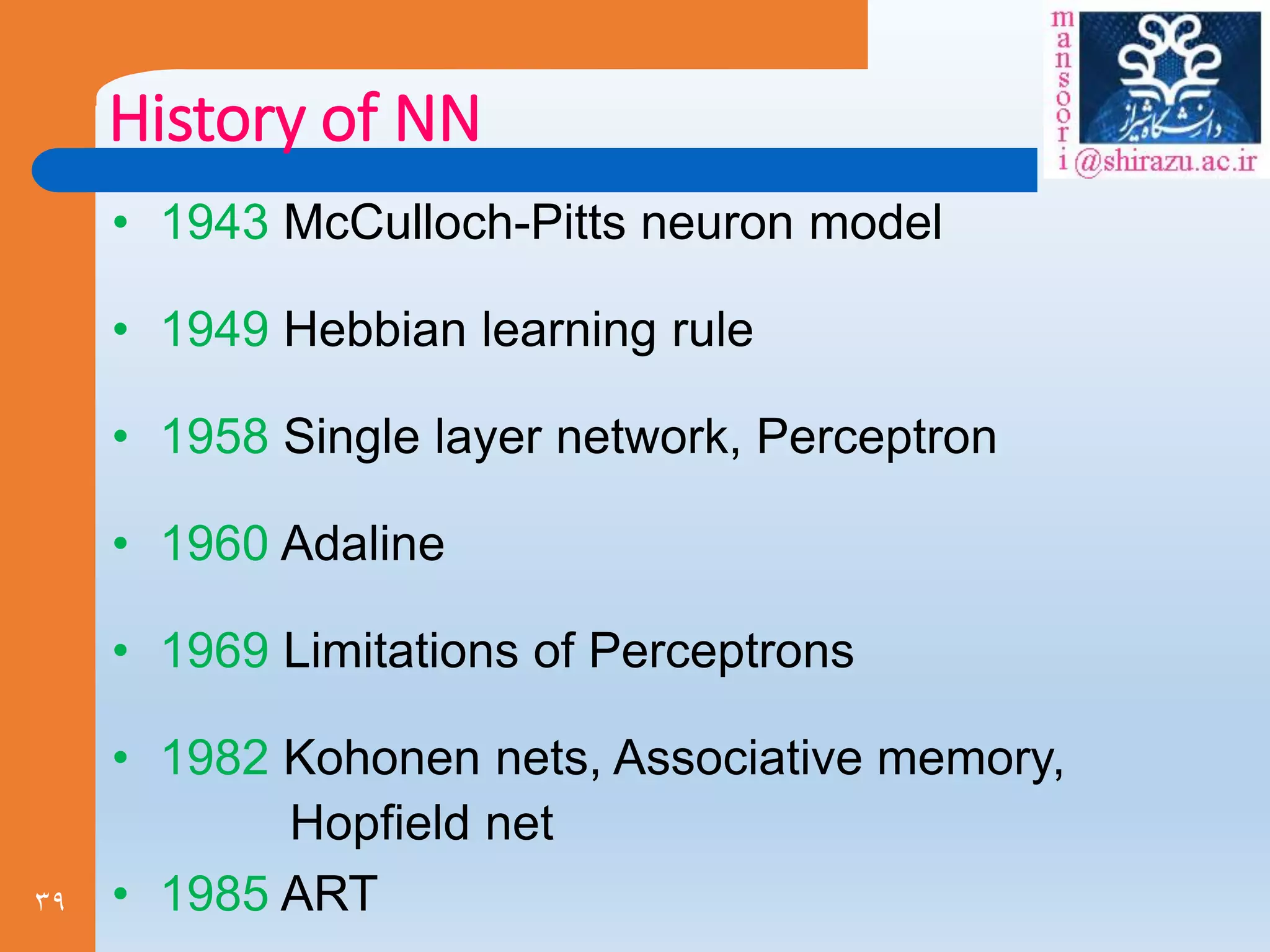

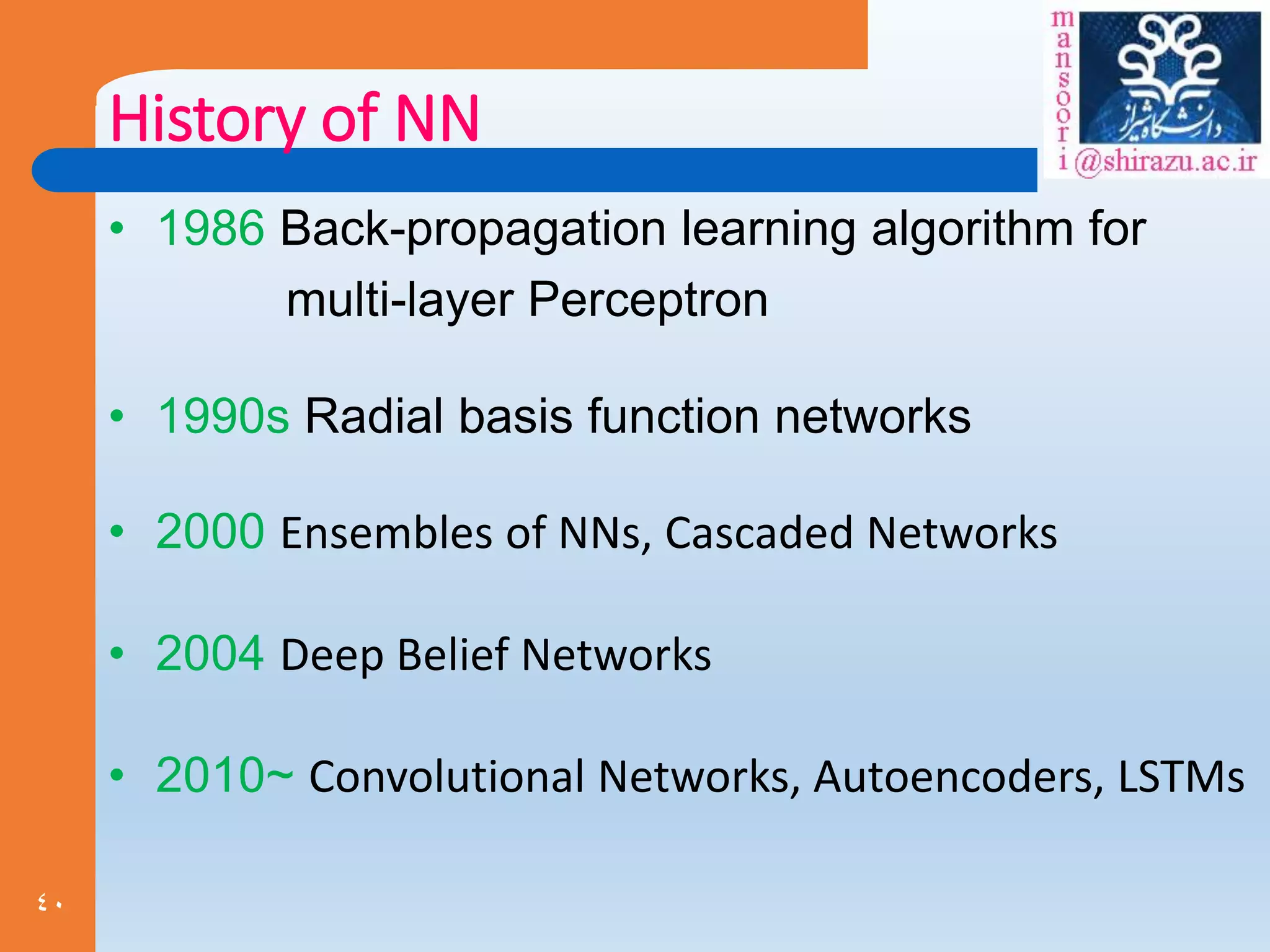

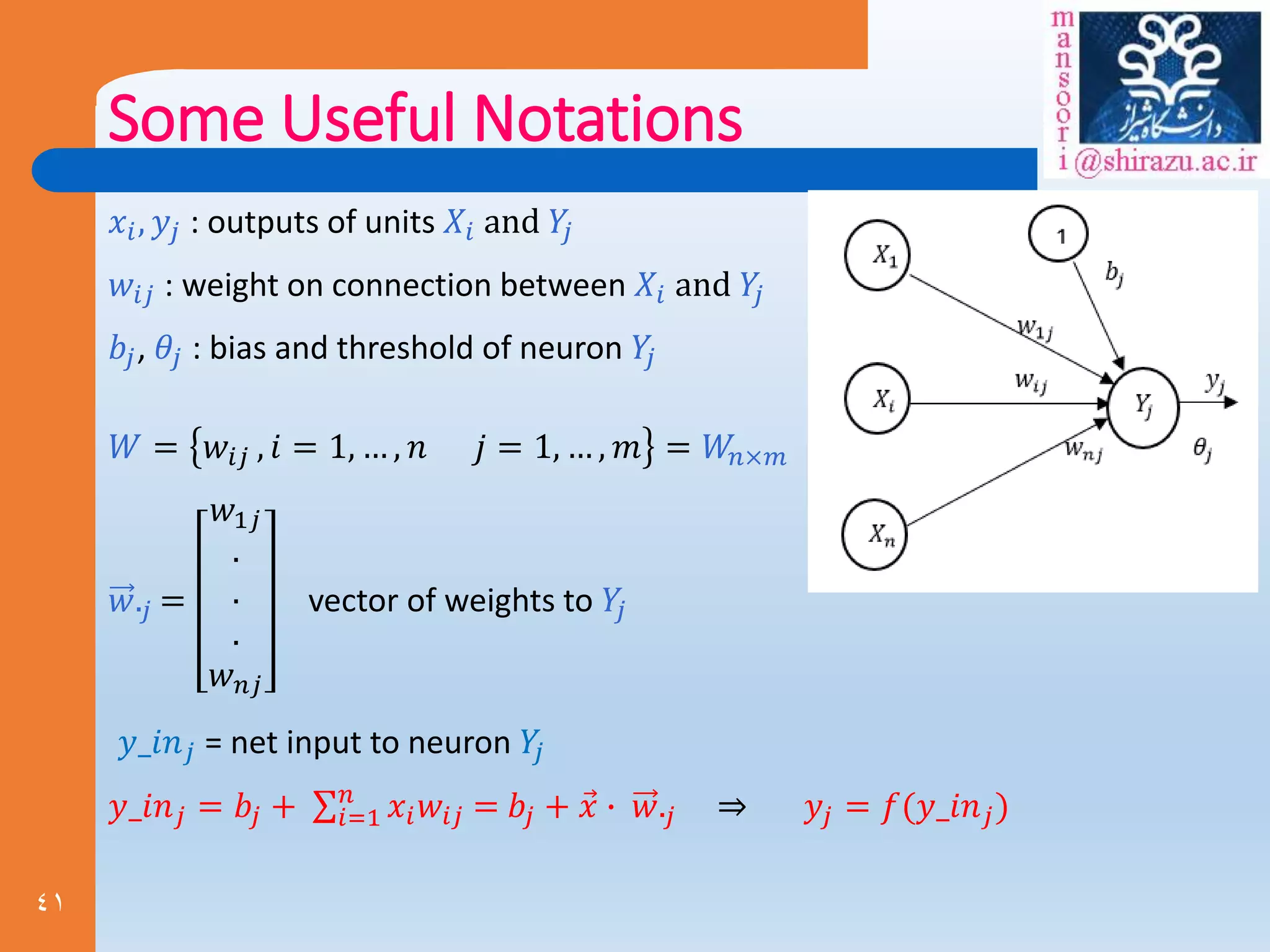

The document provides an overview of neural networks, drawing parallels between biological neurons in the human brain and artificial neural networks (ANNs). It discusses the components, functioning, learning methods, and applications of ANNs, and highlights the advantages and disadvantages of using them in various fields. It also traces the historical development of neural network models.