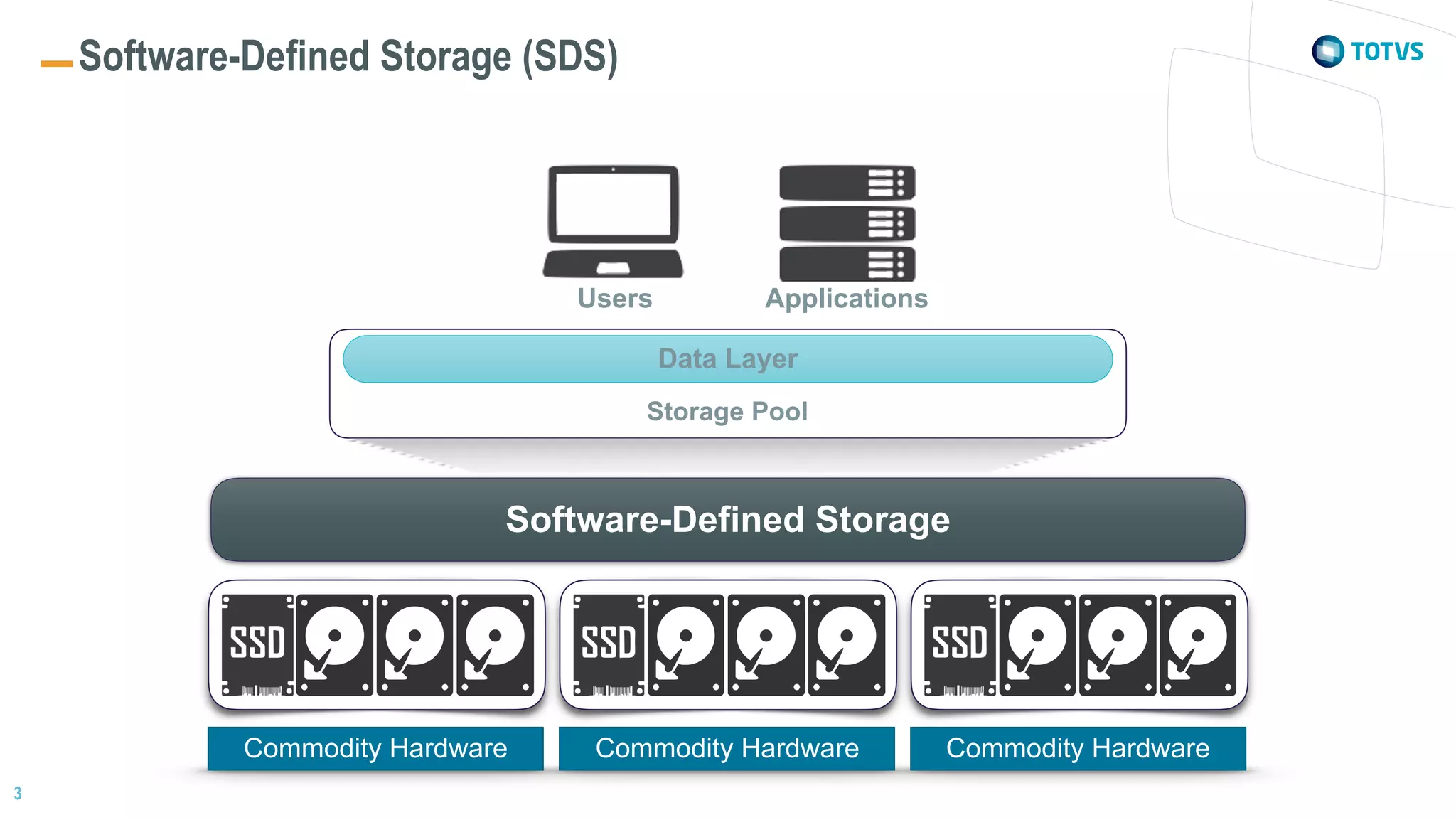

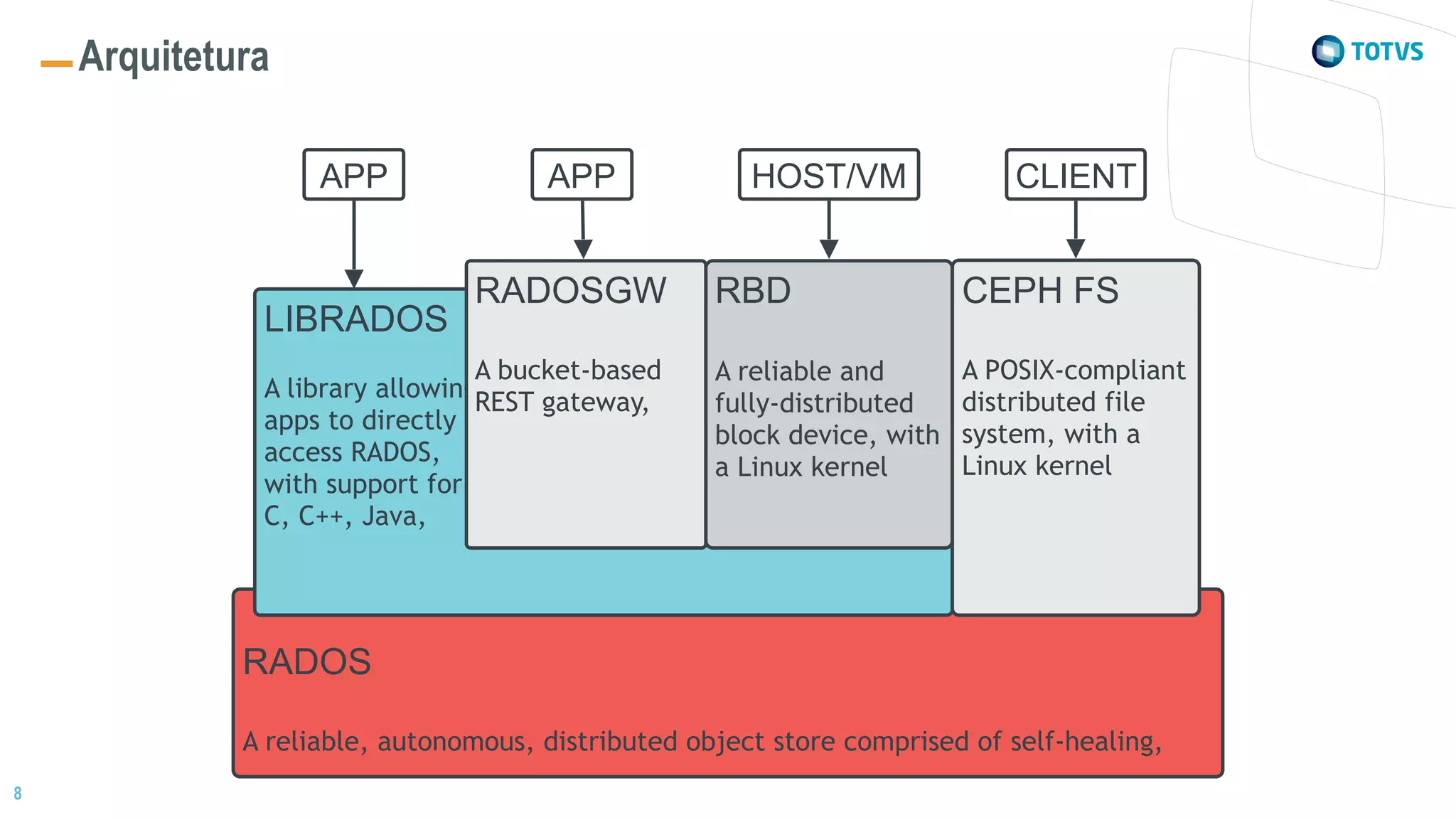

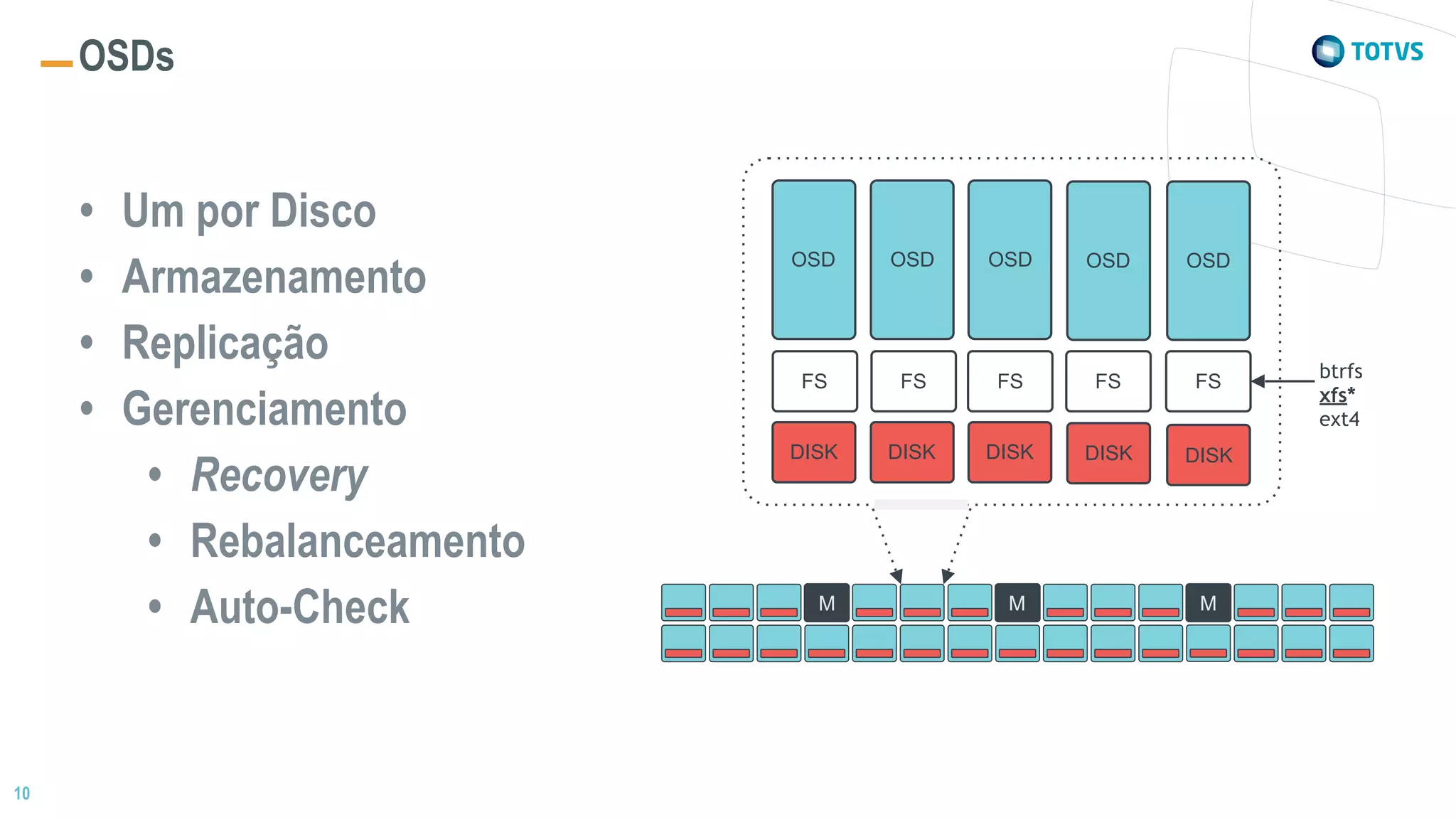

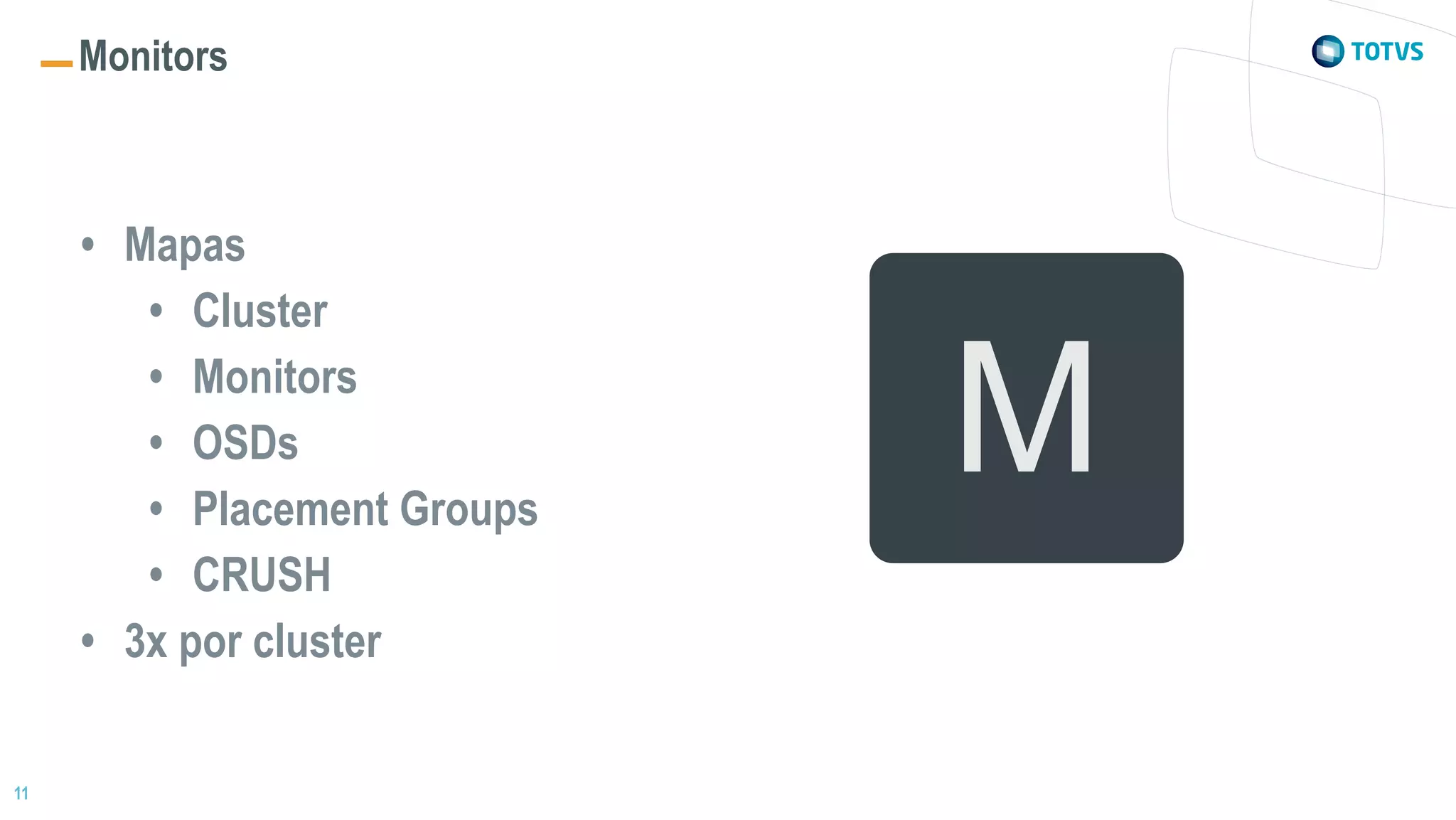

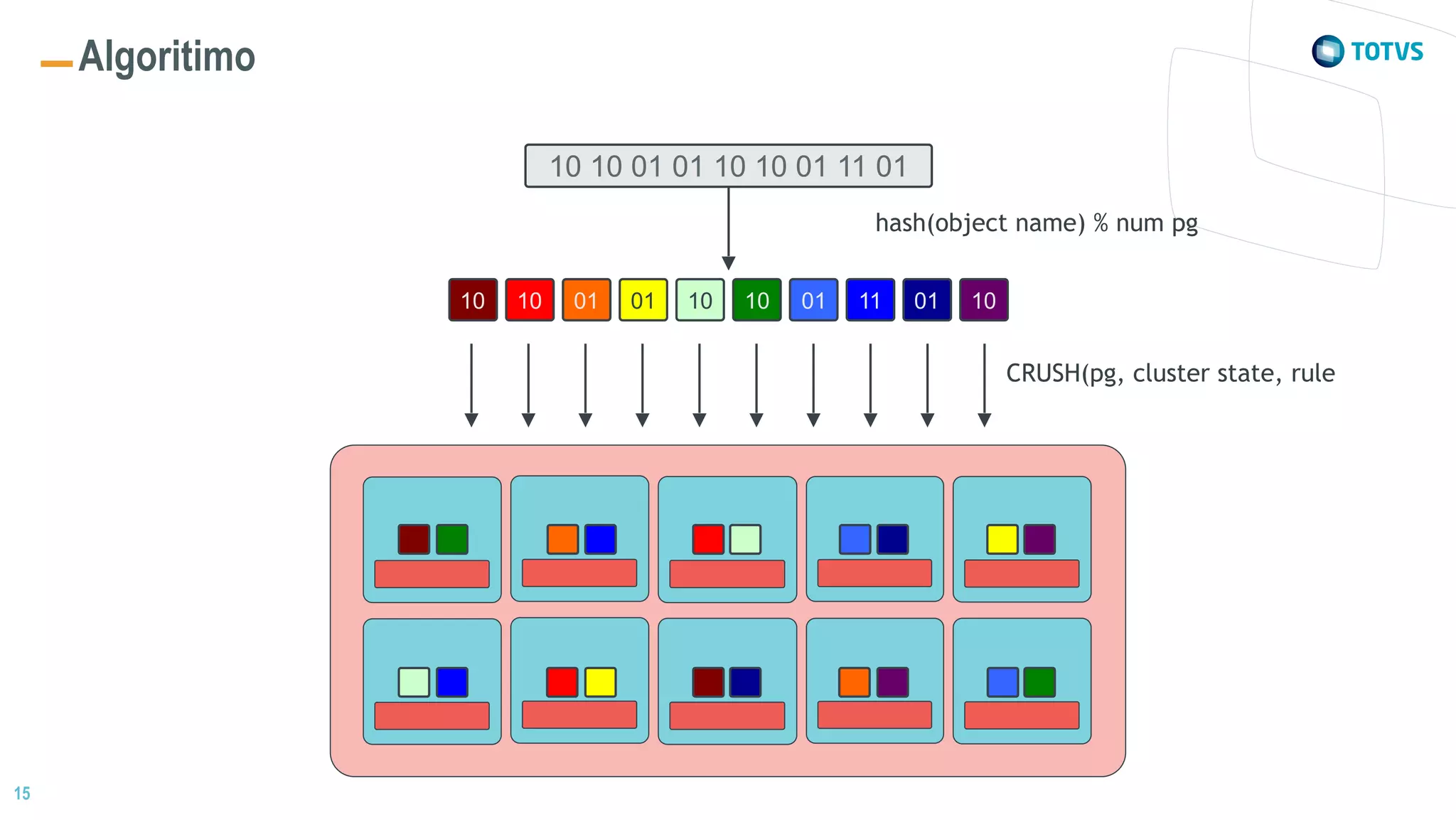

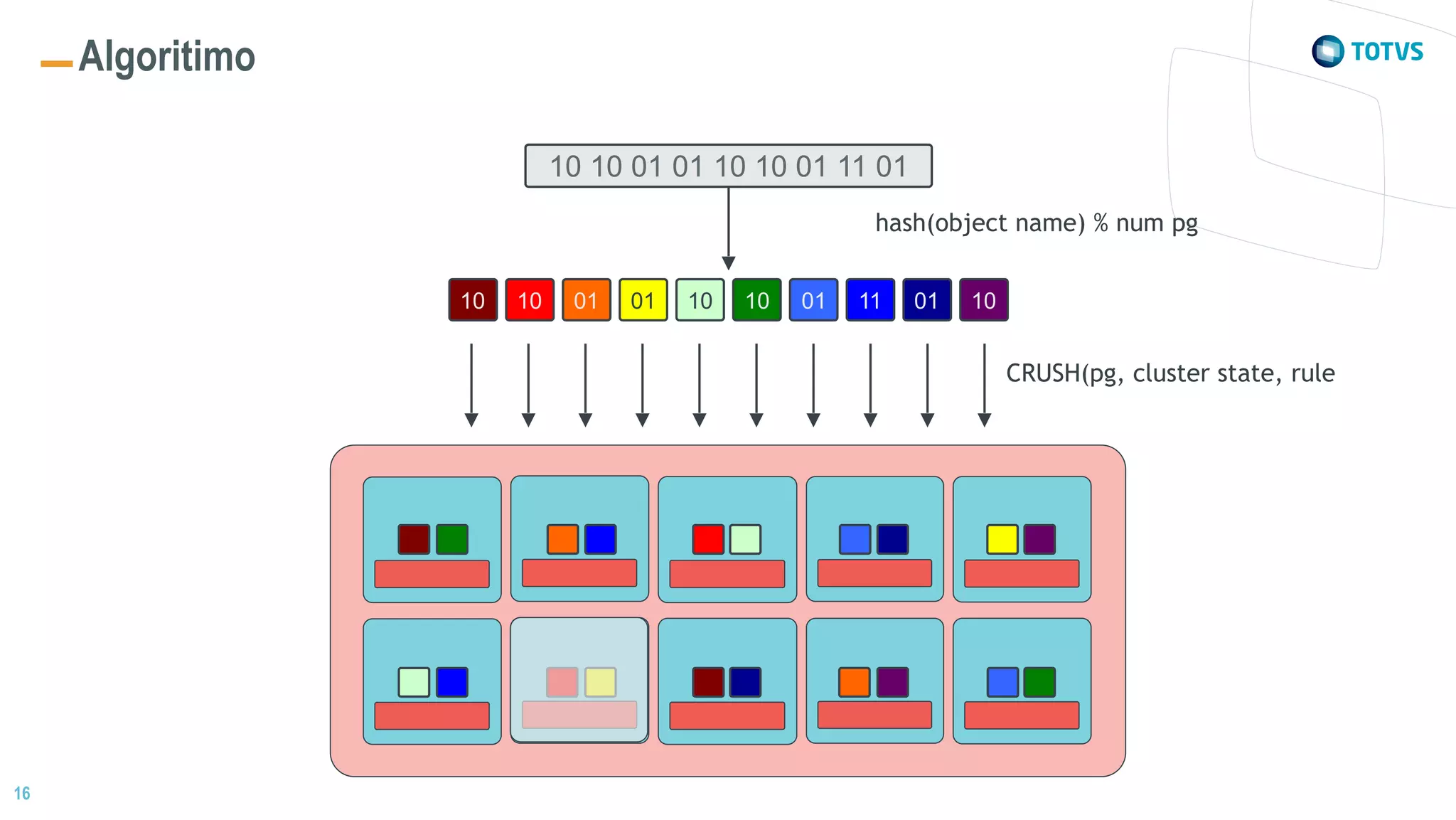

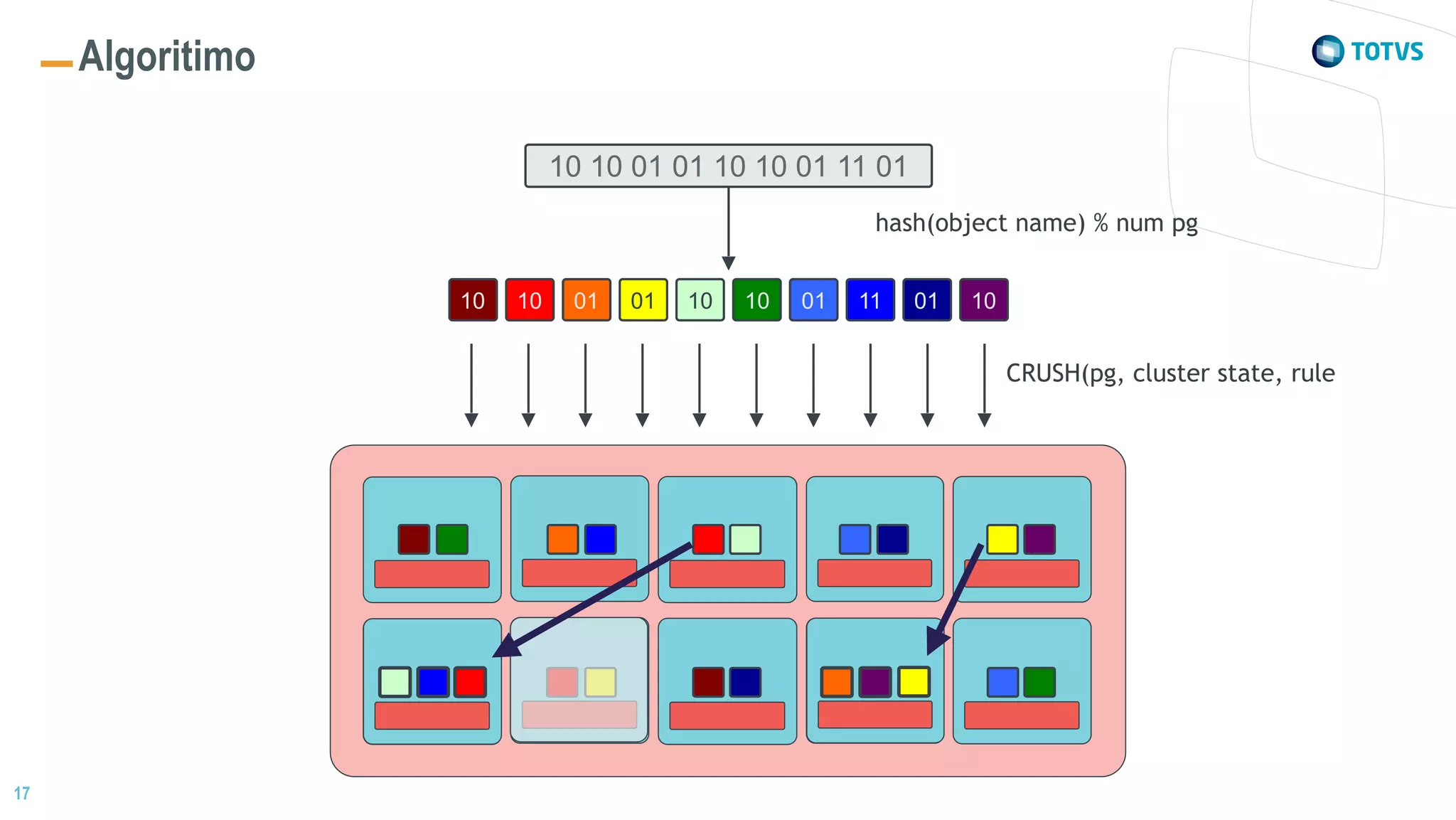

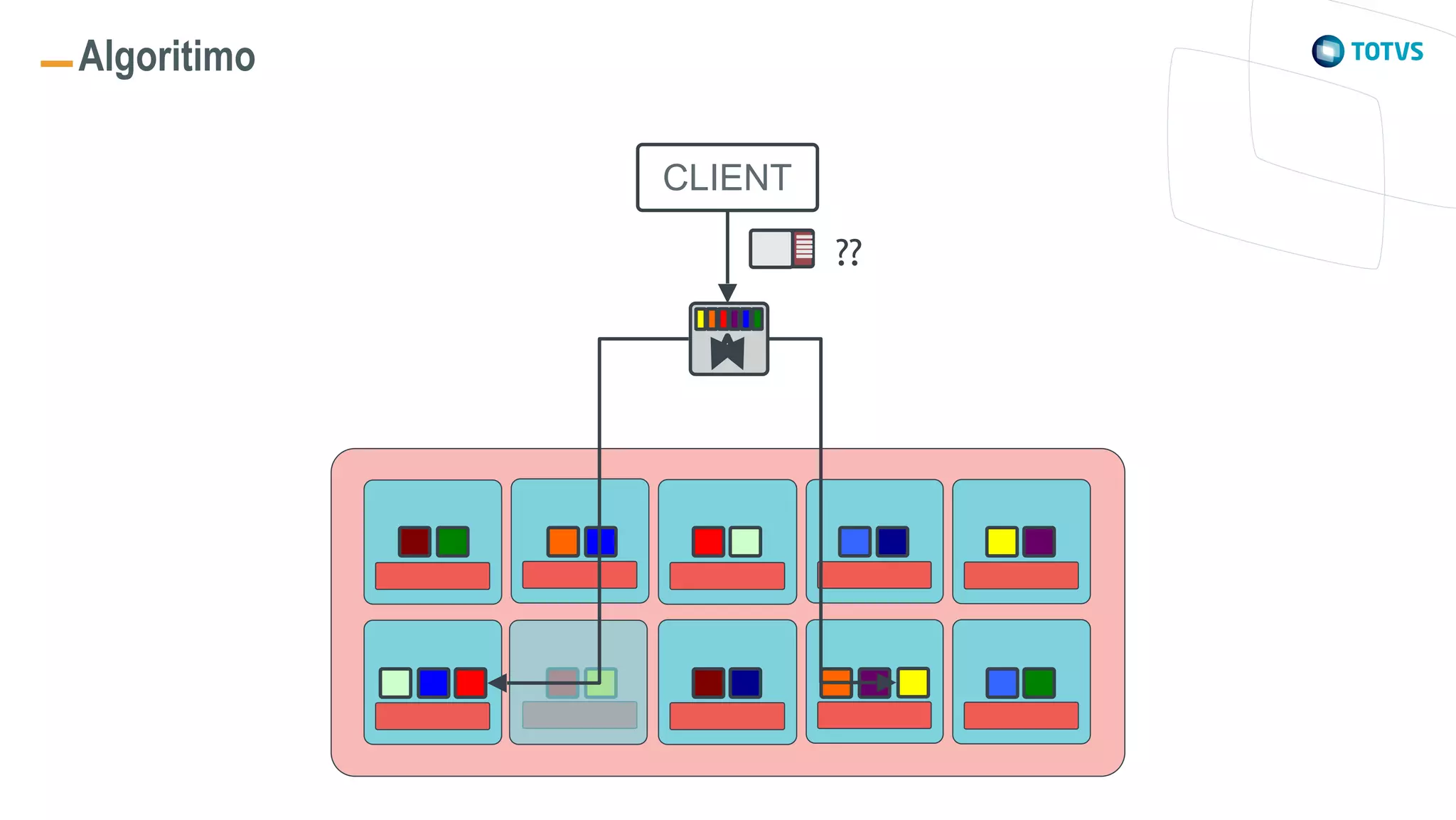

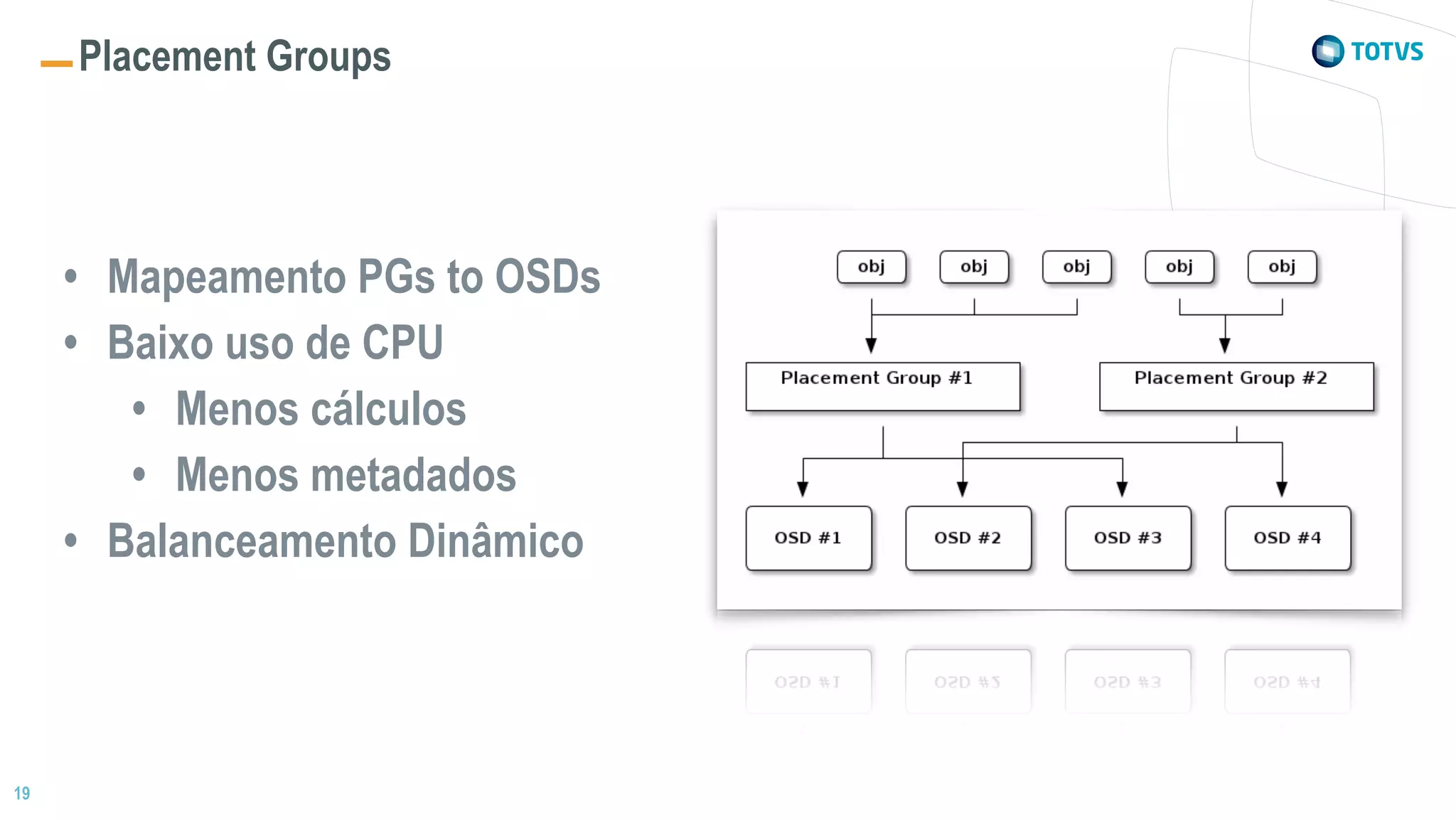

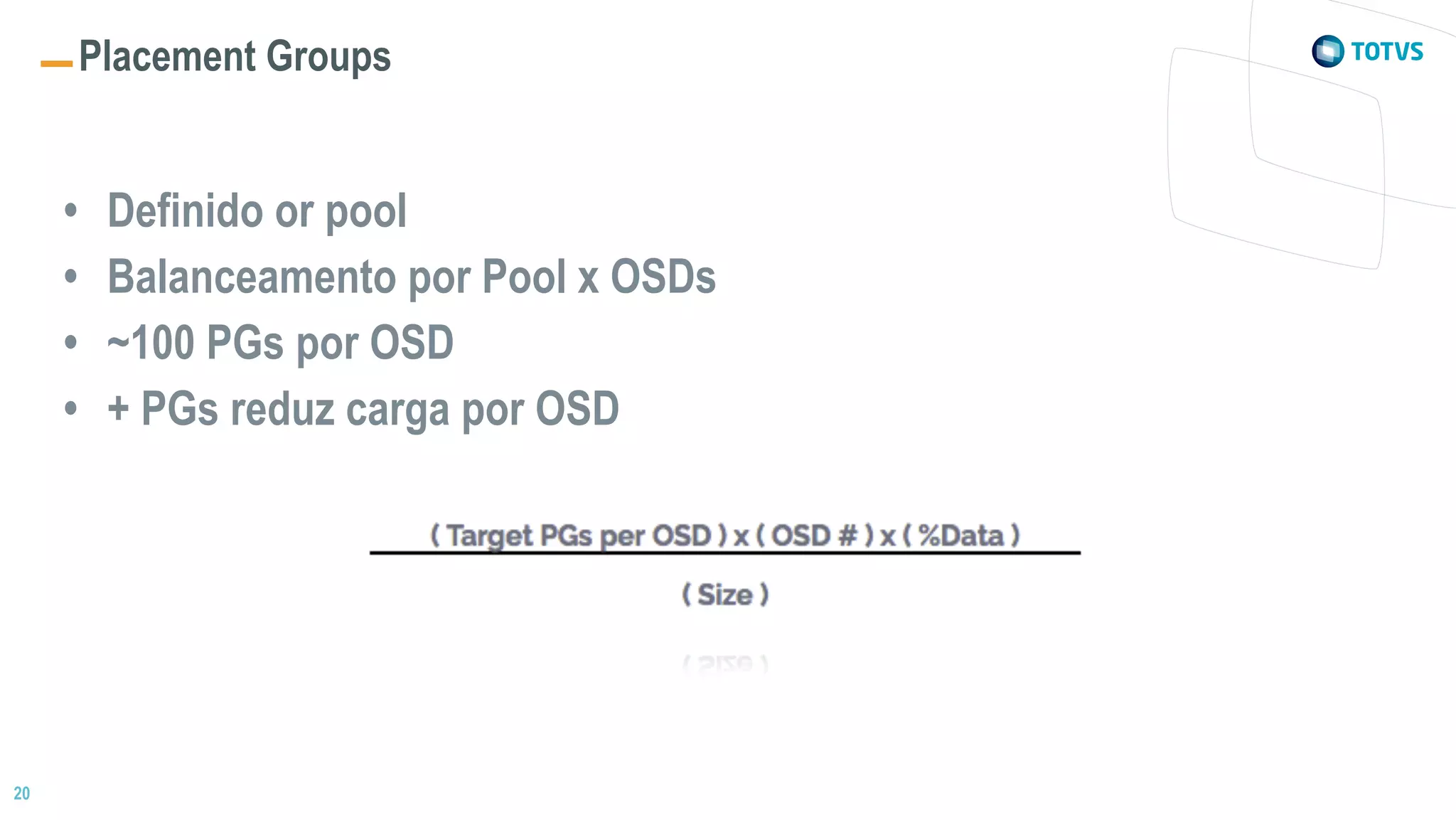

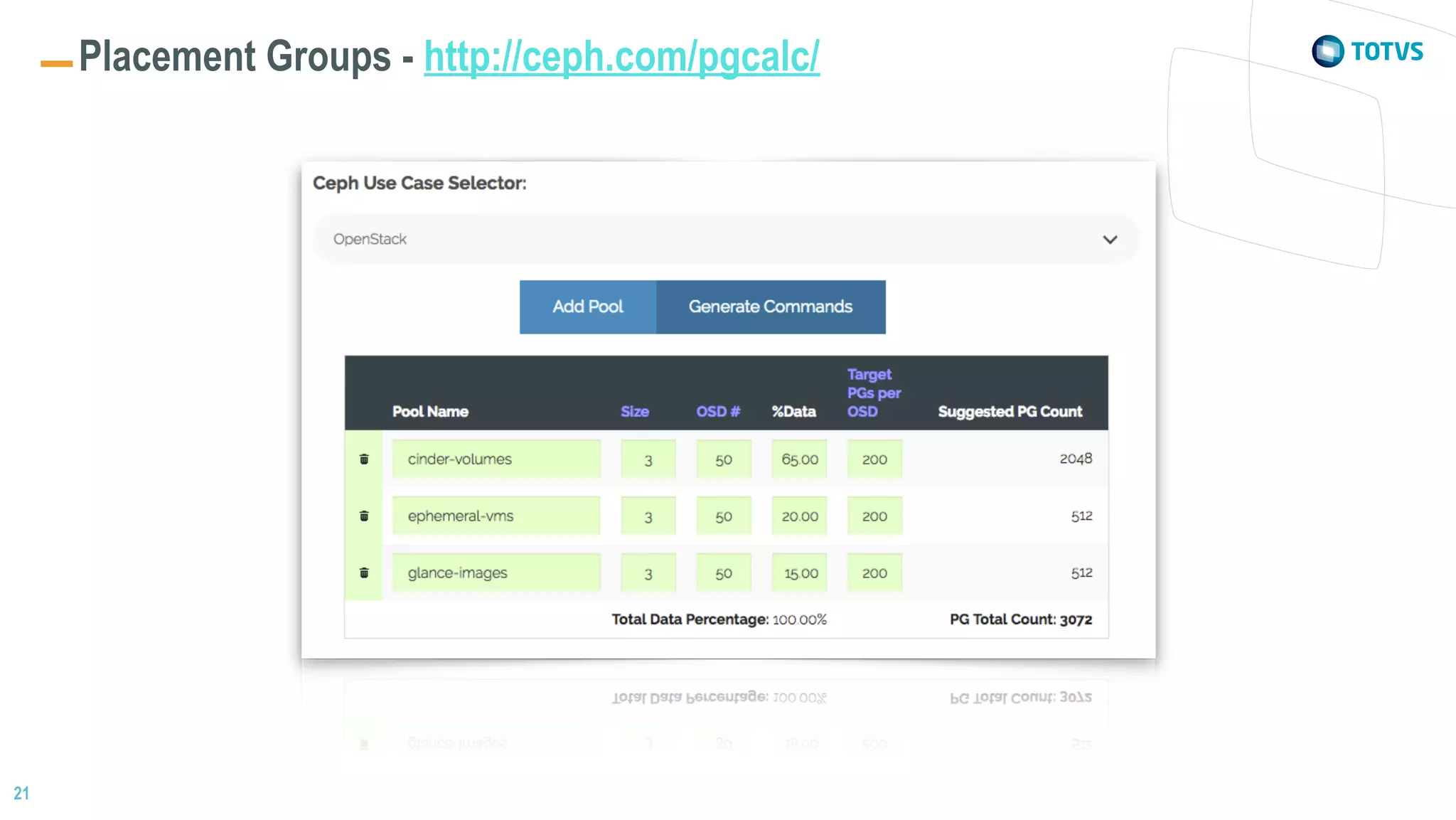

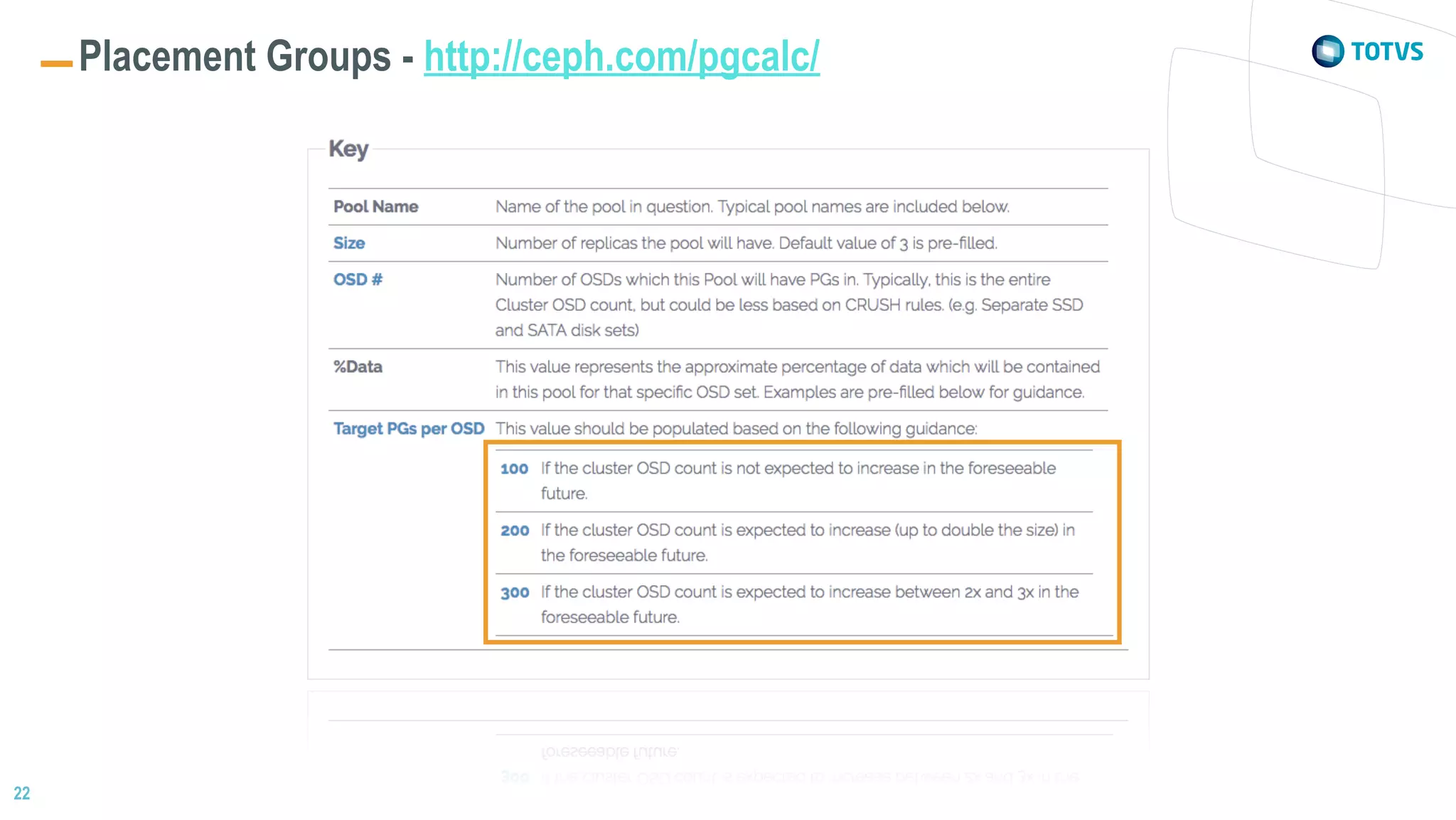

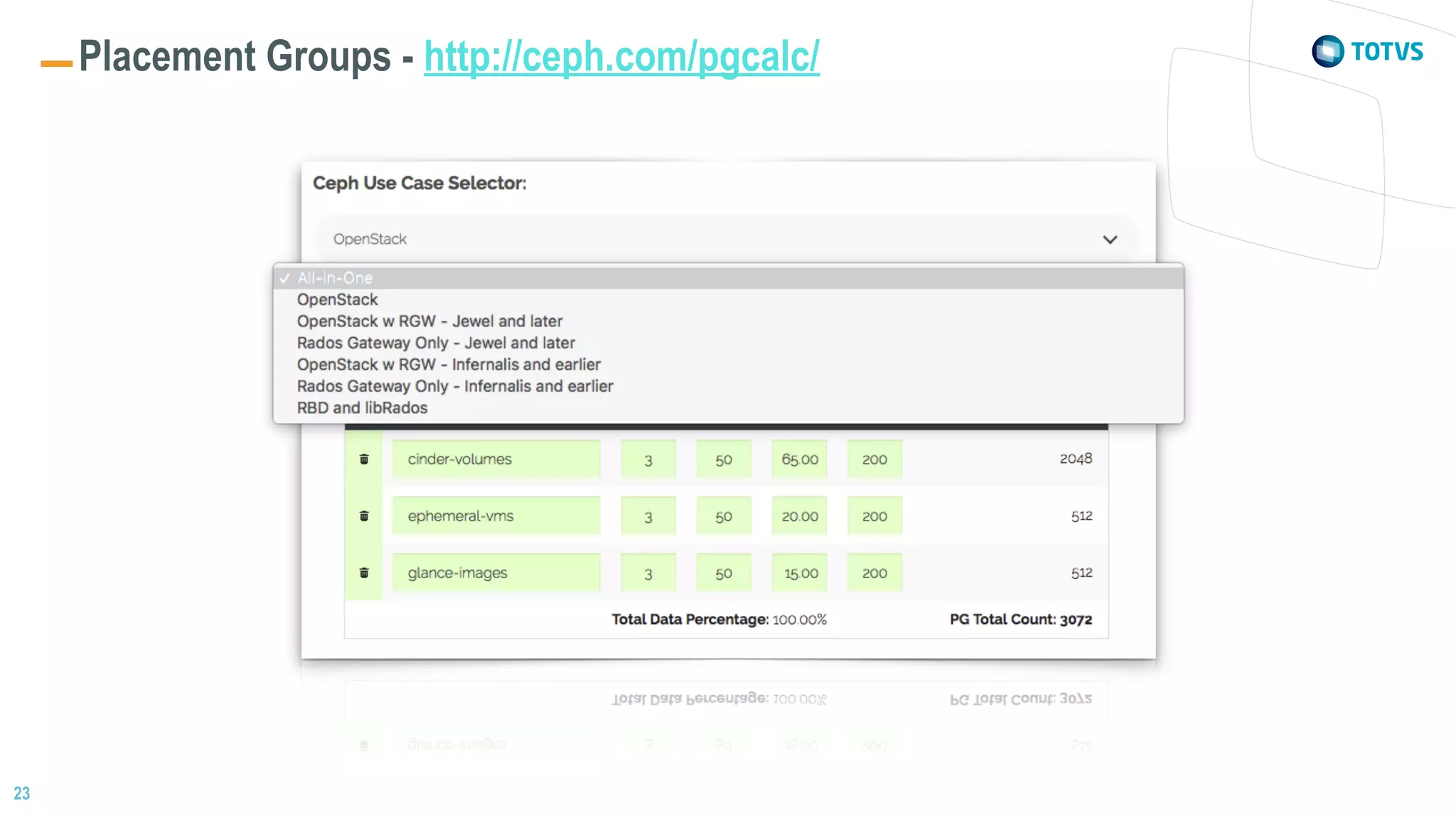

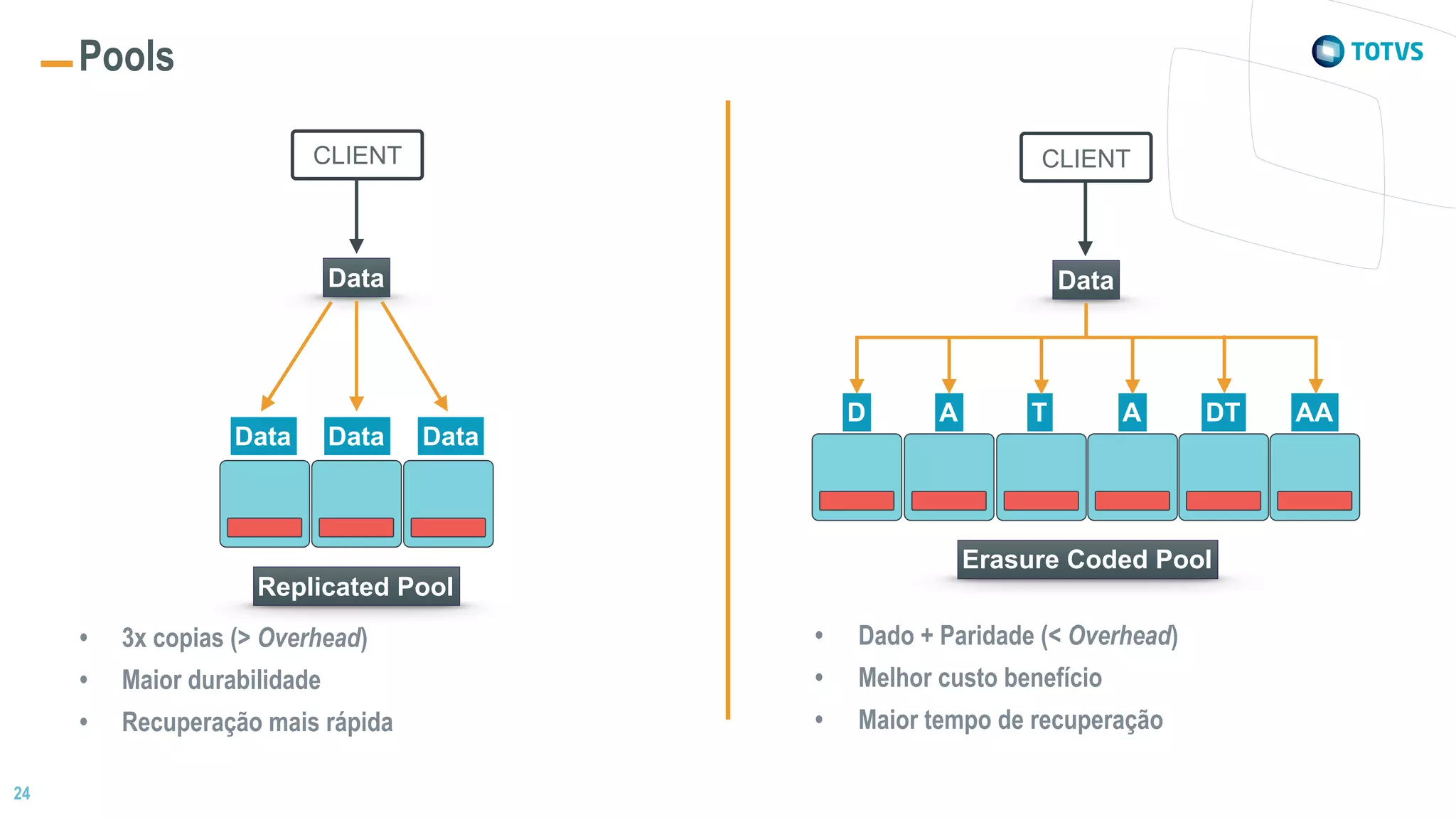

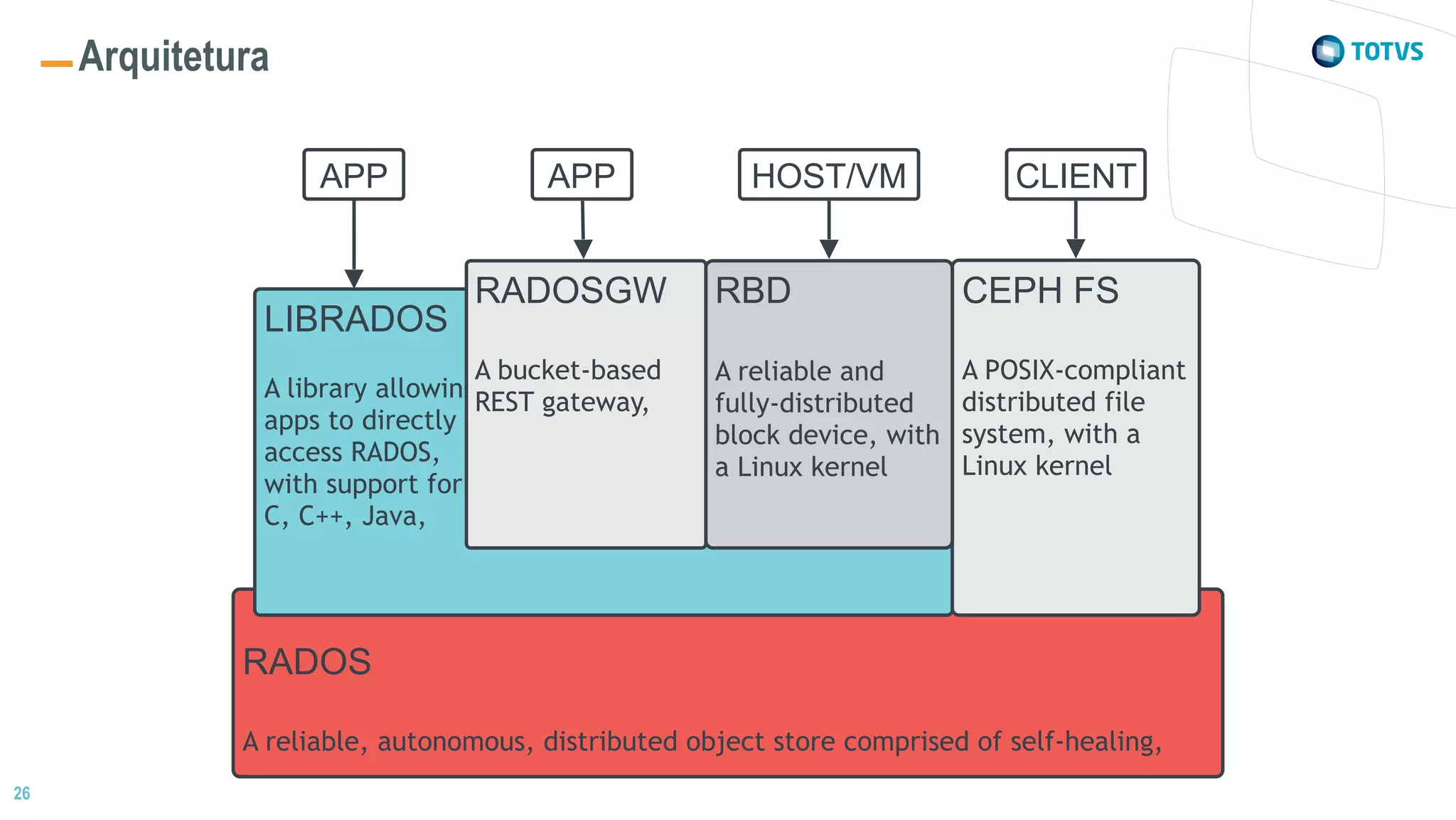

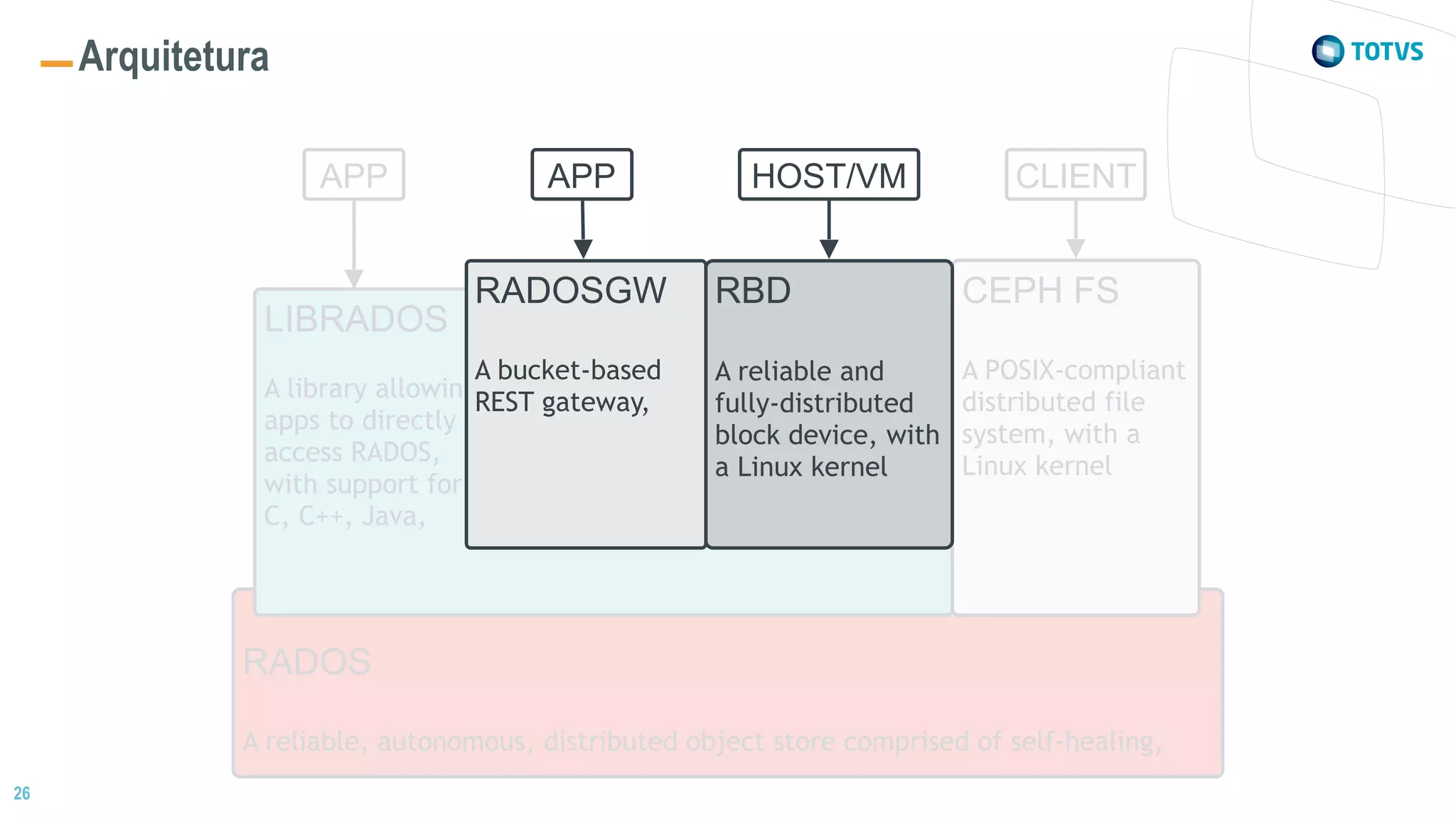

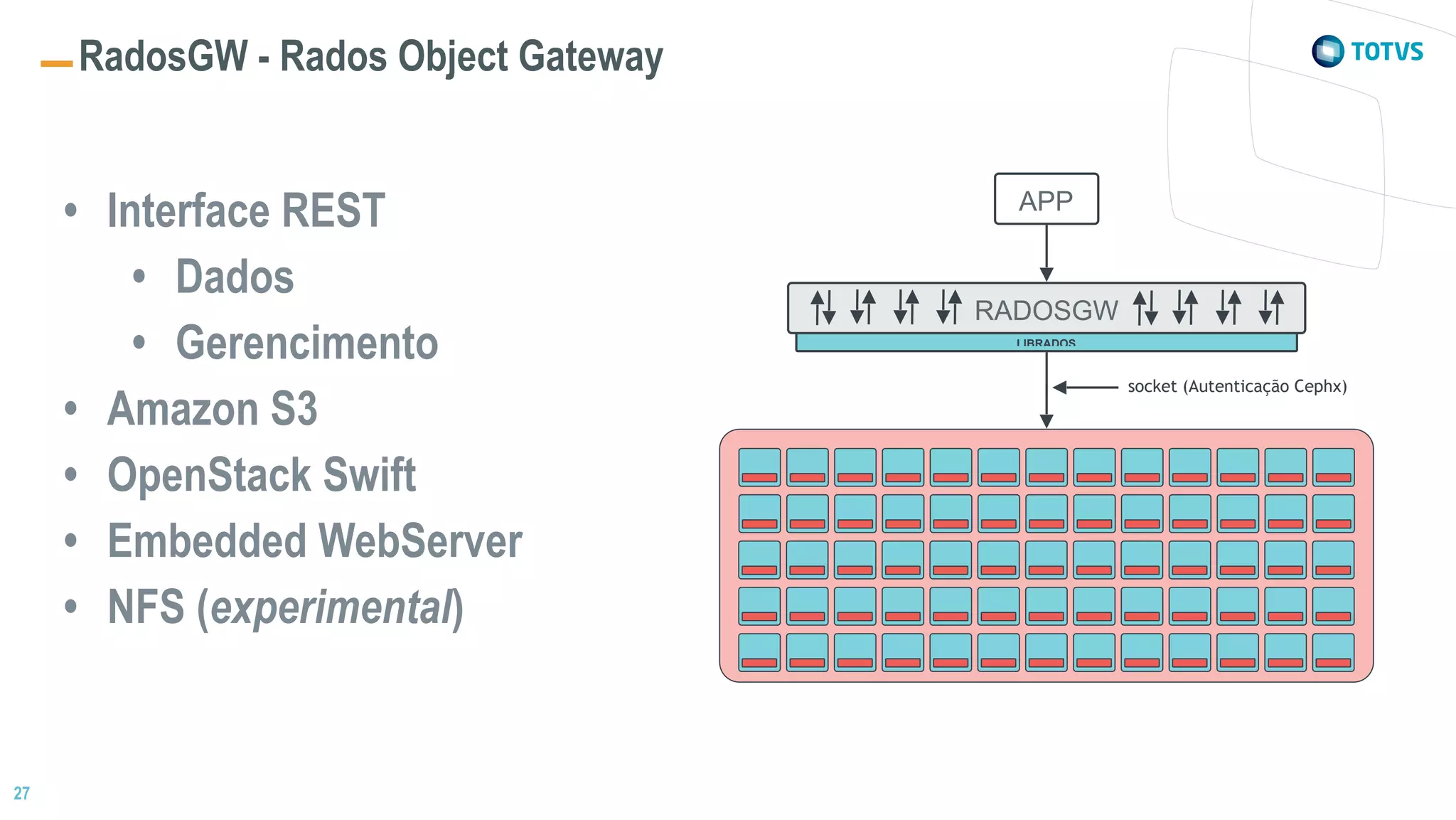

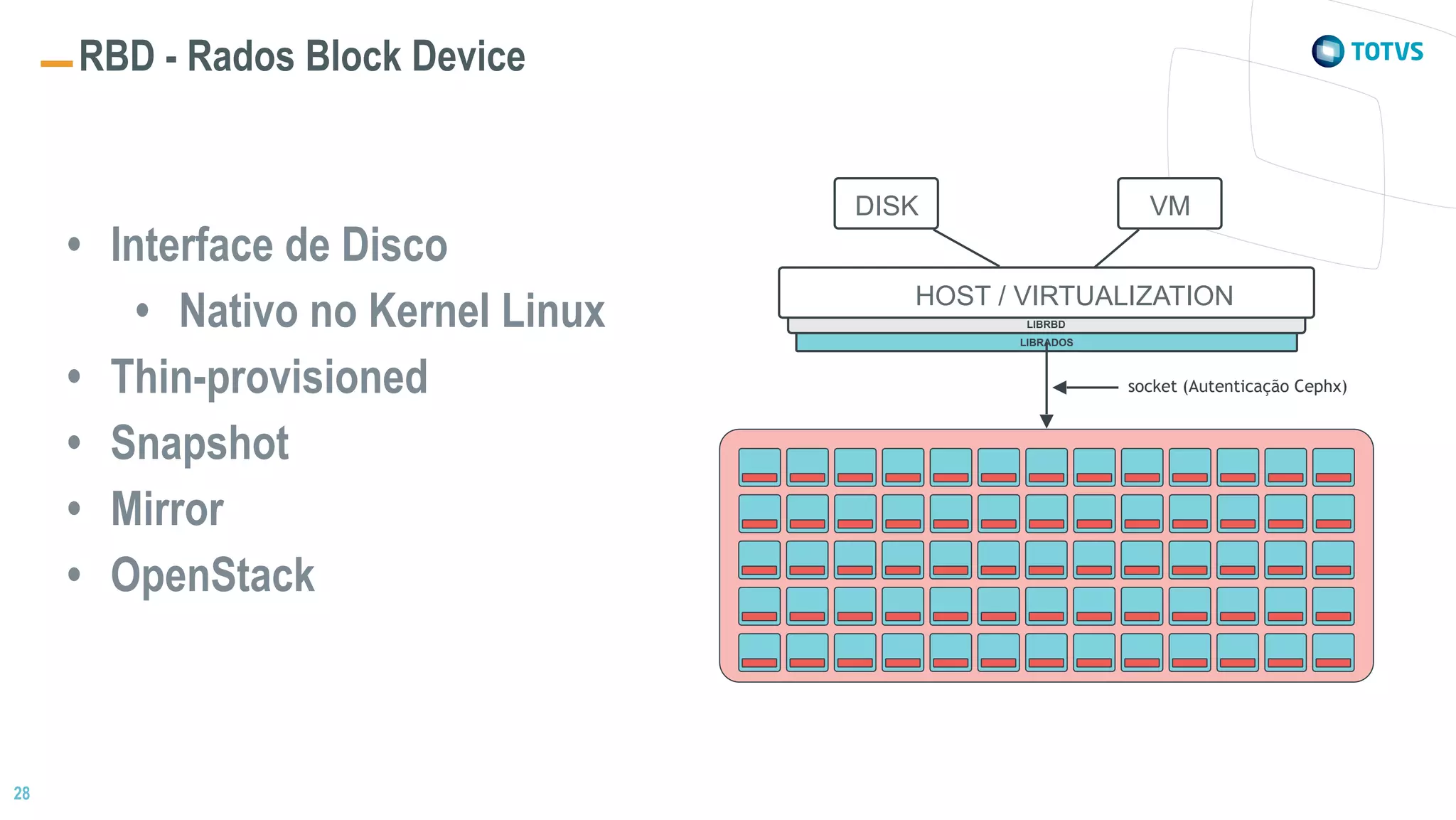

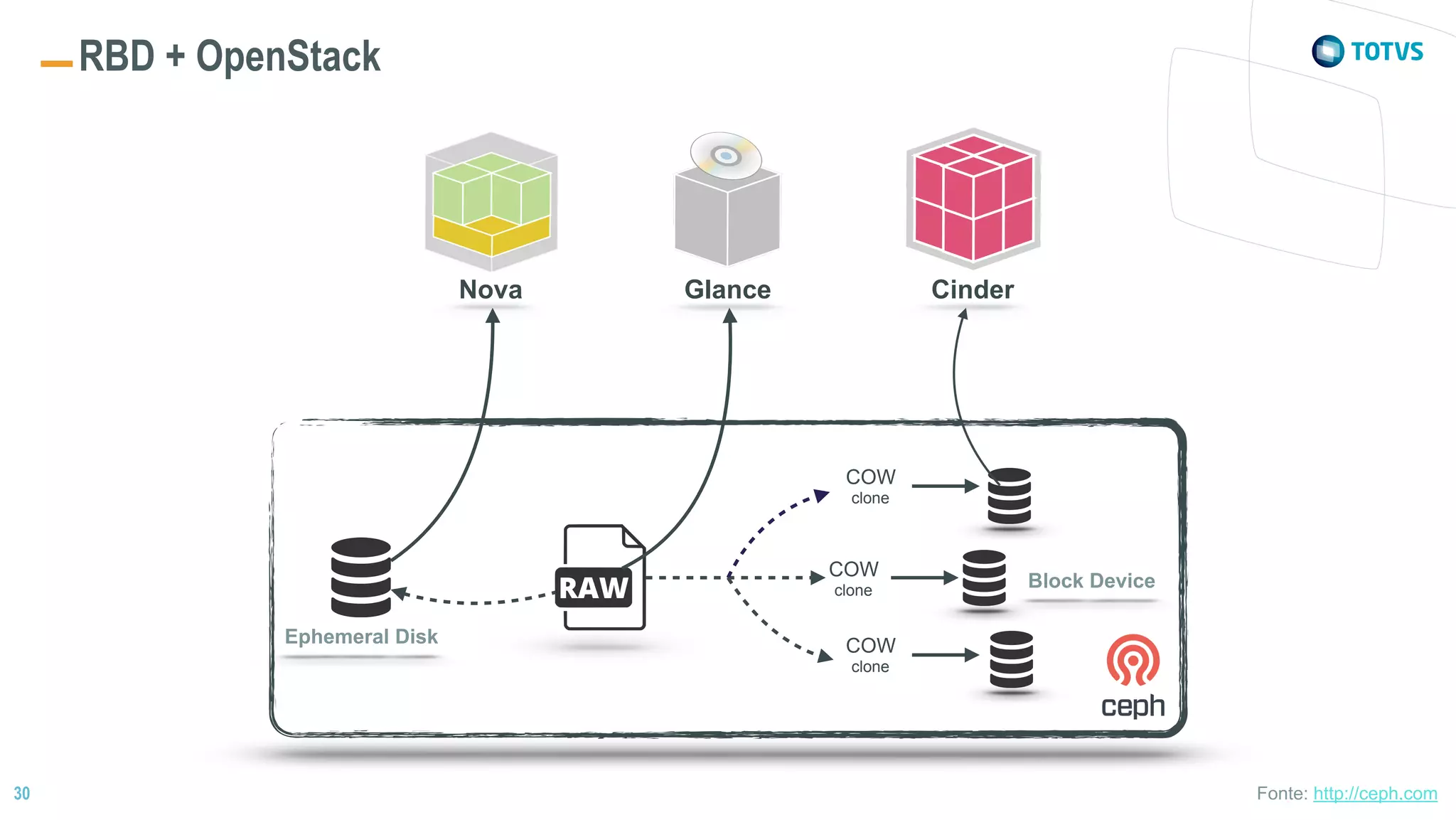

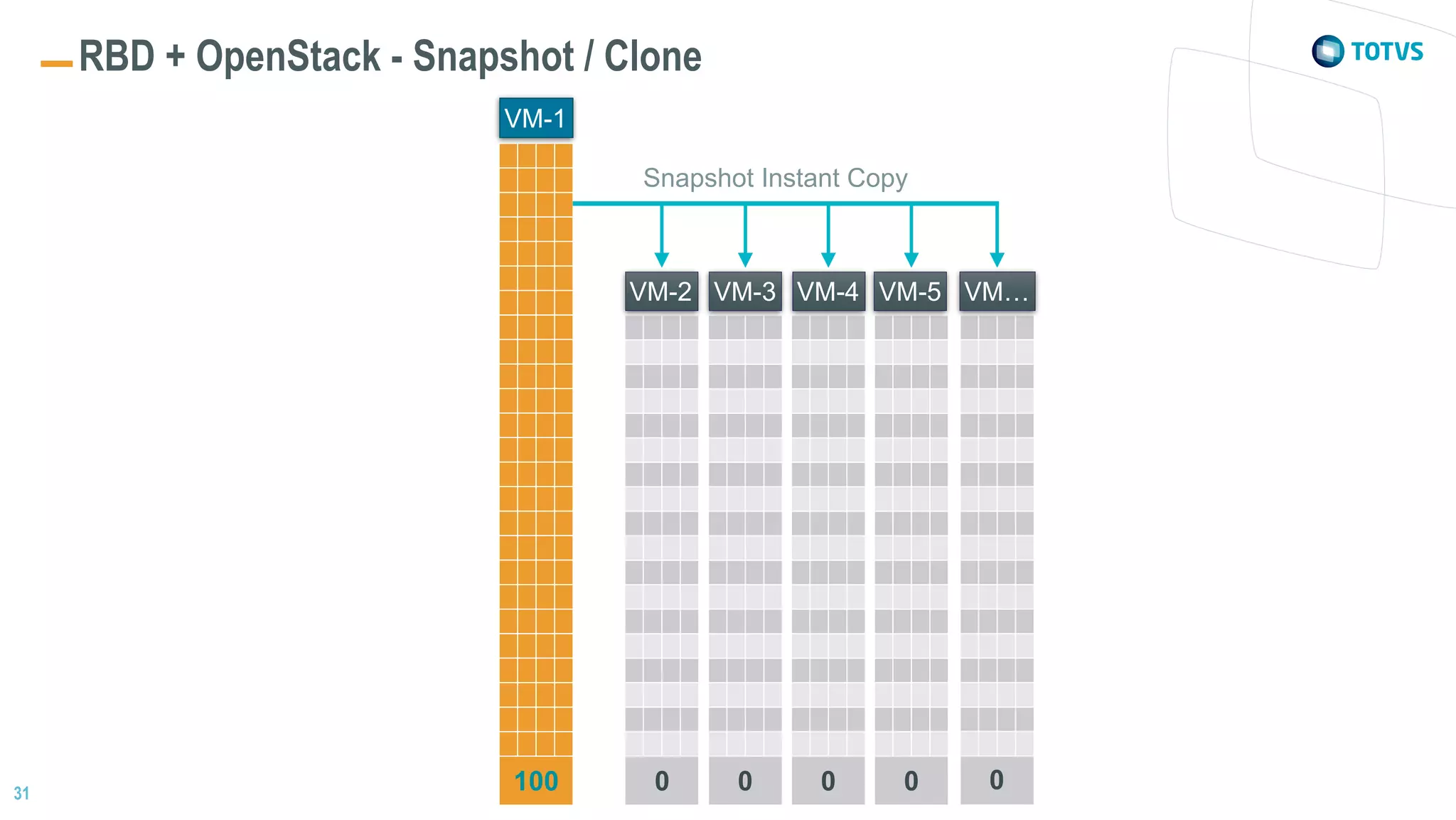

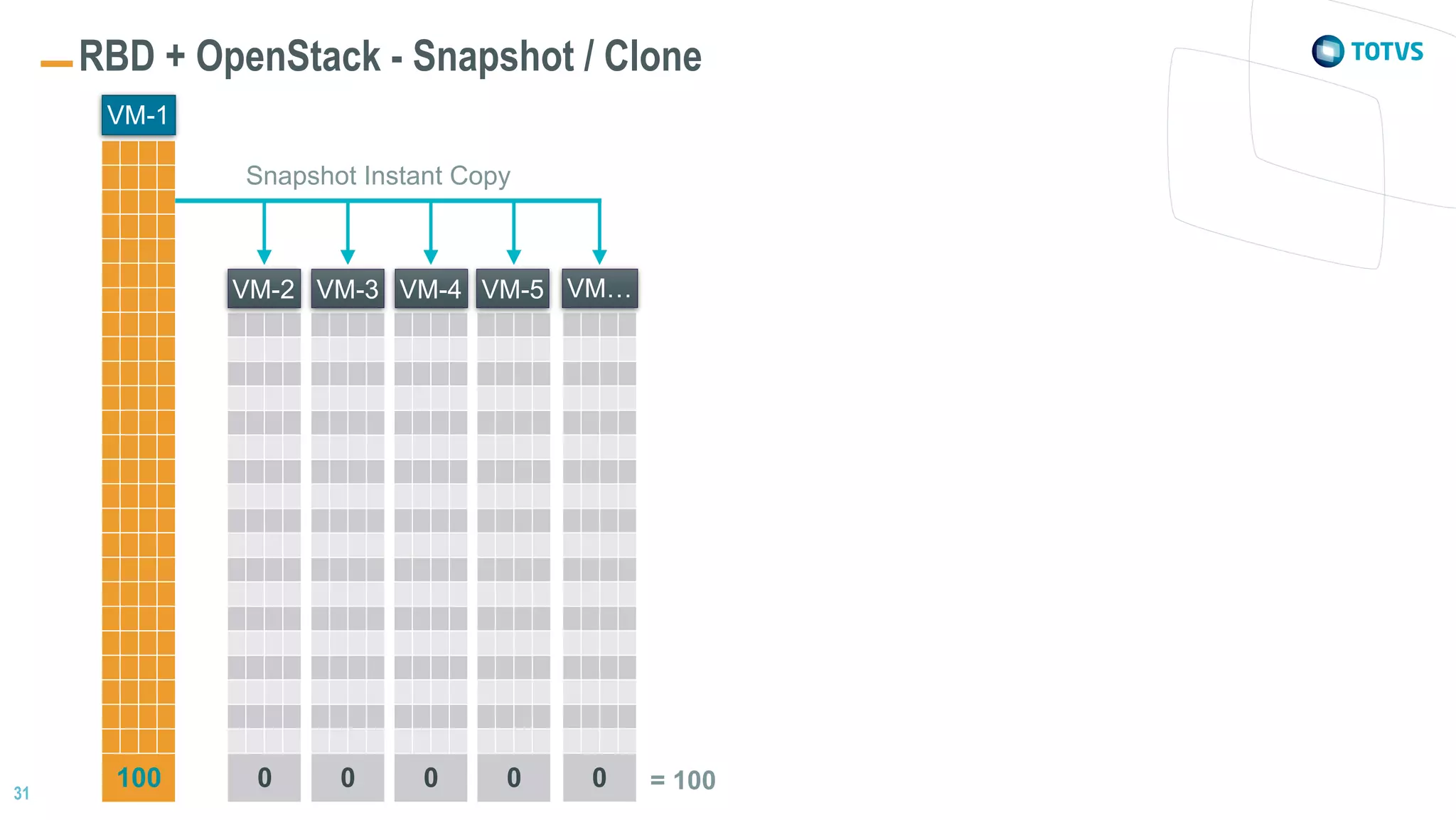

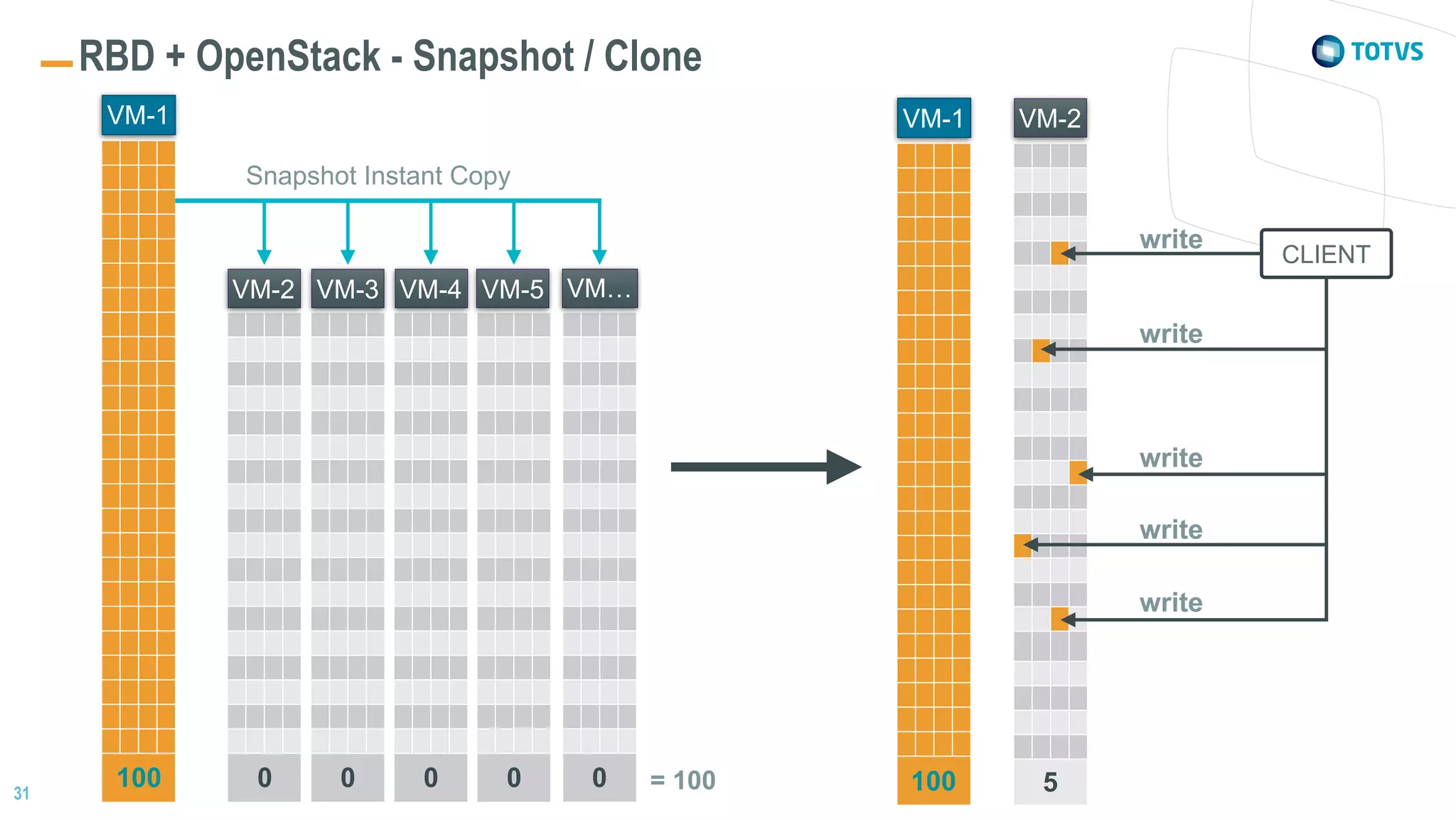

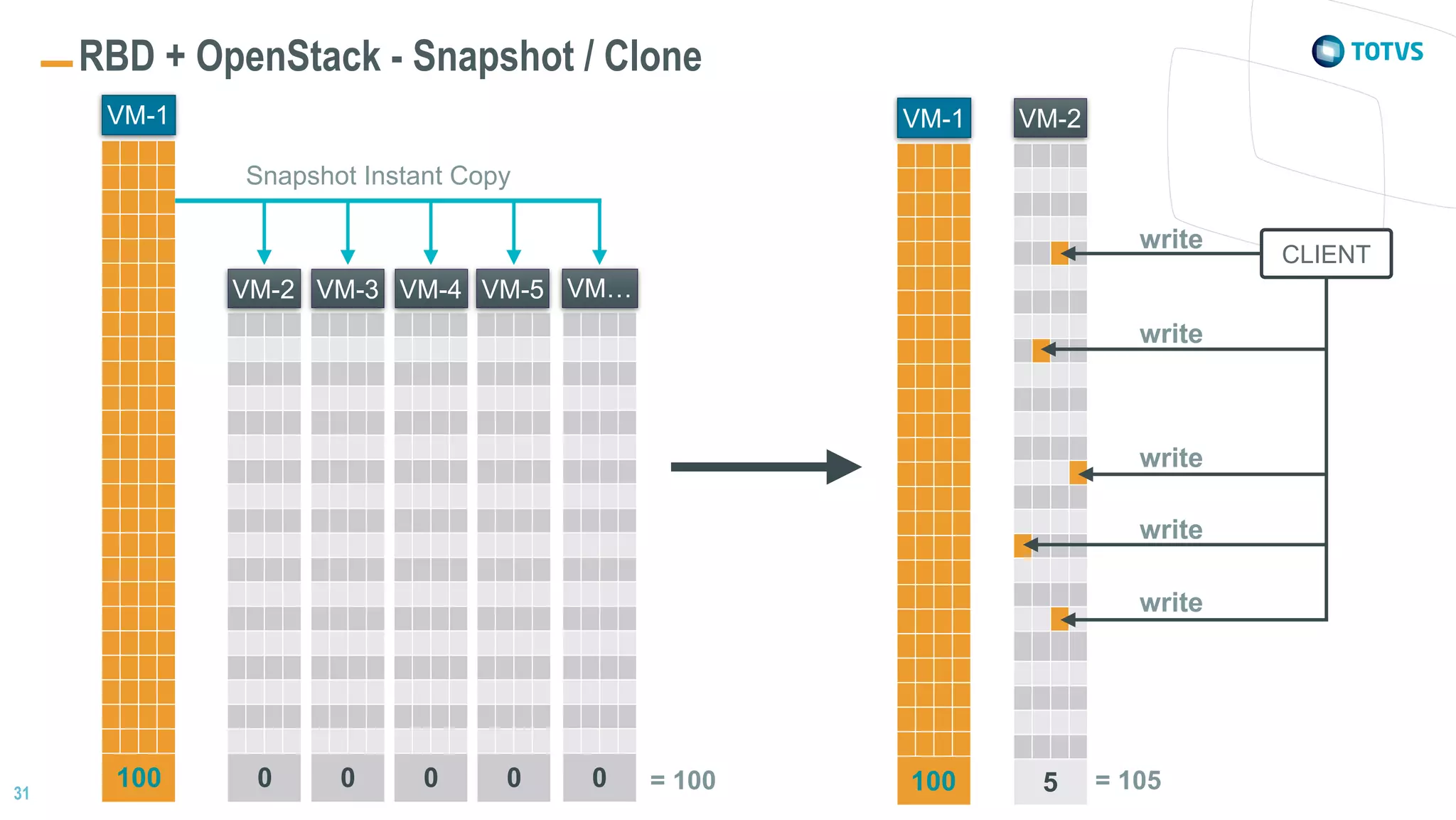

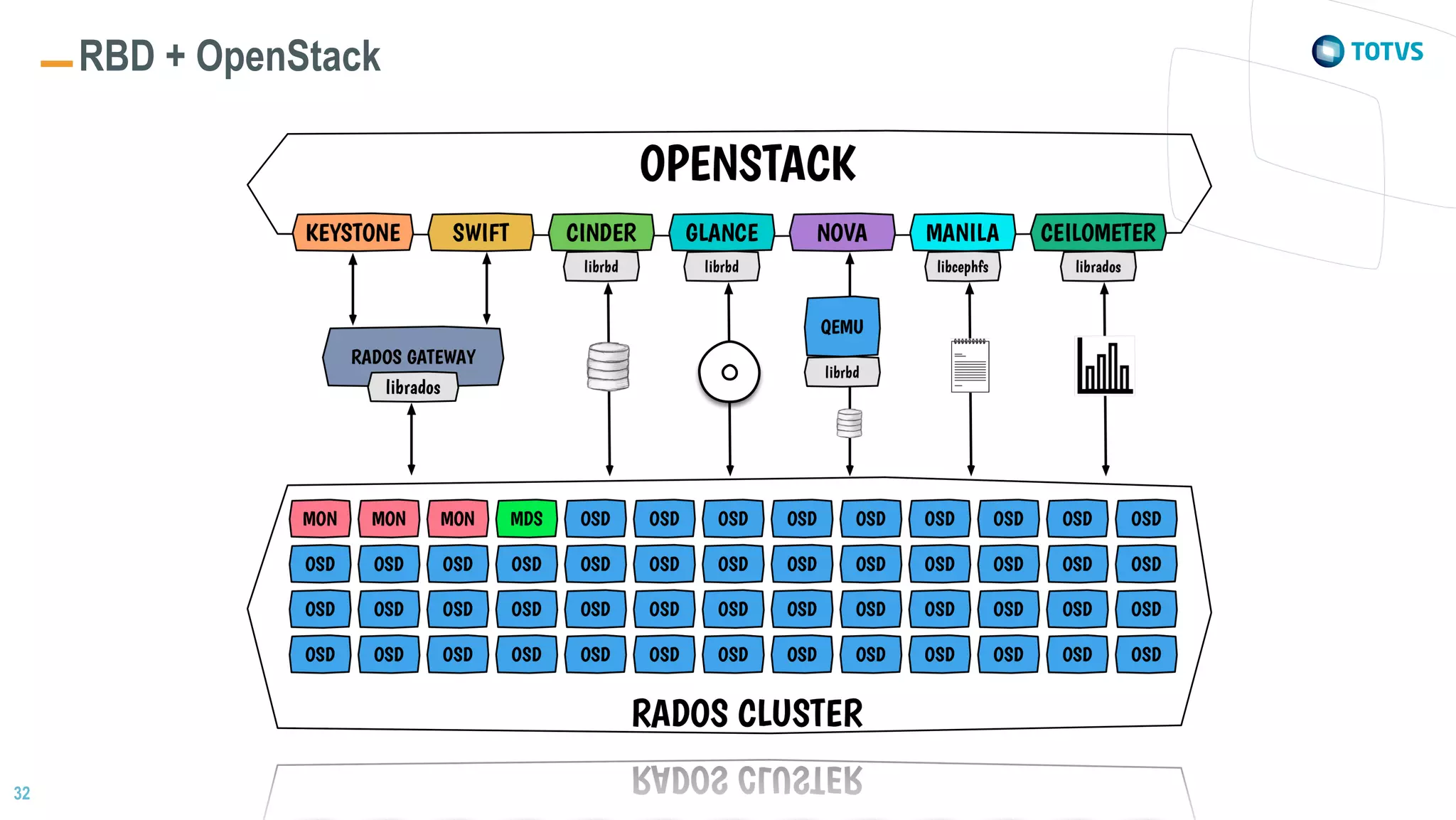

Ceph is an open-source, software-defined storage system that provides scalable, fault-tolerant storage. It is built on RADOS, a distributed object store that handles data replication and recovery. On top of RADOS, Ceph exposes several interfaces: RBD for block storage, CephFS for file storage, and RADOSGW for object storage. Ceph uses the CRUSH algorithm to deterministically map data to storage devices across the cluster, and it groups objects into placement groups to balance data and limit data movement when the cluster changes. When used with OpenStack, Ceph provides block storage volumes to instances through RBD and supports snapshotting and cloning of those volumes, which helps with availability and fast provisioning.
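
To make the placement idea concrete, here is a minimal sketch of a two-step, deterministic mapping: an object name is hashed to a placement group, and each placement group is mapped to a set of storage daemons (OSDs). This is not the CRUSH algorithm itself. It substitutes rendezvous (highest-random-weight) hashing for CRUSH, uses SHA-256 rather than Ceph's own hash function, and ignores weights and failure domains. The function names, the OSD labels, and the replica count of 3 are all assumptions chosen for illustration.

```python
import hashlib


def stable_hash(*parts: str) -> int:
    """Return a 64-bit integer that depends only on the input strings."""
    data = "/".join(parts).encode("utf-8")
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


def object_to_pg(object_name: str, pg_num: int) -> int:
    """Step 1: hash an object name to a placement group (PG) id."""
    return stable_hash(object_name) % pg_num


def pg_to_osds(pg_id: int, osds: list[str], replicas: int = 3) -> list[str]:
    """Step 2: pick `replicas` distinct OSDs for a PG.

    Illustrative stand-in for CRUSH: score every OSD against the PG id
    and keep the highest-scoring ones (rendezvous hashing).
    """
    ranked = sorted(osds, key=lambda osd: stable_hash(str(pg_id), osd), reverse=True)
    return ranked[:replicas]


def locate(object_name: str, pg_num: int, osds: list[str], replicas: int = 3):
    """Compute where an object lives with no lookup table, only hashing."""
    pg_id = object_to_pg(object_name, pg_num)
    return pg_id, pg_to_osds(pg_id, osds, replicas)


if __name__ == "__main__":
    # Hypothetical cluster of six OSDs and 64 placement groups.
    cluster = [f"osd.{i}" for i in range(6)]
    pg, targets = locate("volume-1234.chunk-0001", pg_num=64, osds=cluster)
    print(f"PG {pg} -> {targets}")
```

Because every client can compute the same answer from the object name and the list of OSDs, no central lookup service is needed. In this sketch, removing an OSD only changes the placement of the groups that were stored on it, which mirrors the goal of limiting data movement described above.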