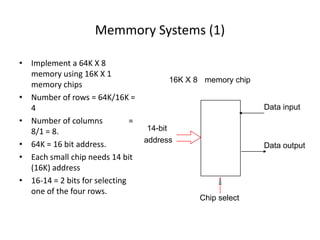

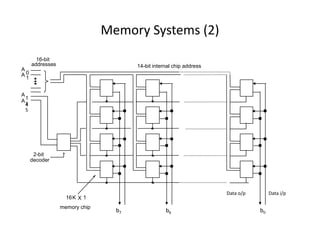

The document discusses several aspects of computer memory systems, including main memory, cache memory, and memory mapping techniques. It provides details on:

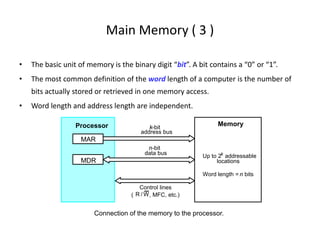

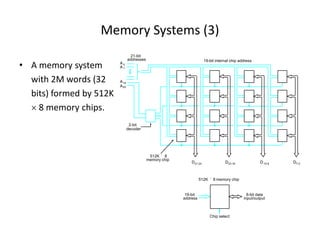

1) Main memory stores programs and data during execution and consists of addressable memory cells. Memory access time is the time from the start of a memory operation to its completion, while cycle time is the minimum delay between the starts of two successive operations.
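The difference between access time and cycle time can be made concrete with a little arithmetic. The sketch below is illustrative only: the function names and the example figures (60 ns access time, 100 ns cycle time) are assumptions rather than values from the document, and it models a single memory unit with no interleaving or overlap.

```python
def peak_operations_per_second(cycle_time_ns: float) -> float:
    """Upper bound on back-to-back operations per second for one memory unit.

    A new operation cannot start until the cycle time has elapsed,
    so the peak rate is the reciprocal of the cycle time.
    """
    return 1e9 / cycle_time_ns


def time_for_accesses_ns(n: int, access_time_ns: float, cycle_time_ns: float) -> float:
    """Time until the n-th of n back-to-back operations completes.

    Assumes cycle_time_ns >= access_time_ns: each operation starts one
    cycle time after the previous one, and the last one still needs a
    full access time to finish.
    """
    if n <= 0:
        return 0.0
    return (n - 1) * cycle_time_ns + access_time_ns


if __name__ == "__main__":
    # Hypothetical figures chosen only for illustration.
    access_ns, cycle_ns = 60.0, 100.0
    print(peak_operations_per_second(cycle_ns))
    print(time_for_accesses_ns(4, access_ns, cycle_ns))
```

With these assumed figures, a single access is governed by the access time, while a run of accesses is governed almost entirely by the cycle time.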

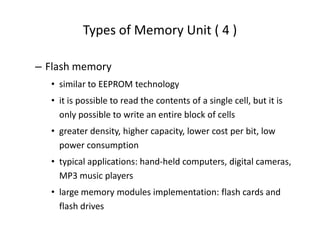

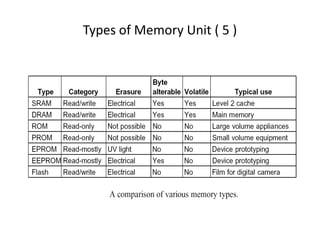

2) Memory units include RAM, ROM, PROM, EPROM, EEPROM, and flash memory, which differ in characteristics such as volatility and whether their contents can be rewritten.

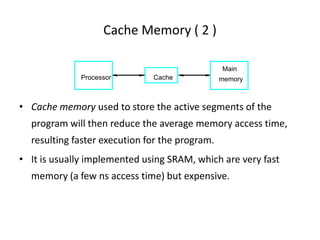

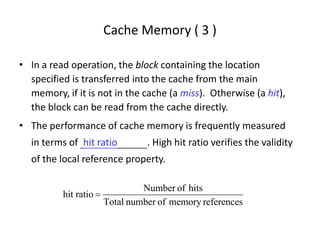

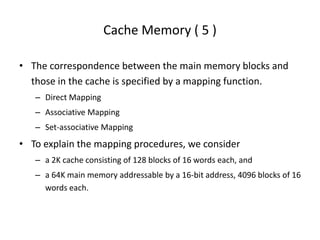

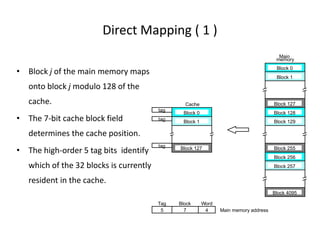

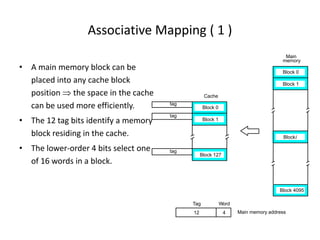

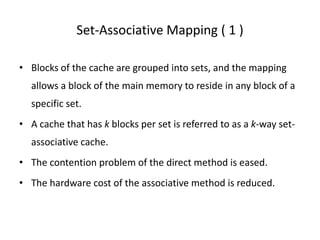

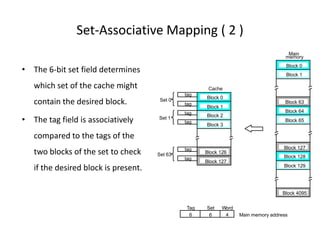

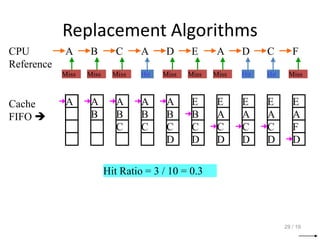

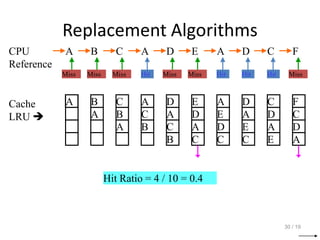

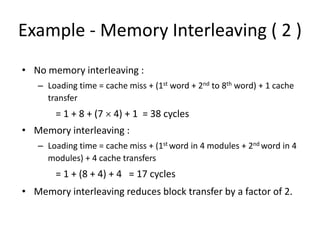

3) Cache memory uses fast SRAM to improve performance by exploiting locality of reference: programs tend to access addresses at or near those they accessed recently. Mapping techniques such as direct, associative, and set-associative mapping determine how