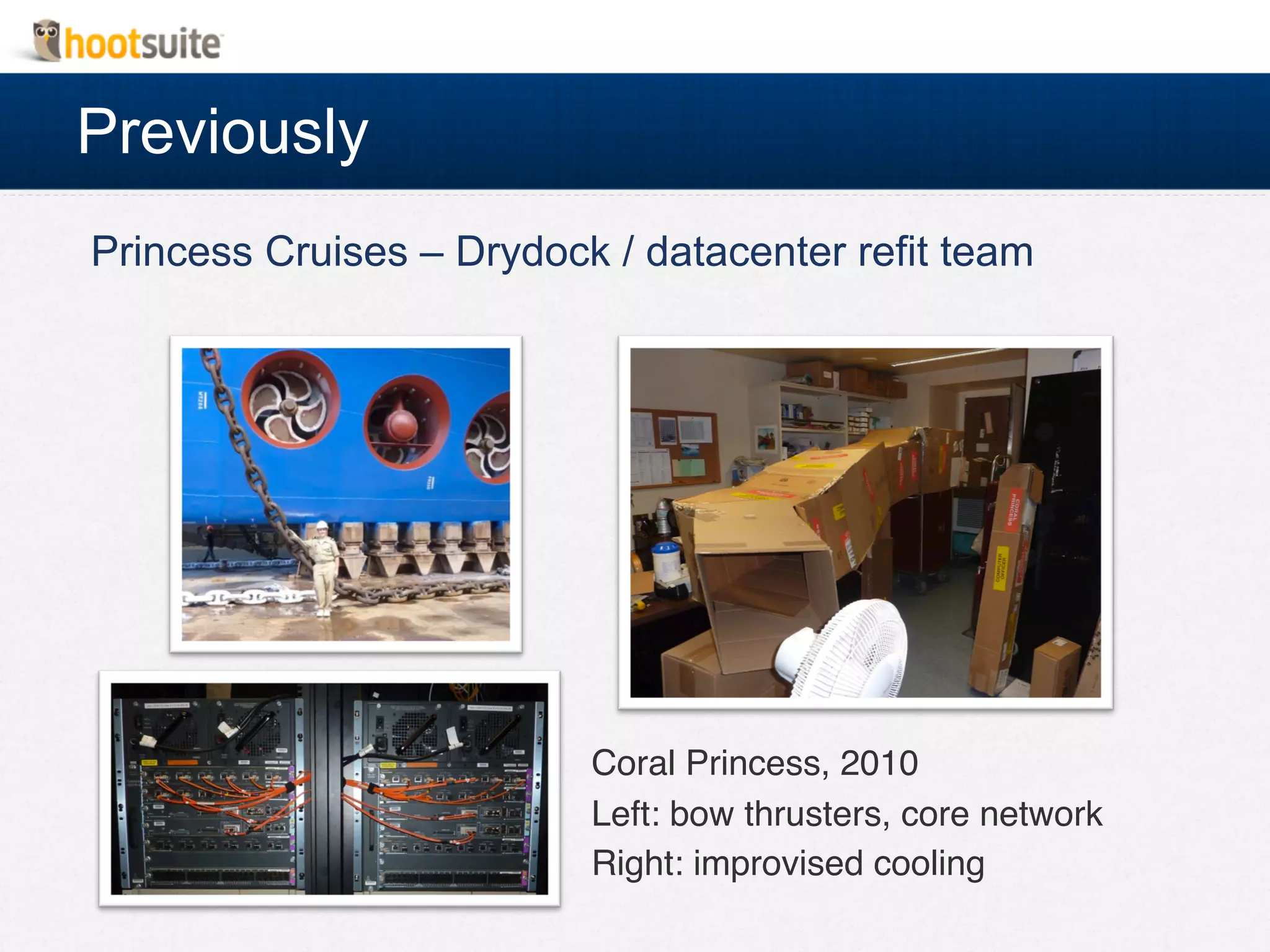

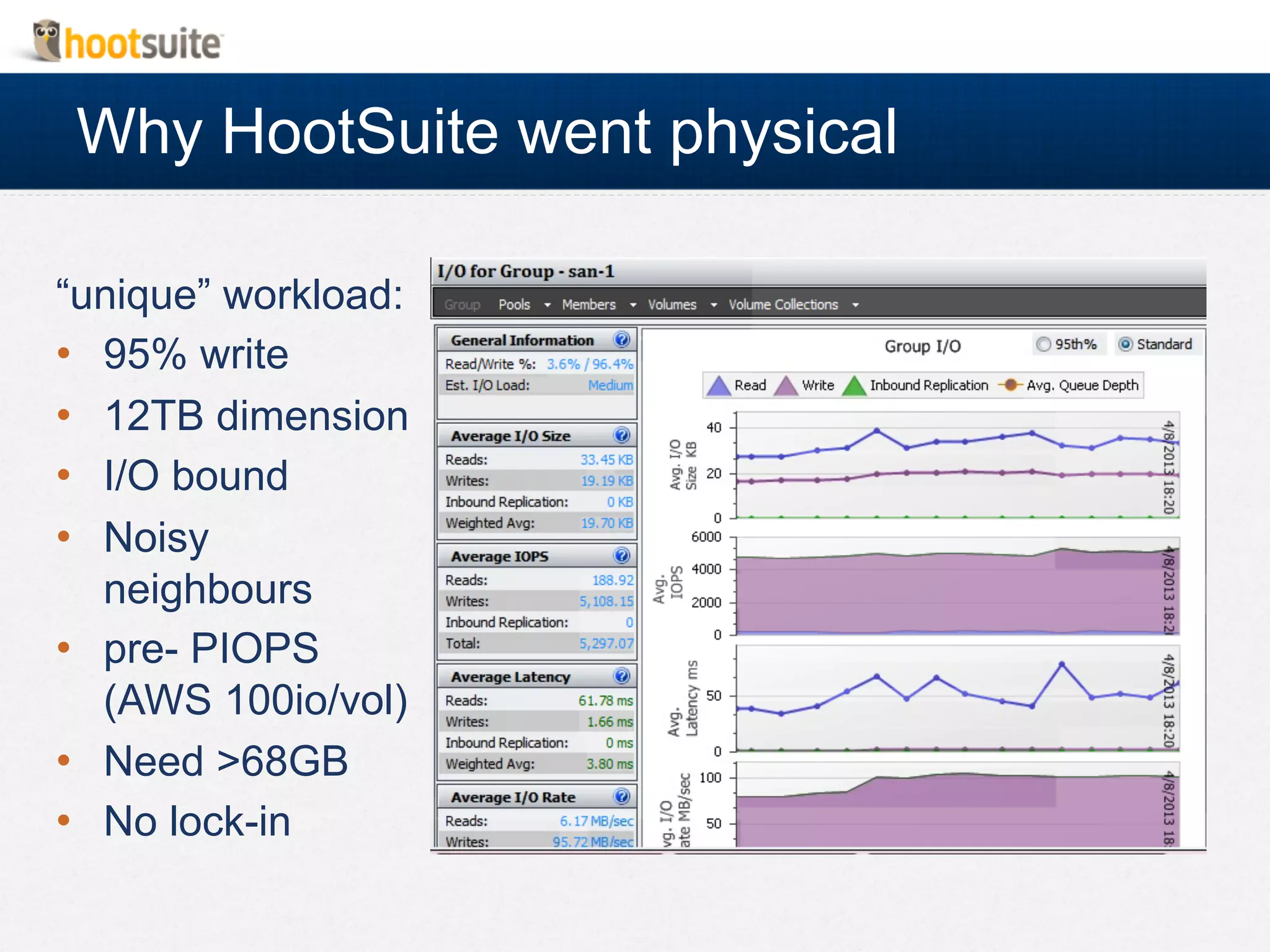

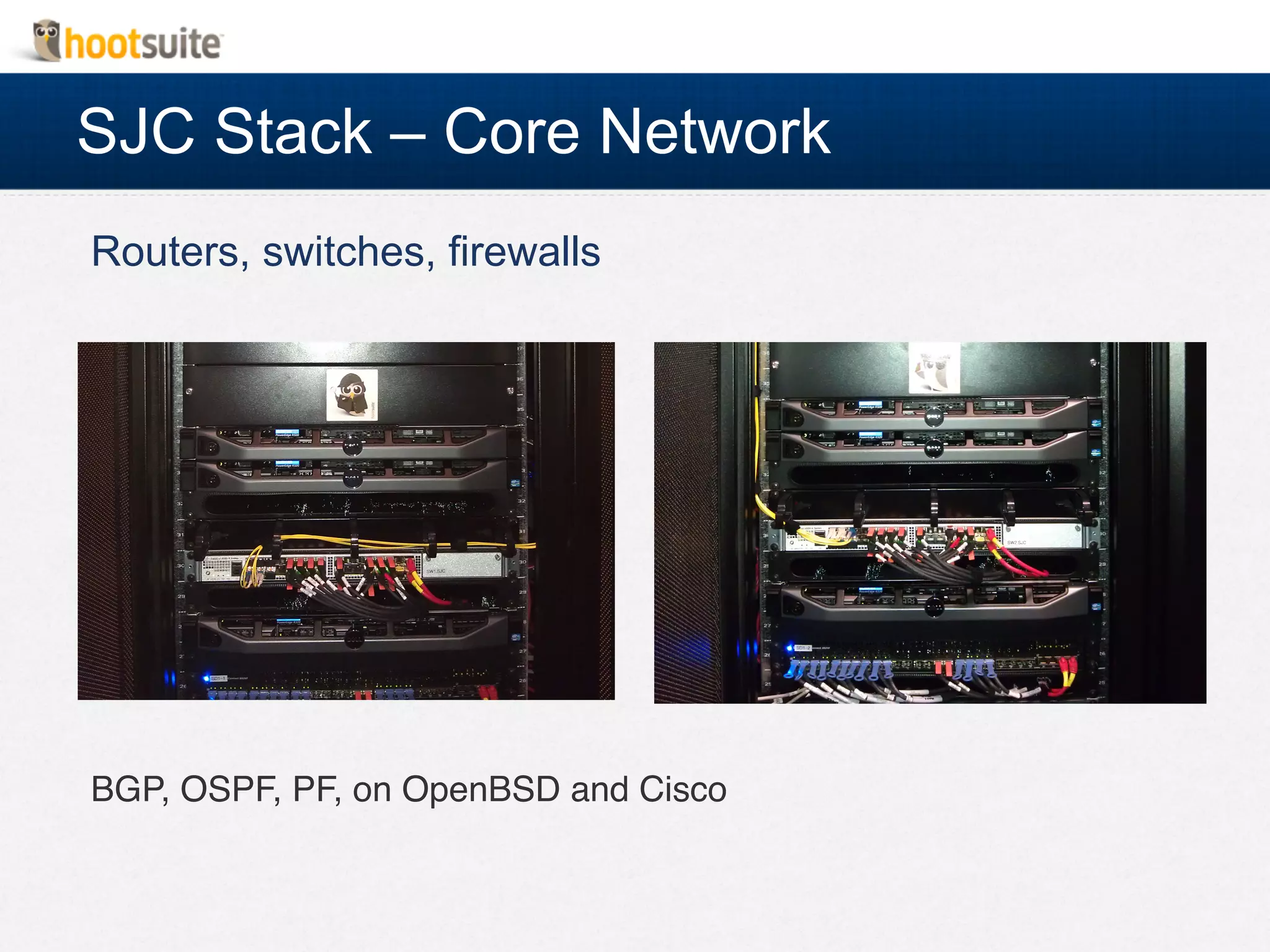

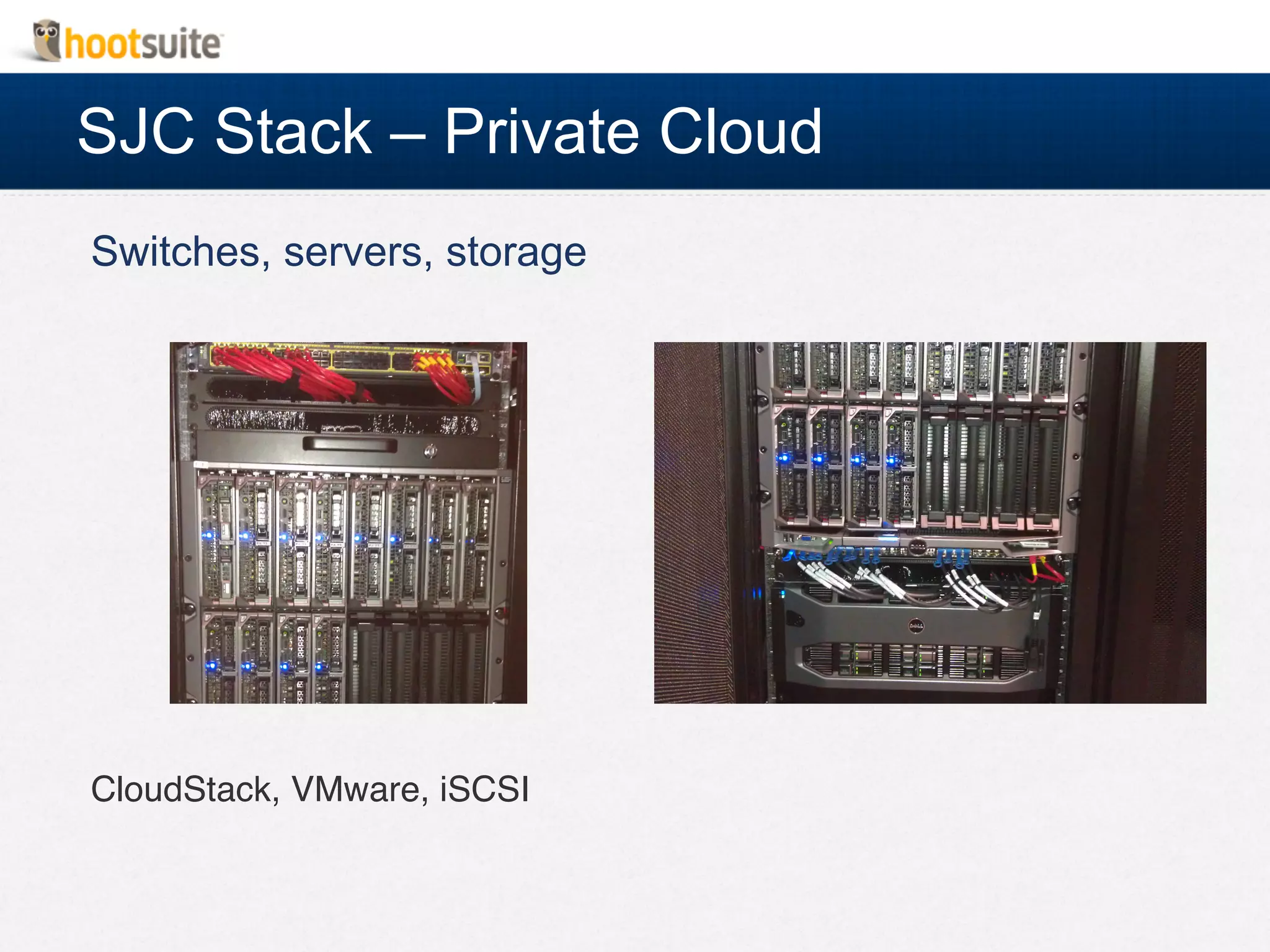

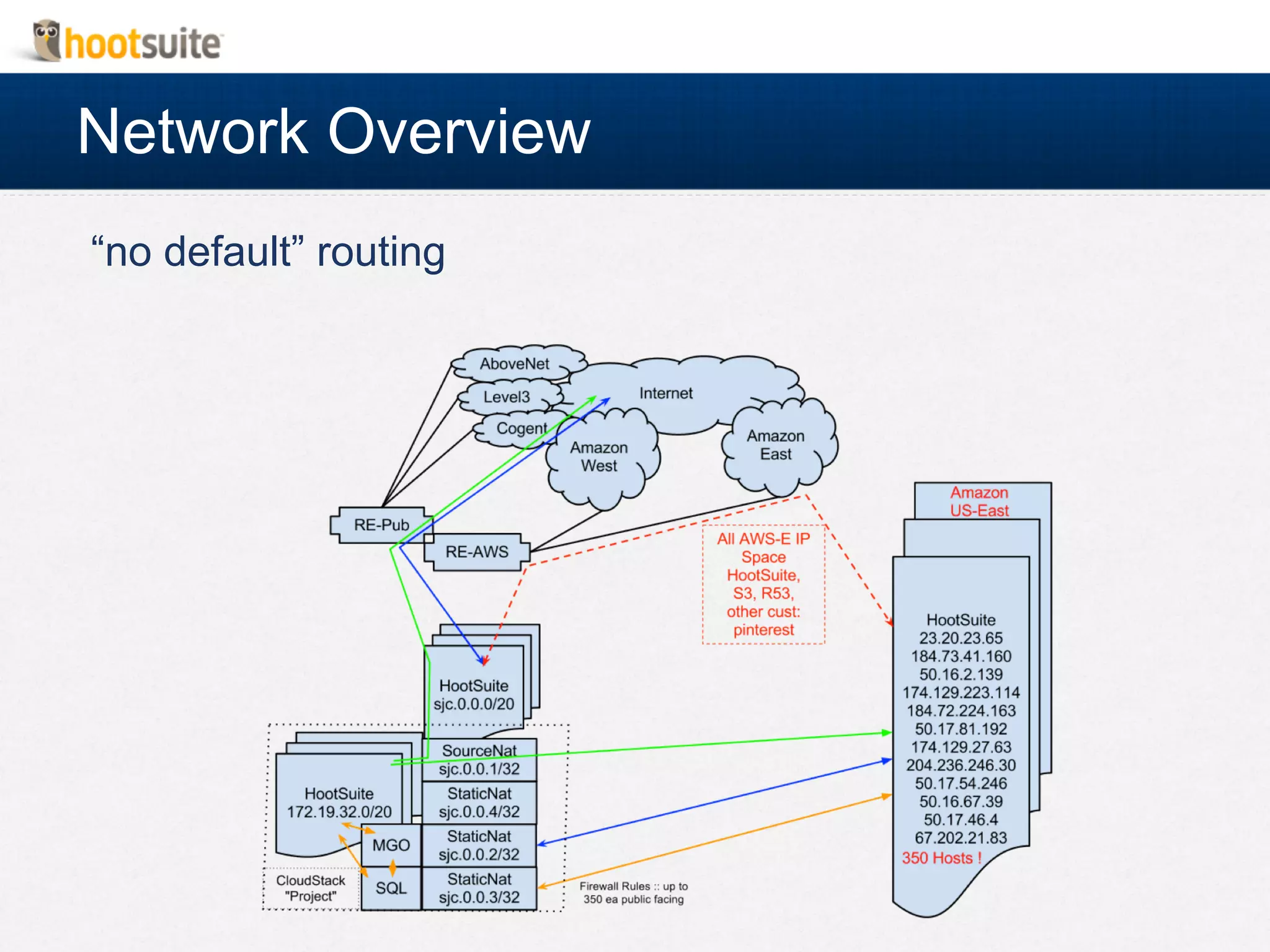

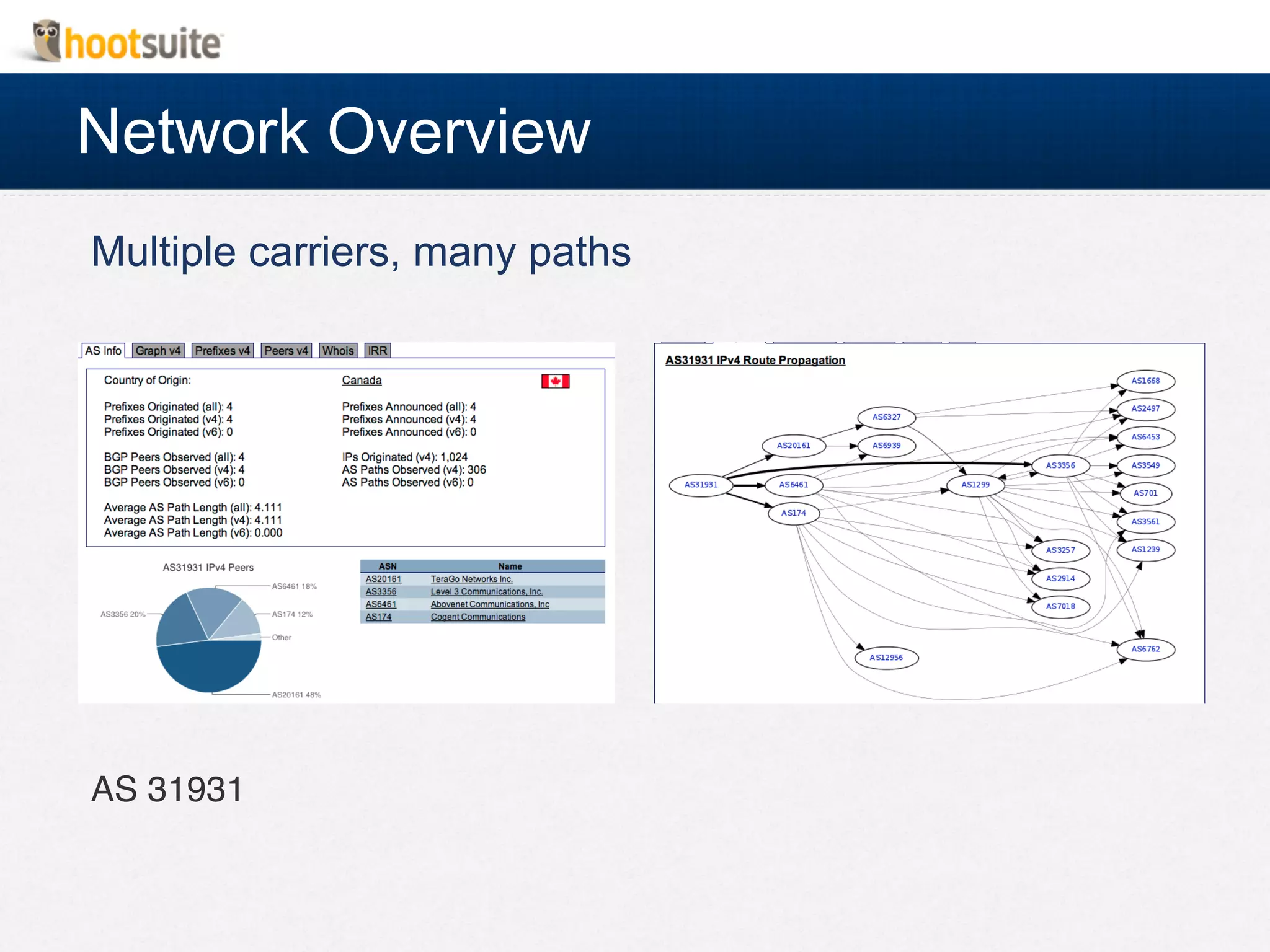

This document summarizes Chris Maxwell's presentation on HootSuite's physical infrastructure. It explains why HootSuite chose to build its own physical datacenter rather than rely solely on cloud services such as AWS, citing the particular demands of its workloads. It then outlines specific infrastructure choices HootSuite made: OpenBSD and Cisco switches for routing and firewalls, CloudStack on VMware, and iSCSI storage. Maxwell stresses balancing technical needs against budget and describes some of the tradeoffs these choices involved.