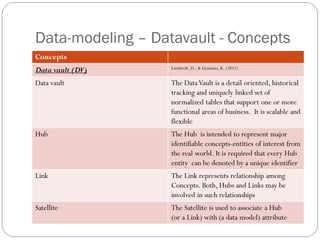

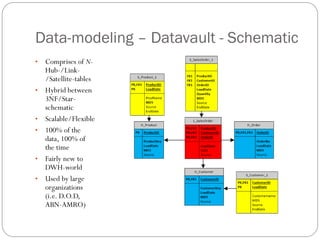

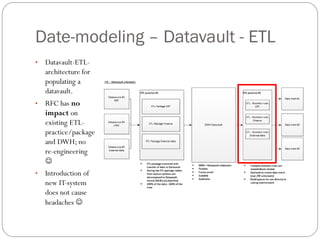

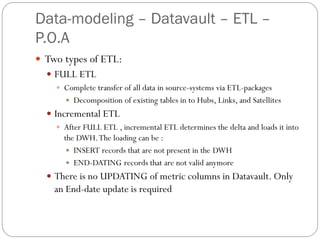

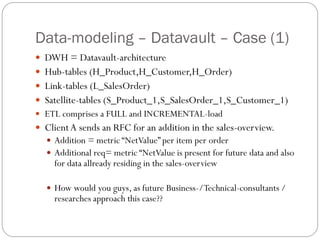

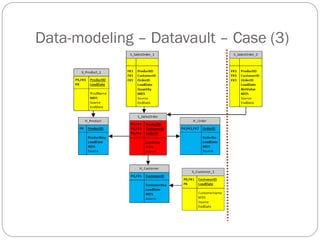

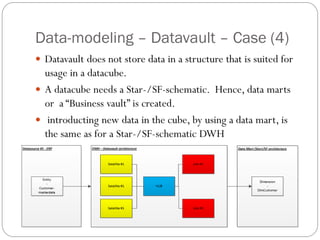

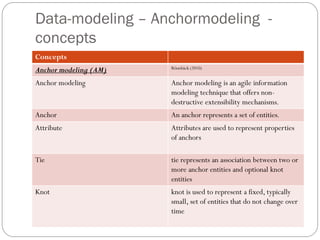

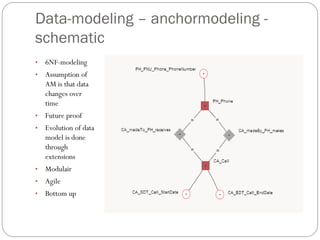

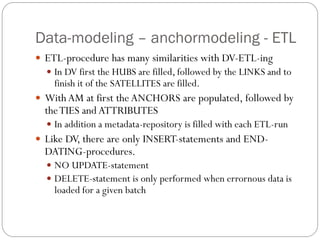

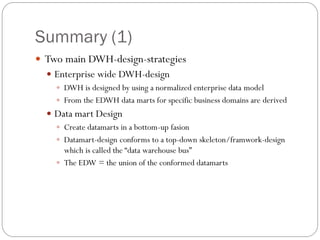

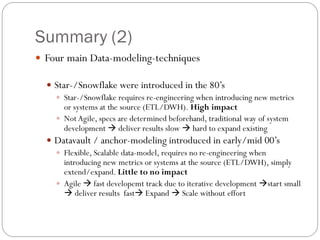

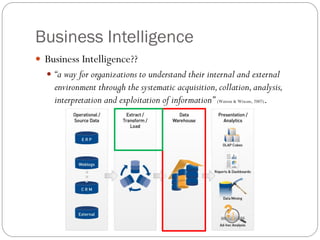

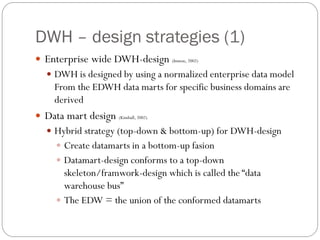

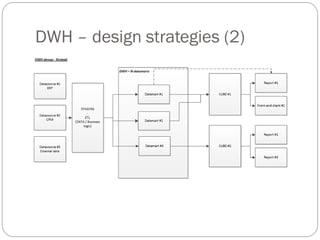

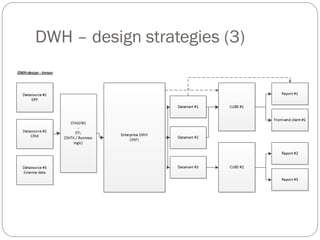

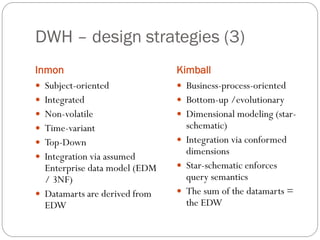

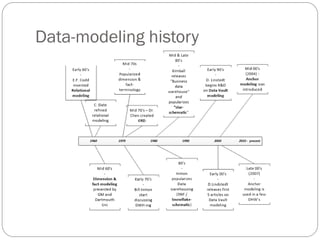

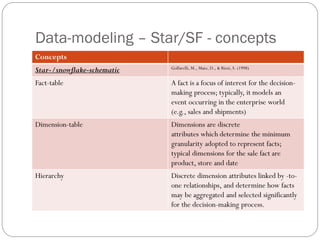

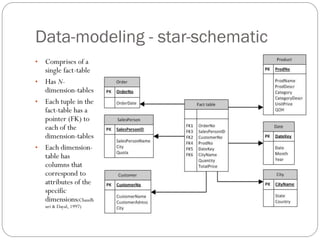

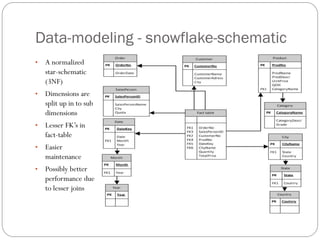

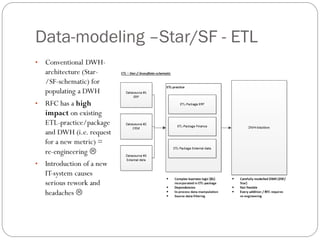

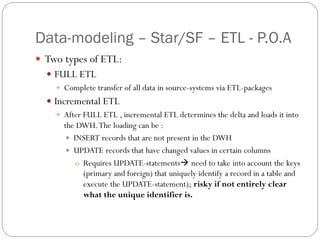

The document discusses data warehousing and modeling techniques, focusing on key concepts such as star/snowflake schemas, data vault, and anchor modeling. It highlights the evolution of data modeling strategies, emphasizing the transition from traditional models requiring re-engineering to more agile and flexible methodologies. Ultimately, it concludes that no single approach is superior; the choice of modeling technique should be based on organizational needs and specific circumstances.

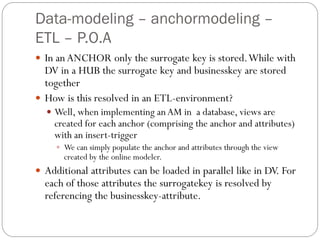

![Data-modeling – Star/SF – Case (2)

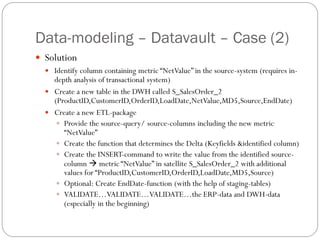

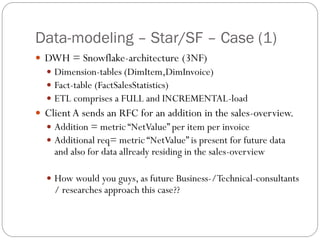

Solution

Identify column containing metric “NetValue” in the source-system

(requires in-depth analysis of transactional system)

Add column to fact table “FactSalesStatistics” ([NetValue] [decimal]

(x,y) NULL)

Revert to appropriate ETL-package;

Adjust the source-query / source-columns to include the identified column

(metric)

Adjust the function that determines the Delta (add identified column)

Adjust the INSERT-command to write the value from the identified source-

column metric “NetValue” in fact-table “FactSalesStatistics”

Adjust the UPDATE-command to update the metric “NetValue” with the

value from the identified source-column for the existing data in table

“FactSalesStatistics”

VALIDATE…VALIDATE…VALIDATE…the ERP-data and DWH-

data (especially in the beginning)](https://image.slidesharecdn.com/datawarehousinginpractice-140513080646-phpapp02/85/BI-Data-warehousing-in-practice-17-320.jpg)
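The steps of this solution can be sketched end to end as follows. This is a minimal illustration, not the ETL package from the slides: it uses Python's built-in `sqlite3` with an in-memory database instead of the original platform, and everything except the names `FactSalesStatistics` and `NetValue` is an assumption made for the example (the source table `ErpSalesLines`, its column `NetAmount`, the key `OrderLineId`, and the sample rows).

```python
import sqlite3

con = sqlite3.connect(":memory:")

# Hypothetical ERP source table and existing fact table (sample data is made up).
con.executescript("""
CREATE TABLE ErpSalesLines (
    OrderLineId INTEGER PRIMARY KEY, Quantity INTEGER, NetAmount NUMERIC);
CREATE TABLE FactSalesStatistics (
    OrderLineId INTEGER PRIMARY KEY, Quantity INTEGER);
INSERT INTO ErpSalesLines VALUES (1, 2, 19.90), (2, 1, 5.00), (3, 4, 40.00);
INSERT INTO FactSalesStatistics VALUES (1, 2), (2, 1);
""")

# Add the new metric as a nullable column to the fact table.
con.execute("ALTER TABLE FactSalesStatistics ADD COLUMN NetValue NUMERIC")

# UPDATE existing fact rows. The delta check now includes the new column;
# "IS NOT" is SQLite's NULL-safe inequality, so rows whose NetValue is still
# NULL are detected as changed and get backfilled.
con.execute("""
UPDATE FactSalesStatistics
SET Quantity = (SELECT s.Quantity FROM ErpSalesLines s
                WHERE s.OrderLineId = FactSalesStatistics.OrderLineId),
    NetValue = (SELECT s.NetAmount FROM ErpSalesLines s
                WHERE s.OrderLineId = FactSalesStatistics.OrderLineId)
WHERE EXISTS (
    SELECT 1 FROM ErpSalesLines s
    WHERE s.OrderLineId = FactSalesStatistics.OrderLineId
      AND (s.Quantity IS NOT FactSalesStatistics.Quantity
           OR s.NetAmount IS NOT FactSalesStatistics.NetValue))
""")

# INSERT rows that exist in the source but not yet in the fact table,
# now writing the new metric as well.
con.execute("""
INSERT INTO FactSalesStatistics (OrderLineId, Quantity, NetValue)
SELECT s.OrderLineId, s.Quantity, s.NetAmount
FROM ErpSalesLines s
WHERE NOT EXISTS (
    SELECT 1 FROM FactSalesStatistics f WHERE f.OrderLineId = s.OrderLineId)
""")
con.commit()

def totals(table, column):
    """Return (row count, rounded sum) as a simple reconciliation figure."""
    return con.execute(
        f"SELECT COUNT(*), ROUND(SUM({column}), 2) FROM {table}").fetchone()

# VALIDATE: compare source and warehouse totals after the load.
src_totals = totals("ErpSalesLines", "NetAmount")
dwh_totals = totals("FactSalesStatistics", "NetValue")
print("source:", src_totals, "warehouse:", dwh_totals)
```

Even in this toy form, a single new metric touches the table definition, the delta logic, and both write paths, which is the re-engineering effort this case is meant to illustrate. The reconciliation at the end is deliberately crude; a real validation would likely compare at a finer grain than grand totals.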