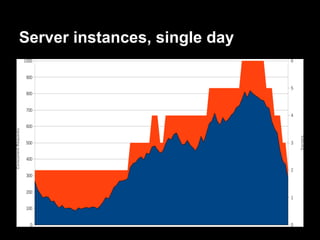

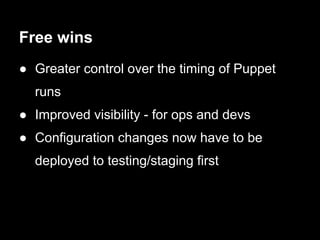

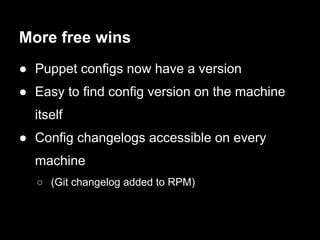

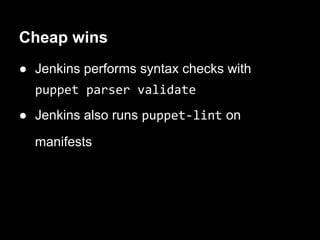

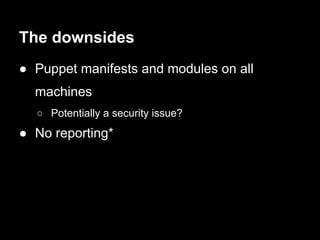

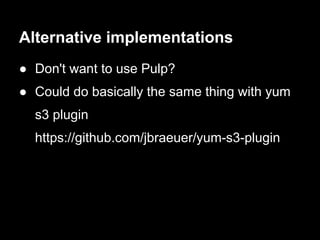

Sam Bashton discusses the challenges of running standard, Puppetmaster-based Puppet in dynamic, auto-scaling environments and presents a decentralized alternative that needs no Puppetmaster. Puppet manifests are packaged as RPMs, built by Jenkins and distributed through Pulp, so that the same pipeline handles both configuration management and code deployment and each node applies its configuration locally. The architecture is AWS-specific but could be adapted to other cloud environments; its main benefits are greater control, visibility, and versioning of configuration.
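To make the masterless idea concrete, here is a minimal Python sketch of what a node might do once the manifests RPM is installed: run `puppet apply` against the local files instead of contacting a Puppetmaster. It is an illustration under stated assumptions, not the setup from the talk. The install prefix `/etc/puppet-manifests`, the `site.pp` entry point, the `modules` directory layout, and the idea of using the Jenkins `BUILD_NUMBER` as the RPM Release field are all hypothetical. The `--detailed-exitcodes`, `--noop`, and `--modulepath` flags are standard `puppet apply` options.

```python
import subprocess
from pathlib import Path

# Hypothetical location where the manifests RPM installs its payload;
# the talk summary does not say where the package puts its files.
DEFAULT_PREFIX = Path("/etc/puppet-manifests")

# Meanings of `puppet apply --detailed-exitcodes` return codes.
EXIT_CODES = {
    0: "no changes",
    1: "run failed",
    2: "changes applied",
    4: "resource failures",
    6: "changes applied with resource failures",
}


def package_nvr(name: str, version: str, build_number: int) -> str:
    """Hypothetical naming scheme: use the Jenkins BUILD_NUMBER as the RPM
    Release field so every build yields a distinct, ordered package."""
    return f"{name}-{version}-{build_number}"


def build_apply_command(prefix: Path = DEFAULT_PREFIX, noop: bool = False) -> list[str]:
    """Build a masterless `puppet apply` command for locally installed manifests."""
    cmd = ["puppet", "apply", "--detailed-exitcodes"]
    if noop:
        cmd.append("--noop")  # report what would change without changing it
    cmd += [
        "--modulepath", str(prefix / "modules"),
        str(prefix / "manifests" / "site.pp"),  # hypothetical entry point
    ]
    return cmd


def apply_manifests(prefix: Path = DEFAULT_PREFIX, noop: bool = False) -> str:
    """Run Puppet locally (no Puppetmaster) and describe the outcome."""
    result = subprocess.run(build_apply_command(prefix, noop), check=False)
    return EXIT_CODES.get(result.returncode, f"unexpected exit code {result.returncode}")
```

In a setup like this, a boot-time script on each new instance could install the latest manifests package from the Pulp repository and then call `apply_manifests()`. Because every change ships as a new, versioned RPM, rolling back a configuration amounts to installing the previous package version and re-running Puppet.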