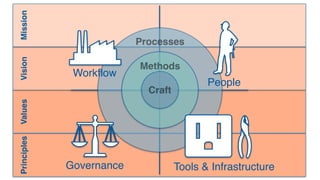

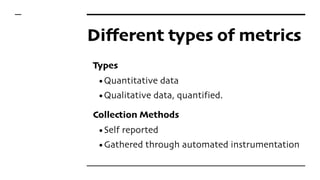

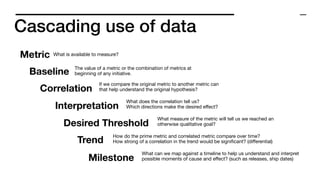

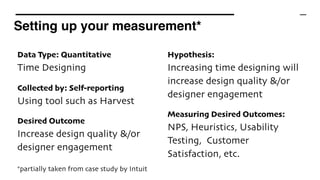

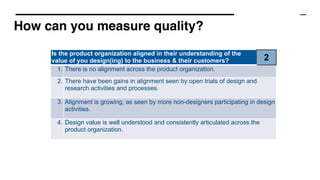

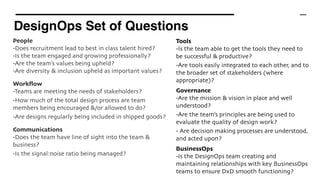

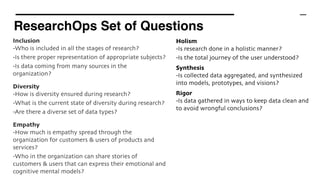

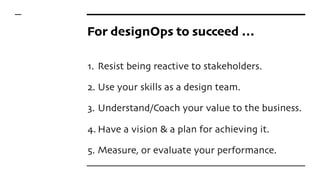

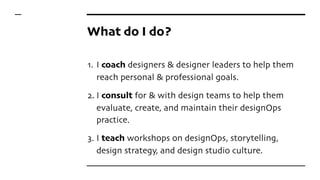

The document outlines the role of Design Operations (DesignOps) in increasing the value of design within an organization by aligning design goals with business objectives and improving team workflows. It describes methods for measuring and evaluating DesignOps effectiveness with both quantitative and qualitative metrics, and it stresses the need for alignment between design teams and their stakeholders. It also offers guidance on evaluating team health, design processes, and the overall impact of design on products and user experiences.