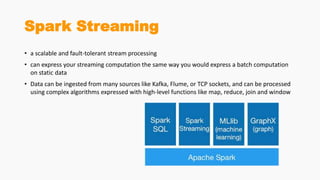

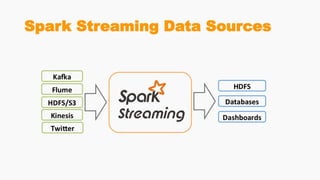

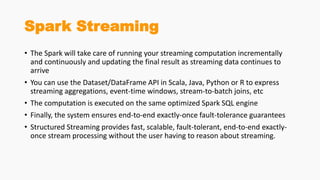

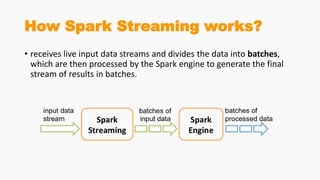

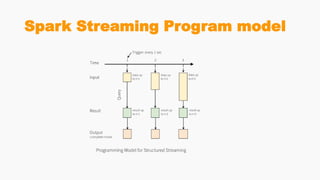

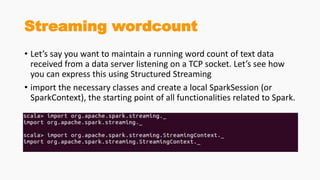

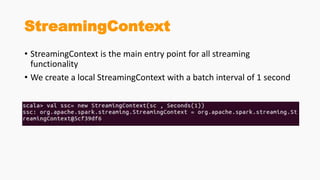

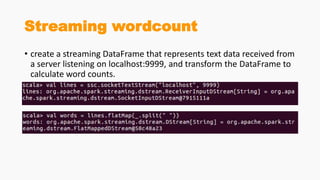

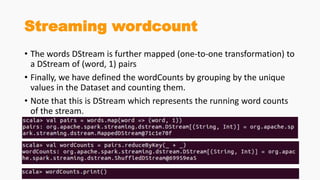

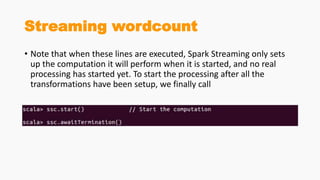

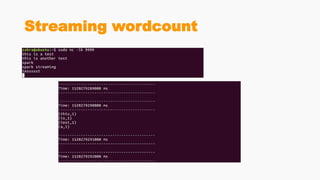

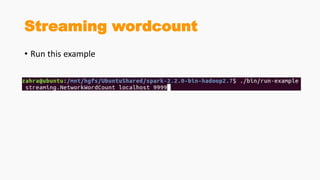

Spark Streaming provides scalable, fault-tolerant stream processing of data ingested from sources such as Kafka. It divides each incoming data stream into micro-batches, which the Spark engine processes with transformations such as map, reduce, and join. As a result, streaming aggregations, windowed computations, and stream-batch joins can be expressed much like batch queries. The example shows a streaming word count application that receives text from a TCP socket, splits it into words, counts the words, and continuously updates the result.
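
The micro-batch idea behind the word count can be illustrated without Spark at all. The following is a minimal conceptual sketch in plain Python, not Spark code: the function names (`micro_batches`, `count_words`, `streaming_word_count`) are hypothetical, an in-memory list stands in for the TCP socket, and batches are cut by line count, whereas Spark Streaming cuts them by time interval.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, List


def micro_batches(lines: Iterable[str], batch_size: int) -> Iterator[List[str]]:
    """Group an incoming stream of lines into small batches.

    Assumption: batches are cut every `batch_size` lines. Spark Streaming
    cuts them by a time interval instead.
    """
    batch: List[str] = []
    for line in lines:
        batch.append(line)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly smaller, batch
        yield batch


def count_words(batch: List[str]) -> Counter:
    """Split every line of one batch into words and count them."""
    words = (word for line in batch for word in line.split())
    return Counter(words)


def streaming_word_count(
    lines: Iterable[str], batch_size: int = 2
) -> Iterator[Dict[str, int]]:
    """Yield the running word totals after each micro-batch is processed."""
    totals: Counter = Counter()
    for batch in micro_batches(lines, batch_size):
        totals.update(count_words(batch))  # merge this batch into the state
        yield dict(totals)


if __name__ == "__main__":
    # Stand-in for text arriving on a socket.
    incoming = ["hello spark", "hello streaming", "spark streaming spark"]
    for snapshot in streaming_word_count(incoming, batch_size=2):
        print(snapshot)
```

Each batch is processed with the same split-and-count logic a batch job would use, and only the running totals carry over between batches, which is why the streaming version reads so much like a batch query.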