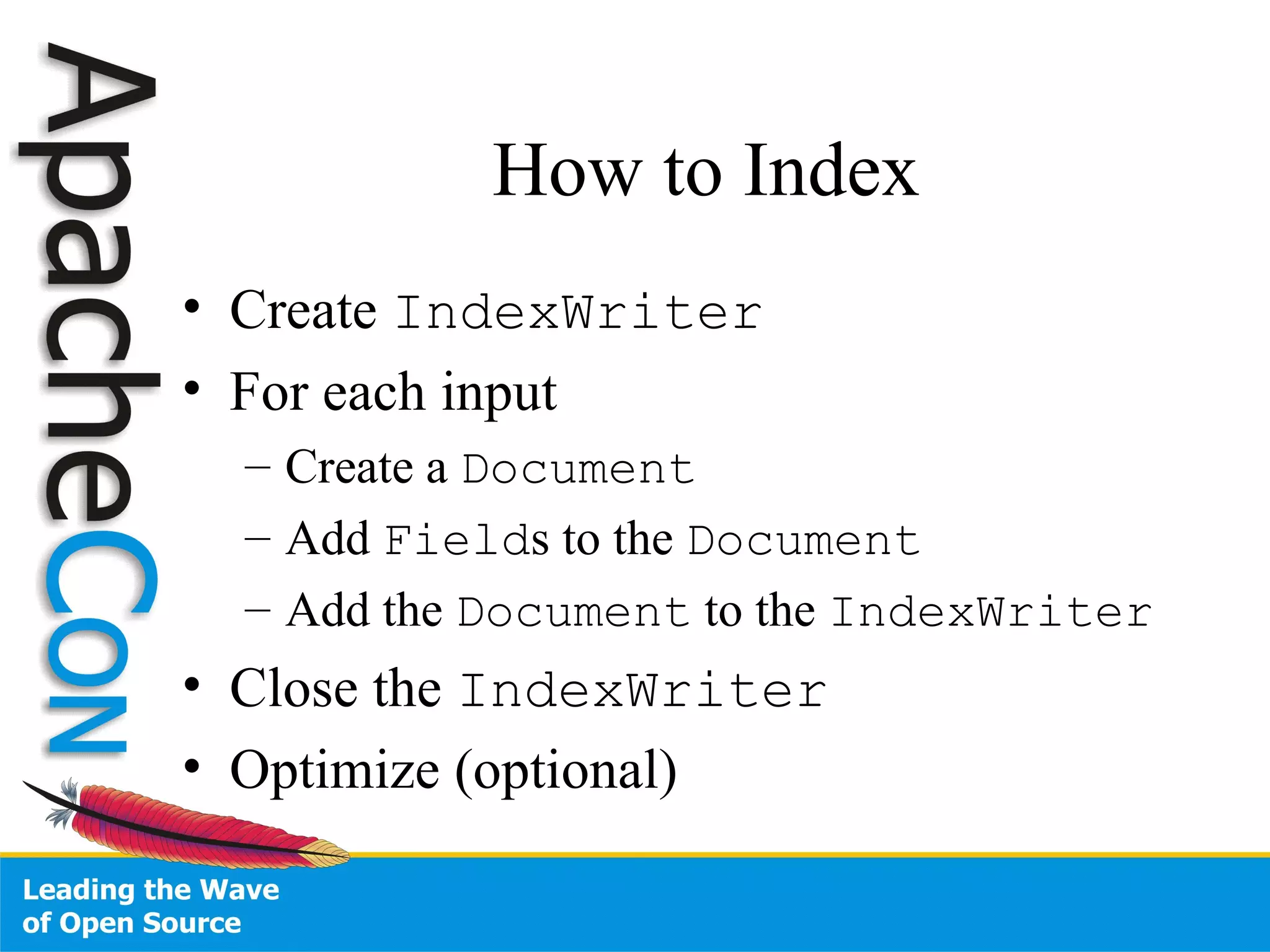

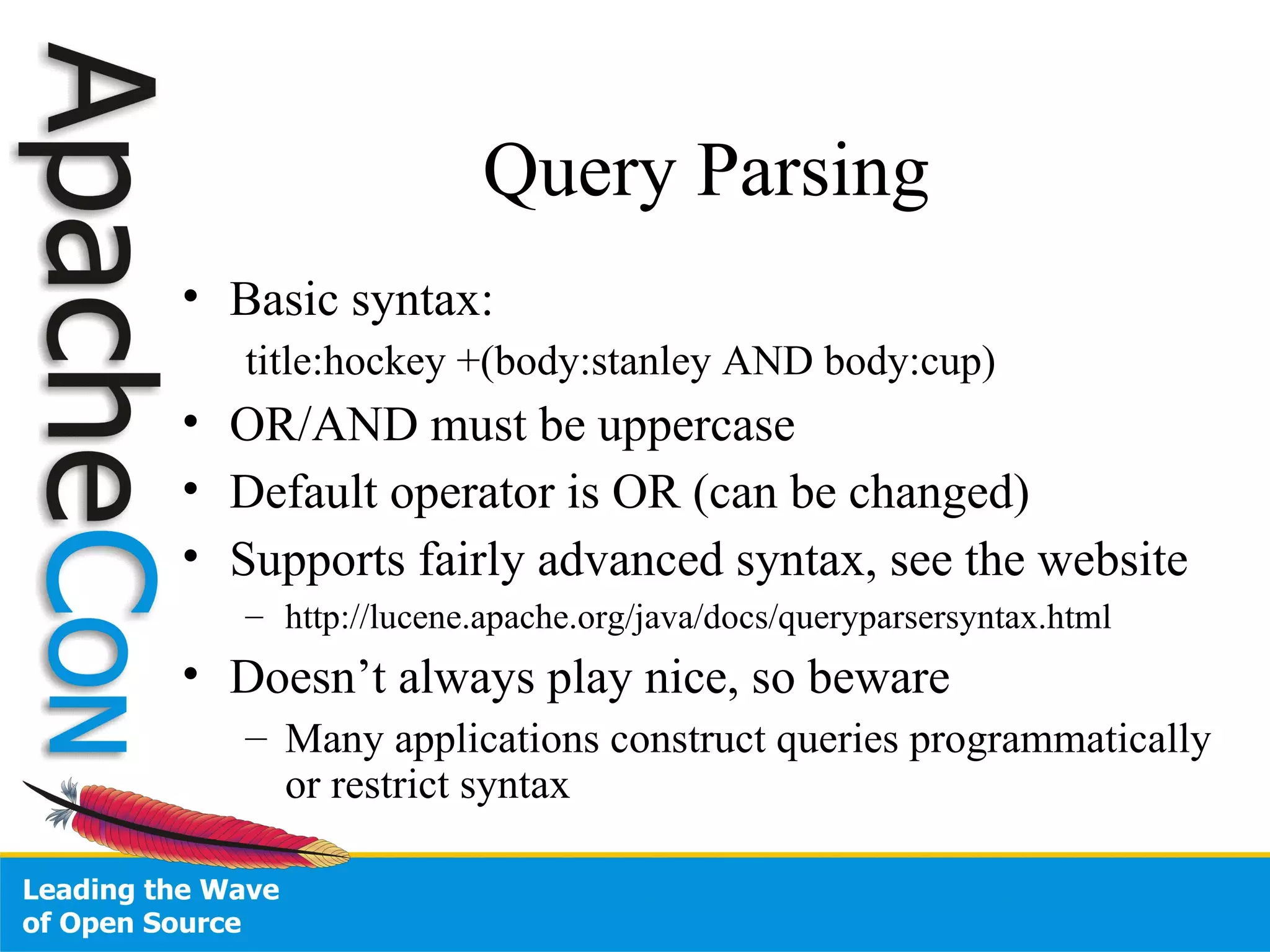

The document provides an overview of Apache Lucene, an open source search library. It covers core Lucene concepts (indexing, searching, and text analysis) as well as contributed modules. The tutorial walks through the basics of indexing and searching documents and analyzing text, then surveys popular contributed modules for highlighting, spell checking, and finding similar documents. Attendees gain hands-on experience with Lucene through code examples and exercises.

![Resources](https://image.slidesharecdn.com/978480/75/Lucene-Bootcamp-1-47-2048.jpg)

- http://lucene.apache.org/
- http://en.wikipedia.org/wiki/Vector_space_model
- *Modern Information Retrieval* by Baeza-Yates and Ribeiro-Neto
- *Lucene in Action* by Hatcher and Gospodnetić
- Wiki
- Mailing lists
  - [email_address]: discussions on how to use Lucene
  - [email_address]: discussions on how to develop Lucene
- Issue tracking: https://issues.apache.org/jira/secure/Dashboard.jspa
  - We always welcome patches.
  - Ask on the mailing list before reporting a bug.

![Resources (continued)](https://image.slidesharecdn.com/978480/75/Lucene-Bootcamp-1-48-2048.jpg)

- [email_address]
- Lucid Imagination: support, training, value add
- [email_address]