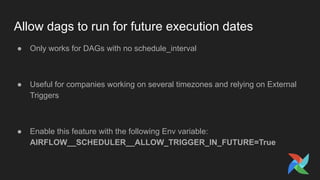

This document gives an overview of new features in Airflow 1.10.8/1.10.9 and best practices for writing DAGs and configuring Airflow for production. It also outlines the roadmap for Airflow 2.0: DAG serialization, a revamped real-time UI, a production-grade modern API, official Docker/Helm support, and scheduler improvements. It aims to help users understand recent Airflow updates and plan their migration to version 2.0.

![Store Sensitive Data in Connections

● Don’t put passwords in your DAG files!

● Use Airflow Connections to store any kind of sensitive data, such as passwords, private keys, etc.

● Airflow stores the connection data in the Airflow metadata DB

● If you install the “crypto” extra (“pip install apache-airflow[crypto]”), the password field in Connections is also encrypted in the DB.](https://image.slidesharecdn.com/talk1-airflowbestpracticesroadmapto2-200510190455/85/Airflow-Best-Practises-Roadmap-to-Airflow-2-0-20-320.jpg)
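
The advice above can be sketched as a small helper that reads credentials from an Airflow connection at task run time instead of embedding them in the DAG file. This is a minimal sketch assuming the Airflow 1.10.x import path; the function name `connection_kwargs` and the connection id `my_service_db` are hypothetical and not from the slides.

```python
def connection_kwargs(conn_id="my_service_db"):
    """Return client keyword arguments built from an Airflow connection.

    "my_service_db" is a hypothetical conn_id; it must be created
    beforehand (for example in the UI under Admin > Connections).
    """
    # Airflow 1.10.x import path. Imported lazily so this sketch can be
    # loaded without Airflow; the lookup itself runs only when a task
    # calls this function, not at DAG parse time.
    from airflow.hooks.base_hook import BaseHook

    conn = BaseHook.get_connection(conn_id)
    return {
        "host": conn.host,
        "port": conn.port,
        "user": conn.login,
        "password": conn.password,  # never hard-coded in the DAG file
    }
```

A task callable (for example one passed to a `PythonOperator`) would call `connection_kwargs()` and hand the result to its database or API client, so the secret never appears in version control.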

![Use Flask-AppBuilder based UI

● Enabled using “rbac=True” under “[webserver]”

● Airflow ships with a set of roles by default: Admin, User, Op, Viewer, and Public

● Creating custom roles is possible

● DAG-level access control: users can declare read or write permissions inside the DAG file](https://image.slidesharecdn.com/talk1-airflowbestpracticesroadmapto2-200510190455/85/Airflow-Best-Practises-Roadmap-to-Airflow-2-0-28-320.jpg)
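
DAG-level access control can be illustrated with the `access_control` argument of `DAG`. The sketch below assumes Airflow 1.10.x with the RBAC UI enabled, where the DAG-level permission names are `can_dag_read` and `can_dag_edit`. The role names `data_team` and `auditors`, the `dag_id`, and the helper `make_dag` are hypothetical. The slide's own code is not in the text, so this is not necessarily the example it showed.

```python
from datetime import datetime

# Hypothetical custom roles; they must already exist in the RBAC UI.
ACCESS_CONTROL = {
    "data_team": {"can_dag_read", "can_dag_edit"},  # read and write
    "auditors": {"can_dag_read"},                   # read only
}


def make_dag():
    """Build a DAG whose visibility is restricted by role."""
    # Imported lazily so this sketch can be loaded without Airflow.
    # A real DAG file would import at module level.
    from airflow import DAG

    return DAG(
        dag_id="example_dag_level_access",  # hypothetical name
        start_date=datetime(2020, 1, 1),
        schedule_interval=None,
        access_control=ACCESS_CONTROL,
    )
```

In an actual DAG file the result would be assigned to a module-level name (`dag = make_dag()`) so that Airflow discovers it when parsing the file.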