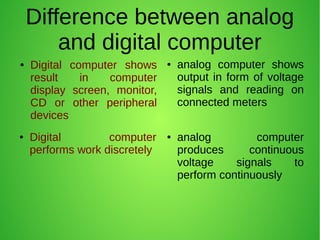

This document introduces analog and digital computers by defining each type, giving examples, and explaining how they differ. It defines analog computers as machines that operate continuously on varying physical inputs, such as voltage or temperature, using electrical or mechanical parts. Digital computers are defined as machines that accept input converted to binary data and operate on that data. Three key differences are outlined: digital computers show results on a screen while analog computers express them as continuous voltage signals; digital computers work in discrete steps while analog computers work continuously; and digital computers require memory storage while analog computers have no concept of memory.
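The continuous versus discrete distinction can be illustrated with a minimal sketch of how an analog voltage might be converted to binary before a digital computer can use it. The function names `quantize` and `to_binary`, the 0 to 5 V input range, and the 8-bit resolution are all assumptions chosen for illustration; none of them comes from the document.

```python
def quantize(voltage, v_min=0.0, v_max=5.0, bits=8):
    """Map a continuous voltage onto one of 2**bits discrete codes.

    The range (0 to 5 V) and resolution (8 bits) are illustrative assumptions.
    """
    if not v_min <= voltage <= v_max:
        raise ValueError("voltage outside the assumed input range")
    levels = 2 ** bits
    # Scale the voltage to [0, levels - 1] and round to the nearest integer code.
    return round((voltage - v_min) / (v_max - v_min) * (levels - 1))


def to_binary(code, bits=8):
    """Render an integer code as a fixed-width binary string."""
    return format(code, f"0{bits}b")


if __name__ == "__main__":
    # Two slightly different analog values can collapse to the same digital code.
    for v in (0.0, 1.000, 1.001, 5.0):
        code = quantize(v)
        print(f"{v:.3f} V -> code {code} -> {to_binary(code)}")
```

In this sketch, 1.000 V and 1.001 V map to the same code. The analog signal distinguishes the two values, but the digital representation keeps only a finite number of levels.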