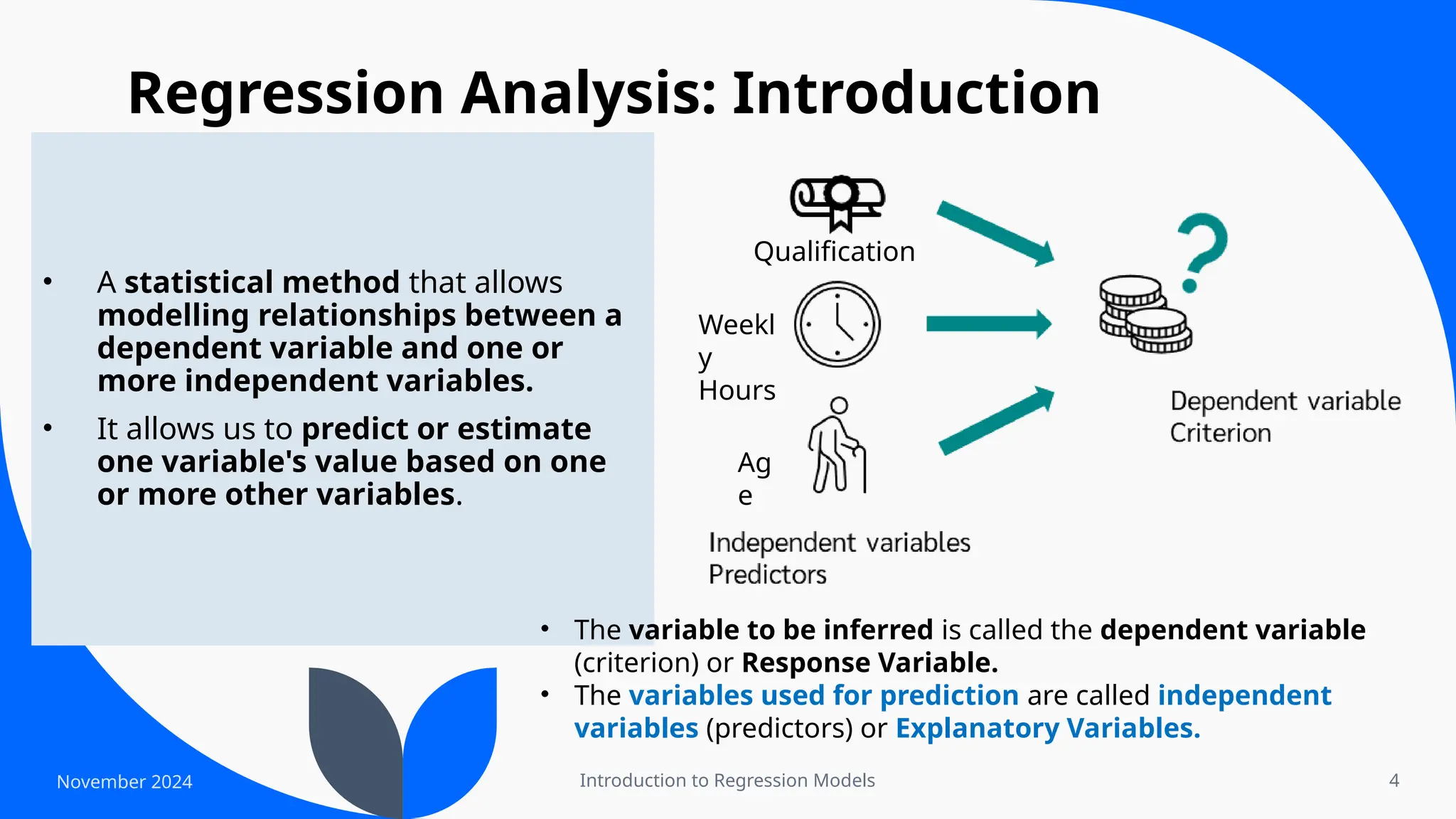

The document gives an overview of regression models, focusing on multiple linear regression and logistic regression. It explains why regression analysis matters for modeling the relationship between a dependent variable and one or more independent variables, and it distinguishes types of regression by the number of independent variables and by measurement scale. It also covers key concepts such as residuals and outliers, along with the assumptions that must hold for a regression analysis to be valid.