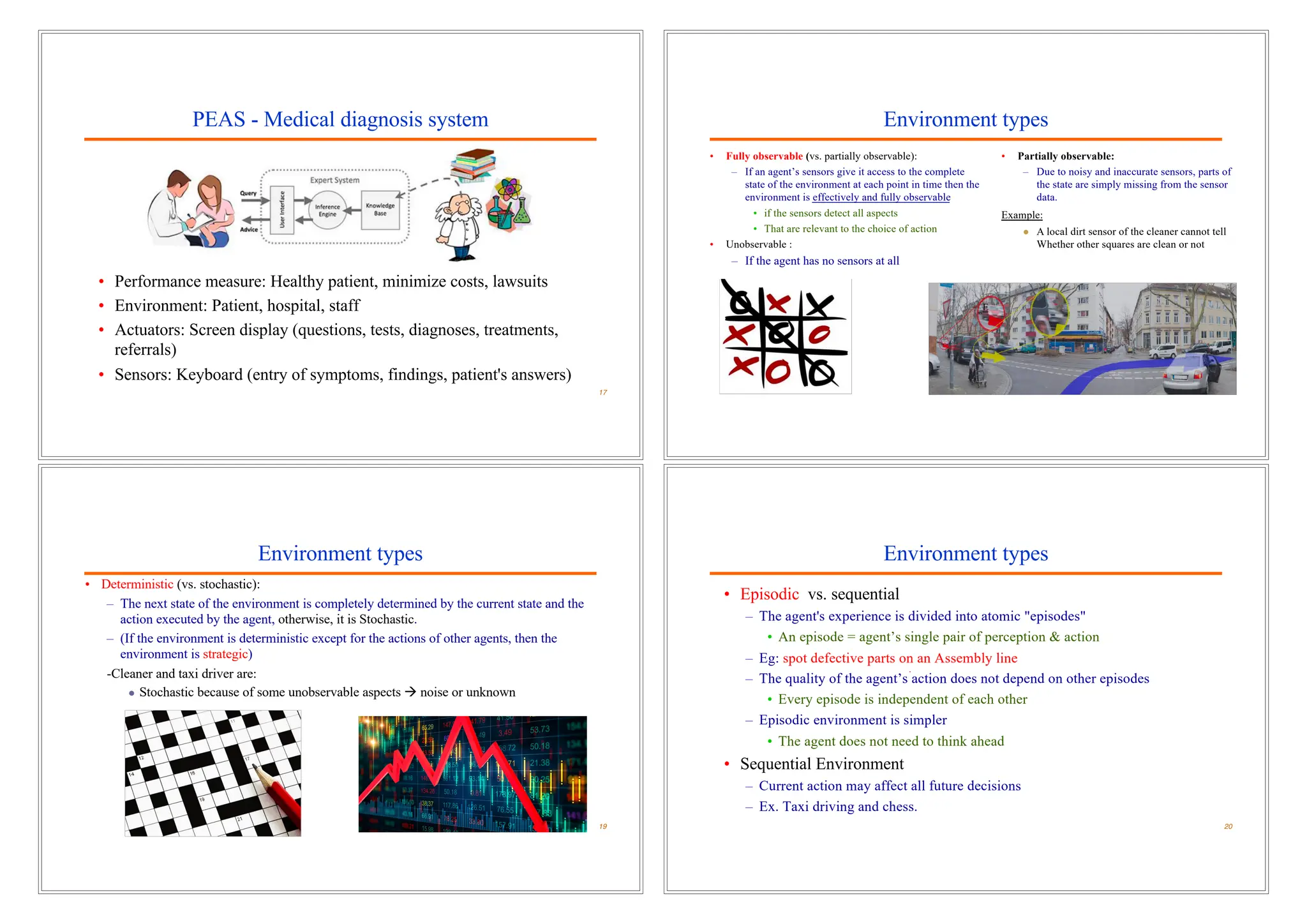

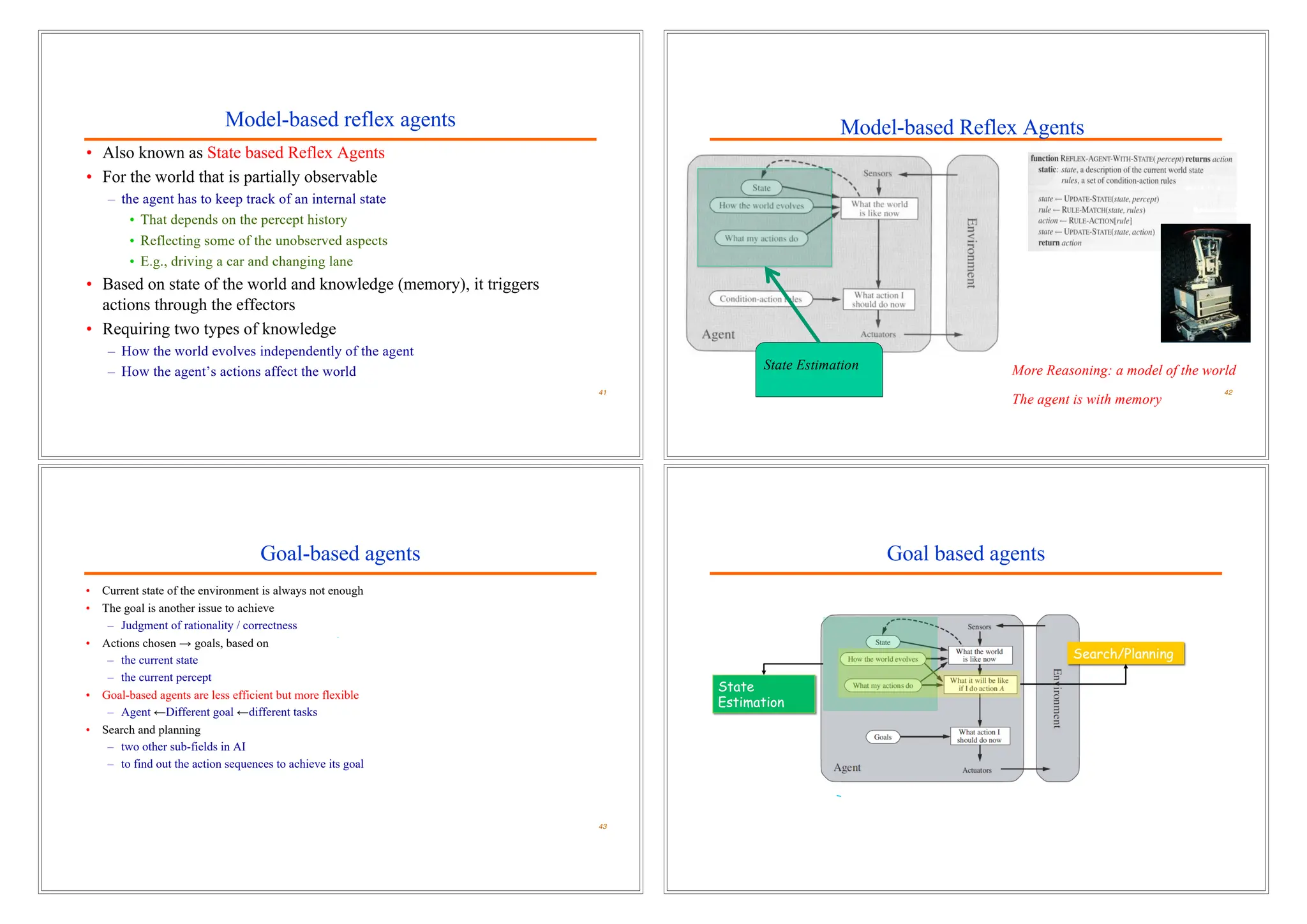

The document discusses the concept of intelligent agents and their environments within the framework of artificial intelligence, emphasizing the characteristics and functionalities that define rational agents. It outlines various types of agents, such as simple reflex agents, goal-based agents, and utility-based agents, as well as the importance of learning and adapting based on past experiences. Additionally, it explains the significance of task environments, performance measures, and the different factors that affect agent design.

![Intelligent Agents

• Percept

• Agent’s perceptual inputs at any given instant

• Percept sequence

• Complete history of everything that the agent has ever perceived.

An agent’s behavior is described mathematically by the agent function, which maps percept histories to actions:

[f: P* → A]

Agent program runs on the physical architecture to produce f

Diagram of an agent

What AI should fill

Agents that Plan Ahead

An agent should strive to "do the right thing", based on what it can perceive and the actions it can perform.

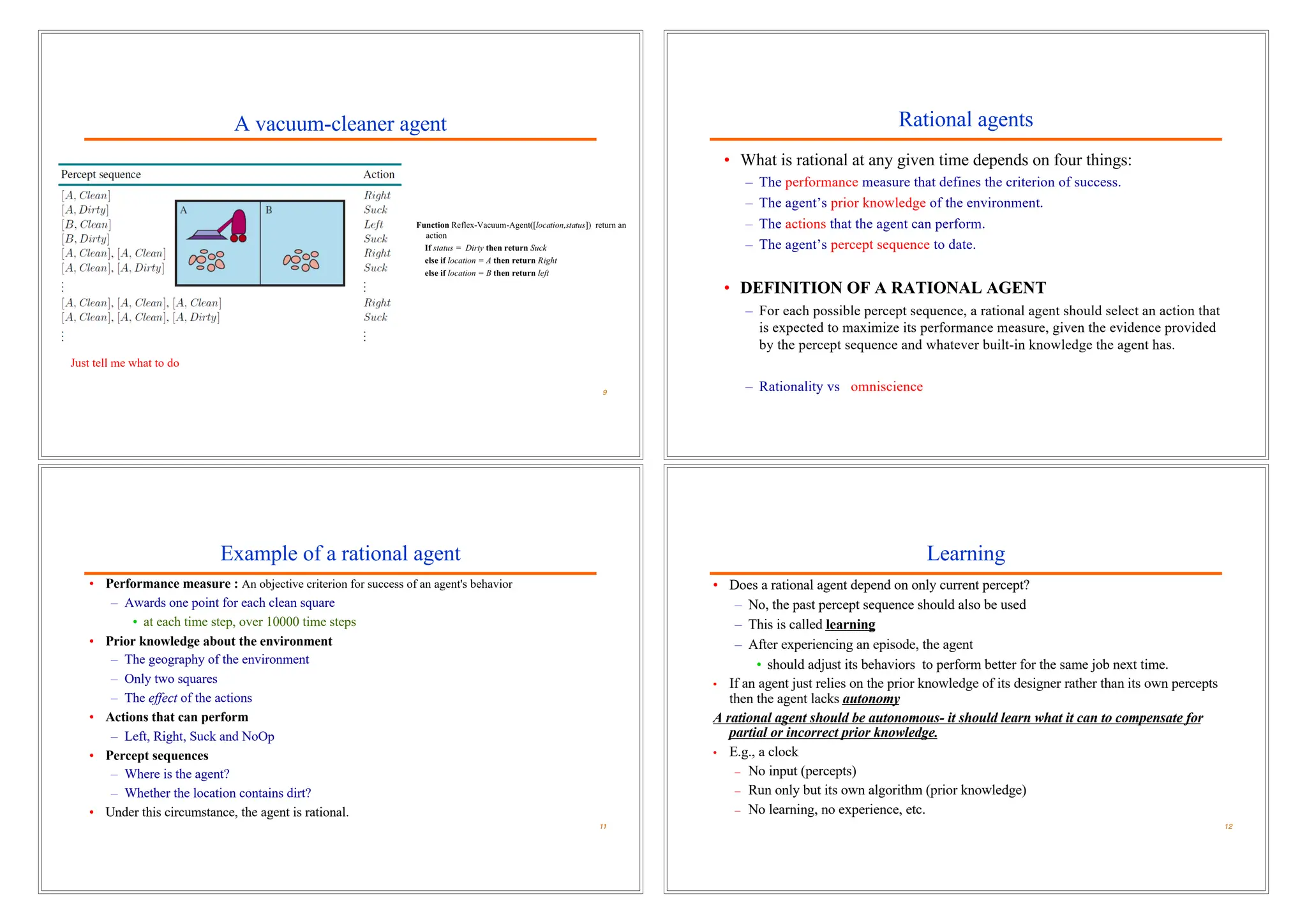

Example: Vacuum-cleaner world agent

• Percepts:

– location and contents (Clean or Dirty),

– e.g., [A, Dirty]

• Actions:

– Left: move left

– Right: move right

– Suck: suck up the dirt

– NoOp: do nothing

](https://image.slidesharecdn.com/21intelligentagent-240815175603-1b94507b/75/2_1_Intelligent-Agent-Type-of-Intelligent-Agent-and-Environment-pdf-2-2048.jpg)
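
The agent function f: P* → A and the vacuum-cleaner percepts and actions from the slides can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the slides name location A and the four actions, but the second square `B`, the policy itself (suck when dirty, otherwise move to the other square), and the helper names `vacuum_agent` and `simulate` are not from the source.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # (location, contents), e.g. ("A", "Dirty")
Action = str               # one of "Left", "Right", "Suck", "NoOp"


def vacuum_agent(percepts: List[Percept]) -> Action:
    """Agent function f: P* -> A for the vacuum world.

    Receives the whole percept sequence, although this assumed policy
    only looks at the most recent percept.
    """
    if not percepts:
        return "NoOp"  # nothing perceived yet, so do nothing
    location, contents = percepts[-1]
    if contents == "Dirty":
        return "Suck"
    # Assumption: exactly two squares, "A" on the left and "B" on the right.
    return "Right" if location == "A" else "Left"


def simulate(world: Dict[str, str], location: str, steps: int) -> List[Action]:
    """Run the agent for a fixed number of steps and return its actions."""
    percepts: List[Percept] = []
    actions: List[Action] = []
    for _ in range(steps):
        # The agent perceives its location and that square's contents.
        percepts.append((location, world[location]))
        action = vacuum_agent(percepts)
        actions.append(action)
        # Apply the action's effect to the (hypothetical) environment.
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions


if __name__ == "__main__":
    print(simulate({"A": "Dirty", "B": "Dirty"}, "A", 4))
    # prints ['Suck', 'Right', 'Suck', 'Left']
```

Here `vacuum_agent` plays the role of the agent program, and the mapping it computes from percept sequences to actions is the agent function. Passing the full history keeps the signature faithful to P* → A even though this simple policy ignores everything except the latest percept.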