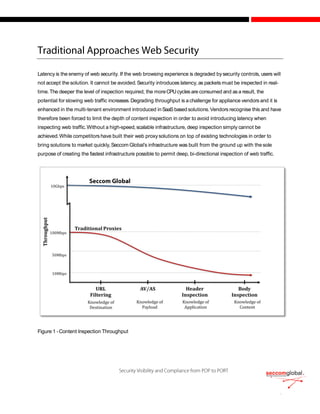

The document discusses how cyber attacks have shifted from targeting servers to exploiting client-side vulnerabilities, emphasizing that traditional security methods are inadequate against this shift. It highlights the growing effectiveness of Security-as-a-Service (SaaS) solutions that perform real-time, deep content inspection without introducing latency, which is crucial for user acceptance. It also underscores the importance of dynamic, real-time content evaluation and of the network effect, which enables collective defense against emerging threats through offline analysis and knowledge sharing among security vendors.