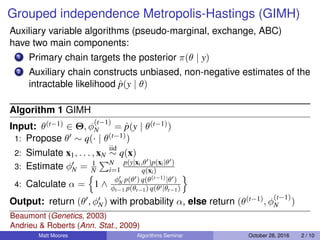

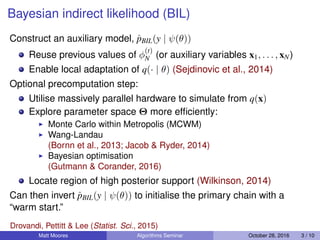

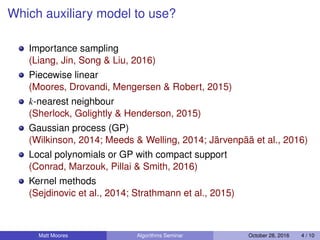

These slides discuss accelerating pseudo-marginal Markov chain Monte Carlo (MCMC) with Gaussian processes (GPs) in Bayesian statistics. They present algorithms for improving the efficiency and scalability of these methods, focusing on the Bayesian indirect likelihood (BIL) and adaptive algorithms. Key components include auxiliary models and a precomputation step, run in parallel, that produces the noisy likelihood estimates to which the GP is fitted.

![Multiplicative Noise

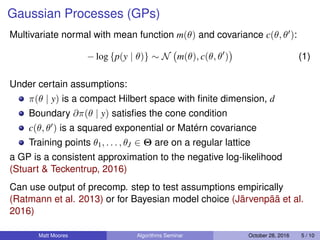

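Can't evaluate $p(y \mid \theta)$ pointwise, but by a lognormal CLT:

$$\phi_N^{(t)} = W\, p\left(y \mid \theta^{(t)}\right) \tag{2}$$

$$\mathbb{E}[W] = 1 \tag{3}$$

$$\log W \xrightarrow[N \to \infty]{d} \mathcal{N}\left(-\tfrac{1}{2}\sigma^2,\ \sigma^2\right) \tag{4}$$

when $x_1, \ldots, x_N$ are generated from a particle filter (Bérard, Del Moral & Doucet, 2014).

We can account for this noise by adding a nugget term to our GP:

$$-\log \hat{\phi}_N^{(j)} \sim \mathcal{N}\left(m_\beta(\theta) + \tfrac{\delta}{2},\ c_\gamma(\theta, \theta') + \delta I\right) \tag{5}$$

where $\left\{\theta^{(j)}, \phi_N^{(j)}\right\}_{j=1}^{J}$ are obtained from the precomputation step.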

Matt Moores Algorithms Seminar October 28, 2016 6 / 10](https://image.slidesharecdn.com/talk-alg-170124175307/85/Accelerating-Pseudo-Marginal-MCMC-using-Gaussian-Processes-6-320.jpg)
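Taking logs of (2) gives $\log \phi_N = \log p(y \mid \theta) + \log W$. By (4), $-\log \phi_N$ is therefore approximately normal with mean $-\log p(y \mid \theta) + \sigma^2/2$ and variance $\sigma^2$. This is why (5) shifts the mean by $\delta/2$ and adds $\delta I$, with $\delta$ playing the role of $\sigma^2$.

The following is a minimal NumPy sketch of fitting (5) to precomputed pairs. It is not the authors' implementation and rests on several assumptions:
- $\theta$ is scalar.
- $m_\beta$ is quadratic, with $\beta$ fitted by least squares.
- $c_\gamma$ is squared-exponential, with fixed hyperparameters.
- $\delta$ is known.

In practice $\beta$, $\gamma$ and $\delta$ would be estimated. The names `se_kernel`, `design`, `fit_gp_nugget`, `true_nll` and the toy data are hypothetical.

```python
import numpy as np

def se_kernel(a, b, amp=4.0, length=1.0):
    """Squared-exponential covariance c_gamma(theta, theta'), gamma = (amp, length) fixed (assumed)."""
    d = a[:, None] - b[None, :]
    return amp * np.exp(-0.5 * (d / length) ** 2)

def design(theta):
    """Basis for the assumed quadratic mean m_beta(theta) = b0 + b1*theta + b2*theta**2."""
    return np.column_stack([np.ones_like(theta), theta, theta ** 2])

def fit_gp_nugget(theta_j, z_j, delta):
    """Fit model (5) to z_j = -log phi_hat_N^(j); return a predictor of -log p(y | theta)."""
    H = design(theta_j)
    # Estimate beta by least squares after removing the delta/2 mean shift.
    beta, *_ = np.linalg.lstsq(H, z_j - delta / 2, rcond=None)
    resid = z_j - delta / 2 - H @ beta
    # GP covariance c_gamma plus the nugget term delta * I.
    K = se_kernel(theta_j, theta_j) + delta * np.eye(len(theta_j))
    L = np.linalg.cholesky(K)
    weights = np.linalg.solve(L.T, np.linalg.solve(L, resid))  # K^{-1} @ resid

    def predict(theta_new):
        theta_new = np.atleast_1d(np.asarray(theta_new, dtype=float))
        # Posterior mean of the noise-free surface -log p(y | theta).
        return design(theta_new) @ beta + se_kernel(theta_new, theta_j) @ weights

    return predict

# Toy precomputation step (hypothetical): J parameter values and noisy estimates.
rng = np.random.default_rng(1)
sigma2 = 0.5                       # variance of log W, treated as known: delta = sigma2
J = 400
theta_j = rng.uniform(-1.0, 3.0, size=J)

def true_nll(t):
    return 2.0 * (t - 1.0) ** 2    # toy -log p(y | theta)

log_w = rng.normal(-sigma2 / 2, np.sqrt(sigma2), size=J)  # log W as in (4), so E[W] = 1
z_j = true_nll(theta_j) - log_w    # -log(W p(y | theta)), from (2)

predict = fit_gp_nugget(theta_j, z_j, delta=sigma2)
grid = np.array([0.0, 1.0, 2.0])
print(predict(grid), true_nll(grid))  # GP prediction vs. the toy truth
```

Subtracting $\delta/2$ before fitting matters. Without it, every prediction of $-\log p(y \mid \theta)$ would be biased upward by half the noise variance.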

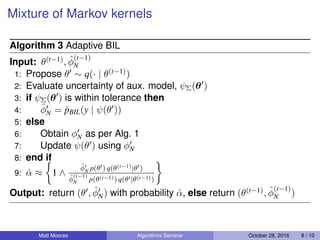

![Delayed Acceptance (DA)

Algorithm 2 BIL with DA

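Input: $\theta^{(t-1)} \in \Theta$, $\phi_N^{(t-1)} = \hat{p}\left(y \mid \theta^{(t-1)}\right)$

1: Propose $\theta' \sim q\left(\cdot \mid \theta^{(t-1)}\right)$

2: Calculate $\alpha_{\mathrm{BIL}} = 1 \wedge \dfrac{\hat{p}_{\mathrm{BIL}}\left(y \mid \psi(\theta')\right)\, p(\theta')\, q\left(\theta^{(t-1)} \mid \theta'\right)}{\hat{p}_{\mathrm{BIL}}\left(y \mid \psi(\theta^{(t-1)})\right)\, p\left(\theta^{(t-1)}\right)\, q\left(\theta' \mid \theta^{(t-1)}\right)}$

Output: return $\left(\theta^{(t-1)}, \phi_N^{(t-1)}\right)$ with probability $1 - \alpha_{\mathrm{BIL}}$, else

3: Obtain $\phi_N$ as per Alg. 1

4: Calculate $\alpha_{\mathrm{DA}} = 1 \wedge \dfrac{\phi_N\, \hat{p}_{\mathrm{BIL}}\left(y \mid \psi(\theta^{(t-1)})\right)}{\phi_N^{(t-1)}\, \hat{p}_{\mathrm{BIL}}\left(y \mid \psi(\theta')\right)}$

Output: return $(\theta', \phi_N)$ with probability $\alpha_{\mathrm{DA}}$, else return $\left(\theta^{(t-1)}, \phi_N^{(t-1)}\right)$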


Christen & Fox (JCGS, 2005)

Sherlock, Golightly & Henderson (arXiv:1509.00172 [stat.CO])](https://image.slidesharecdn.com/talk-alg-170124175307/85/Accelerating-Pseudo-Marginal-MCMC-using-Gaussian-Processes-7-320.jpg)
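Below is a minimal NumPy sketch of Algorithm 2 on a toy problem. It is not the authors' code and assumes the following:
- $\theta$ is scalar.
- The data follow $y_i \sim \mathcal{N}(\theta, 1)$, with a $\mathcal{N}(0, 10^2)$ prior.
- The proposal is a symmetric random walk.

Alg. 1 is not shown in these slides, so the hypothetical `log_phi_hat` stands in for it. It adds $\log W \sim \mathcal{N}(-\sigma^2/2, \sigma^2)$ to the exact log-likelihood, which gives $\mathbb{E}[W] = 1$. The hypothetical `log_p_bil` is a deliberately inexact cheap surrogate for $\hat{p}_{\mathrm{BIL}}$. All ratios are computed on the log scale to avoid underflow.

```python
import numpy as np

def da_step(theta_prev, log_phi_prev, rng, propose, log_q, log_prior, log_p_bil, log_phi_hat):
    """One iteration of BIL with delayed acceptance (Algorithm 2), on the log scale.

    log_q(a, b) = log q(a | b). Returns (theta, log_phi, whether the expensive estimator ran).
    """
    theta_new = propose(theta_prev, rng)  # step 1
    # Step 2: first-stage ratio uses only the cheap surrogate, the prior and the proposal.
    log_a_bil = (log_p_bil(theta_new) + log_prior(theta_new) + log_q(theta_prev, theta_new)
                 - log_p_bil(theta_prev) - log_prior(theta_prev) - log_q(theta_new, theta_prev))
    if np.log(rng.uniform()) >= min(0.0, log_a_bil):
        return theta_prev, log_phi_prev, False  # early rejection, probability 1 - alpha_BIL
    log_phi_new = log_phi_hat(theta_new, rng)  # step 3: the expensive estimate
    # Step 4: second-stage ratio corrects for the error in the surrogate.
    log_a_da = (log_phi_new + log_p_bil(theta_prev)
                - log_phi_prev - log_p_bil(theta_new))
    if np.log(rng.uniform()) < min(0.0, log_a_da):
        return theta_new, log_phi_new, True
    return theta_prev, log_phi_prev, True

# Toy problem (hypothetical data and model).
rng = np.random.default_rng(0)
y = rng.normal(1.5, 1.0, size=20)  # y_i ~ N(theta, 1)
sigma2 = 0.5                       # assumed variance of log W

def log_phi_hat(t, rng):
    # log p(y | theta) + log W with log W ~ N(-sigma2/2, sigma2), so E[W] = 1.
    return -0.5 * np.sum((y - t) ** 2) + rng.normal(-sigma2 / 2, np.sqrt(sigma2))

def log_p_bil(t):
    return -0.5 * np.sum((y - t) ** 2) / 1.2  # cheap, deliberately inexact surrogate

def log_prior(t):
    return -0.5 * t ** 2 / 100.0               # N(0, 10^2) prior, up to a constant

def propose(t, rng):
    return t + 0.5 * rng.normal()              # symmetric random walk

def log_q(a, b):
    return 0.0                                 # symmetric proposal: q terms cancel

theta, log_phi = 0.0, log_phi_hat(0.0, rng)
draws, n_expensive = [], 0
for _ in range(20000):
    theta, log_phi, ran = da_step(theta, log_phi, rng, propose, log_q,
                                  log_prior, log_p_bil, log_phi_hat)
    n_expensive += ran
    draws.append(theta)
draws = np.array(draws)
print(draws[2000:].mean(), n_expensive)
```

The expensive estimator runs only for proposals that survive the first stage, so `n_expensive` ends up below the number of iterations. The second-stage ratio cancels the surrogate terms, so the chain should still target the same posterior as the pseudo-marginal chain (Christen & Fox, 2005).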

![For Further Reading

C. C. Drovandi, M. Moores & R. Boys. Accelerating Pseudo-Marginal MCMC using Gaussian Processes. Tech. Rep., QLD Univ. of Tech., 2015.

M. Moores, C. C. Drovandi, K. Mengersen & C. P. Robert. Pre-processing for approximate Bayesian computation in image analysis. Statistics & Computing 25(1): 23–33, 2015.

C. C. Drovandi, A. N. Pettitt & A. Lee. Bayesian indirect inference using a parametric auxiliary model. Statist. Sci. 30(1): 72–95, 2015.

M. Moores, A. N. Pettitt & K. Mengersen. Scalable Bayesian inference for the inverse temperature of a hidden Potts model. arXiv:1503.08066 [stat.CO], 2015.

C. C. Drovandi, A. N. Pettitt & M. J. Faddy. Approximate Bayesian computation using indirect inference. J. R. Stat. Soc. Ser. C 60(3): 317–337, 2011.

](https://image.slidesharecdn.com/talk-alg-170124175307/85/Accelerating-Pseudo-Marginal-MCMC-using-Gaussian-Processes-10-320.jpg)