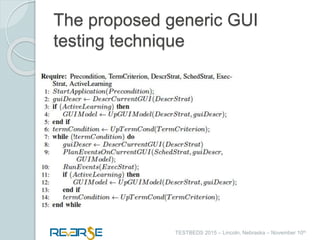

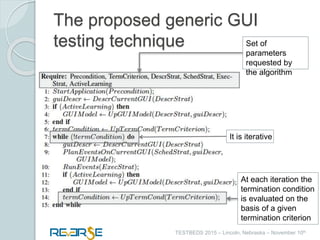

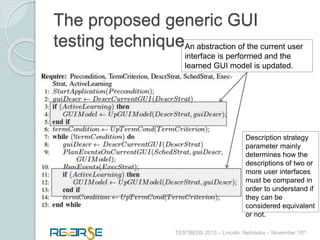

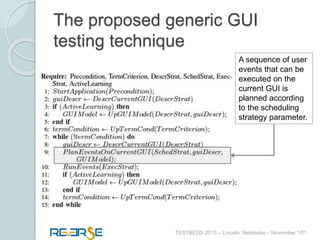

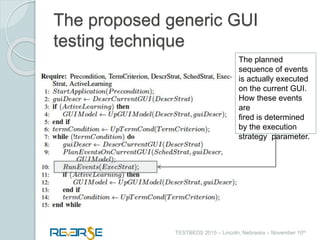

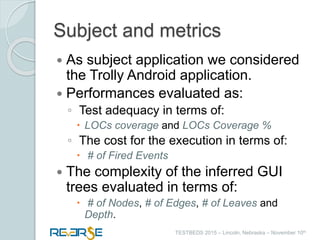

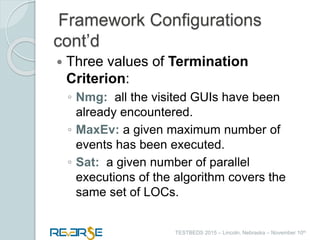

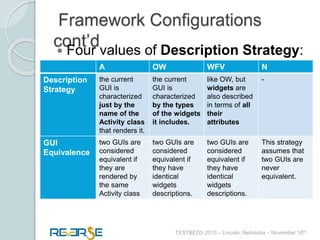

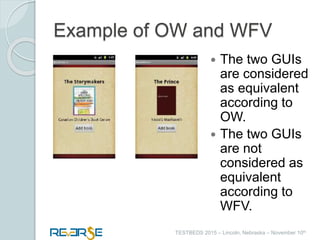

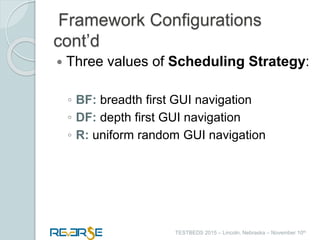

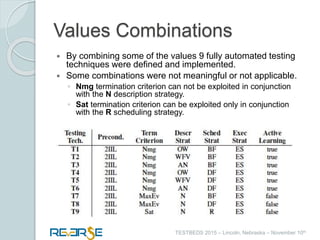

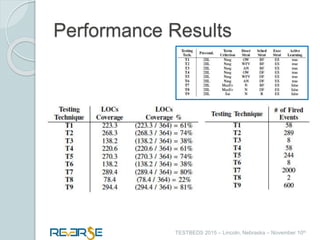

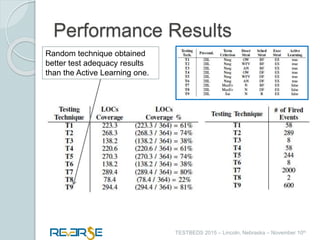

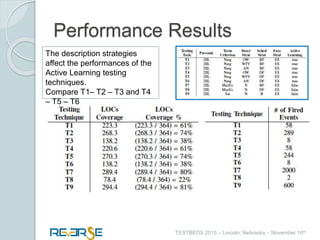

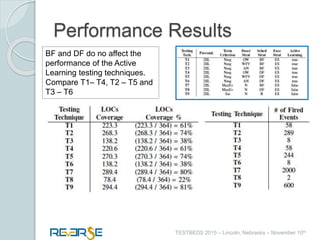

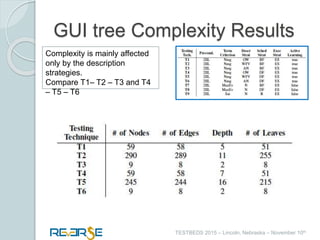

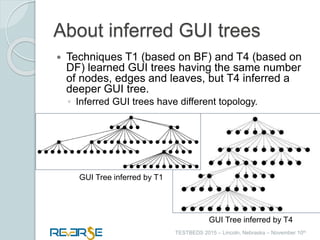

The document presents a conceptual framework for comparing fully automated GUI testing techniques, in particular dynamic (online) testing of Android applications. The framework classifies testing methods as either random or active learning techniques, evaluates how their performance depends on parameter choices, and proposes a generalized algorithm for comparing them systematically. It also describes experiments on the Trolly Android application that analyze factors such as test adequacy and complexity, and it highlights how the choice of description strategy influences testing outcomes.
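To make the comparison concrete, the sketch below models a generic online testing loop. At each step the loop observes the current screen, maps it to an abstract state through a pluggable *description* function, lets a pluggable *strategy* choose the next event, and executes that event. This is an illustrative sketch under stated assumptions, not the document's generalized algorithm. The toy app model (`TOY_APP`), the functions `run_online_test`, `random_strategy` and `least_tried_strategy`, and the use of covered transitions as the adequacy measure are all hypothetical. `least_tried_strategy` is a simple count-based exploration heuristic that stands in for a learning-based technique. It is not one of the active learning techniques the document evaluates.

```python
import random
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical toy app model: screen -> {event: next screen}. Not the Trolly app.
ToyApp = Dict[str, Dict[str, str]]
Strategy = Callable[[random.Random, str, List[str], Dict[Tuple[str, str], int]], str]


def random_strategy(rng, state, events, tried):
    """Uniform random choice among the events enabled on the current screen."""
    return rng.choice(events)


def least_tried_strategy(rng, state, events, tried):
    """Count-based stand-in for a learning strategy (hypothetical): pick an event
    tried least often from the current abstract state, breaking ties randomly."""
    counts = [tried.get((state, e), 0) for e in events]
    fewest = min(counts)
    return rng.choice([e for e, c in zip(events, counts) if c == fewest])


def run_online_test(app: ToyApp, start: str, describe: Callable[[str], str],
                    strategy: Strategy, budget: int, seed: int = 0) -> Set[Tuple[str, str]]:
    """Generic online loop: observe, describe (abstract), choose, execute.
    Returns the set of concrete (screen, event) transitions exercised."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    screen = start
    tried: Dict[Tuple[str, str], int] = {}
    covered: Set[Tuple[str, str]] = set()
    for _ in range(budget):
        state = describe(screen)        # description strategy decides which screens count as "the same"
        events = sorted(app[screen])    # events enabled on the current screen
        event = strategy(rng, state, events, tried)
        tried[(state, event)] = tried.get((state, event), 0) + 1
        covered.add((screen, event))    # adequacy: concrete transitions exercised
        screen = app[screen][event]     # execute the event on the simulated app
    return covered


TOY_APP: ToyApp = {
    "list": {"add": "add_item", "menu": "settings", "back": "list"},
    "add_item": {"save": "list", "cancel": "list"},
    "settings": {"back": "list", "toggle": "settings"},
}

if __name__ == "__main__":
    total = sum(len(evts) for evts in TOY_APP.values())
    for name, strat in [("random", random_strategy), ("least-tried", least_tried_strategy)]:
        for desc_name, describe in [("per-screen", lambda s: s), ("single-state", lambda s: "app")]:
            cov = run_online_test(TOY_APP, "list", describe, strat, budget=20)
            print(f"{name:12s} {desc_name:12s} {len(cov)}/{total} transitions")
```

Keeping the description function separate from the strategy lets the same loop compare random and learning-style exploration under different abstractions. For example, a per-screen description and a single merged state give the count-based heuristic different information to work with.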