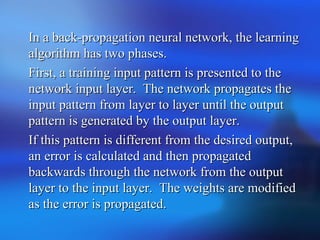

This document discusses artificial neural networks, specifically multilayer perceptrons (MLPs). It provides the following information:

- MLPs are feedforward neural networks with one or more hidden layers between the input and output layers. The input signals are propagated in a forward direction through each layer.

- Backpropagation is a common learning algorithm for MLPs. It calculates error signals that are propagated backward from the output to the input layers to adjust the weights, reducing errors between the actual and desired outputs.

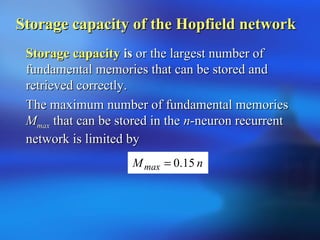

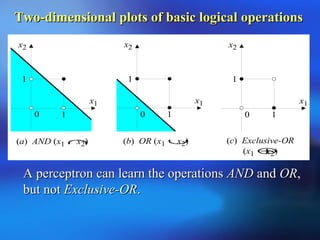

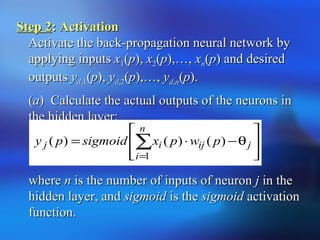

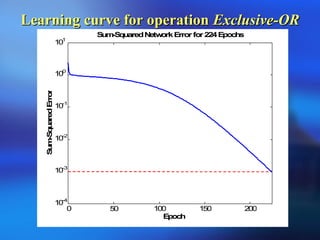

- A three-layer backpropagation network is presented as an example that solves the exclusive OR (XOR) logic problem, which a single-layer perceptron cannot solve. Initial weights and thresholds are set randomly.

![Step 3: Weight training](https://image.slidesharecdn.com/6-130916074850-phpapp02/85/6-13-320.jpg)

Step 3: Weight training

Update the weights in the back-propagation network, propagating backward the errors associated with output neurons.

(a) Calculate the error gradient for the neurons in the output layer:

$$\delta_k(p) = y_k(p) \cdot \left[ 1 - y_k(p) \right] \cdot e_k(p)$$

where

$$e_k(p) = y_{d,k}(p) - y_k(p)$$

Calculate the weight corrections:

$$\Delta w_{jk}(p) = \alpha \cdot y_j(p) \cdot \delta_k(p)$$

Update the weights at the output neurons:

$$w_{jk}(p+1) = w_{jk}(p) + \Delta w_{jk}(p)$$
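The output-layer update in Step 3(a) can be sketched in a few lines of plain Python. This is a minimal illustration, not the slides' own code: the function name `output_layer_update`, the list-of-lists weight layout, and the sample numbers are all assumptions chosen for the example. The factor `y_k * (1 - y_k)` in the error gradient presumes a sigmoid activation.

```python
def output_layer_update(y_hidden, y_out, y_desired, w_out, alpha):
    """Apply one output-layer update (hypothetical helper).

    w_out[j][k] is the weight from hidden neuron j to output neuron k.
    Returns (deltas, new_w_out) without modifying the inputs.
    """
    # Error gradient: delta_k = y_k * (1 - y_k) * e_k, where e_k = y_dk - y_k
    deltas = [y_k * (1.0 - y_k) * (y_dk - y_k)
              for y_k, y_dk in zip(y_out, y_desired)]
    # Weight correction alpha * y_j * delta_k, added to the current weight
    new_w_out = [[w + alpha * y_j * d_k for w, d_k in zip(row, deltas)]
                 for y_j, row in zip(y_hidden, w_out)]
    return deltas, new_w_out


# Illustrative values only (not taken from the slides).
deltas, new_w_out = output_layer_update(
    y_hidden=[0.5, 1.0], y_out=[0.5], y_desired=[1.0],
    w_out=[[0.2], [0.4]], alpha=0.1)
```

Returning new lists rather than updating in place keeps the old weights available, which matters because the hidden-layer gradient is computed from the weights at iteration p.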

![Step 3: Weight training (continued)](https://image.slidesharecdn.com/6-130916074850-phpapp02/85/6-14-320.jpg)

Step 3: Weight training (continued)

(b) Calculate the error gradient for the neurons in the hidden layer:

$$\delta_j(p) = y_j(p) \cdot \left[ 1 - y_j(p) \right] \cdot \sum_{k=1}^{l} \delta_k(p)\, w_{jk}(p)$$

Calculate the weight corrections:

$$\Delta w_{ij}(p) = \alpha \cdot x_i(p) \cdot \delta_j(p)$$

Update the weights at the hidden neurons:

$$w_{ij}(p+1) = w_{ij}(p) + \Delta w_{ij}(p)$$
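The hidden-layer update in Step 3(b) can be sketched the same way. As before, this is only an illustration under stated assumptions: `hidden_layer_update`, the weight layout, and the sample numbers are hypothetical, and a sigmoid activation is assumed.

```python
def hidden_layer_update(x, y_hidden, deltas_out, w_out, w_in, alpha):
    """Apply one hidden-layer update (hypothetical helper).

    w_in[i][j]  is the weight from input i to hidden neuron j.
    w_out[j][k] is the weight from hidden neuron j to output neuron k,
    taken at iteration p (before the output-layer update is applied).
    """
    # delta_j = y_j * (1 - y_j) * sum_k delta_k * w_jk
    deltas_hidden = [
        y_j * (1.0 - y_j) * sum(d_k * w_jk for d_k, w_jk in zip(deltas_out, row))
        for y_j, row in zip(y_hidden, w_out)
    ]
    # Weight correction alpha * x_i * delta_j, added to the current weight
    new_w_in = [[w + alpha * x_i * d_j for w, d_j in zip(row, deltas_hidden)]
                for x_i, row in zip(x, w_in)]
    return deltas_hidden, new_w_in


# Illustrative values only (not taken from the slides).
deltas_hidden, new_w_in = hidden_layer_update(
    x=[1.0, 0.0], y_hidden=[0.5], deltas_out=[0.125],
    w_out=[[0.4]], w_in=[[0.3], [0.7]], alpha=0.1)
```

Note that the weight from an input equal to 0 is left unchanged, since its correction is proportional to that input.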

![Worked example: actual outputs and error for the first training pattern](https://image.slidesharecdn.com/6-130916074850-phpapp02/85/6-18-320.jpg)

We consider a training set where inputs $x_1$ and $x_2$ are equal to 1 and desired output $y_{d,5}$ is 0. The actual outputs of neurons 3 and 4 in the hidden layer are calculated as

$$y_3 = \mathrm{sigmoid}(x_1 w_{13} + x_2 w_{23} - \theta_3) = \frac{1}{1 + e^{-(1 \cdot 0.5 + 1 \cdot 0.4 - 1 \cdot 0.8)}} = 0.5250$$

$$y_4 = \mathrm{sigmoid}(x_1 w_{14} + x_2 w_{24} - \theta_4) = \frac{1}{1 + e^{-(1 \cdot 0.9 + 1 \cdot 1.0 + 1 \cdot 0.1)}} = 0.8808$$

Now the actual output of neuron 5 in the output layer is determined as:

$$y_5 = \mathrm{sigmoid}(y_3 w_{35} + y_4 w_{45} - \theta_5) = \frac{1}{1 + e^{-(-0.5250 \cdot 1.2 + 0.8808 \cdot 1.1 - 1 \cdot 0.3)}} = 0.5097$$

Thus, the following error is obtained:

$$e = y_{d,5} - y_5 = 0 - 0.5097 = -0.5097$$
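The arithmetic on this slide can be checked with a short forward pass. The sketch below is not part of the slides; the weight and threshold values are the ones read off the exponents in the slide's equations (so $\theta_4 = -0.1$ and $w_{35} = -1.2$ are inferred from the signs shown there), and the variable names are chosen for illustration.

```python
import math


def sigmoid(x):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-x))


# Inputs and desired output for the training pattern on the slide.
x1, x2, y_d5 = 1.0, 1.0, 0.0

# Weights and thresholds as read from the slide's arithmetic (assumed values).
w13, w23, theta3 = 0.5, 0.4, 0.8
w14, w24, theta4 = 0.9, 1.0, -0.1
w35, w45, theta5 = -1.2, 1.1, 0.3

# Hidden layer (neurons 3 and 4), then output layer (neuron 5).
y3 = sigmoid(x1 * w13 + x2 * w23 - theta3)
y4 = sigmoid(x1 * w14 + x2 * w24 - theta4)
y5 = sigmoid(y3 * w35 + y4 * w45 - theta5)

# Error between desired and actual output.
e = y_d5 - y5

print(f"{y3:.4f} {y4:.4f} {y5:.4f} {e:.4f}")
# prints: 0.5250 0.8808 0.5097 -0.5097
```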

![Stable state-vertex and the two memorised states](https://image.slidesharecdn.com/6-130916074850-phpapp02/85/6-41-320.jpg)

The stable state-vertex is determined by the weight matrix $\mathbf{W}$, the current input vector $\mathbf{X}$, and the threshold matrix $\boldsymbol{\theta}$. If the input vector is partially incorrect or incomplete, the initial state will converge into the stable state-vertex after a few iterations.

Suppose, for instance, that our network is required to memorise two opposite states, $(1, 1, 1)$ and $(-1, -1, -1)$. Thus,

$$\mathbf{Y}_1 = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix}, \qquad \mathbf{Y}_2 = \begin{bmatrix} -1 \\ -1 \\ -1 \end{bmatrix}$$

or

$$\mathbf{Y}_1^T = \begin{bmatrix} 1 & 1 & 1 \end{bmatrix}, \qquad \mathbf{Y}_2^T = \begin{bmatrix} -1 & -1 & -1 \end{bmatrix}$$

where $\mathbf{Y}_1$ and $\mathbf{Y}_2$ are the three-dimensional vectors.

![Weight matrix for the two memorised states](https://image.slidesharecdn.com/6-130916074850-phpapp02/85/6-42-320.jpg)

The $3 \times 3$ identity matrix $\mathbf{I}$ is

$$\mathbf{I} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

Thus, we can now determine the weight matrix as follows:

$$\mathbf{W} = \begin{bmatrix} 1 \\ 1 \\ 1 \end{bmatrix} \begin{bmatrix} 1 & 1 & 1 \end{bmatrix} + \begin{bmatrix} -1 \\ -1 \\ -1 \end{bmatrix} \begin{bmatrix} -1 & -1 & -1 \end{bmatrix} - 2 \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} = \begin{bmatrix} 0 & 2 & 2 \\ 2 & 0 & 2 \\ 2 & 2 & 0 \end{bmatrix}$$

Next, the network is tested by the sequence of input vectors, $\mathbf{X}_1$ and $\mathbf{X}_2$, which are equal to the output (or target) vectors $\mathbf{Y}_1$ and $\mathbf{Y}_2$, respectively.
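The weight matrix above, and the test with the stored vectors, can be reproduced with a small plain-Python sketch. The helper names `hopfield_weights` and `recall_step` are hypothetical. The recall step also makes two assumptions that the slide does not state: all thresholds are zero, and a neuron keeps its previous state when its net input is exactly zero.

```python
def hopfield_weights(patterns):
    """Sum of outer products Y_m * Y_m^T minus M * I, for M stored patterns."""
    n = len(patterns[0])
    m = len(patterns)
    w = [[0] * n for _ in range(n)]
    for y in patterns:
        for i in range(n):
            for j in range(n):
                w[i][j] += y[i] * y[j]
    # Subtracting M * I zeroes the diagonal (no self-connections).
    for i in range(n):
        w[i][i] -= m
    return w


def recall_step(w, x):
    """One synchronous update with a sign activation.

    Assumptions: zero thresholds; state is kept when the net input is 0.
    """
    net = [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]
    return [1 if v > 0 else -1 if v < 0 else x_i for v, x_i in zip(net, x)]


Y1 = [1, 1, 1]
Y2 = [-1, -1, -1]
W = hopfield_weights([Y1, Y2])
print(W)                   # [[0, 2, 2], [2, 0, 2], [2, 2, 0]]
print(recall_step(W, Y1))  # [1, 1, 1]
print(recall_step(W, Y2))  # [-1, -1, -1]
```

Both stored vectors map to themselves in a single step, which is what it means for them to be stable states under these assumptions.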