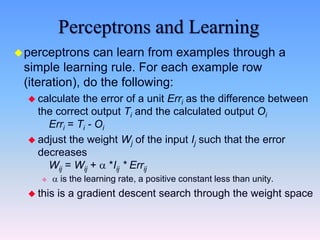

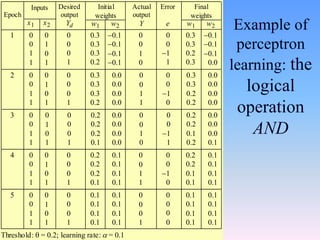

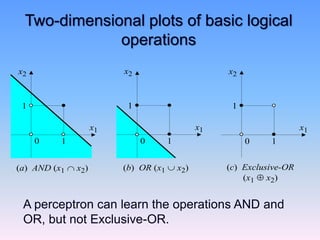

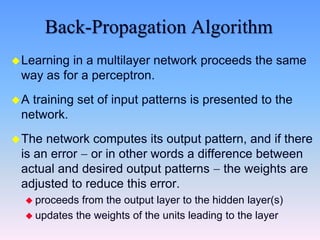

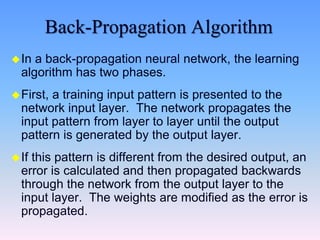

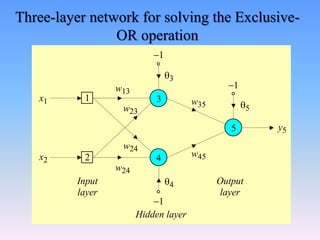

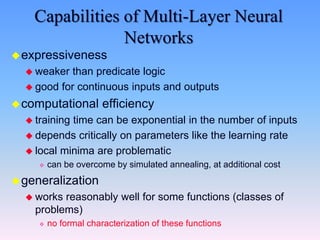

This document discusses neural networks and their learning capabilities. It describes how neural networks are composed of simple interconnected elements that learn patterns from examples through training. Perceptrons are introduced as single-layer neural networks that can learn linearly separable functions through a simple learning rule. Multi-layer networks are shown to be more capable learners than perceptrons; they are trained with backpropagation, an algorithm that propagates errors backward through the network to update the weights. Applications of neural networks include pattern recognition, control problems, and time series prediction.

![Artificial Neuron (Perceptron) Diagram

weighted inputs are summed up by the input function

the (nonlinear) activation function calculates the activation value, which determines the output

[Russell & Norvig, 1995]](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-4-320.jpg)
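The neuron in the diagram can be sketched in a few lines of Python. This is a minimal illustration rather than code from the slides: the names `neuron_output` and `sigmoid` are hypothetical, and any threshold is assumed to live inside the activation function instead of being supplied as a separate bias input.

```python
import math
from typing import Callable, Sequence


def neuron_output(
    inputs: Sequence[float],
    weights: Sequence[float],
    activation: Callable[[float], float],
) -> float:
    """Compute one artificial neuron's output (illustrative sketch)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Input function: sum of the weighted inputs.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # Activation function: maps the weighted sum to the output value.
    return activation(weighted_sum)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


print(neuron_output([1.0, 0.0, 1.0], [0.5, -0.3, 0.2], sigmoid))
```

Passing the activation in as a function makes it easy to swap the sigmoid for a step or sign function without changing the input function.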

![Common Activation Functions

Step_t(x) = 1 if x >= t, else 0

Sign(x) = +1 if x >= 0, else -1

Sigmoid(x) = 1/(1 + e^(-x))

[Russell & Norvig, 1995]](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-5-320.jpg)
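The three activation functions listed above translate directly into Python. In this sketch (function names are illustrative, not from the source), the sigmoid branches on the sign of `x` so that `math.exp` never receives a large positive argument; this is a common way to avoid overflow for very negative inputs and does not change the function's value.

```python
import math


def step(x: float, t: float = 0.0) -> int:
    """Step_t(x): 1 if x >= t, else 0."""
    return 1 if x >= t else 0


def sign(x: float) -> int:
    """Sign(x): +1 if x >= 0, else -1."""
    return 1 if x >= 0 else -1


def sigmoid(x: float) -> float:
    """Sigmoid(x) = 1 / (1 + e^(-x)), computed without overflowing math.exp."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z is in (0, 1)
    return z / (1.0 + z)  # algebraically equal to 1 / (1 + e^(-x))


for x in (-2.0, 0.0, 2.0):
    print(x, step(x, t=1.0), sign(x), round(sigmoid(x), 3))
```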

![Neural Networks and Logic Gates

simple neurons can act as logic gates

appropriate choice of activation function, threshold, and weights

step function as activation function

[Russell & Norvig, 1995]](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-6-320.jpg)
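As an illustration of neurons acting as logic gates, the sketch below uses a step activation with hand-chosen weights and thresholds. The particular values (weights of 1 with thresholds 1.5 and 0.5 for AND and OR, a weight of -1 with threshold -0.5 for NOT) are one valid choice among many and are assumed here rather than read off the slide.

```python
from typing import Sequence


def step(x: float, t: float) -> int:
    """Step_t(x): 1 if x >= t, else 0."""
    return 1 if x >= t else 0


def threshold_unit(inputs: Sequence[int], weights: Sequence[float], t: float) -> int:
    """One neuron: weighted sum of the inputs passed through a step activation."""
    return step(sum(x * w for x, w in zip(inputs, weights)), t)


# Hand-picked weights and thresholds (one valid choice among many).
def and_gate(a: int, b: int) -> int:
    return threshold_unit((a, b), (1.0, 1.0), t=1.5)


def or_gate(a: int, b: int) -> int:
    return threshold_unit((a, b), (1.0, 1.0), t=0.5)


def not_gate(a: int) -> int:
    return threshold_unit((a,), (-1.0,), t=-0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

AND and OR share the same weights and differ only in threshold: AND fires only when both inputs contribute, OR when either one does.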

![Perceptrons

single layer, feed-forward network

historically one of the first types of neural networks

late 1950s

the output is calculated as a step function applied to the weighted sum of inputs

capable of learning simple functions

linearly separable

[Russell & Norvig, 1995]](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-8-320.jpg)
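A single-layer, feed-forward perceptron network can be read as several step units that see the same inputs, each with its own weights and threshold and no connections between them. The sketch below assumes two output units configured as AND and OR; `perceptron_layer` and the weight values are hypothetical.

```python
from typing import List, Sequence


def perceptron_layer(
    inputs: Sequence[float],
    weight_rows: Sequence[Sequence[float]],
    thresholds: Sequence[float],
) -> List[int]:
    """Single-layer, feed-forward perceptron network (illustrative sketch).

    Output unit j computes Step_t(sum_i w_ji * x_i) independently of the
    other units; inputs feed straight to the outputs with no hidden layer.
    """
    outputs = []
    for weights, t in zip(weight_rows, thresholds):
        weighted_sum = sum(w * x for w, x in zip(weights, inputs))
        outputs.append(1 if weighted_sum >= t else 0)
    return outputs


# Two output units sharing the same two inputs: unit 0 acts as AND, unit 1 as OR.
W = [[1.0, 1.0], [1.0, 1.0]]
T = [1.5, 0.5]
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, perceptron_layer(x, W, T))
```

Because the output units never interact, each one can only draw its own single line through the input space, which is the source of the linear-separability limit.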

![[Russell & Norvig, 1995]

Perceptrons and Linear Separability

perceptrons can deal with linearly separable functions

some simple functions are not linearly separable

XOR function

Figure: the inputs (0,0), (0,1), (1,0), (1,1) plotted for AND and for XOR](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-9-320.jpg)
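The XOR case can also be settled algebraically. Assume a two-input perceptron with weights w_1, w_2 and the step activation Step_t, so it outputs 1 exactly when w_1 x_1 + w_2 x_2 >= t. Requiring the four XOR input/output pairs gives:

```latex
\begin{aligned}
(0,0) \mapsto 0:&\quad 0 < t\\
(0,1) \mapsto 1:&\quad w_2 \ge t\\
(1,0) \mapsto 1:&\quad w_1 \ge t\\
(1,1) \mapsto 0:&\quad w_1 + w_2 < t
\end{aligned}
```

The first three lines give t > 0 and w_1 + w_2 >= 2t > t, which contradicts the fourth, so no choice of weights and threshold works. For AND the corresponding constraints are satisfiable, for example with w_1 = w_2 = 1 and t = 1.5.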

![Perceptrons and Linear Separability

linear separability can be extended to more than two dimensions

more difficult to visualize

[Russell & Norvig, 1995]](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-10-320.jpg)
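In n dimensions the separating line becomes a hyperplane w · x = t. Even when it cannot be drawn, it can be checked: the sketch below tests whether a given candidate (w, t) reproduces a Boolean function on every corner of the n-dimensional unit cube. It does not search for weights, and the helper `separates` and the example functions are hypothetical.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

BooleanFunction = Callable[[Tuple[int, ...]], int]


def separates(target: BooleanFunction, weights: Sequence[float], t: float) -> bool:
    """Check whether the hyperplane w . x = t reproduces `target` on every corner
    of the n-dimensional unit cube (output 1 on or above it, 0 below it)."""
    for x in product((0, 1), repeat=len(weights)):
        output = 1 if sum(w * xi for w, xi in zip(weights, x)) >= t else 0
        if output != target(x):
            return False
    return True


def and_n(x: Tuple[int, ...]) -> int:
    """n-input AND: 1 only if every input is 1."""
    return int(all(x))


def majority(x: Tuple[int, ...]) -> int:
    """1 if strictly more than half of the inputs are 1."""
    return int(sum(x) > len(x) / 2)


n = 4  # four inputs: a 4-dimensional space, hard to draw but easy to check
print(separates(and_n, [1.0] * n, t=n - 0.5))         # prints True
print(separates(majority, [1.0] * n, t=n / 2 + 0.5))  # prints True
```

A `False` result only means that this particular candidate fails; showing that no hyperplane exists (as for XOR) needs an argument over all possible weights, not a single check.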

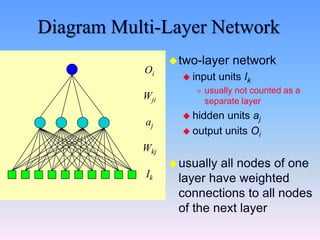

![How does the perceptron learn its classification tasks?

This is done by making small adjustments in the weights to reduce the difference between the actual and desired outputs of the perceptron.

The initial weights are randomly assigned, usually in the range [-0.5, 0.5] or [0, 1].

Then they are updated to obtain the output consistent with the training examples.](https://image.slidesharecdn.com/19learning-230719140235-e304c753/85/19_Learning-ppt-11-320.jpg)
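The slide describes the learning process only informally. The sketch below fills it in with the standard perceptron learning rule, w_i ← w_i + α · x_i · e with error e = desired - actual; that formula is assumed here, since the slide gives none. It also assumes the threshold is learned like a weight on a constant input of -1. The learning rate of 0.1, the epoch limit, the seed, and the names `train_perceptron` and `predict` are illustrative choices.

```python
import random
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[int], int]  # (inputs, desired output)


def predict(weights: Sequence[float], threshold: float, x: Sequence[int]) -> int:
    """Step activation applied to the weighted sum of the inputs."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) >= threshold else 0


def train_perceptron(
    examples: Sequence[Example],
    learning_rate: float = 0.1,  # assumed value
    max_epochs: int = 1000,      # assumed safety limit
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Perceptron learning rule (a sketch, not the slide's own code)."""
    rng = random.Random(seed)
    n = len(examples[0][0])
    # Initial weights and threshold drawn at random from [-0.5, 0.5].
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    threshold = rng.uniform(-0.5, 0.5)

    for _ in range(max_epochs):
        mistakes = 0
        for x, desired in examples:
            error = desired - predict(weights, threshold, x)  # e = desired - actual
            if error != 0:
                mistakes += 1
                # Small adjustment that moves the output toward the desired value.
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                # Threshold treated as a weight on a constant input of -1 (assumption).
                threshold -= learning_rate * error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, threshold


AND_EXAMPLES: List[Example] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, threshold = train_perceptron(AND_EXAMPLES)
for x, desired in AND_EXAMPLES:
    print(x, "desired:", desired, "actual:", predict(weights, threshold, x))
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that the loop stops after finitely many updates. On XOR the same code would run until `max_epochs` without ever classifying every example correctly.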