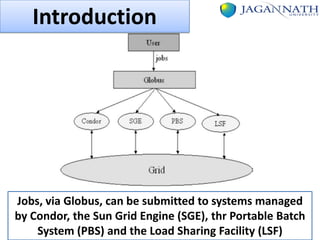

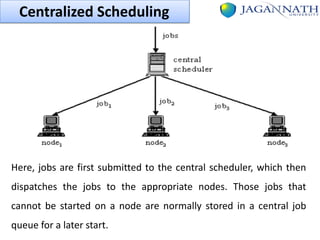

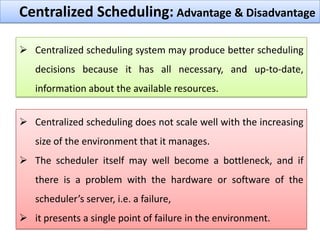

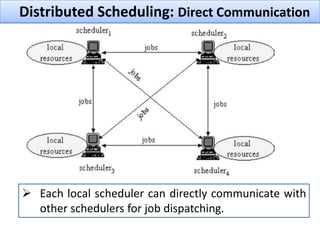

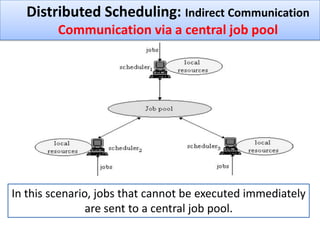

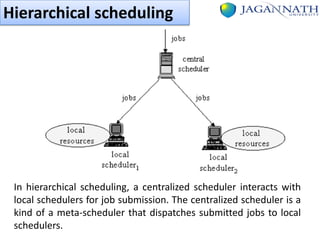

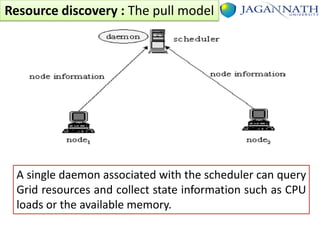

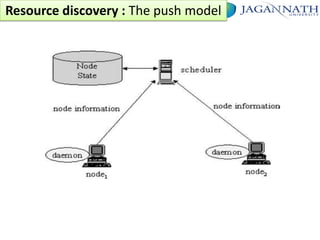

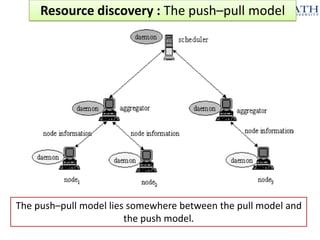

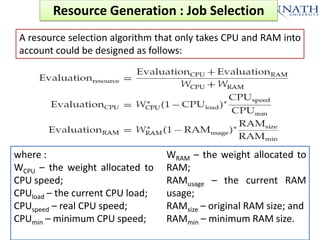

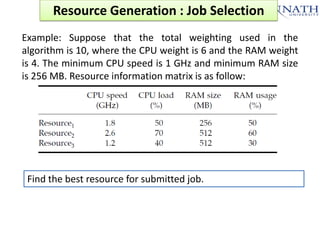

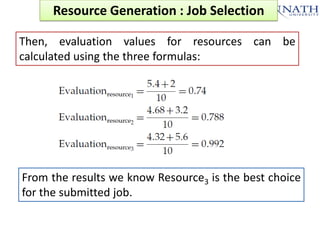

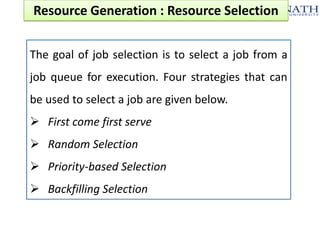

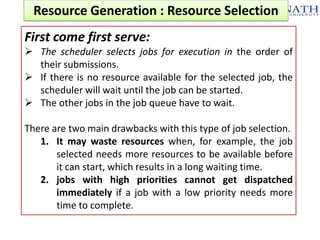

The document discusses grid computing and its main scheduling paradigms (centralized, hierarchical, and distributed), detailing how jobs are scheduled across multiple resources. It outlines four stages of scheduling (resource discovery, resource selection, schedule generation, and job execution), with an emphasis on strategies for efficient resource utilization. It also covers the advantages and disadvantages of each paradigm and several job selection strategies: first-come, first-served (FCFS), priority-based selection, and backfilling.
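The three job selection strategies can be compared with a small sketch. It is written under several assumptions that the summary does not specify. Each job requests a number of identical nodes and has a user-supplied runtime estimate. A larger `priority` value means more urgent. Backfilling is shown as the EASY variant, one common form of backfilling. The slides may describe a different variant. The names `Job`, `fcfs`, `priority_select`, and `easy_backfill` are hypothetical and only illustrate the idea. They are not taken from any particular scheduler.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    arrival: int   # submission time
    priority: int  # assumption: a larger value means more urgent
    nodes: int     # number of nodes (resources) requested
    runtime: int   # user-supplied runtime estimate (assumed accurate)


def _start_in_order(ordered, free_nodes):
    """Start jobs strictly in the given order; stop at the first one that does not fit."""
    started = []
    for job in ordered:
        if job.nodes > free_nodes:
            break  # strict ordering: later jobs may not overtake a blocked job
        started.append(job.name)
        free_nodes -= job.nodes
    return started


def fcfs(queue, free_nodes):
    """First-come, first-served: order by arrival time."""
    return _start_in_order(sorted(queue, key=lambda j: j.arrival), free_nodes)


def priority_select(queue, free_nodes):
    """Priority-based: highest priority first, ties broken by arrival time."""
    return _start_in_order(sorted(queue, key=lambda j: (-j.priority, j.arrival)), free_nodes)


def easy_backfill(queue, free_nodes, running, now=0):
    """EASY-style backfilling over an FCFS queue (hypothetical sketch).

    running: list of (end_time, nodes) pairs for jobs already executing.
    """
    ordered = sorted(queue, key=lambda j: j.arrival)
    running = list(running)
    started = []

    # 1. Start jobs in FCFS order while they fit.
    i = 0
    while i < len(ordered) and ordered[i].nodes <= free_nodes:
        job = ordered[i]
        started.append(job.name)
        free_nodes -= job.nodes
        running.append((now + job.runtime, job.nodes))
        i += 1
    if i == len(ordered):
        return started

    # 2. Reserve nodes for the blocked head job: find the "shadow time" at which
    #    enough nodes become free, and how many "extra" nodes remain at that time.
    head = ordered[i]
    avail = free_nodes
    shadow, extra = None, 0
    for end, nodes in sorted(running):
        avail += nodes
        if avail >= head.nodes:
            shadow, extra = end, avail - head.nodes
            break
    if shadow is None:
        return started  # head can never fit, so no reservation is possible

    # 3. Backfill later jobs that fit now and do not delay the head job.
    for job in ordered[i + 1:]:
        if job.nodes > free_nodes:
            continue
        ends_before_shadow = now + job.runtime <= shadow
        if ends_before_shadow or job.nodes <= extra:
            started.append(job.name)
            free_nodes -= job.nodes
            if not ends_before_shadow:
                extra -= job.nodes  # these nodes are still busy at the shadow time
    return started


# Usage: 4 nodes are free, and a running 6-node job finishes at t=10.
queue = [Job("A", 0, 1, 8, 5), Job("B", 1, 5, 2, 20), Job("C", 2, 1, 2, 5)]
print(fcfs(queue, 4))                              # []
print(priority_select(queue, 4))                   # ['B']
print(easy_backfill(queue, 4, running=[(10, 6)]))  # ['B', 'C']
```

In this example, strict FCFS starts nothing, because job A needs 8 nodes and blocks the queue. Priority-based selection starts the urgent job B first. Backfilling reserves nodes for A at t=10. It then fills the idle nodes with B, which uses only nodes A will not need, and with C, which finishes before t=10. In both cases A is not delayed. This is the utilization gain that backfilling trades against the need for accurate runtime estimates.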