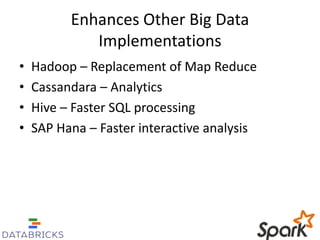

The document outlines ten notable features of Apache Spark, emphasizing its speed, its open-source nature, and its strong integration with major data ecosystems. It also highlights Spark's scalable performance, the stability of its essential APIs, its suitability for enterprise use, and its integration with security tooling. Finally, it points to events and resources for learning about Apache Spark.