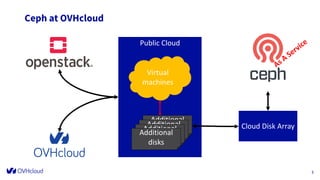

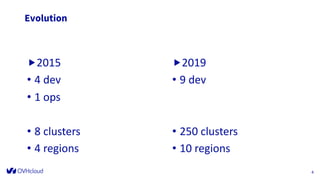

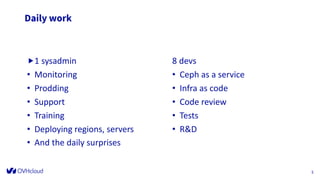

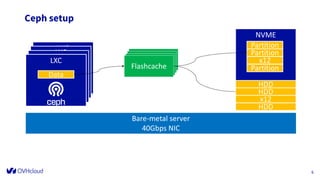

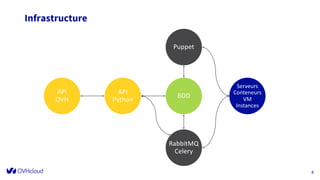

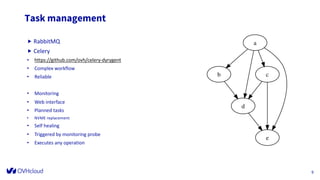

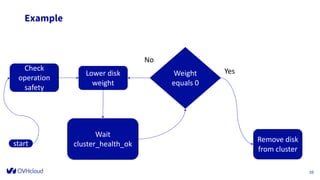

The document outlines how OVHcloud manages its infrastructure, which grew from 4 clusters in 2015 to 250 clusters by 2019 while being managed by a single sysadmin. It describes the technologies and processes involved, including Ceph as a service for storage and the automation of infrastructure management with tools such as RabbitMQ and continuous delivery practices. It also covers the metrics monitoring and logging used to keep operations efficient.