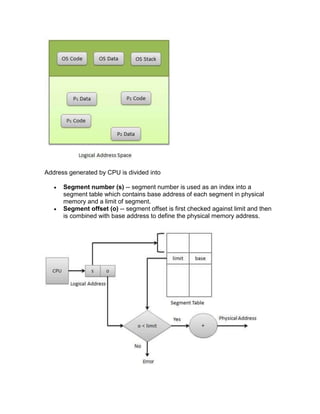

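Memory management allocates memory to processes and tracks which regions are in use and which are free. Common techniques include paging, segmentation, and dynamic allocation from a heap. Paging divides the logical address space into fixed-size pages and maps each one to a physical frame; because any free frame can hold any page, it avoids external fragmentation, though the last page of a process may be only partly used (internal fragmentation). Segmentation divides memory into logical segments of varying sizes, such as code, data, and stack, which can reintroduce external fragmentation. Dynamic allocation serves requests from the heap by tracking free blocks. The allocator tries to limit fragmentation, while memory leaks arise when allocated blocks are never released.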
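As a rough illustration of two of these ideas, the sketch below shows a page-table lookup and a toy first-fit heap allocator. It is a simplified model, not how any particular operating system implements them: the 4096-byte `PAGE_SIZE`, the dictionary-based page table, and the names `translate` and `FirstFitHeap` are all assumptions made for this example.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (illustrative only)


def translate(logical_addr, page_table):
    """Map a logical address to a physical address.

    page_table is a hypothetical dict of page number -> frame number.
    """
    page, offset = divmod(logical_addr, PAGE_SIZE)
    if page not in page_table:
        raise LookupError(f"page fault: page {page} is not mapped")
    return page_table[page] * PAGE_SIZE + offset


class FirstFitHeap:
    """Toy heap that tracks free blocks as (start, size) pairs."""

    def __init__(self, size):
        self.free = [(0, size)]  # free blocks, kept sorted by start address
        self.used = {}           # start -> size of each live allocation

    def alloc(self, size):
        """Return the start of the first free block that fits, or None."""
        for i, (start, block_size) in enumerate(self.free):
            if block_size >= size:
                if block_size == size:
                    del self.free[i]
                else:
                    # Carve the request off the front of the block.
                    self.free[i] = (start + size, block_size - size)
                self.used[start] = size
                return start
        return None  # no single free block is large enough

    def release(self, start):
        """Free an allocation and merge adjacent free blocks."""
        size = self.used.pop(start)
        self.free.append((start, size))
        self.free.sort()
        merged = []
        for s, n in self.free:
            if merged and merged[-1][0] + merged[-1][1] == s:
                merged[-1] = (merged[-1][0], merged[-1][1] + n)
            else:
                merged.append((s, n))
        self.free = merged
```

In this model, allocating three 30-byte blocks from a 100-byte heap and then releasing the first and third leaves 70 bytes free in total, yet a 50-byte request fails because no single free block is large enough. That is external fragmentation. Releasing the middle block lets the free blocks merge, and the same request then succeeds. A block that is never passed to `release` stays in `used` indefinitely, which is how a leak appears in this sketch.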