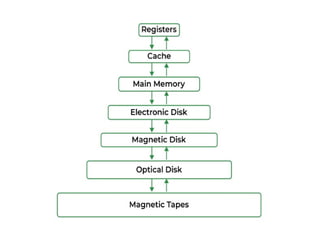

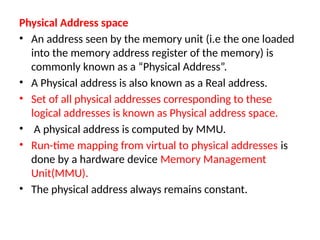

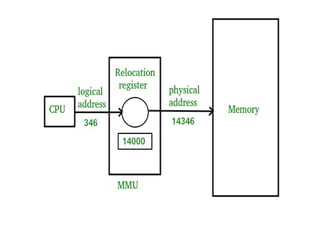

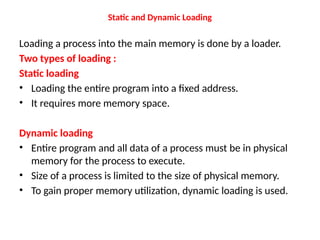

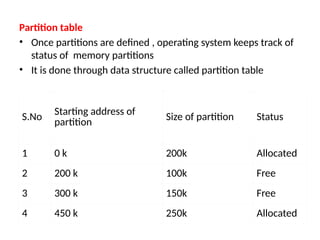

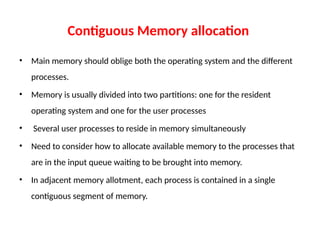

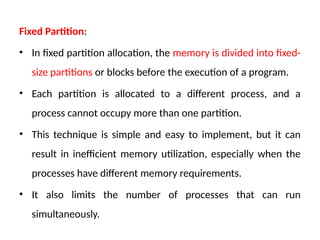

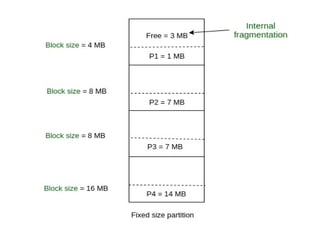

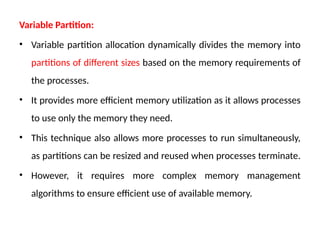

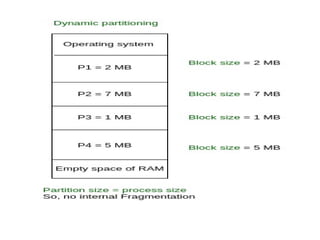

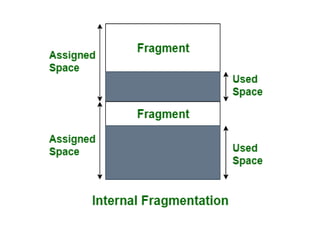

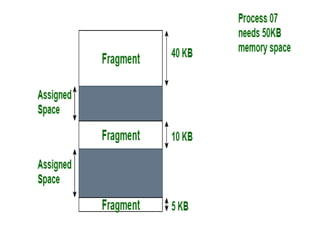

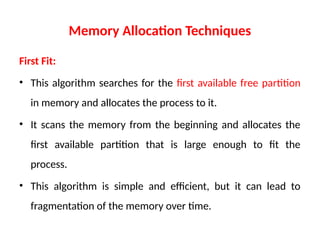

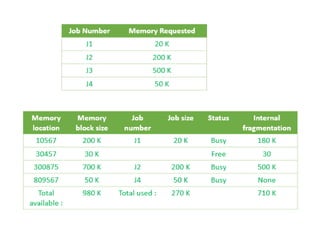

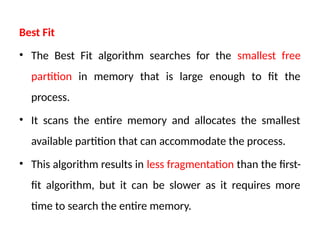

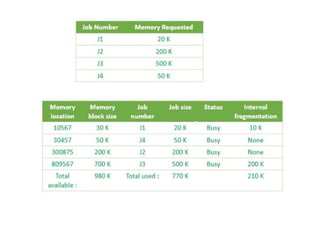

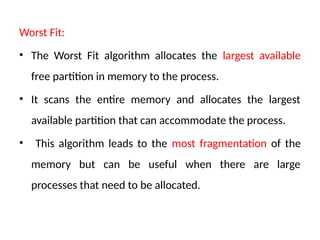

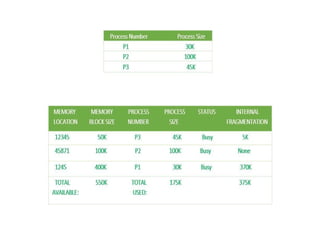

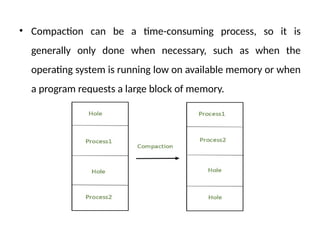

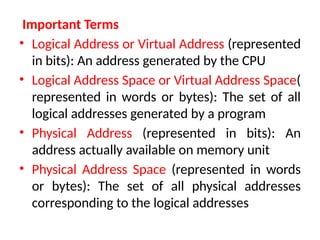

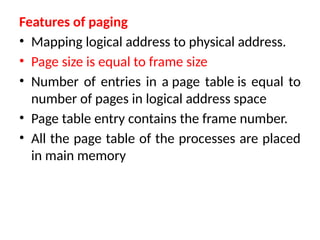

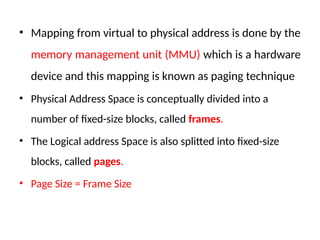

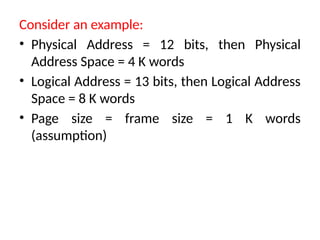

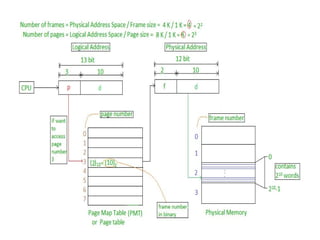

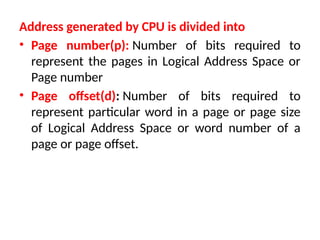

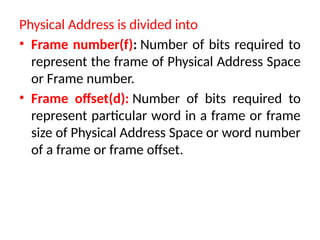

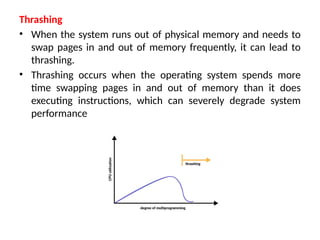

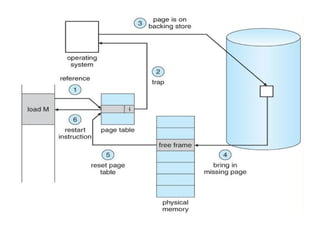

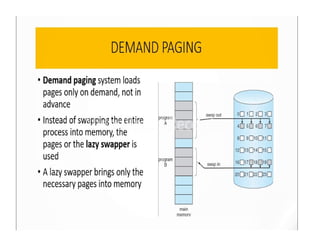

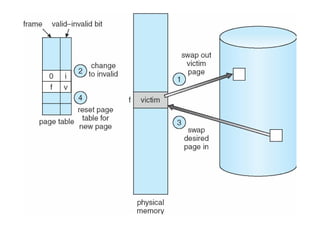

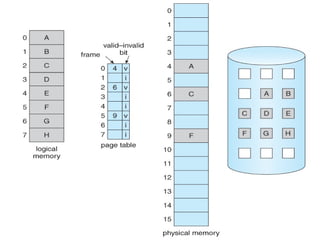

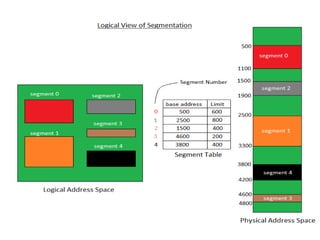

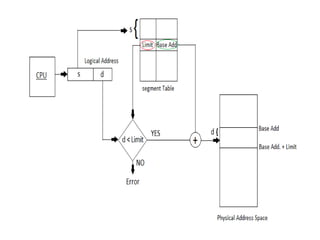

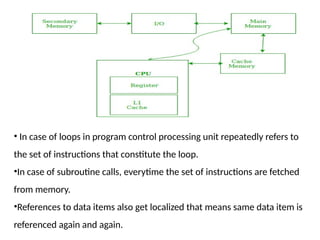

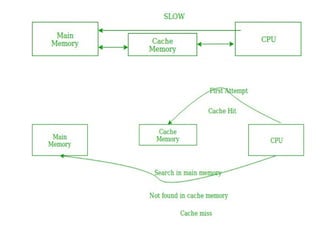

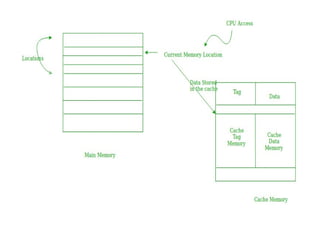

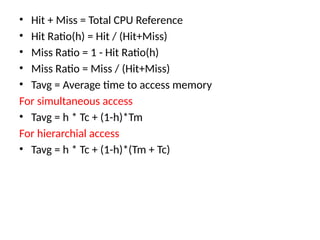

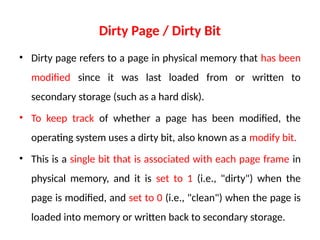

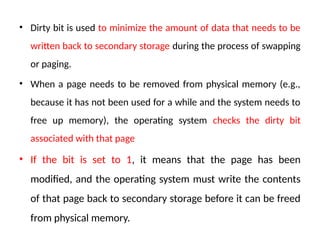

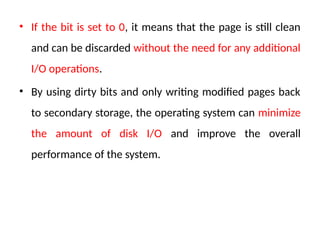

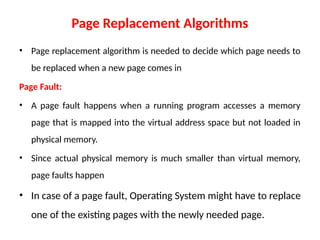

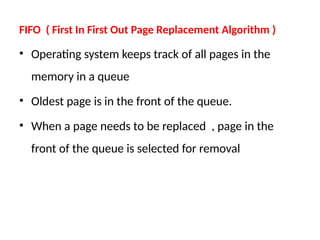

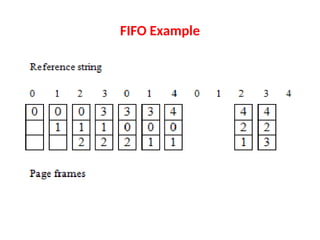

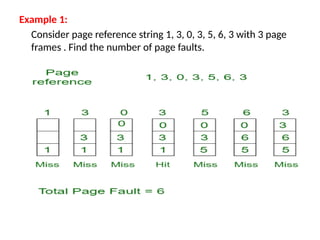

The document covers memory management concepts: main memory, logical and physical address spaces, and memory allocation techniques. It discusses static and dynamic loading, fragmentation, and strategies such as paging and virtual memory that make better use of memory. It also examines the first-fit, best-fit, and worst-fit allocation methods and the effect of locality of reference on cache operation.
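
The three allocation methods named above can be illustrated with a minimal sketch. It assumes free memory is modeled as a plain list of hole sizes; the function names (`first_fit`, `best_fit`, `worst_fit`, `allocate`) and the sample sizes are hypothetical and chosen only for illustration, not taken from the slides.

```python
from typing import Callable, List, Optional

# Assumption: free memory is a list of hole sizes (e.g. in KB).
# Each strategy returns the index of the chosen hole, or None if no hole fits.

def first_fit(holes: List[int], request: int) -> Optional[int]:
    """Pick the first hole that is large enough."""
    for i, size in enumerate(holes):
        if size >= request:
            return i
    return None

def best_fit(holes: List[int], request: int) -> Optional[int]:
    """Pick the smallest hole that is large enough (least leftover space)."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= request]
    return min(candidates)[1] if candidates else None

def worst_fit(holes: List[int], request: int) -> Optional[int]:
    """Pick the largest hole (most leftover space); ties go to the lowest index."""
    candidates = [(size, i) for i, size in enumerate(holes) if size >= request]
    if not candidates:
        return None
    return max(candidates, key=lambda c: (c[0], -c[1]))[1]

Strategy = Callable[[List[int], int], Optional[int]]

def allocate(holes: List[int], request: int, strategy: Strategy) -> Optional[int]:
    """Carve `request` units out of the hole chosen by `strategy` (mutates `holes`)."""
    i = strategy(holes, request)
    if i is not None:
        holes[i] -= request  # the remainder stays behind as a smaller hole
    return i

if __name__ == "__main__":
    holes = [100, 500, 200, 300, 600]  # hypothetical hole sizes
    for strategy in (first_fit, best_fit, worst_fit):
        print(strategy.__name__, strategy(holes, 212))
```

With these sample sizes and a request of 212, first fit selects the 500 hole, best fit the 300 hole, and worst fit the 600 hole. The leftover space after each allocation is what accumulates as external fragmentation.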