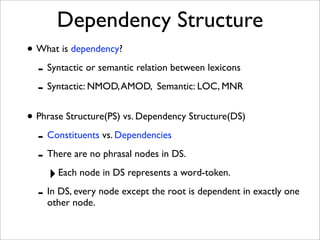

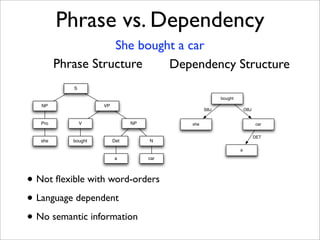

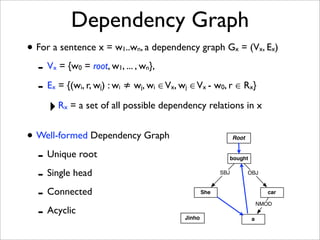

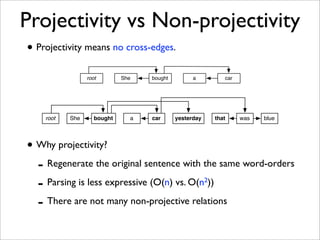

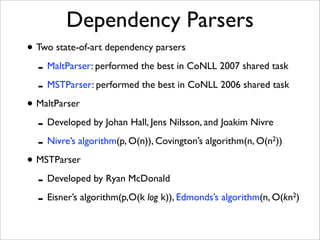

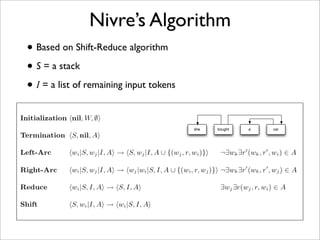

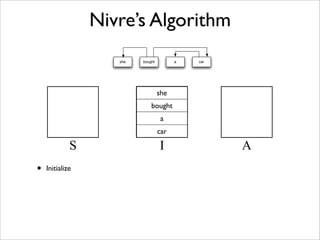

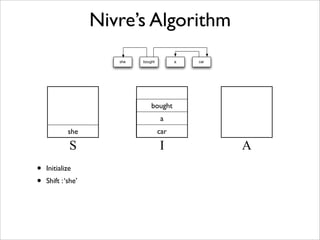

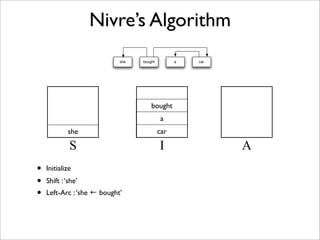

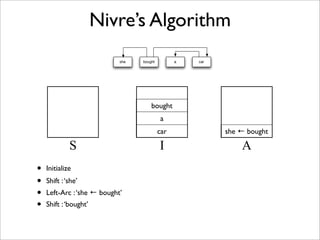

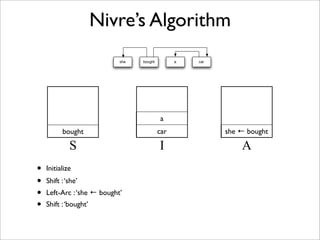

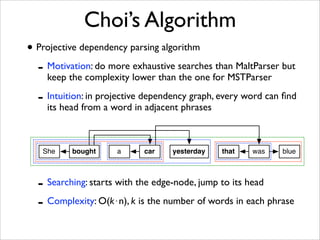

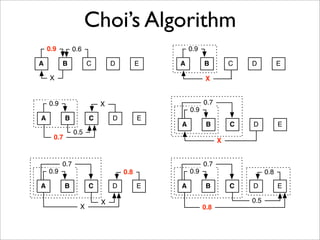

The document discusses dependency parsing, covering dependency structure, parsers such as MaltParser and MSTParser, and the algorithms behind them. It compares projective and non-projective parsing and outlines applications including semantic role labeling and sentiment analysis. Choi's algorithm is highlighted for balancing efficiency and complexity in projective dependency parsing.
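
The projective versus non-projective distinction can be made concrete with a small check for crossing arcs. The sketch below is only an illustration and is not taken from the slides or from any of the parsers named above. The function name `is_projective` and the head-list encoding (one head index per token, with `0` standing for an artificial root placed before the first word) are assumptions chosen for the example.

```python
from typing import List


def is_projective(heads: List[int]) -> bool:
    """Return True if the dependency tree has no crossing arcs.

    Assumed encoding (hypothetical, for illustration only):
    heads[i] is the head of token i + 1, tokens are numbered from 1,
    and 0 denotes an artificial root placed before the first token.
    """
    # Store each arc as a (left, right) span over token positions.
    arcs = [(min(h, d), max(h, d)) for d, h in enumerate(heads, start=1)]

    # Two arcs cross when exactly one endpoint of the second arc
    # lies strictly inside the span of the first.
    for l1, r1 in arcs:
        for l2, r2 in arcs:
            if l1 < l2 < r1 < r2:
                return False
    return True


# "Economic news had little effect": every arc nests cleanly.
projective_heads = [2, 3, 0, 5, 3]

# "A hearing is scheduled on the issue today": the arc from
# "hearing" to "on" crosses the arc from "scheduled" to "today".
non_projective_heads = [2, 3, 0, 3, 2, 7, 5, 4]

print(is_projective(projective_heads))
print(is_projective(non_projective_heads))
```

The pairwise comparison is quadratic in sentence length, which is fine for a demonstration. A parser restricted to projective trees never has to produce the second kind of structure, which is one reason projective algorithms can be simpler and faster.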