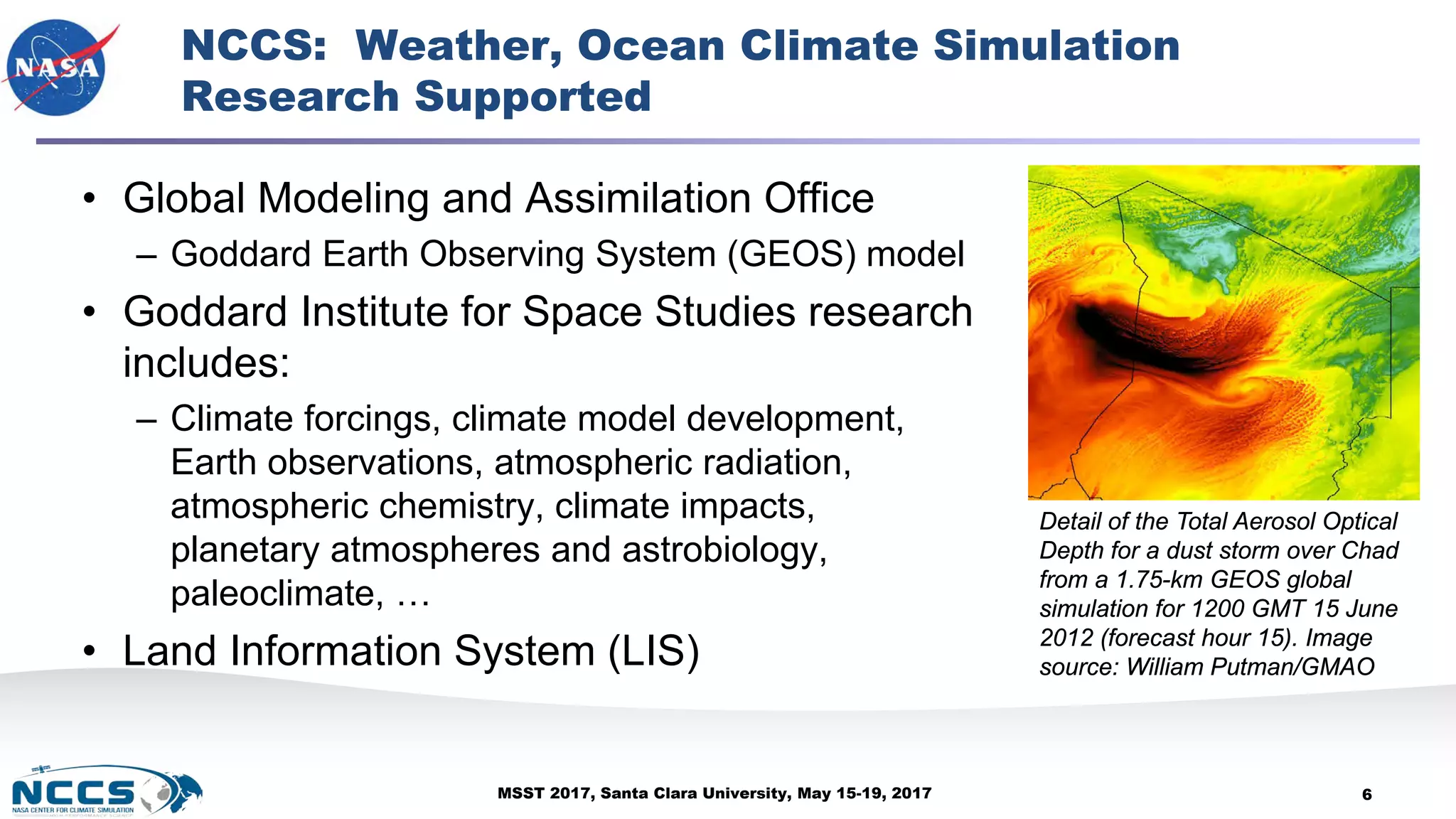

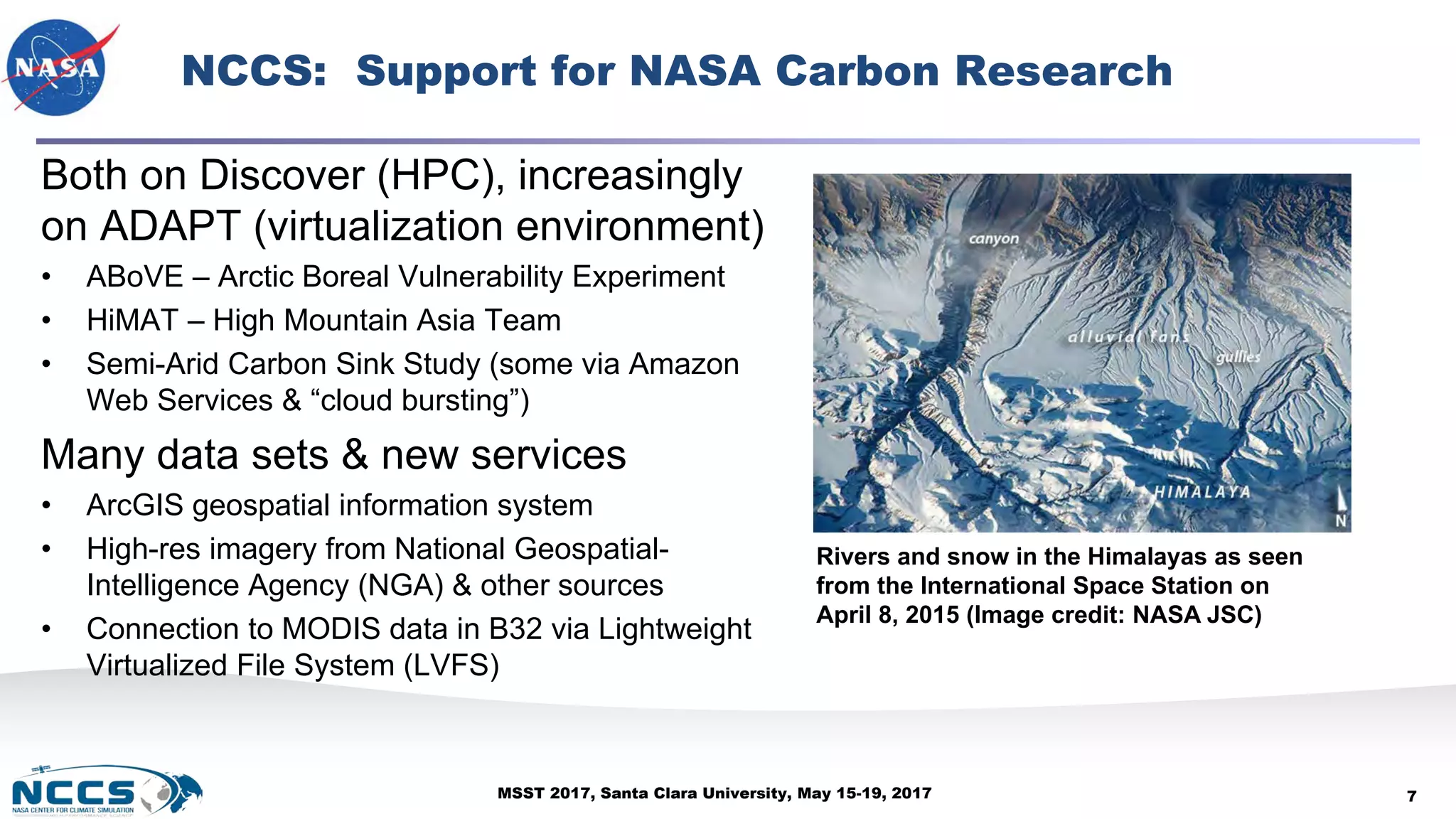

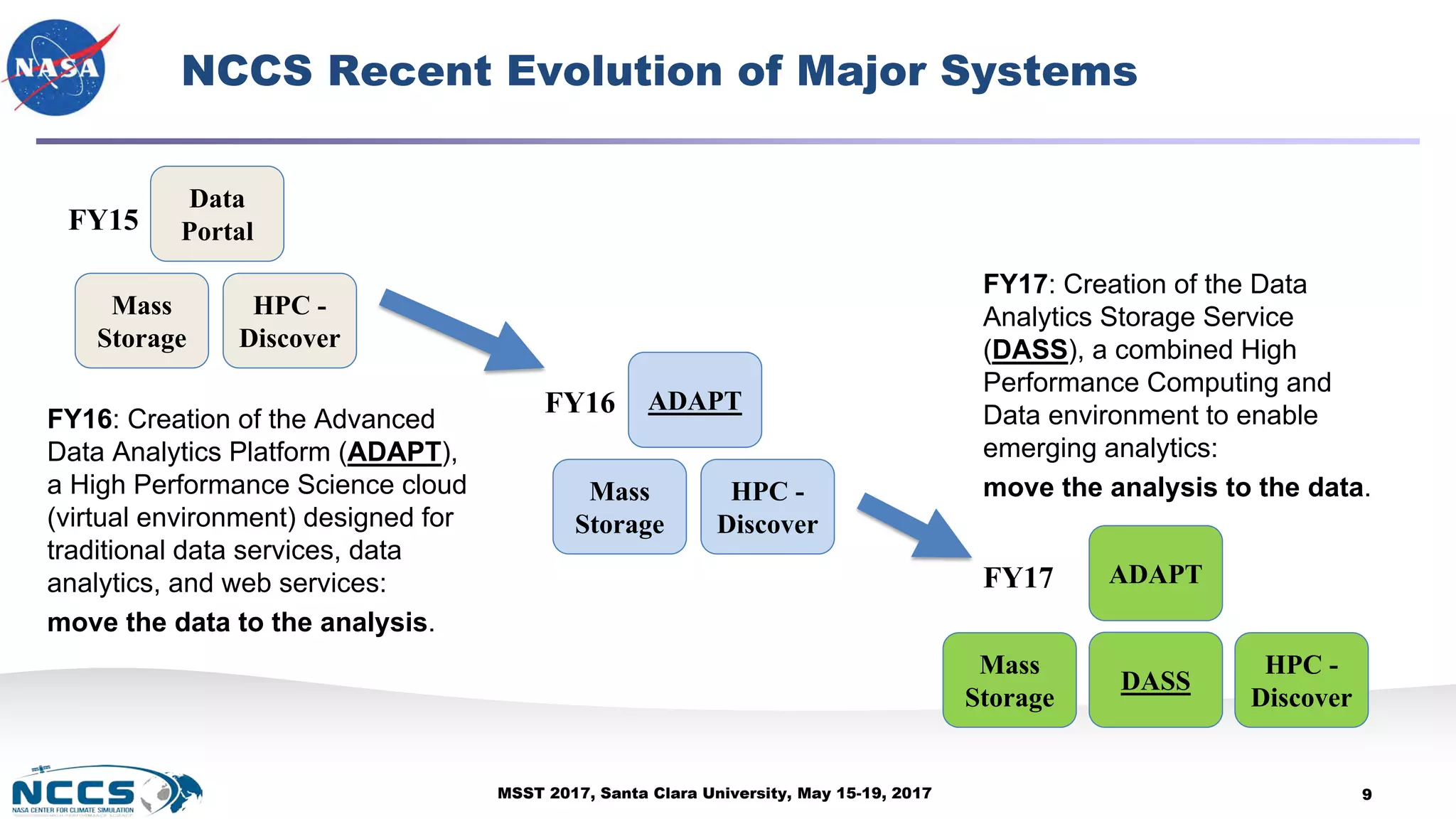

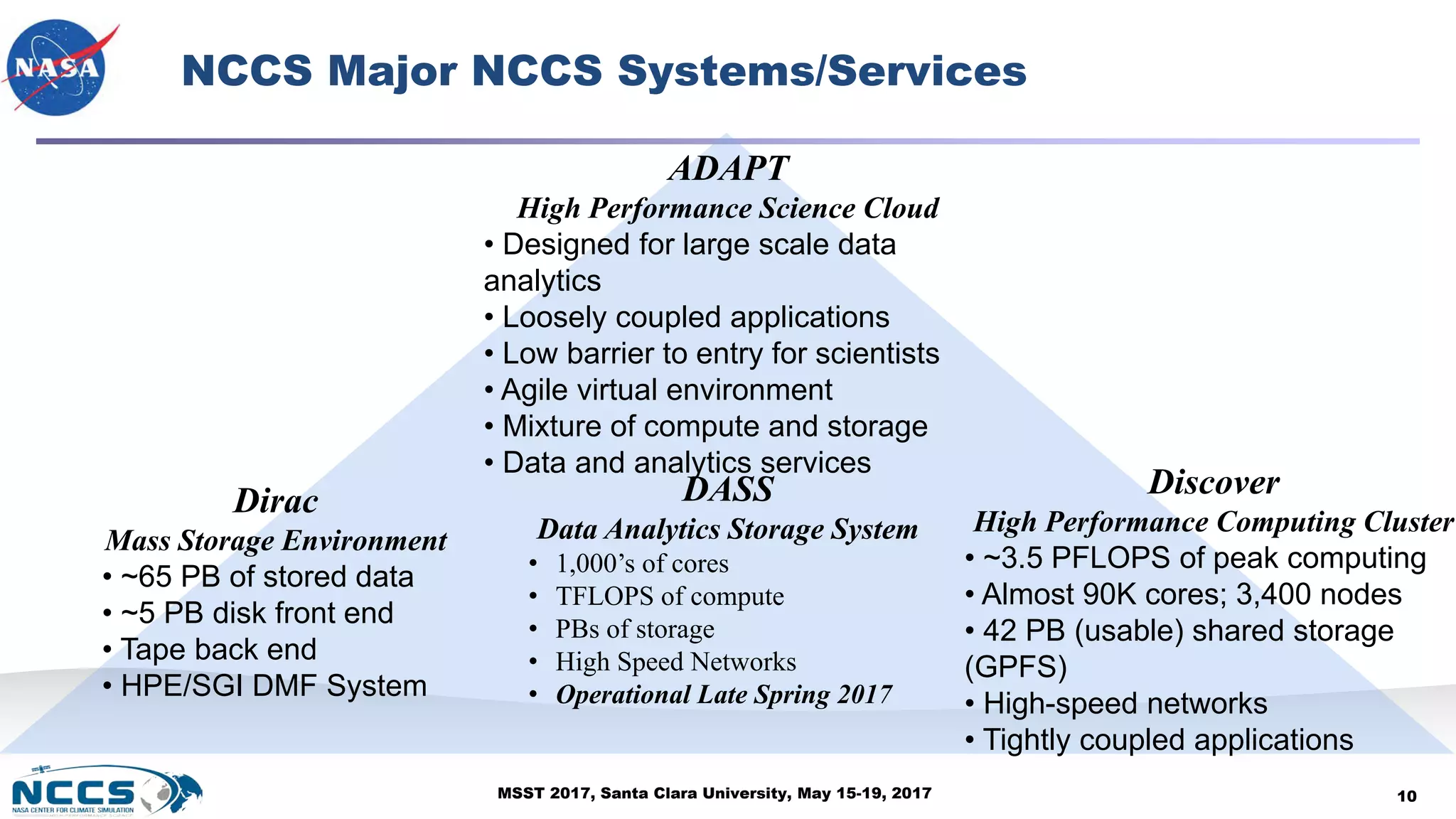

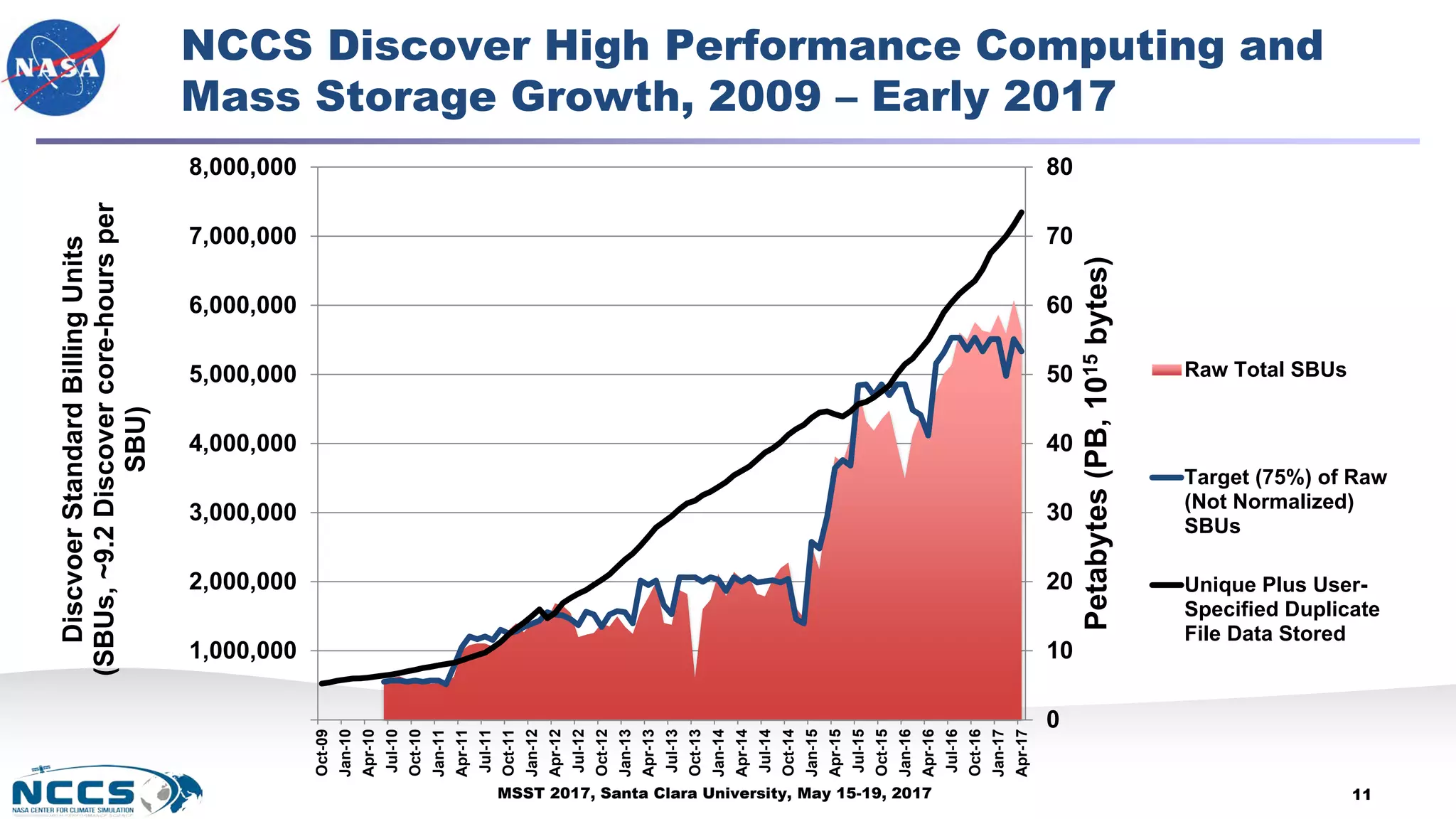

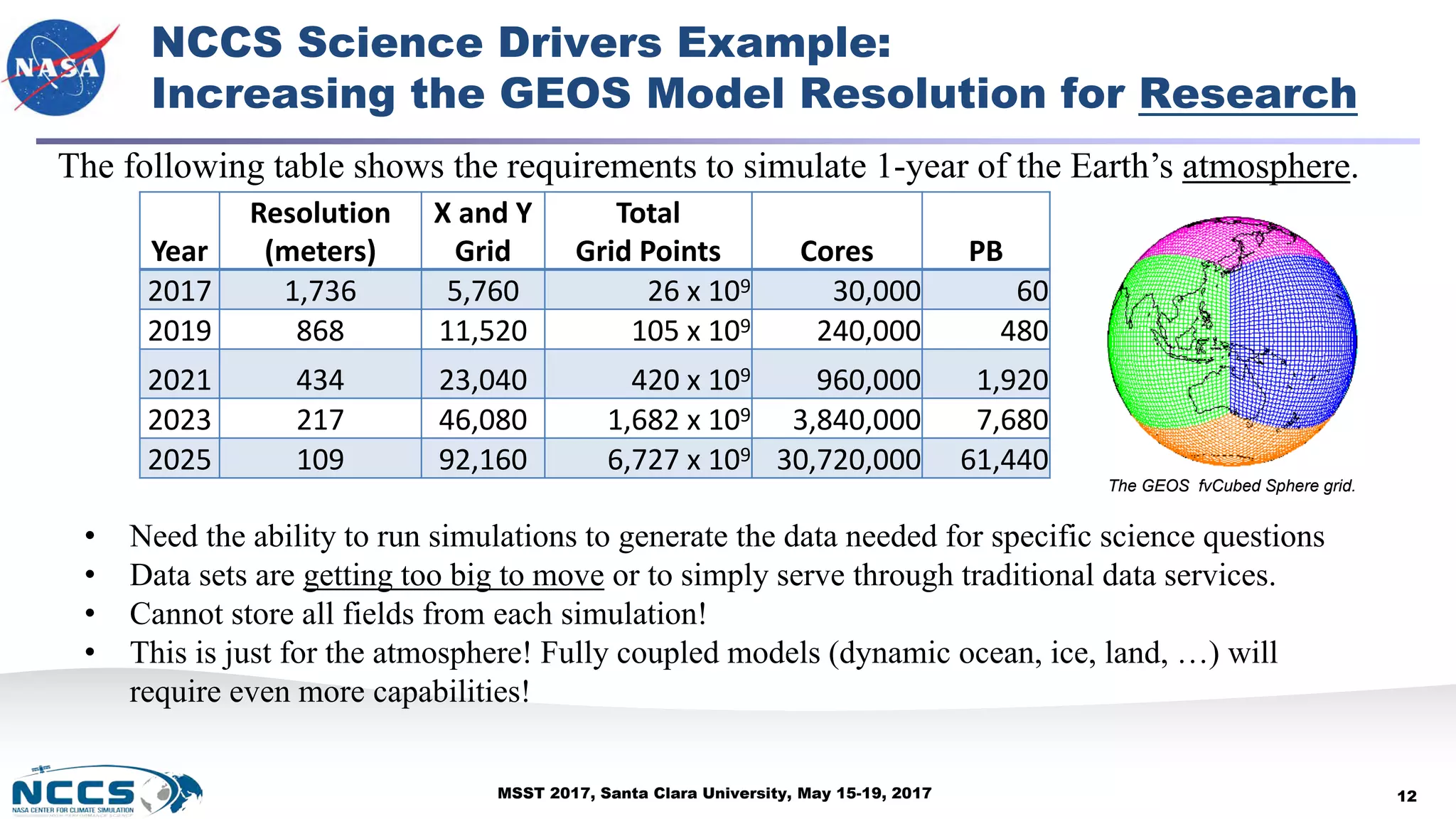

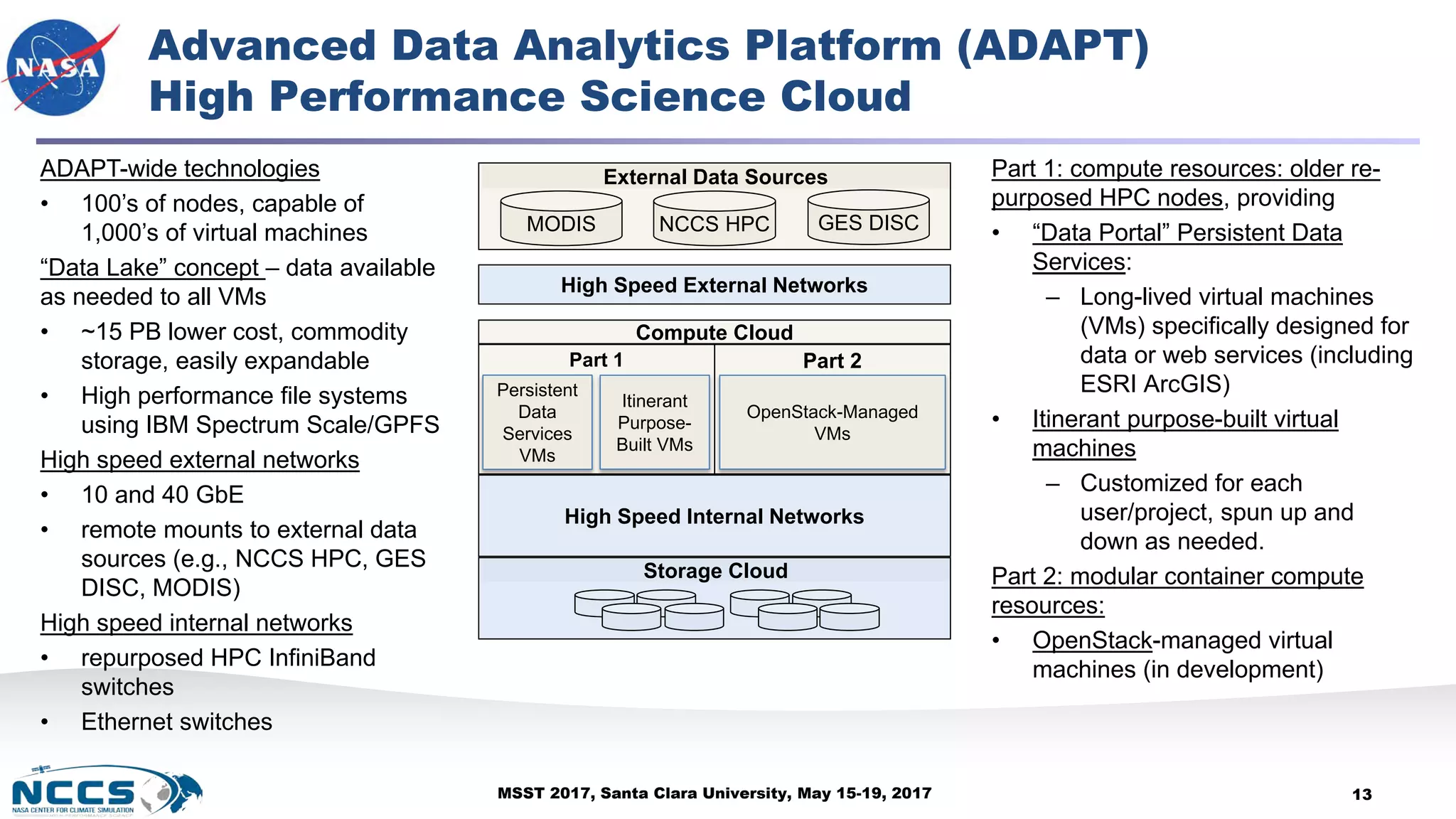

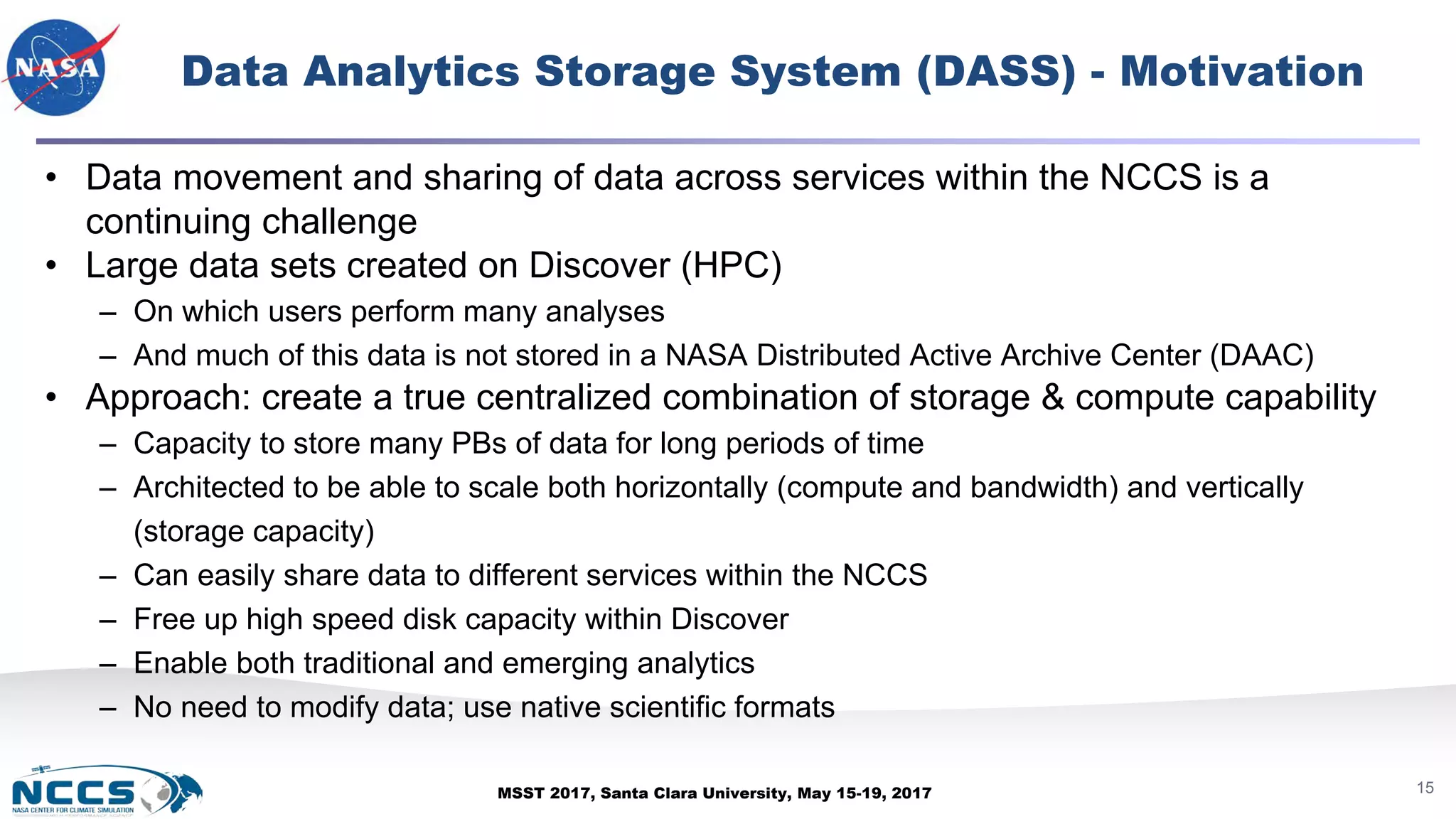

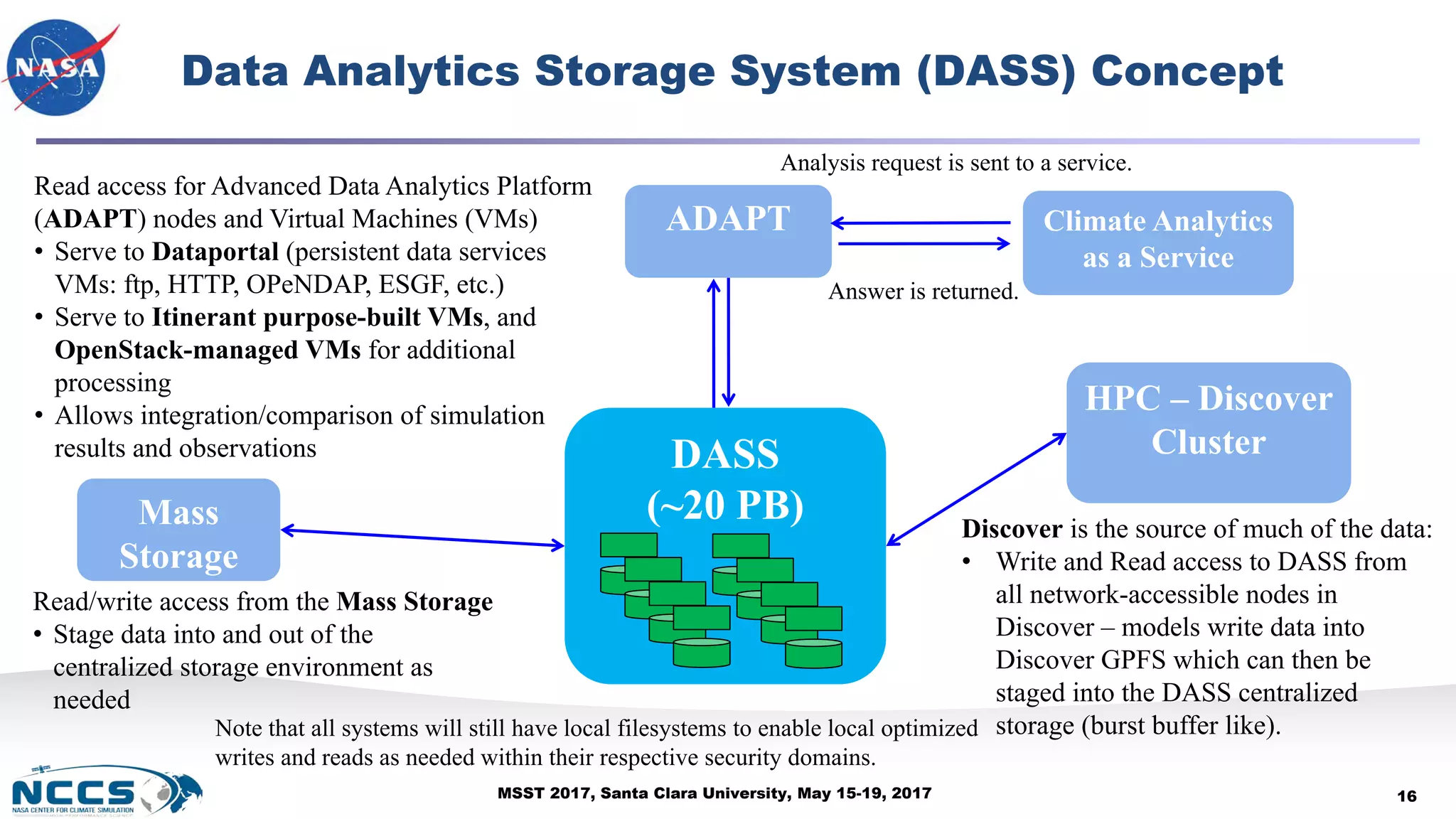

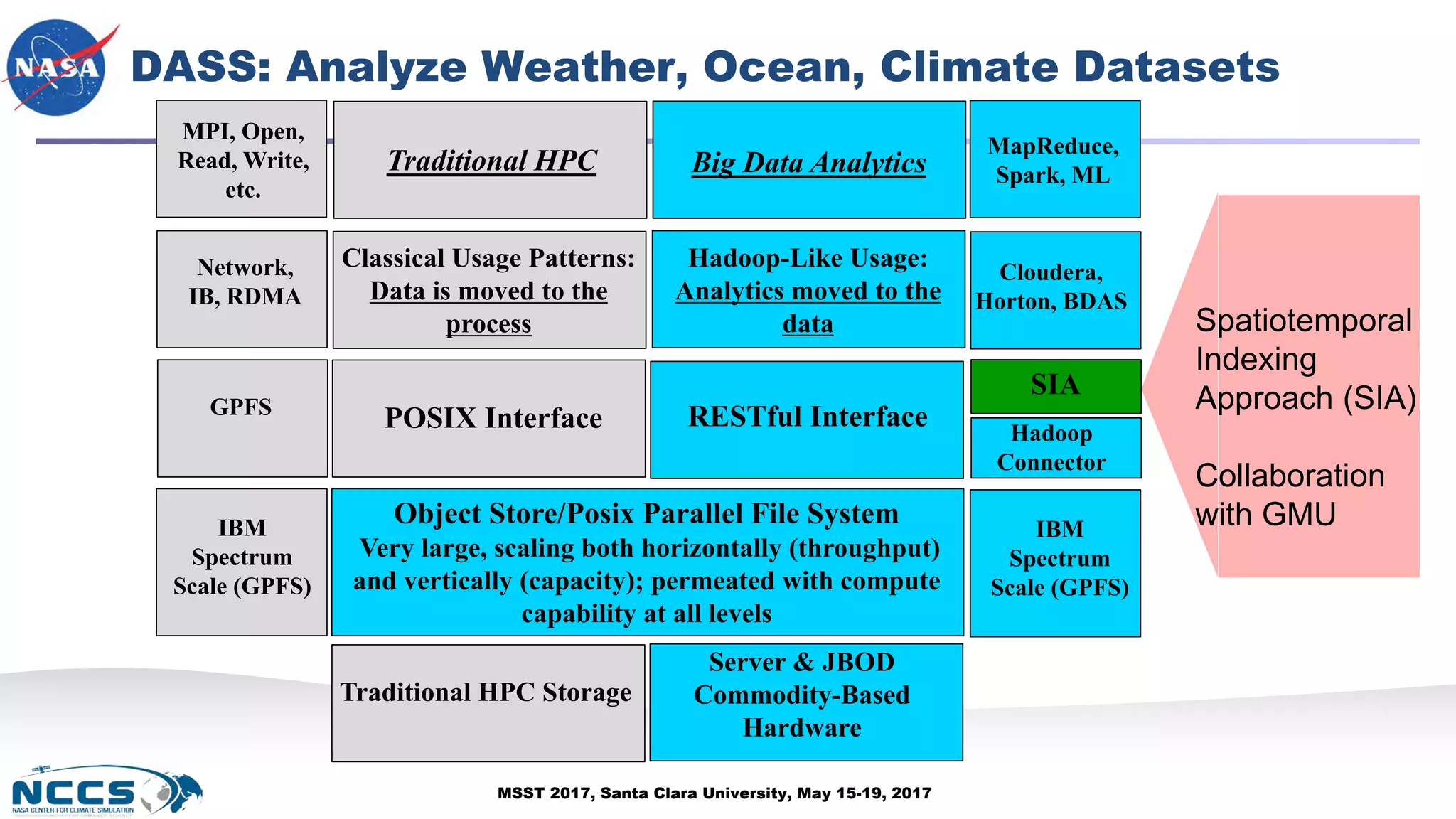

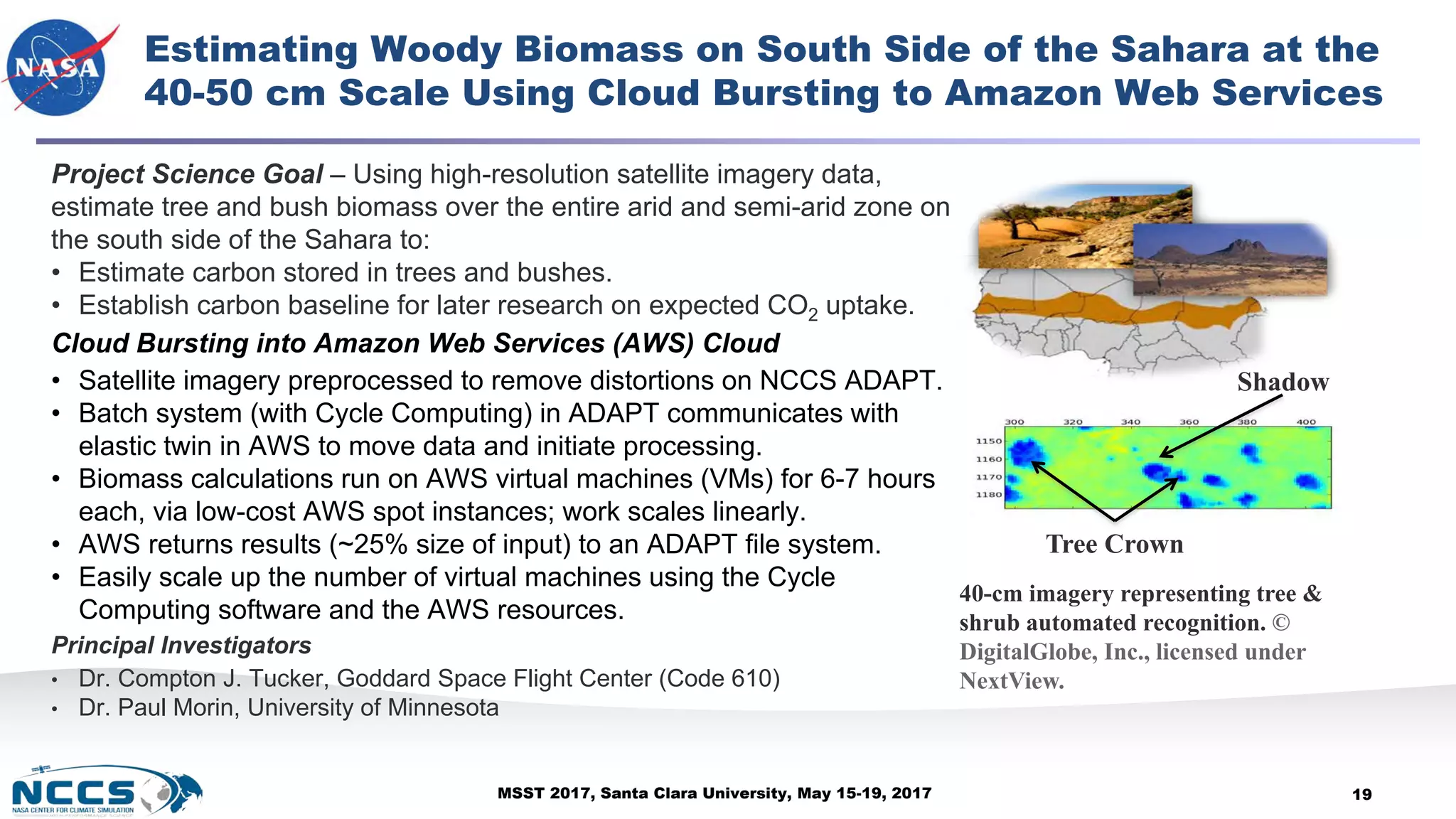

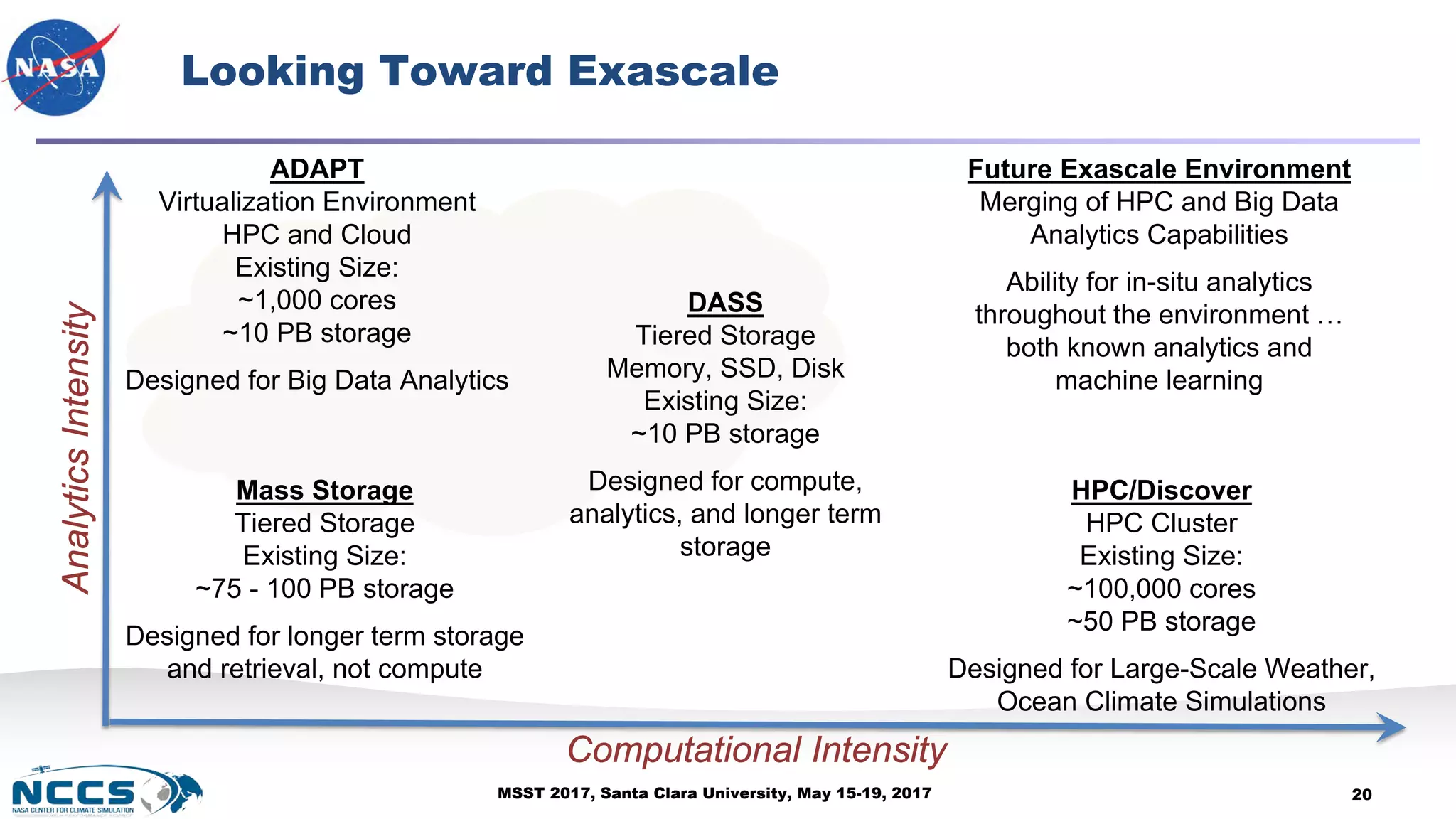

This presentation, given at MSST 2017, covers advances in storage and cyberinfrastructure at NASA's Center for Climate Simulation (NCCS), focusing on the development of high-performance computing, cloud computing, and data analytics services for climate modeling. Key features include the Advanced Data Analytics Platform (ADAPT) and the Data Analytics Storage Service (DASS), which improve data accessibility and support complex climate research. The presentation also outlines the NCCS's role in providing integrated computing environments that support various NASA projects and scientific initiatives.