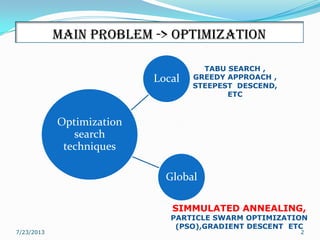

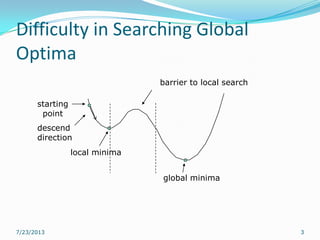

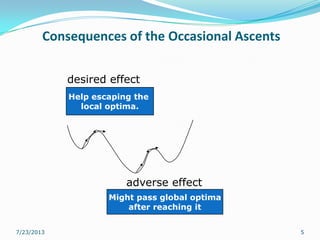

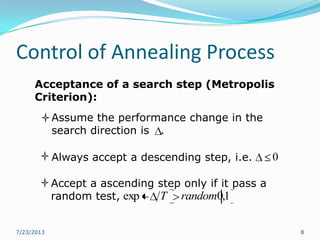

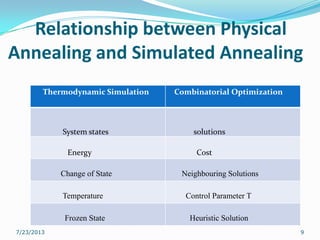

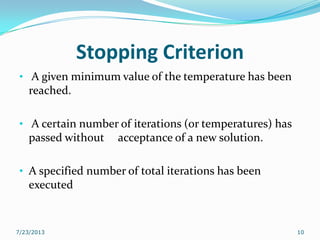

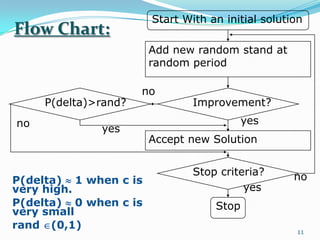

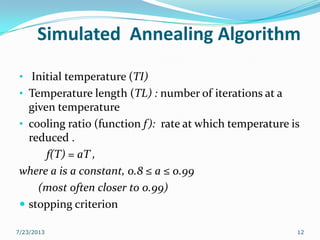

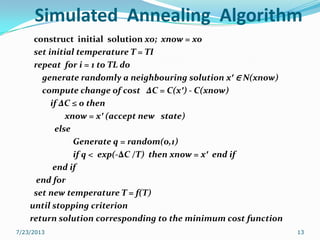

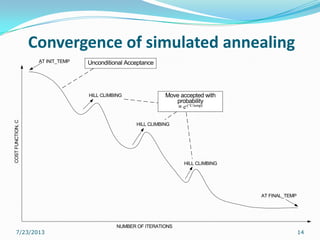

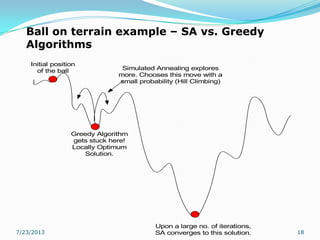

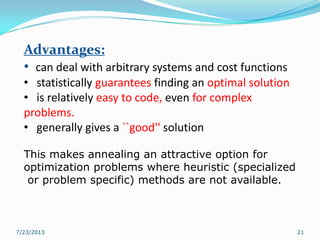

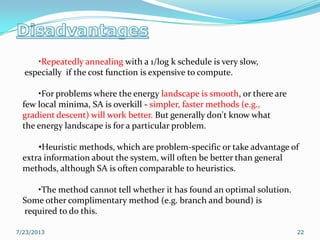

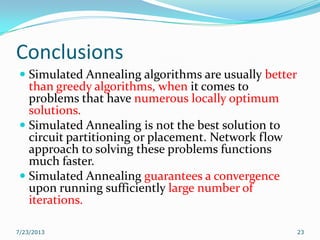

The document discusses simulated annealing (SA), a global optimization technique inspired by the physical process of annealing in solids. Its defining feature is the ability to escape local optima by occasionally accepting a worse solution. The document explains the mechanics of SA, its stopping criteria, and its advantages, and covers applications in fields such as circuit partitioning and image processing. It also compares SA's effectiveness against greedy algorithms, particularly on problems with numerous local optima.
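
To make the comparison with a greedy algorithm concrete, here is a minimal sketch rather than the document's own formulation. It assumes a Metropolis-style acceptance rule, a geometric cooling schedule, and a temperature floor as the stopping criterion. The test function (a 1-D Rastrigin function) and every name and parameter value (`objective`, `greedy_descent`, `simulated_annealing`, `step`, `t0`, `alpha`, `t_min`, `moves_per_temp`) are hypothetical choices made for illustration.

```python
import math
import random


def objective(x):
    # Hypothetical test function (1-D Rastrigin): many local minima,
    # global minimum f(0) = 0.
    return x * x + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))


def greedy_descent(f, x0, step, iters, rng):
    # Greedy baseline: accept a random neighbour only if it is strictly better.
    x, fx = x0, f(x0)
    for _ in range(iters):
        cand = x + rng.uniform(-step, step)
        fc = f(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx


def simulated_annealing(f, x0, step, t0, alpha, t_min, moves_per_temp, rng):
    # Assumed scheme: Metropolis acceptance with geometric cooling.
    x, fx = x0, f(x0)
    best_x, best_fx = x, fx
    t = t0
    while t > t_min:  # assumed stopping criterion: temperature floor
        for _ in range(moves_per_temp):
            cand = x + rng.uniform(-step, step)
            fc = f(cand)
            delta = fc - fx
            # Always accept improvements; accept a worse move with
            # probability exp(-delta / t), which shrinks as t falls.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                x, fx = cand, fc
                if fx < best_fx:
                    best_x, best_fx = x, fx
        t *= alpha  # geometric cooling
    return best_x, best_fx


if __name__ == "__main__":
    start = 4.0
    gx, gfx = greedy_descent(objective, start, 0.5, 5000, random.Random(0))
    sx, sfx = simulated_annealing(
        objective, start, 0.5, 10.0, 0.95, 1e-3, 100, random.Random(0)
    )
    print(f"greedy:    x = {gx:.4f}, f(x) = {gfx:.4f}")
    print(f"annealing: x = {sx:.4f}, f(x) = {sfx:.4f}")
```

With these settings the greedy search cannot leave the basin around `x = 4`, because every point within one step of that basin is worse than where it already stands. The annealing run can cross such barriers while the temperature is high, and it then behaves more and more like the greedy search as the temperature falls.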