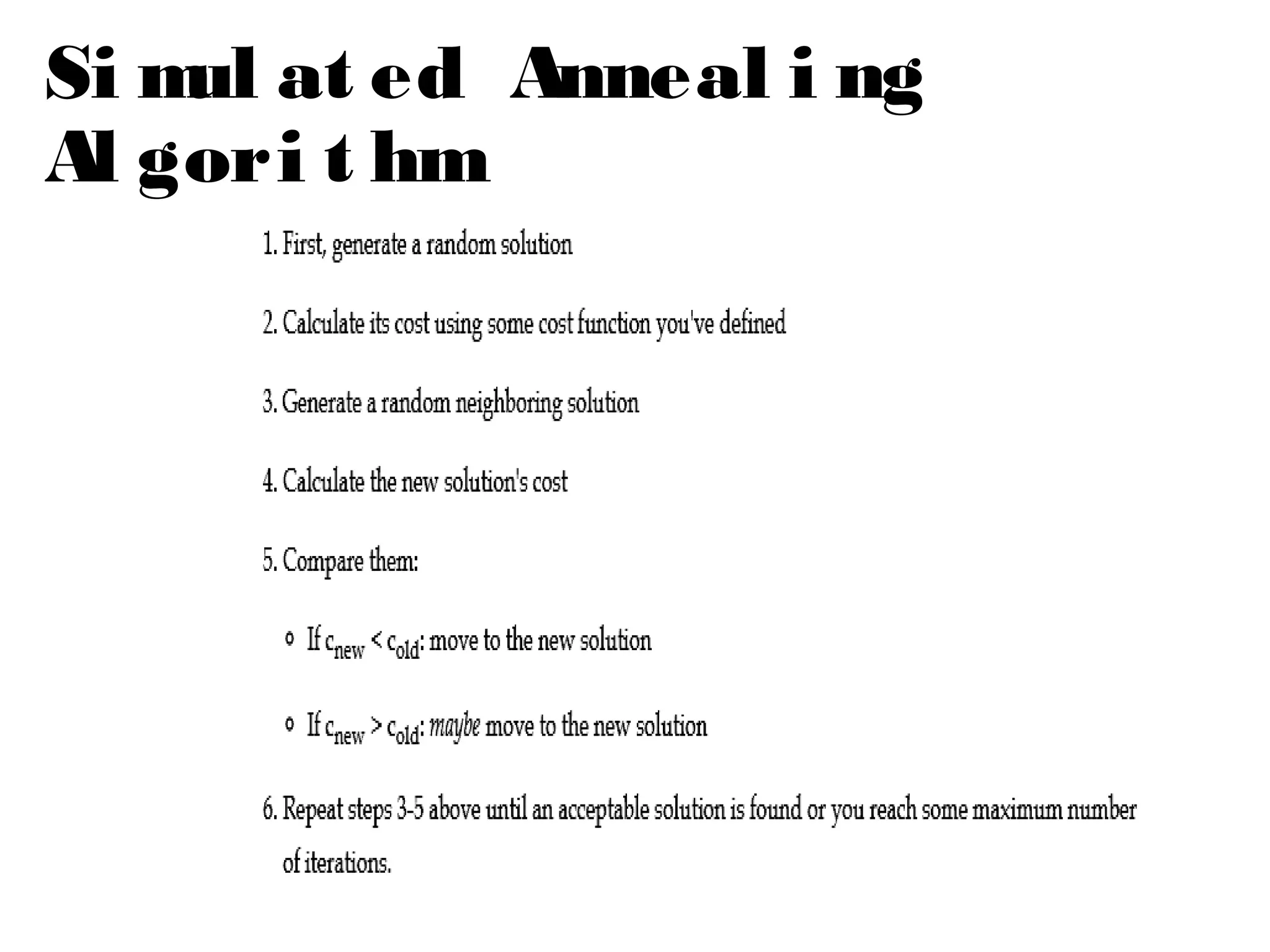

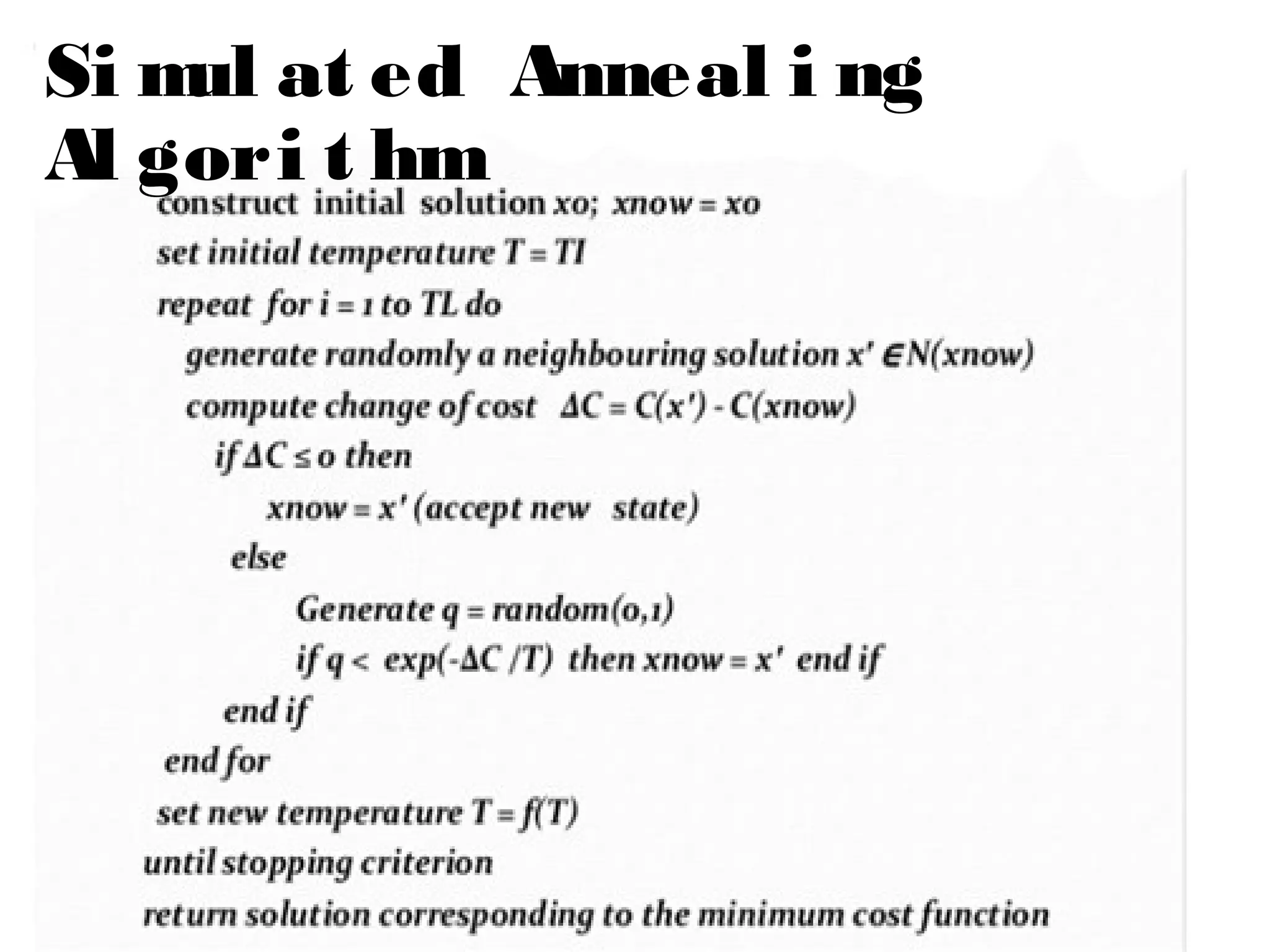

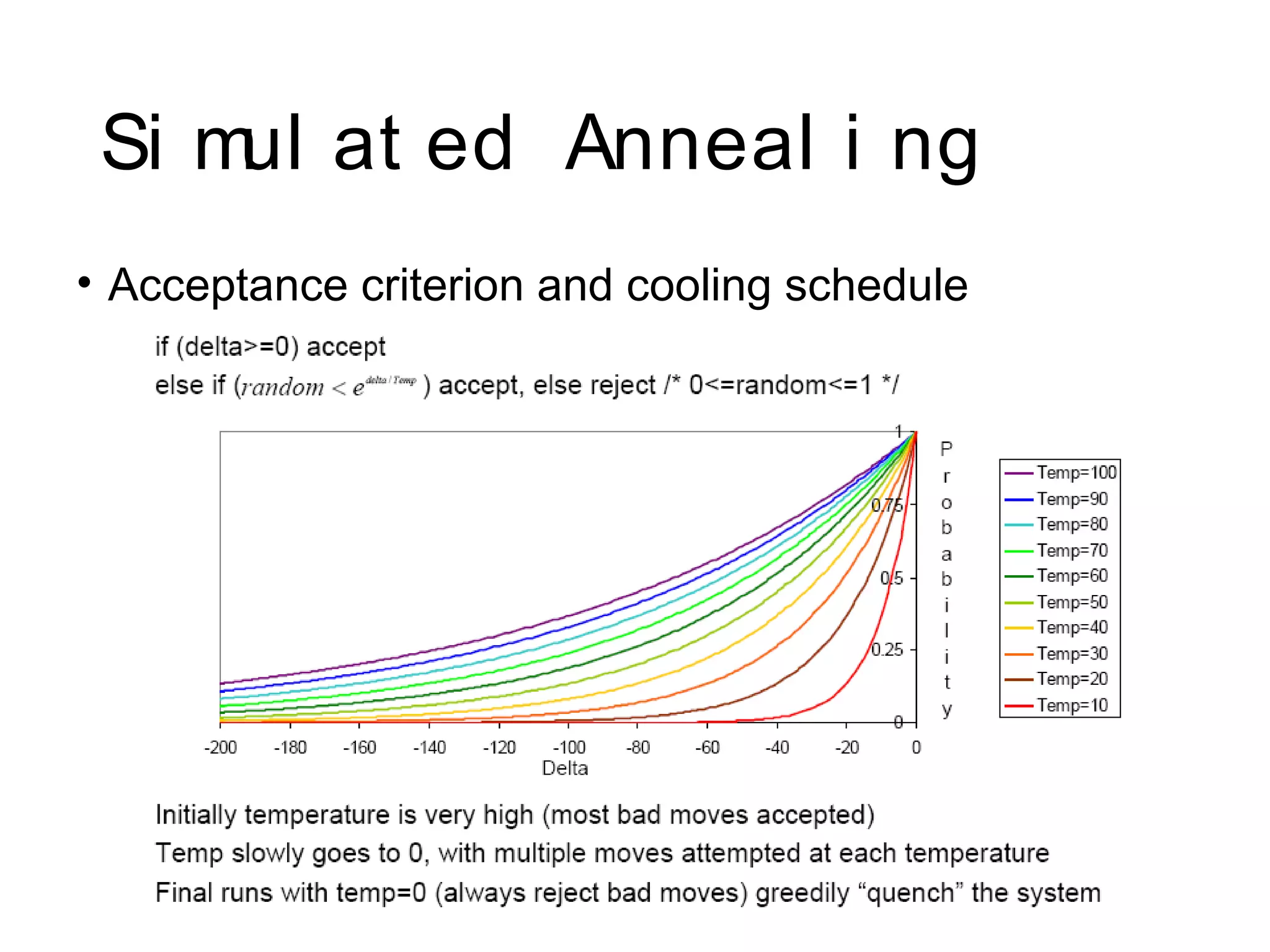

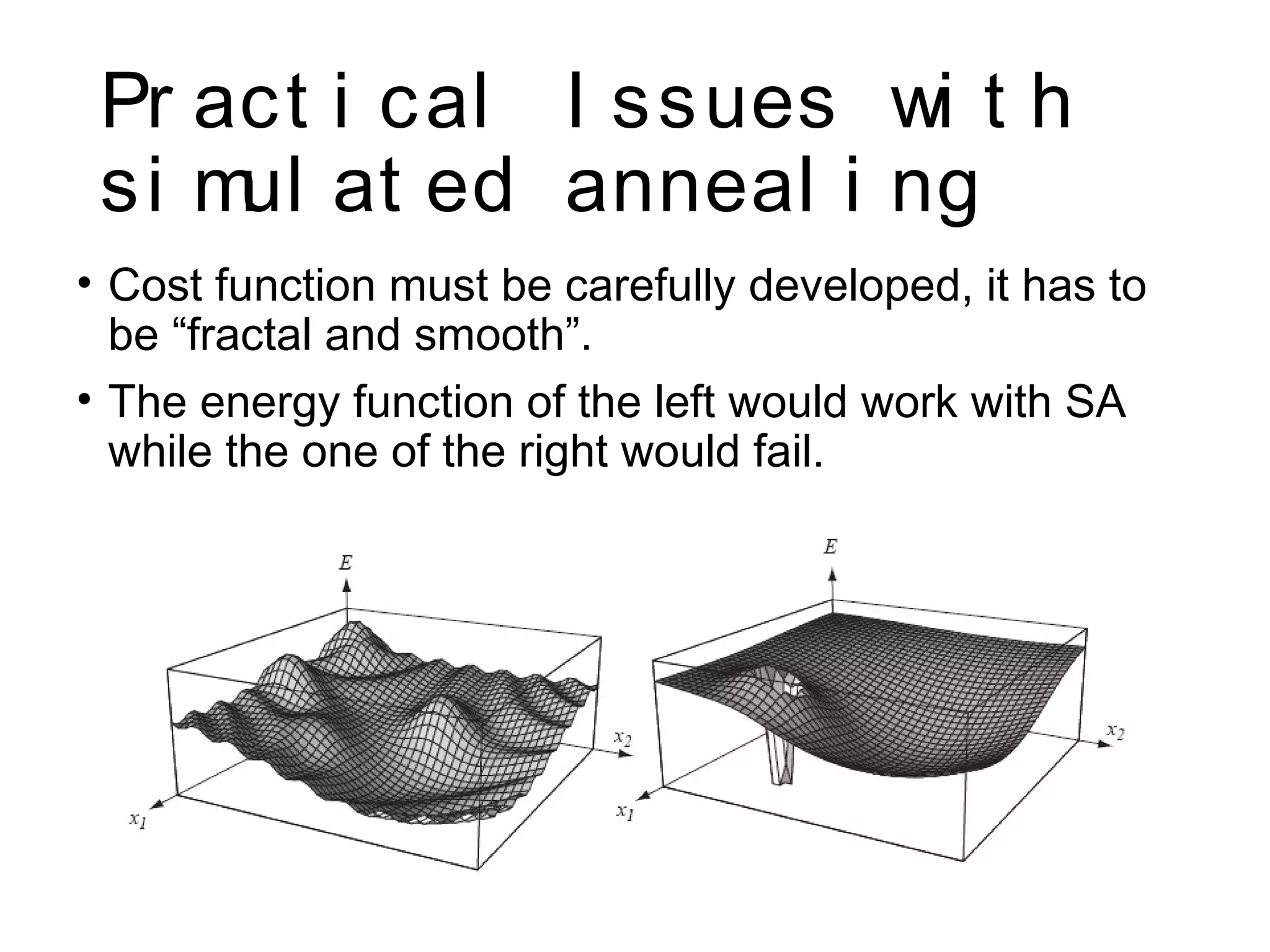

Simulated annealing is a probabilistic local search algorithm inspired by annealing in metallurgy, where a material is heated and then cooled slowly so that it settles into a low-energy state. It is used to find good, often near-optimal, solutions to hard optimization problems. The algorithm starts from a random solution and repeatedly explores neighboring solutions: improvements are always accepted, while worse solutions are accepted with a probability that depends on the current temperature. The temperature is decreased slowly, so worse solutions are accepted less and less often; this lets the search escape local optima early on and settle toward a global optimum later, although reaching the global optimum is not guaranteed in practice. Practical issues include designing an effective cost function and choosing a cooling schedule that is slow enough for the search to converge. Simulated annealing is applied to optimization problems such as the traveling salesman problem.
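The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the presenters' implementation: the Metropolis acceptance rule `exp(-delta / T)`, the geometric cooling schedule `T <- alpha * T`, and all names and default values (`simulated_annealing`, `t_start`, `t_end`, `alpha`, `steps_per_temp`, and the toy cost function) are assumptions chosen for the example.

```python
import math
import random


def simulated_annealing(cost, neighbor, initial, t_start=10.0, t_end=1e-3,
                        alpha=0.95, steps_per_temp=50, rng=None):
    """Minimize `cost`, starting from `initial`.

    Assumed for this sketch: Metropolis acceptance and geometric cooling.
    """
    rng = rng or random.Random()
    current, current_cost = initial, cost(initial)
    best, best_cost = current, current_cost
    t = t_start
    while t > t_end:
        for _ in range(steps_per_temp):
            candidate = neighbor(current, rng)
            candidate_cost = cost(candidate)
            delta = candidate_cost - current_cost
            # Always accept improvements; accept a worse candidate with
            # probability exp(-delta / t), which shrinks as t decreases.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = current, current_cost
        t *= alpha  # geometric cooling step (assumed schedule)
    return best, best_cost


def toy_cost(x):
    # Hypothetical 1-D cost function with several local minima.
    return x * x + 10.0 * math.sin(x)


def toy_neighbor(x, rng):
    # Hypothetical neighborhood: a small random step left or right.
    return x + rng.uniform(-1.0, 1.0)


if __name__ == "__main__":
    x, fx = simulated_annealing(toy_cost, toy_neighbor, initial=8.0,
                                rng=random.Random(0))
    print(f"best x = {x:.3f}, cost = {fx:.3f}")
```

The function returns the best solution seen rather than the final one, since the final state may be a slightly worse solution that was accepted late in the run. A slower schedule (`alpha` closer to 1, or more `steps_per_temp`) trades running time for a better chance of leaving local minima.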

![Presented by: Amarendra Kumar Saroj (Roll No: 182901), Ram Kumar Basnet (Roll No: 182921), ME Computer Engineering [III Semester]. Presentation on: Simulated Annealing (AI)](https://image.slidesharecdn.com/simulatedannealingpresentation2-190620022430/75/Simulated-annealing-presentation-1-2048.jpg)