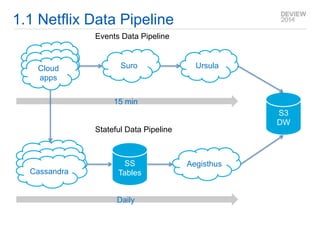

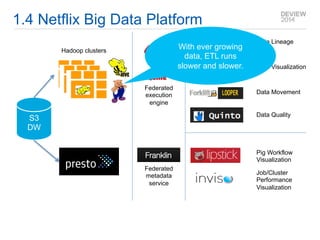

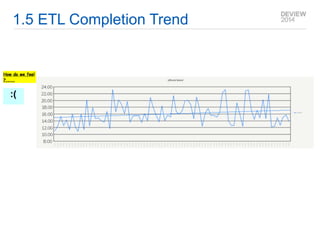

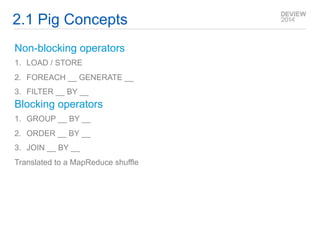

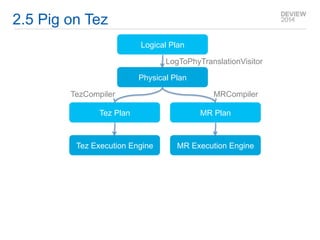

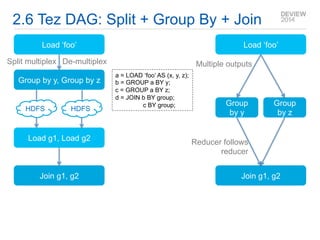

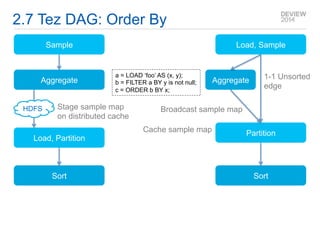

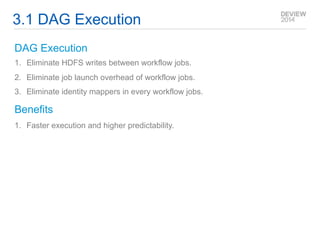

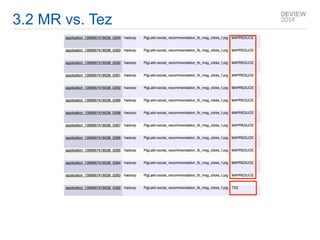

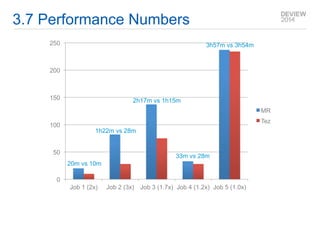

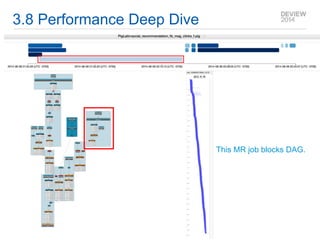

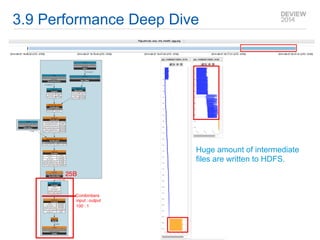

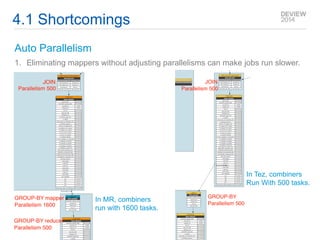

This document discusses the development of Pig on Tez, which runs Apache Pig jobs on the Apache Tez execution engine. Pig on Tez executes Pig workflows as directed acyclic graphs (DAGs) on Tez rather than as chains of MapReduce jobs, which improves performance over the default MapReduce execution. Key benefits of Tez include eliminating intermediate data writes between jobs, reducing job launch overhead, and allowing more flexible data flows. Challenges remain around automatically determining optimal parallelism and integrating Tez with user interface and monitoring tools, and future work is needed to address them.
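As a rough illustration of where the savings come from, the sketch below compares job launches and intermediate writes for the same plan under the two engines. It is a toy model, not Pig or Tez code: the `Plan` class and both cost functions are hypothetical, and it assumes that each shuffle boundary (for example a GROUP or JOIN) maps to one MapReduce job and that the whole plan compiles to a single Tez DAG.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """Hypothetical stand-in for a linear Pig plan.

    shuffle_boundaries: number of operators that force a shuffle
    (assumption: each one becomes its own MapReduce job).
    """
    shuffle_boundaries: int


def mapreduce_cost(plan: Plan) -> dict:
    # Assumption: one MapReduce job per shuffle boundary, at least one job overall.
    jobs = max(1, plan.shuffle_boundaries)
    # Each job except the last writes its output to storage for the next job to read.
    return {"jobs_launched": jobs, "intermediate_writes": jobs - 1}


def tez_cost(plan: Plan) -> dict:
    # Assumption: the whole plan runs as one DAG, with data passed
    # between vertices instead of being written out between jobs.
    return {"jobs_launched": 1, "intermediate_writes": 0}


if __name__ == "__main__":
    plan = Plan(shuffle_boundaries=3)
    print("MapReduce:", mapreduce_cost(plan))
    print("Tez:      ", tez_cost(plan))
```

Under these assumptions, the gap between the two engines grows with the number of shuffle boundaries, which is why longer multi-stage scripts tend to benefit most. Real plans are not always linear, and some operators need more than one MapReduce job, so the numbers are indicative only.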