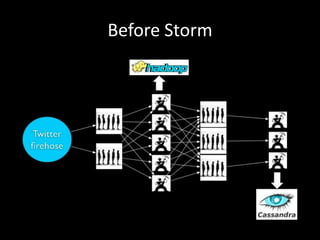

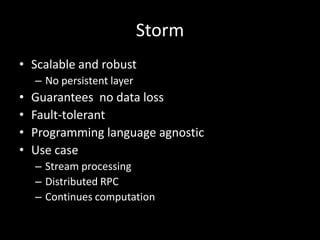

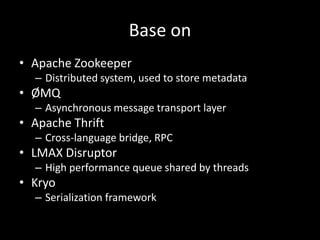

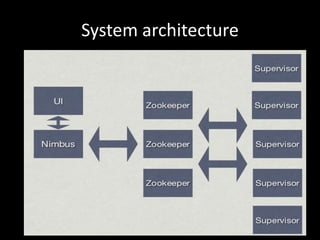

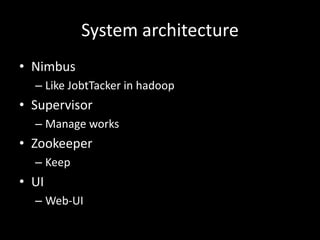

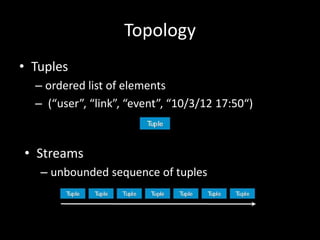

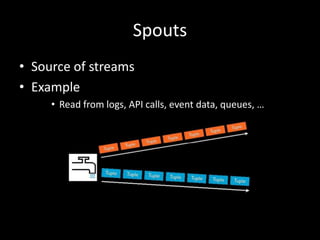

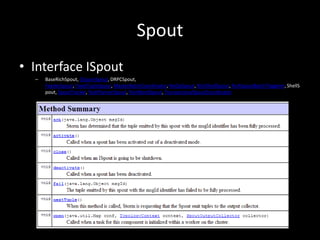

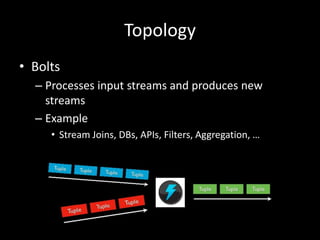

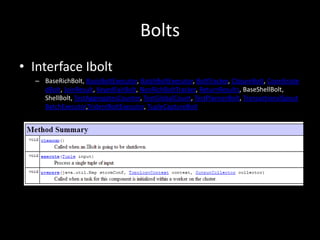

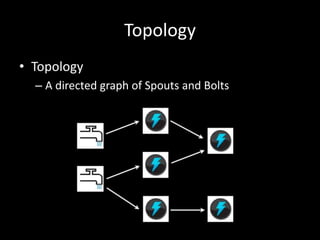

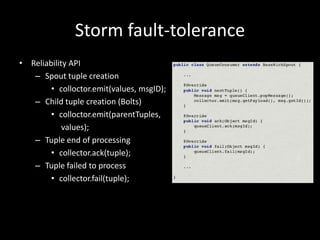

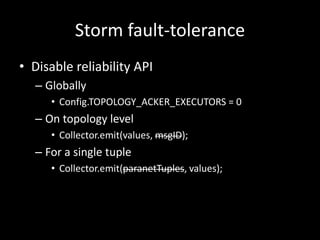

Storm is a distributed, fault-tolerant realtime computation system. It was created at BackType/Twitter to analyze tweets, links, and users on Twitter in realtime. Storm aims for scalability, reliability, and ease of programming, and builds on components such as ZooKeeper, ØMQ, and Thrift. A Storm topology defines how data flows between spouts, which read data from a source, and bolts, which process it. Through its reliability APIs, Storm guarantees that every piece of data is processed, with no data loss even when failures occur.
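To make the spout and bolt idea concrete, here is a minimal sketch in plain Python. It does not use Storm's API and runs in a single process. The names `sentence_spout`, `split_bolt`, `count_bolt`, and `run_topology` are hypothetical, chosen only to illustrate how a topology wires a data source to processing steps.

```python
from collections import Counter
from typing import Iterable, Iterator


def sentence_spout() -> Iterator[str]:
    """Hypothetical spout: emits a fixed stream of sentences (a stand-in for tweets)."""
    yield "storm is fast"
    yield "storm is fault tolerant"


def split_bolt(sentences: Iterable[str]) -> Iterator[str]:
    """Hypothetical bolt: splits each incoming sentence into words."""
    for sentence in sentences:
        for word in sentence.split():
            yield word


def count_bolt(words: Iterable[str]) -> Counter:
    """Hypothetical bolt: keeps a count per word and returns the totals."""
    counts: Counter = Counter()
    for word in words:
        counts[word] += 1
    return counts


def run_topology() -> Counter:
    """Wire spout -> split bolt -> count bolt, mimicking a topology's data flow."""
    return count_bolt(split_bolt(sentence_spout()))


if __name__ == "__main__":
    print(run_topology())
```

In a real Storm cluster each of these components would run as many parallel tasks across machines, with tuples routed between them over the network. This sketch shows only the shape of the data flow, not distribution or the reliability guarantees.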

![Storm: Distributed and fault-tolerant realtime computation system. Chandler@PyHug, previa [at] gmail.com](https://image.slidesharecdn.com/storm-130121040308-phpapp01/75/Introduction-to-Storm-1-2048.jpg)