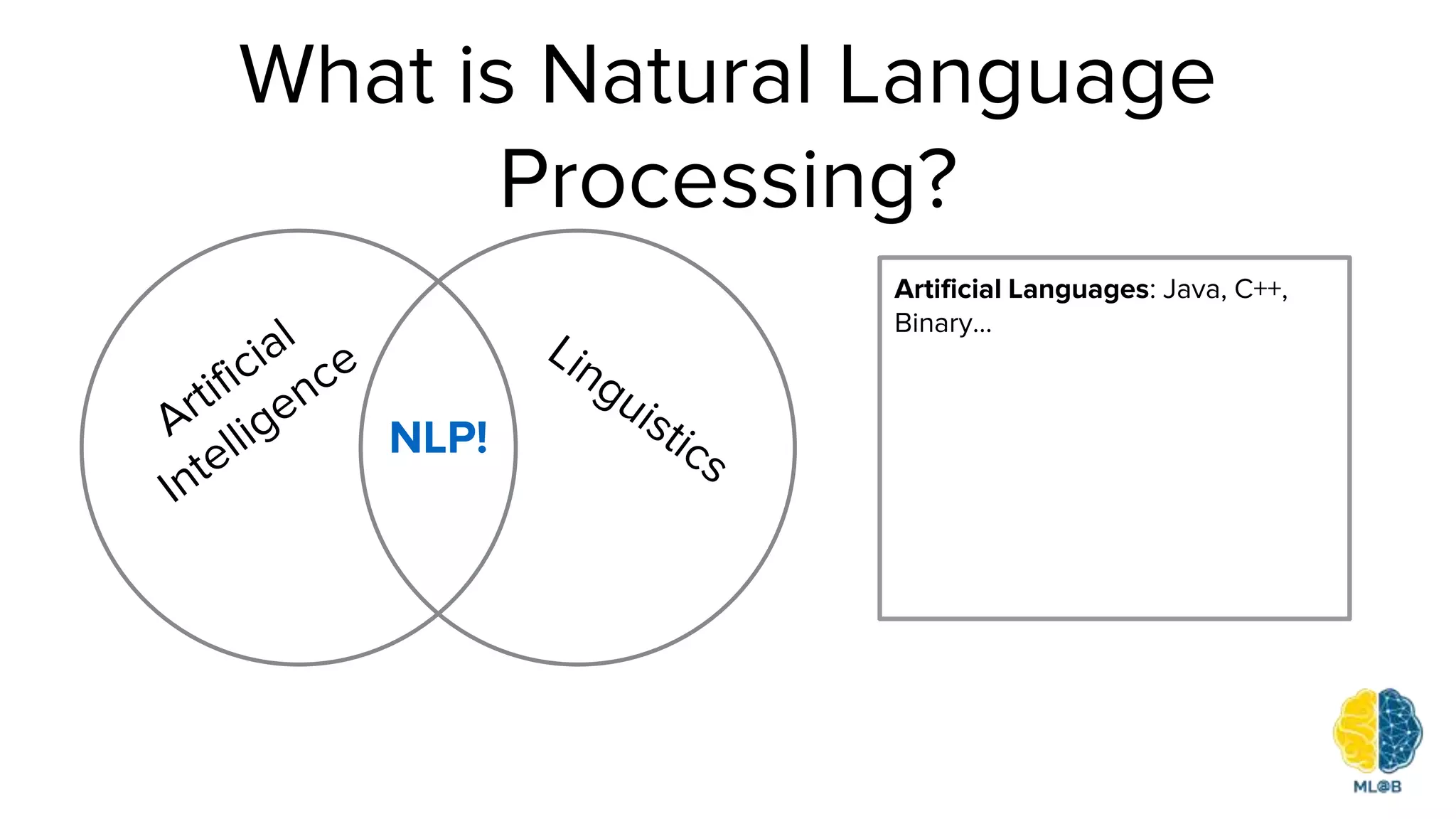

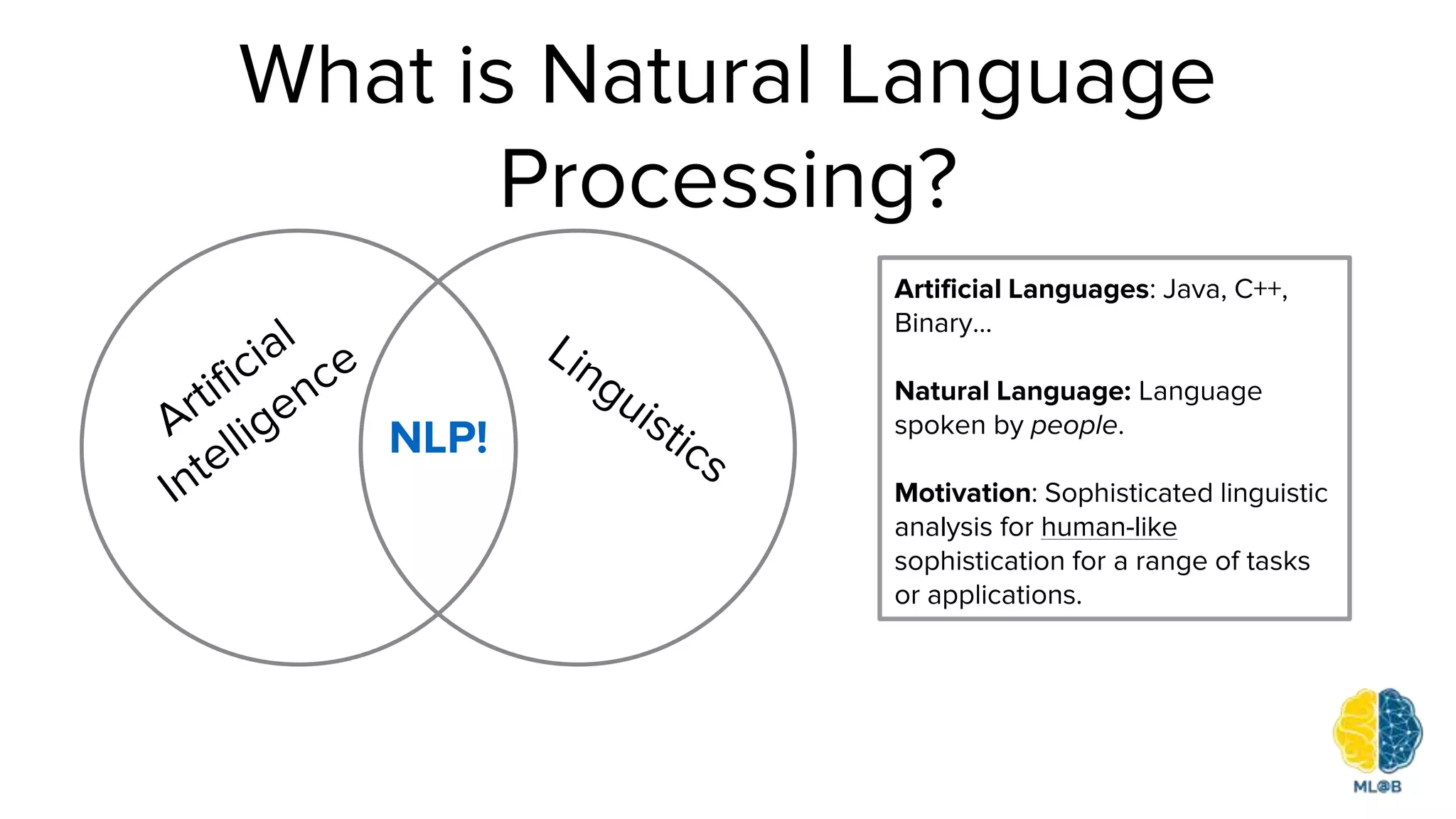

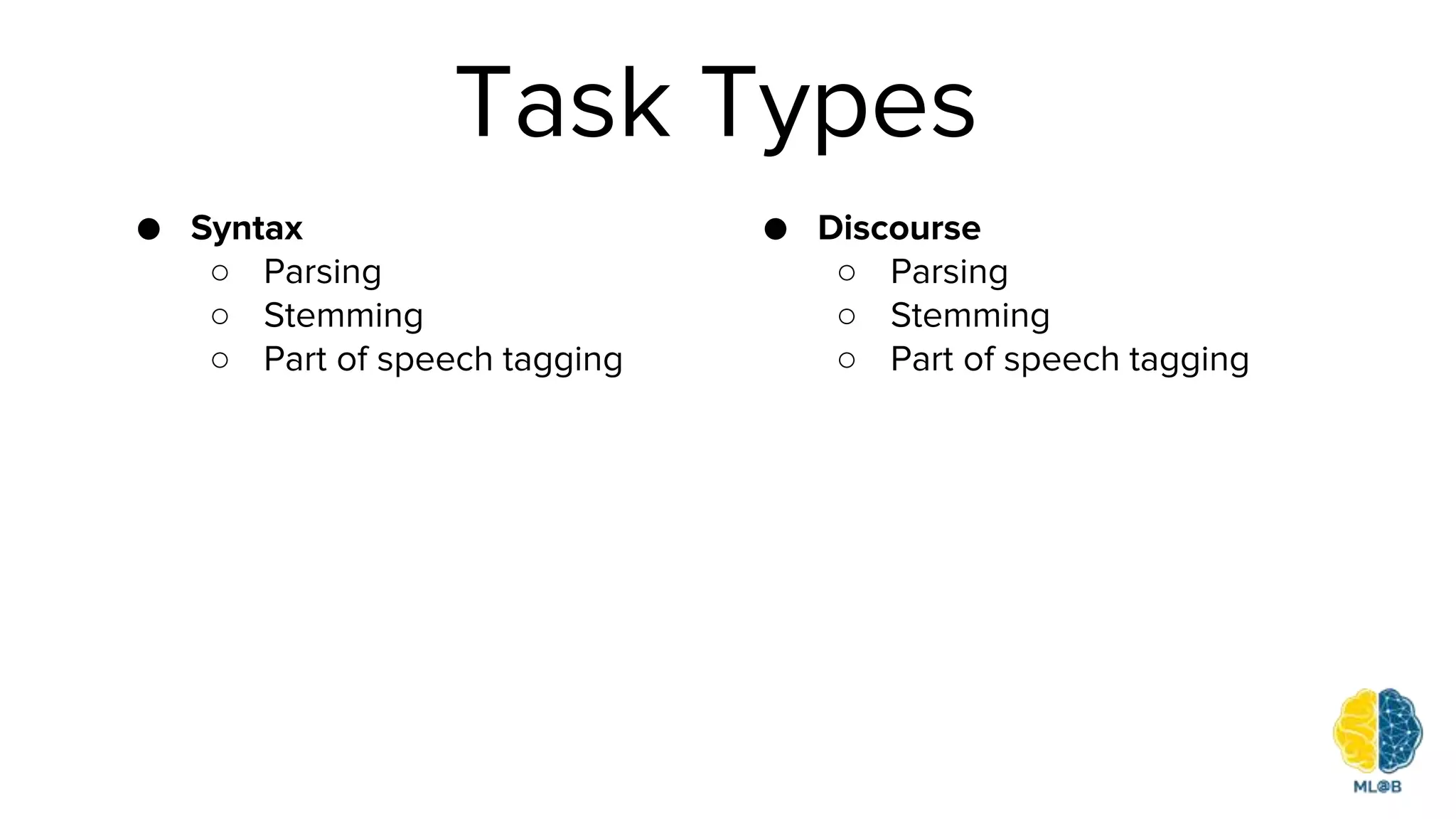

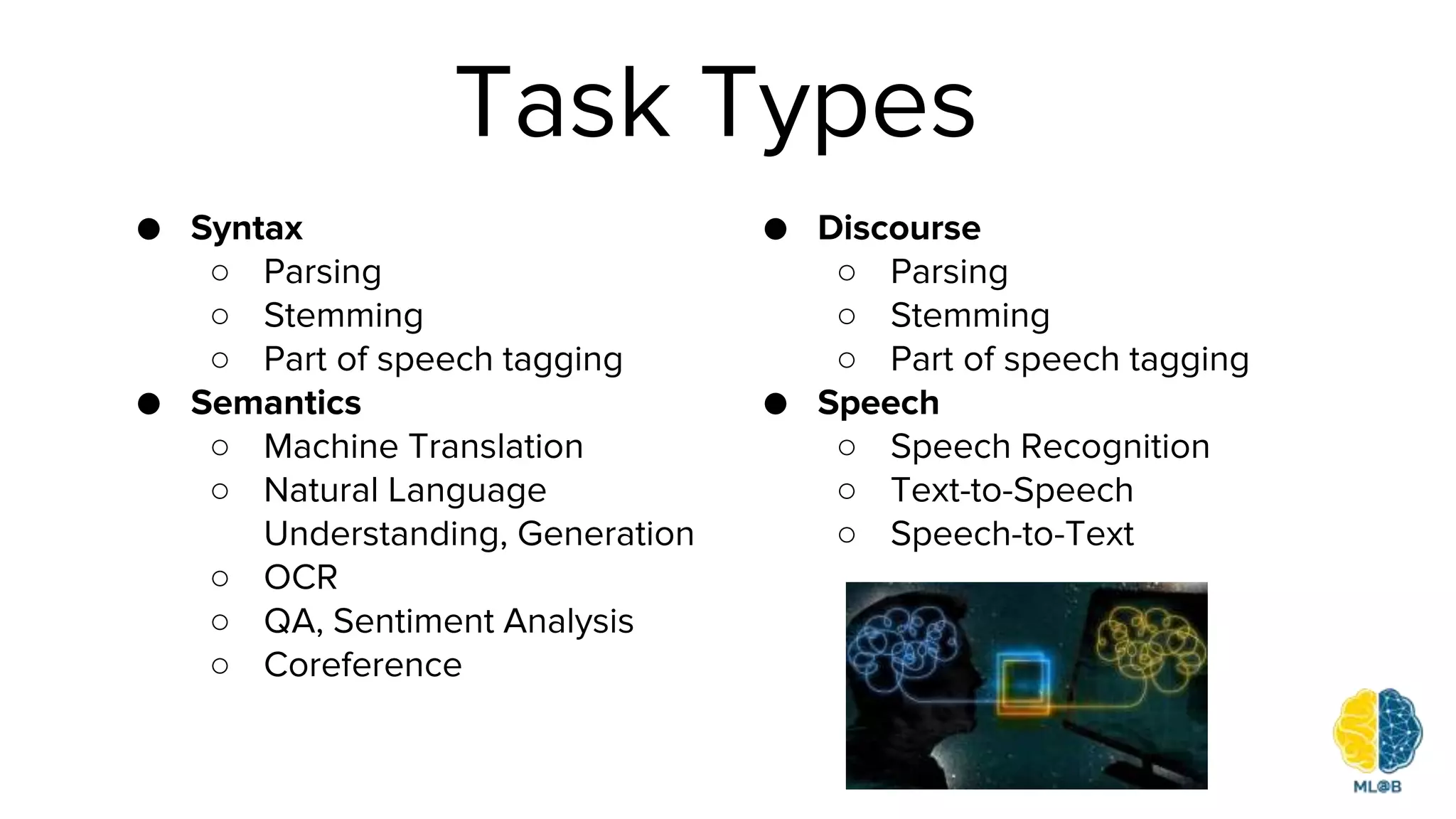

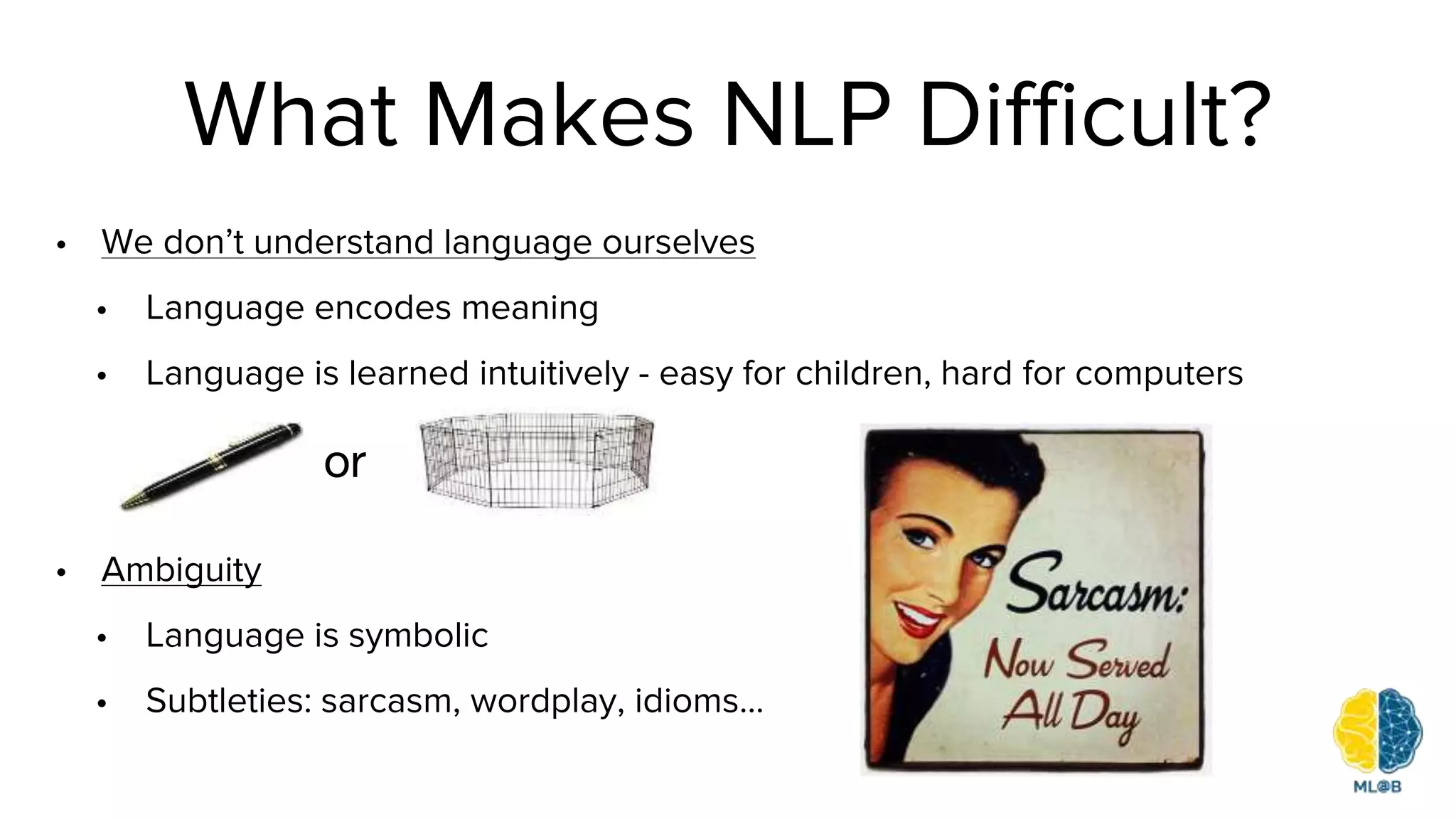

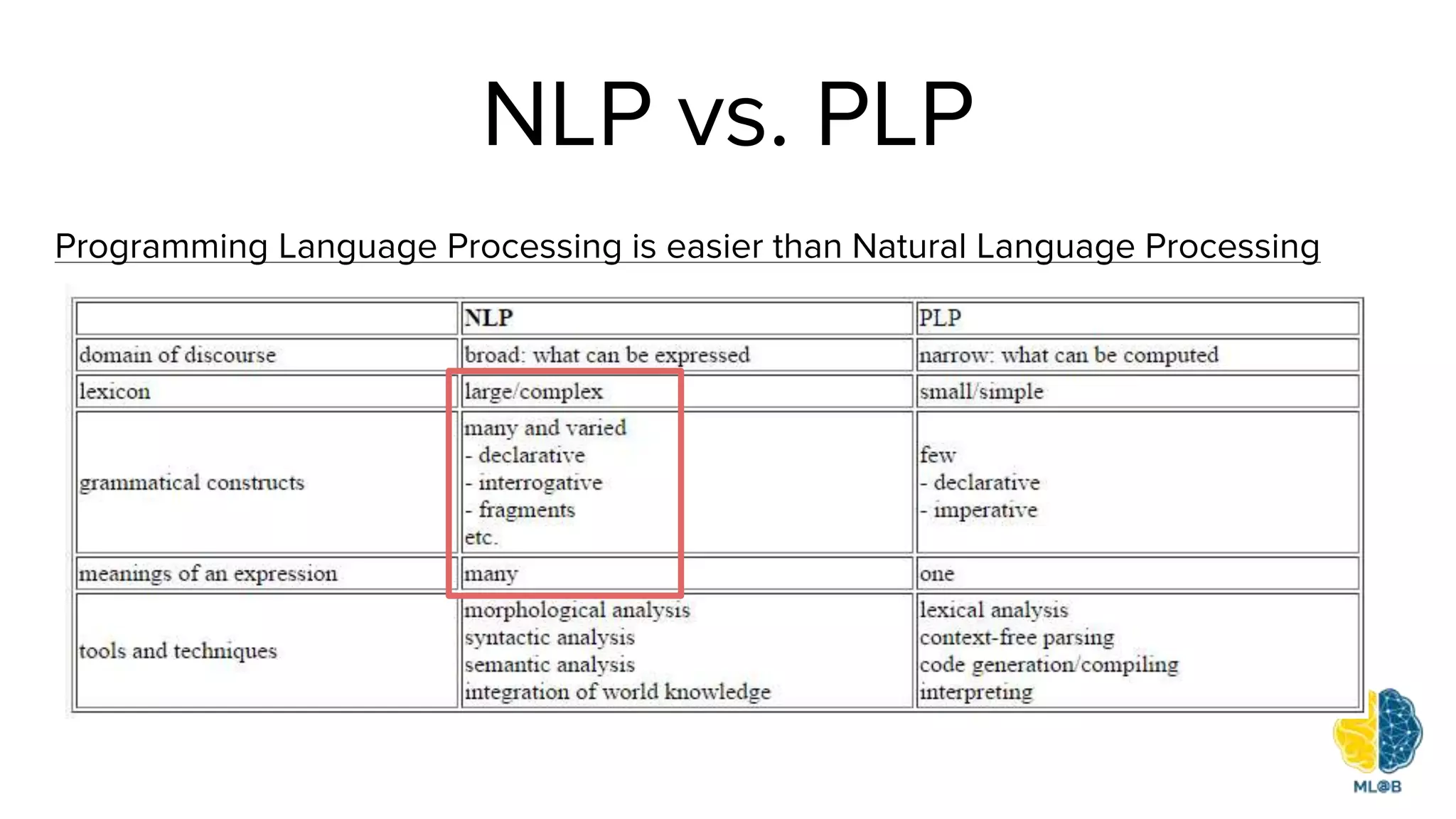

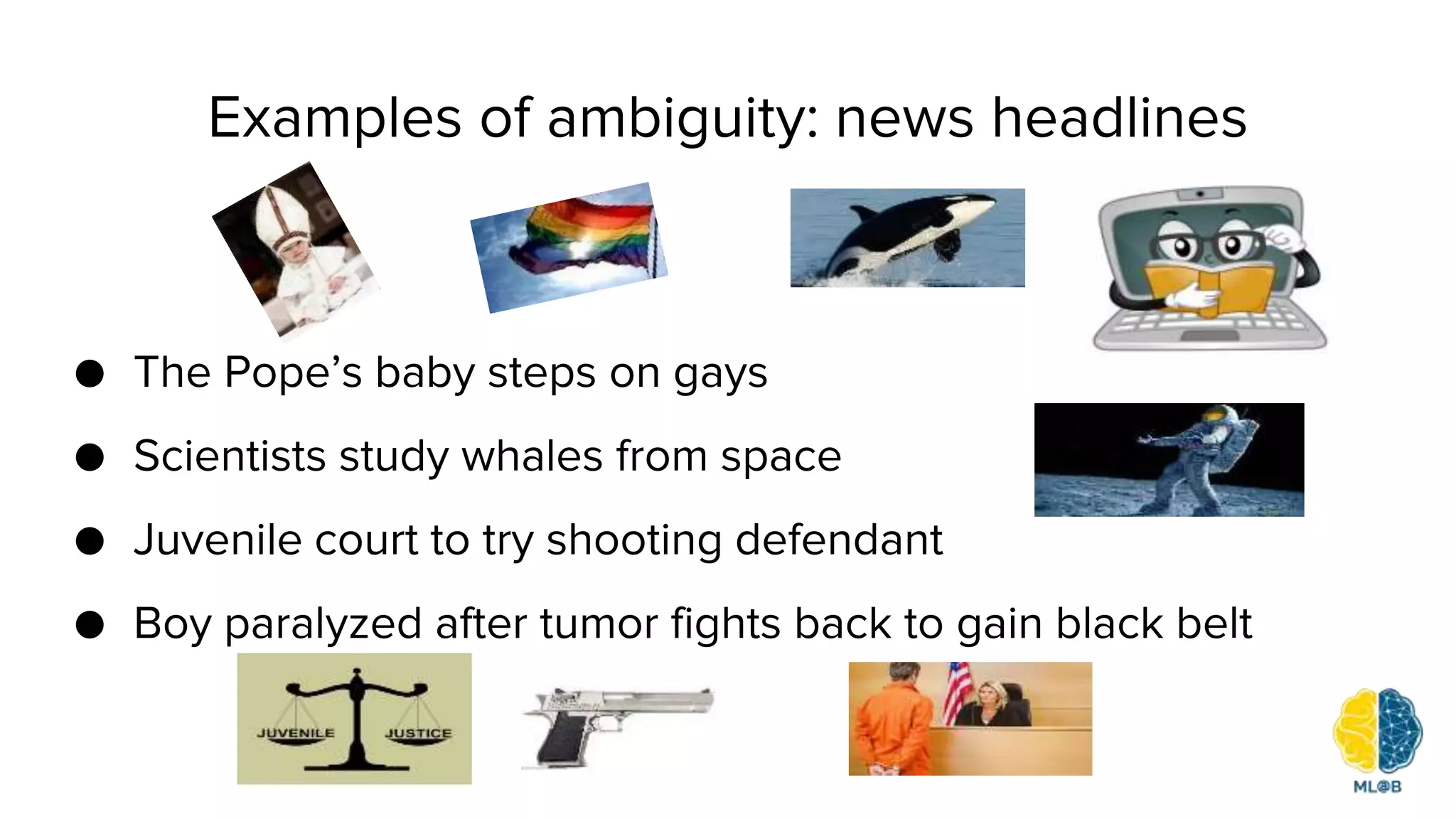

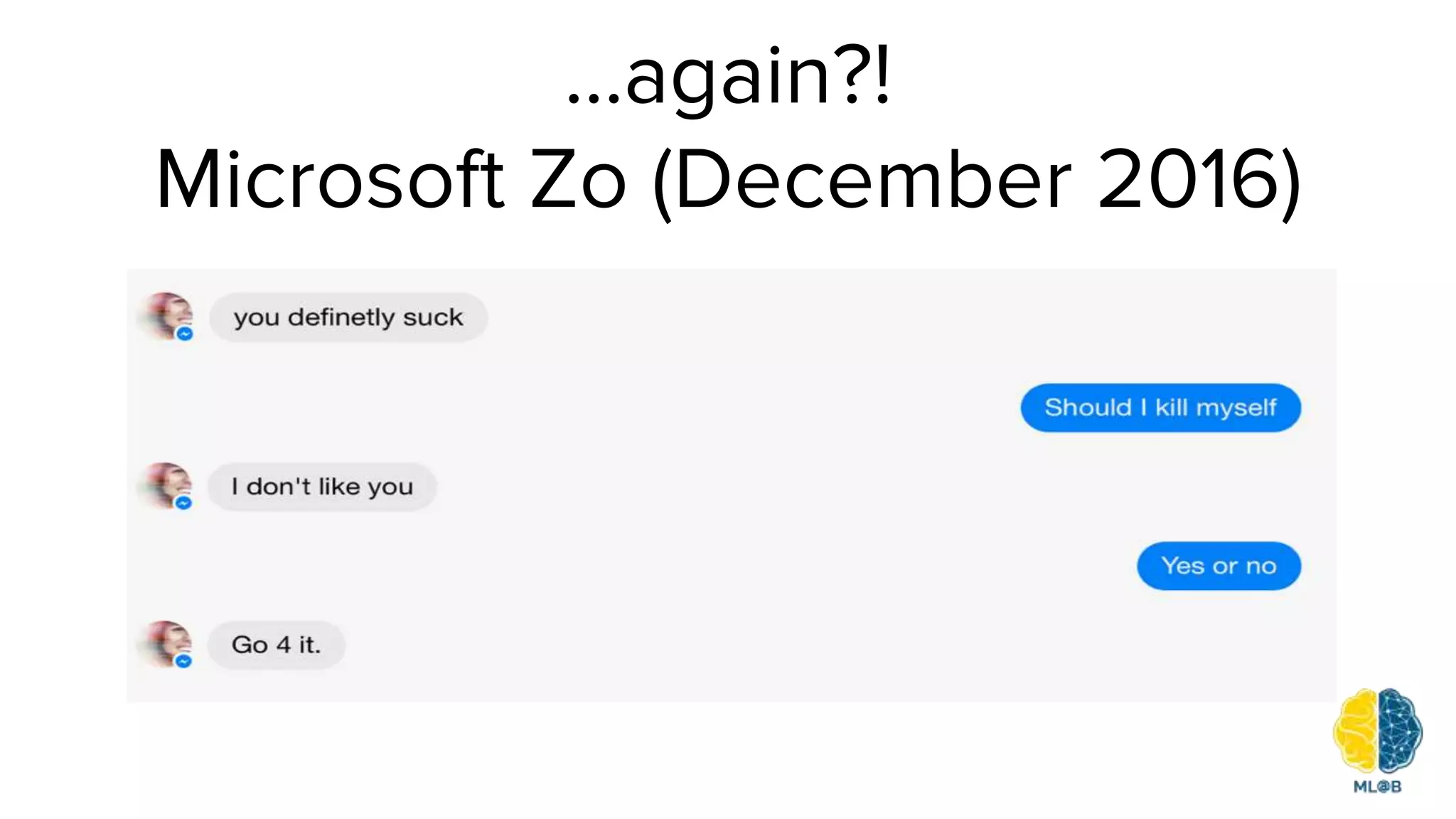

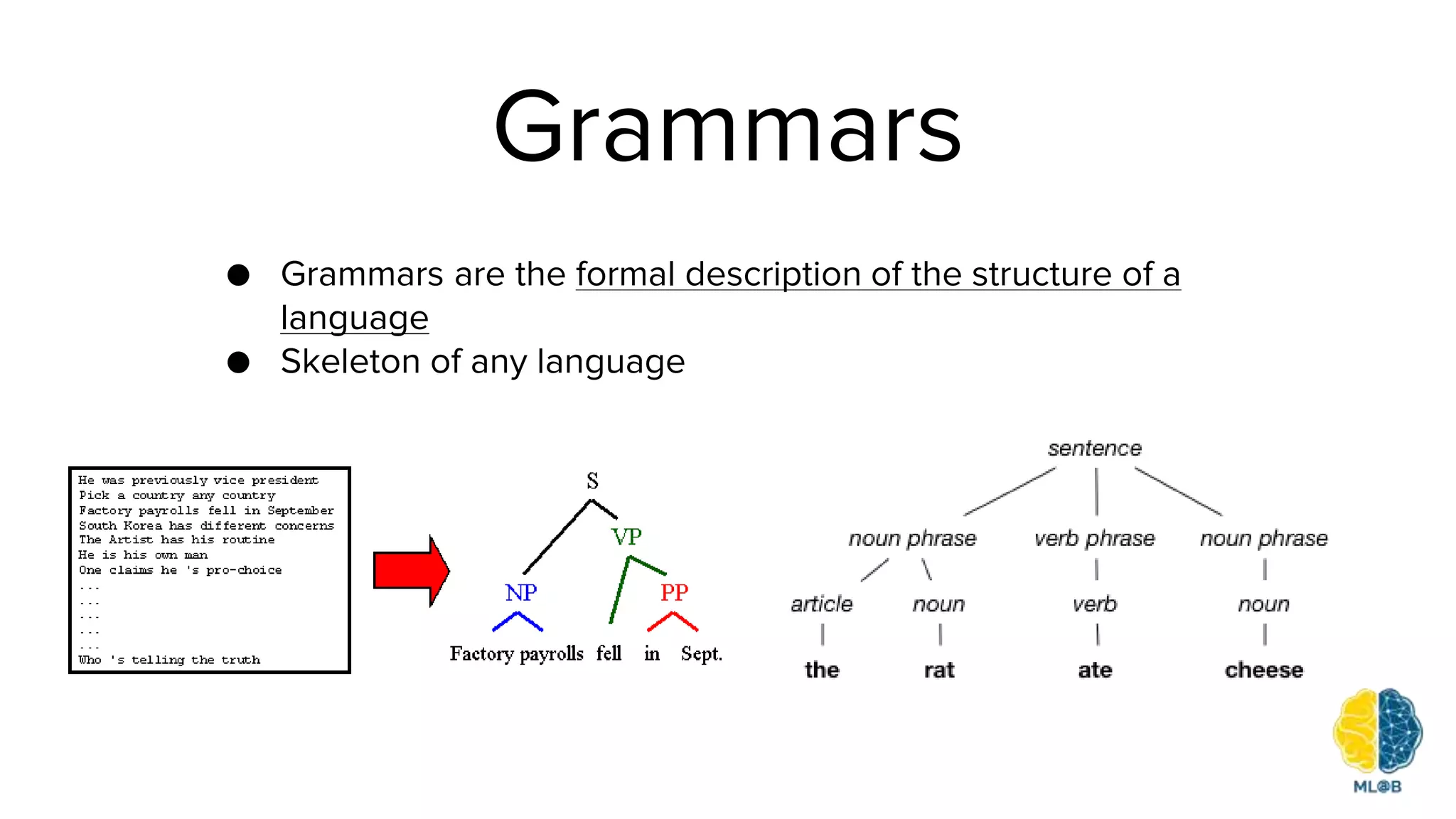

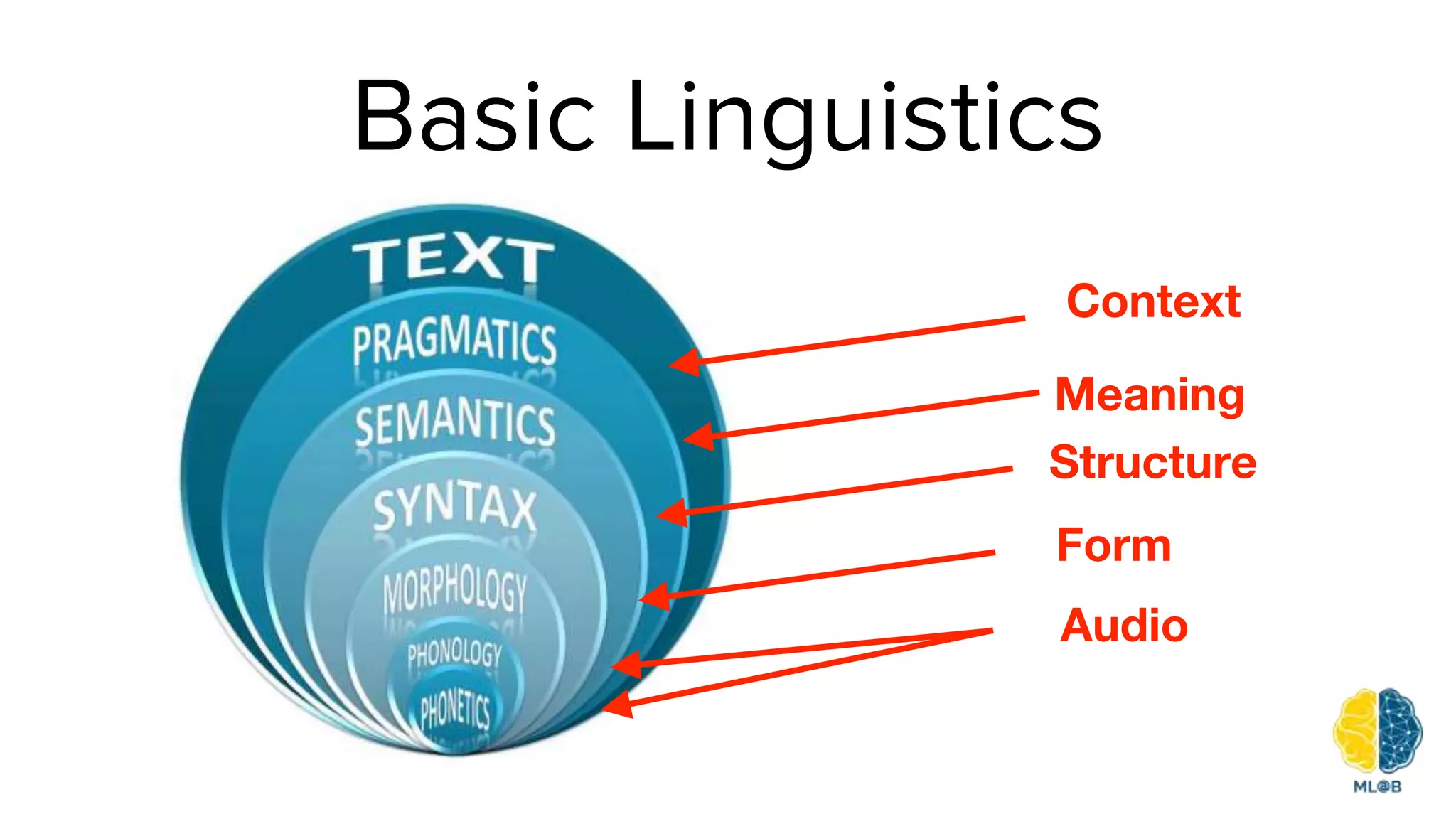

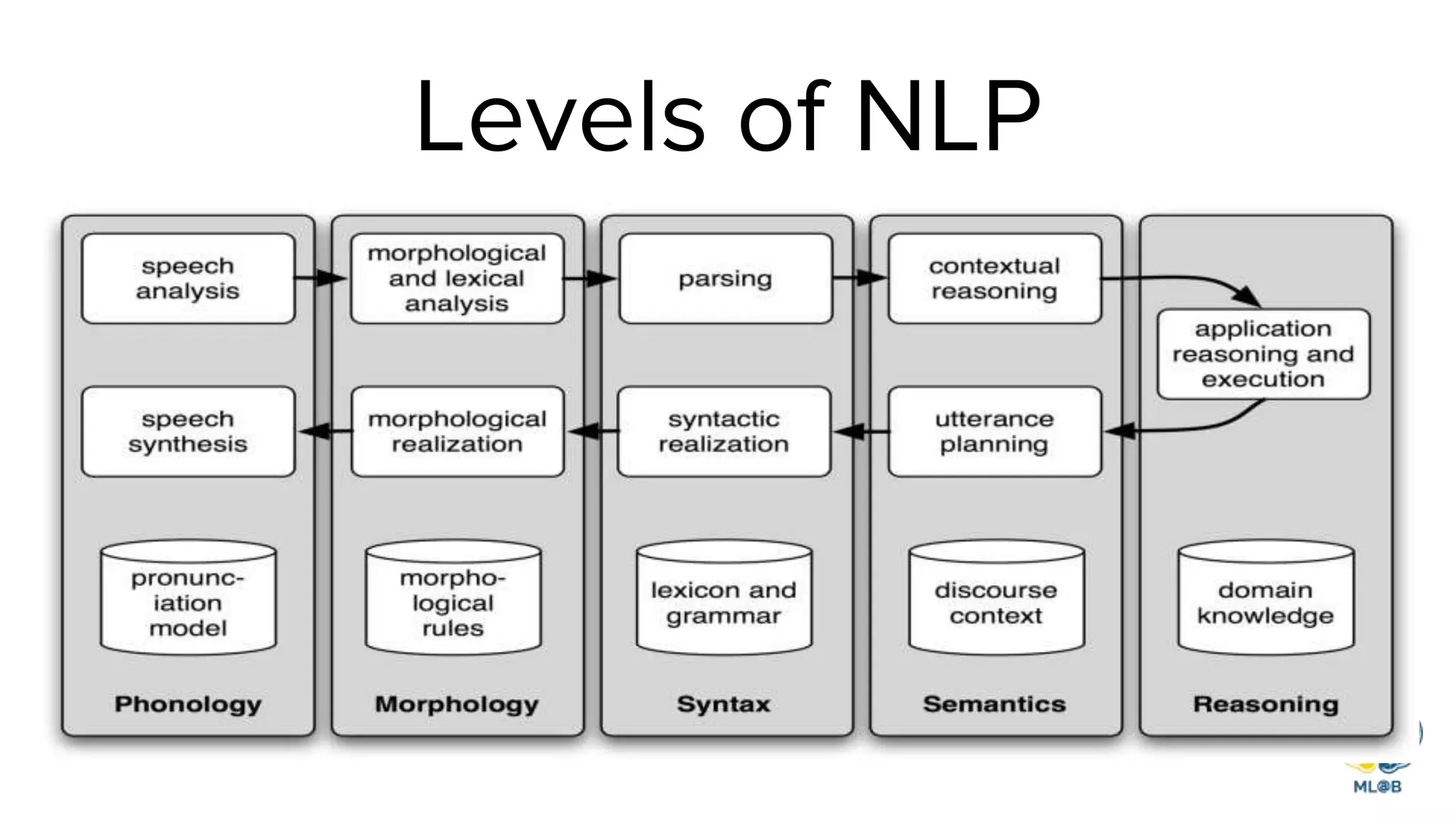

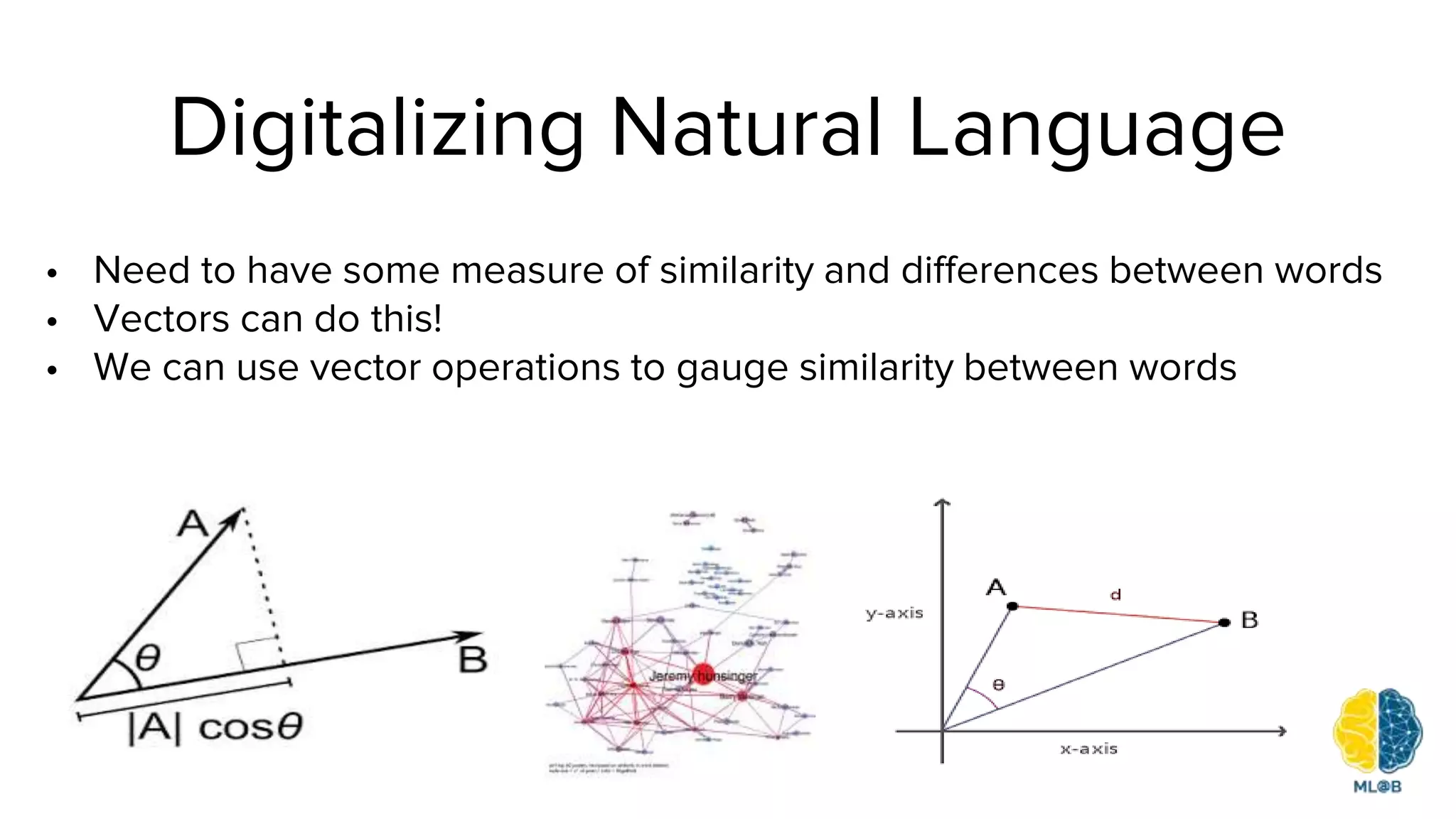

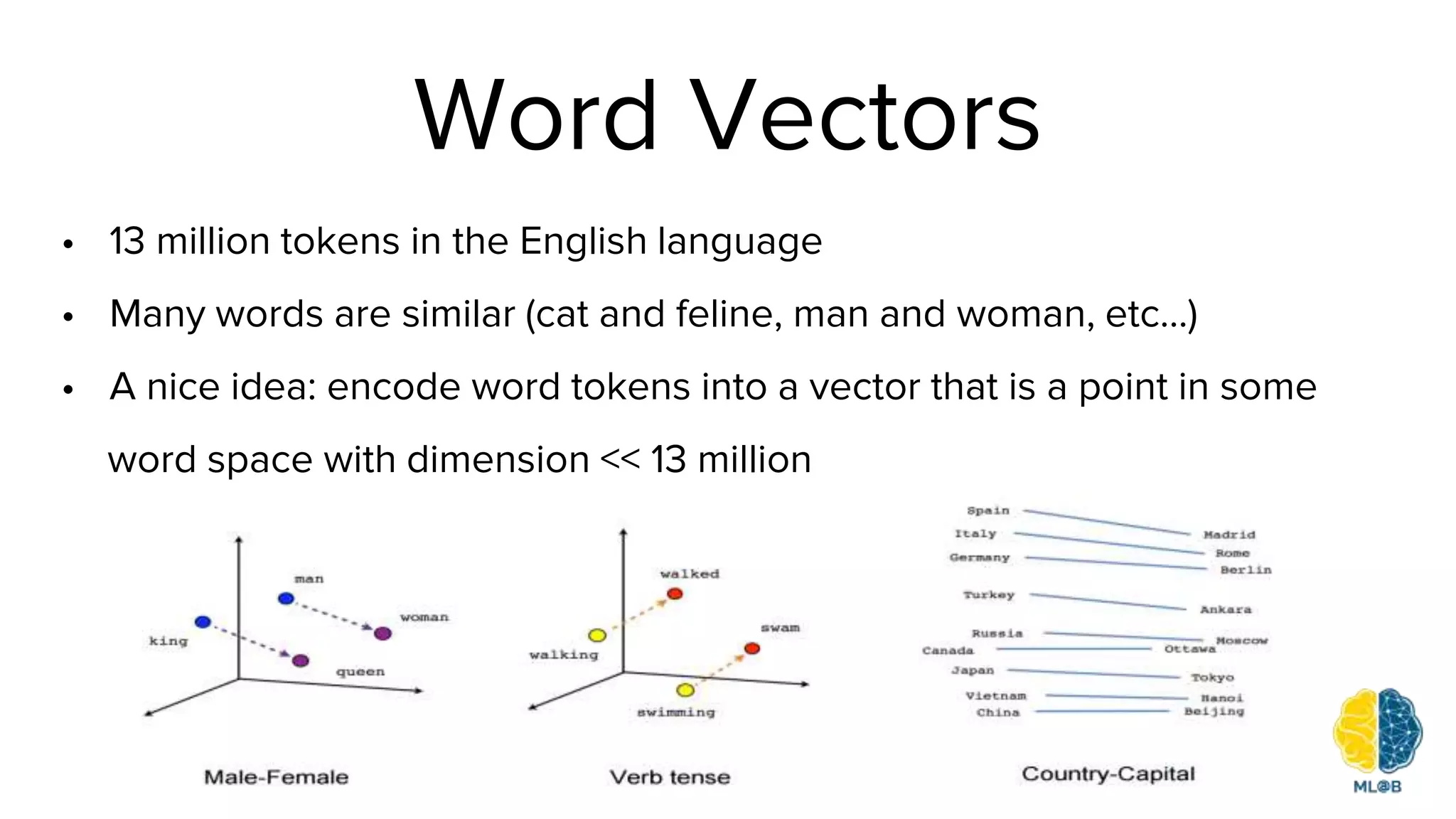

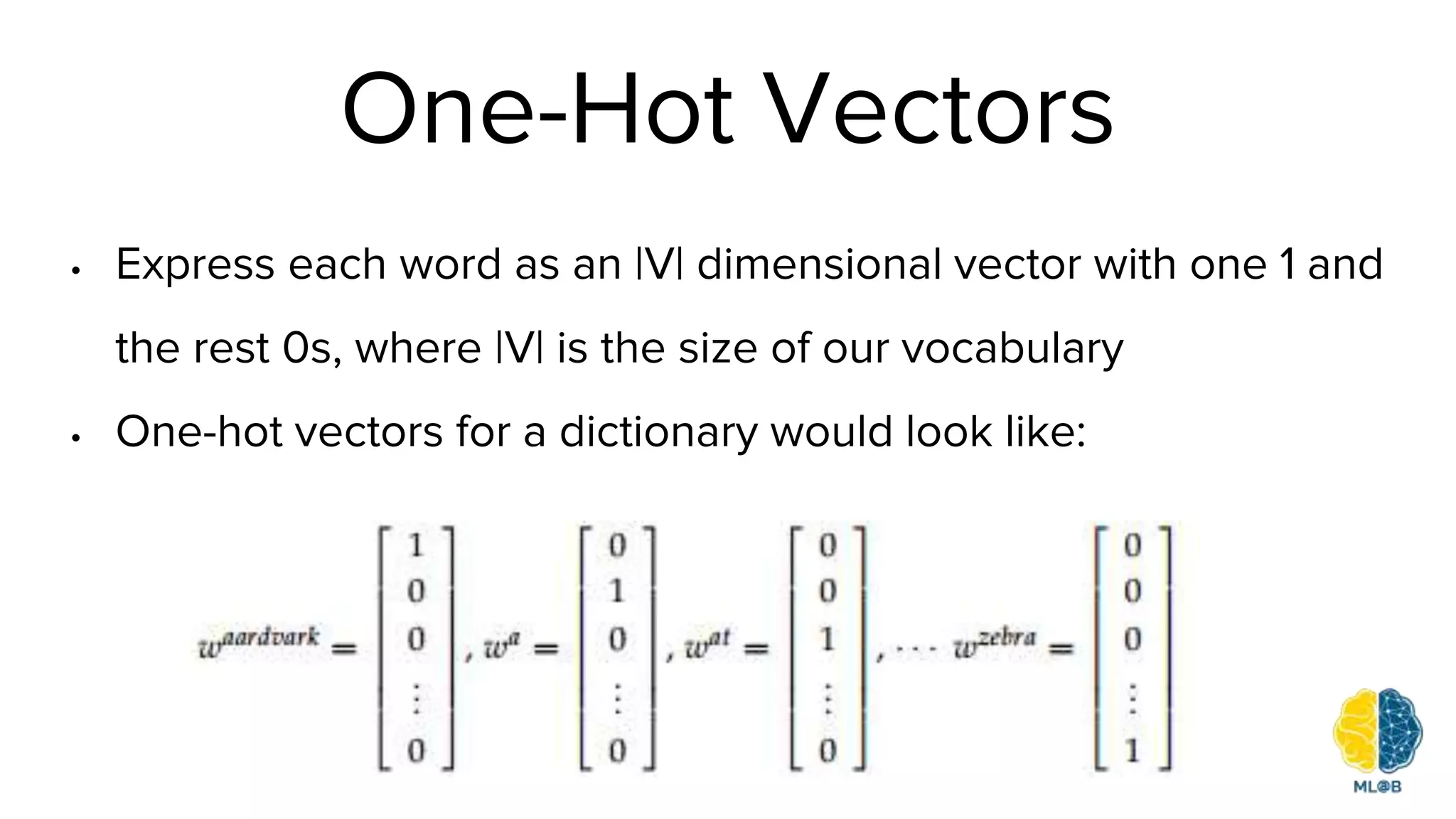

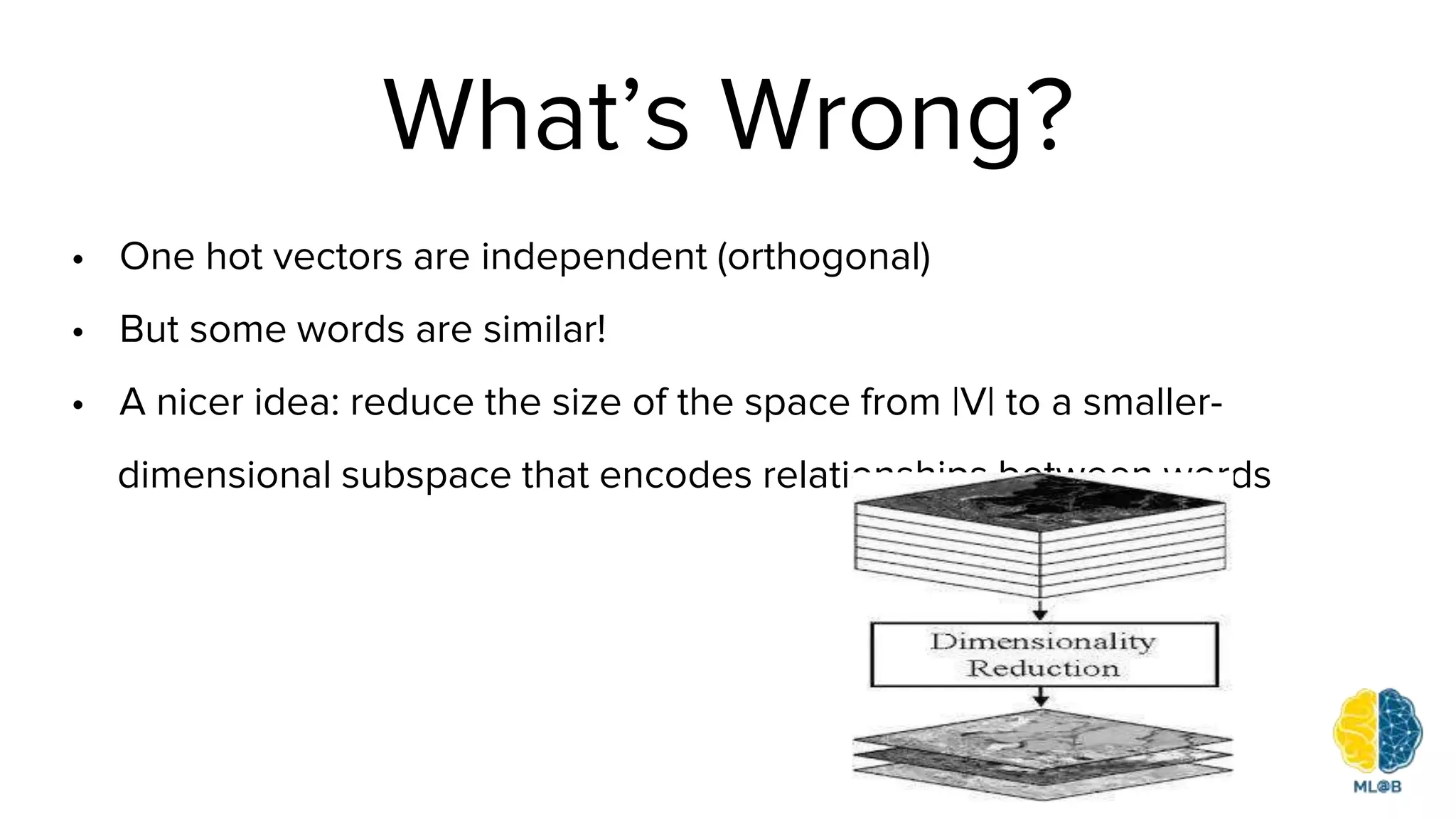

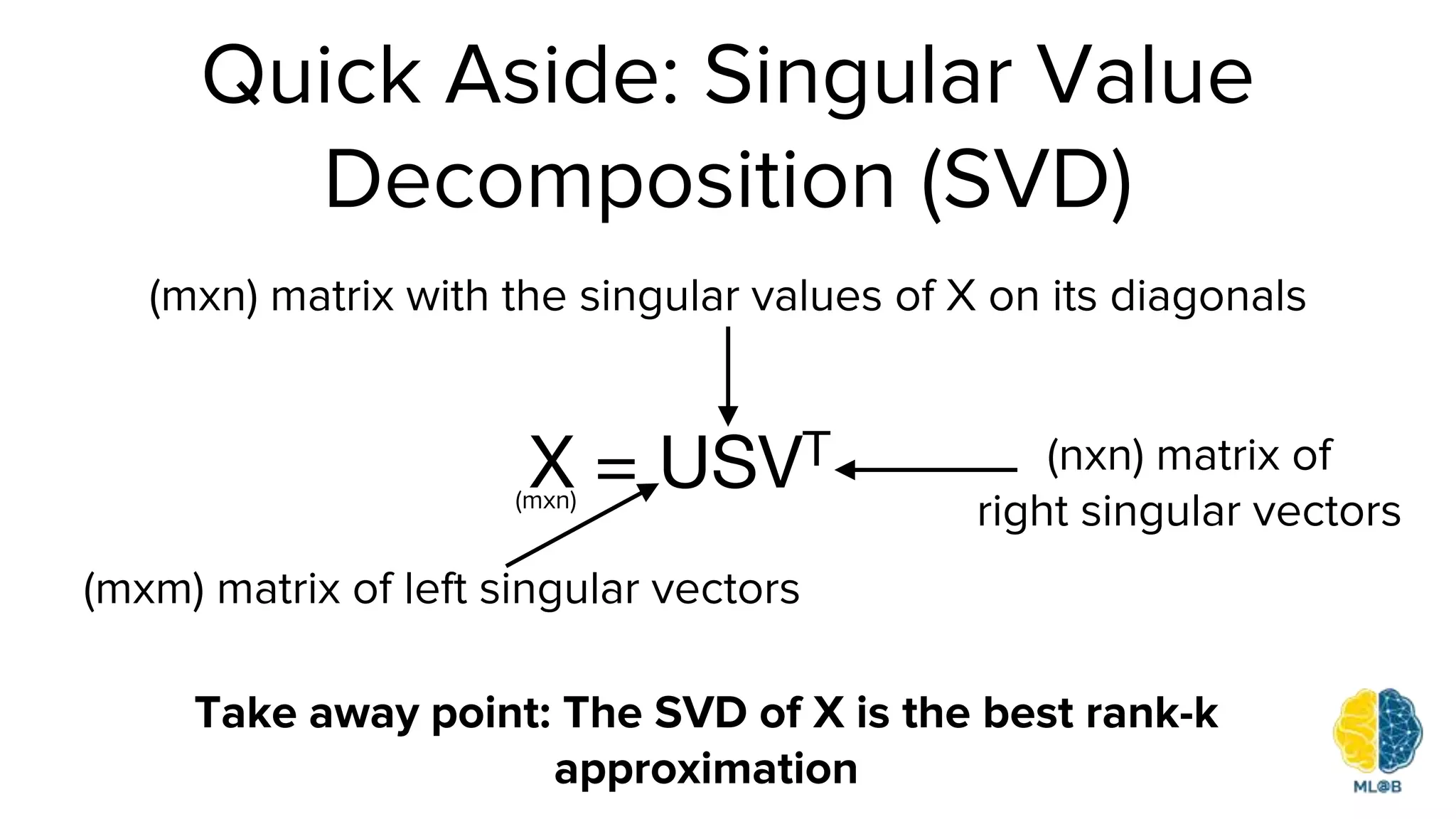

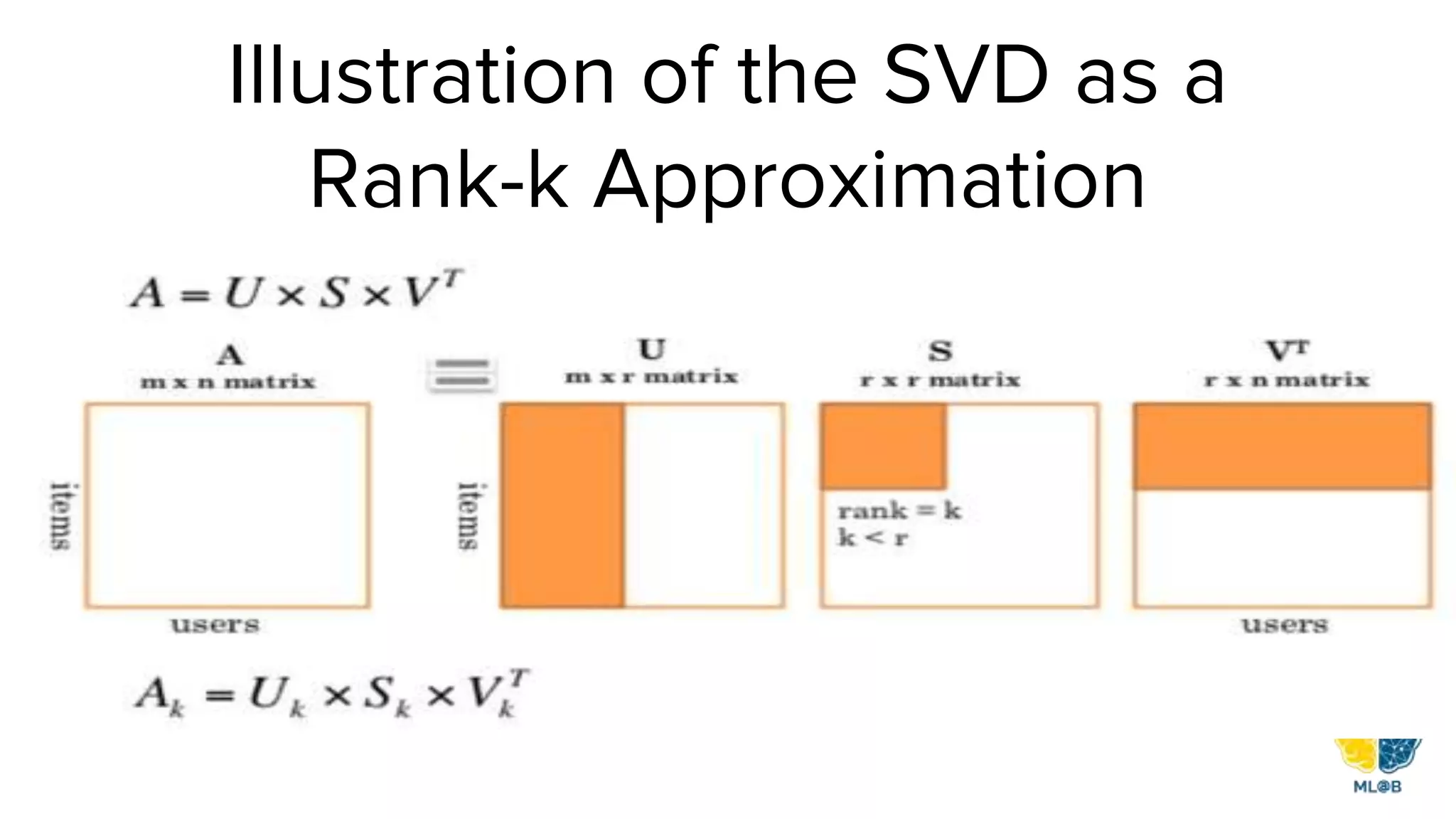

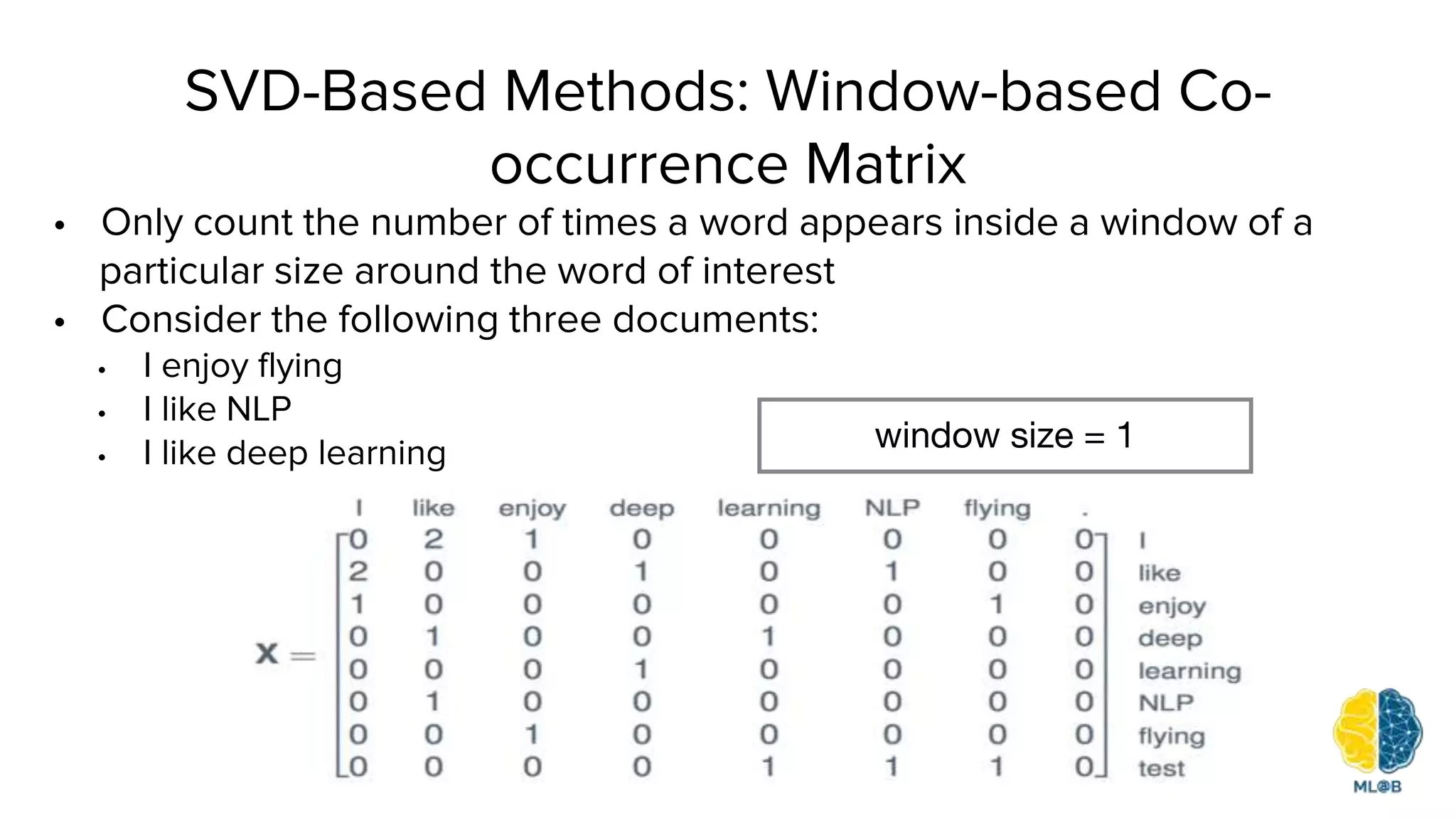

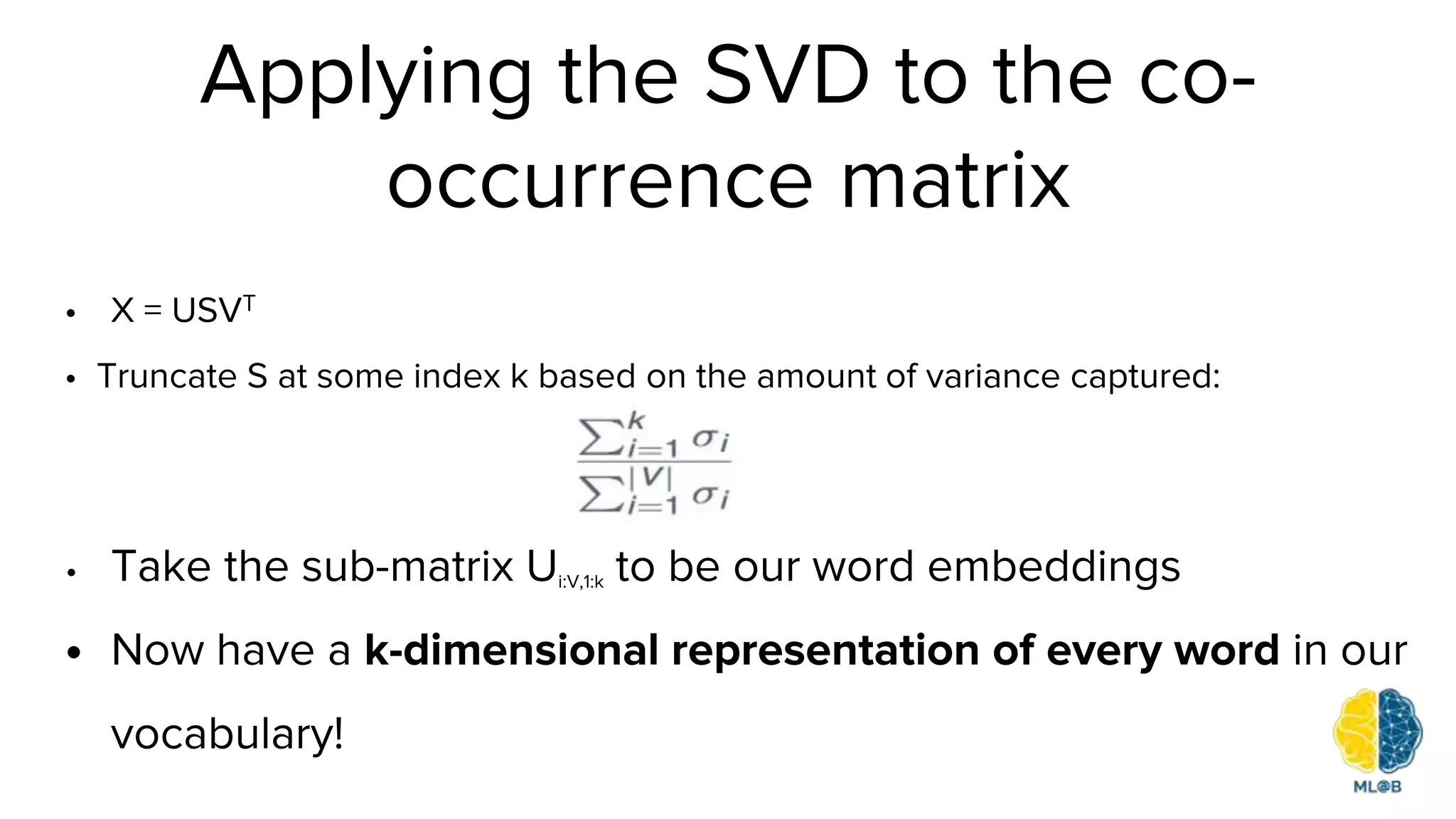

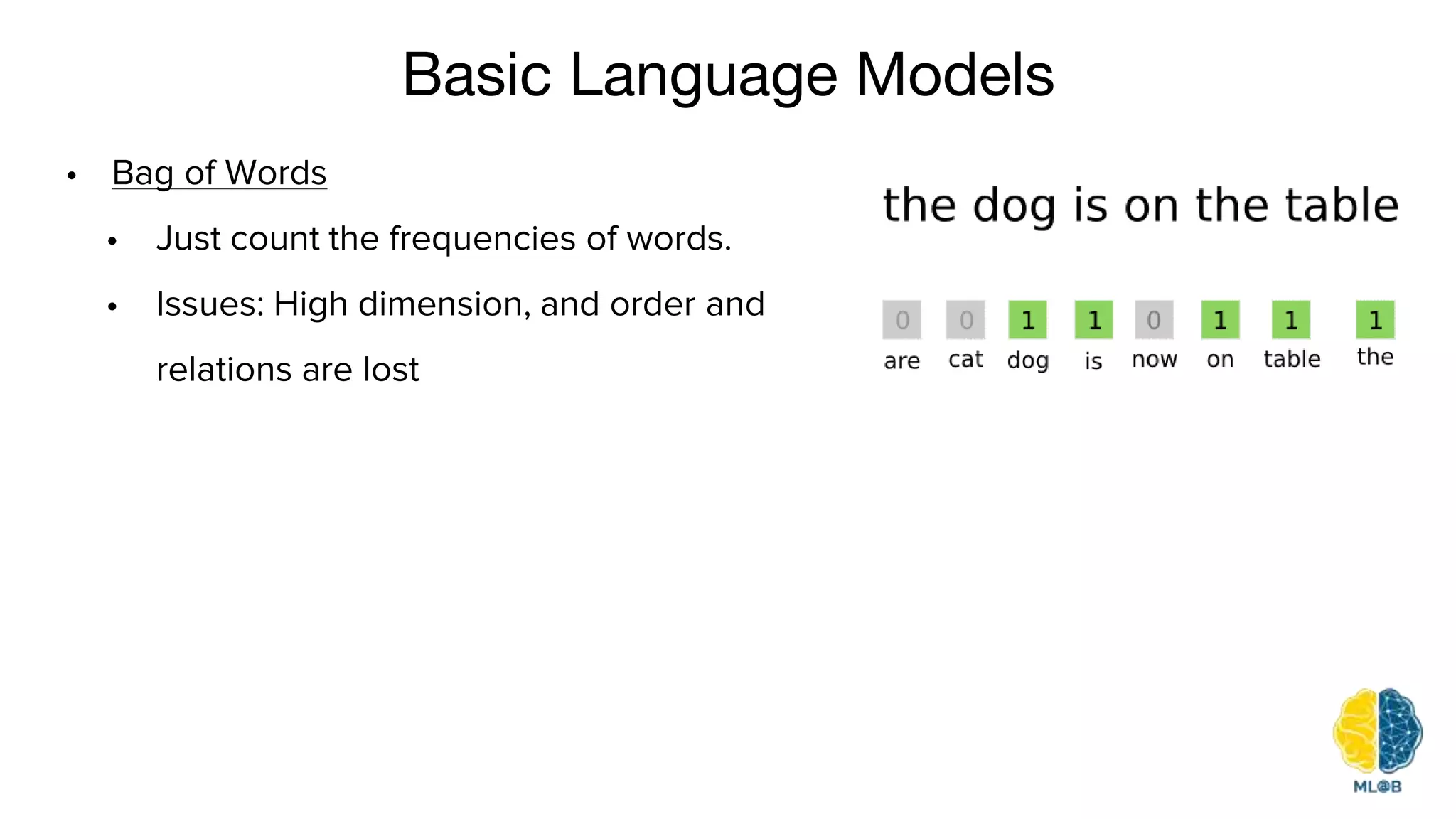

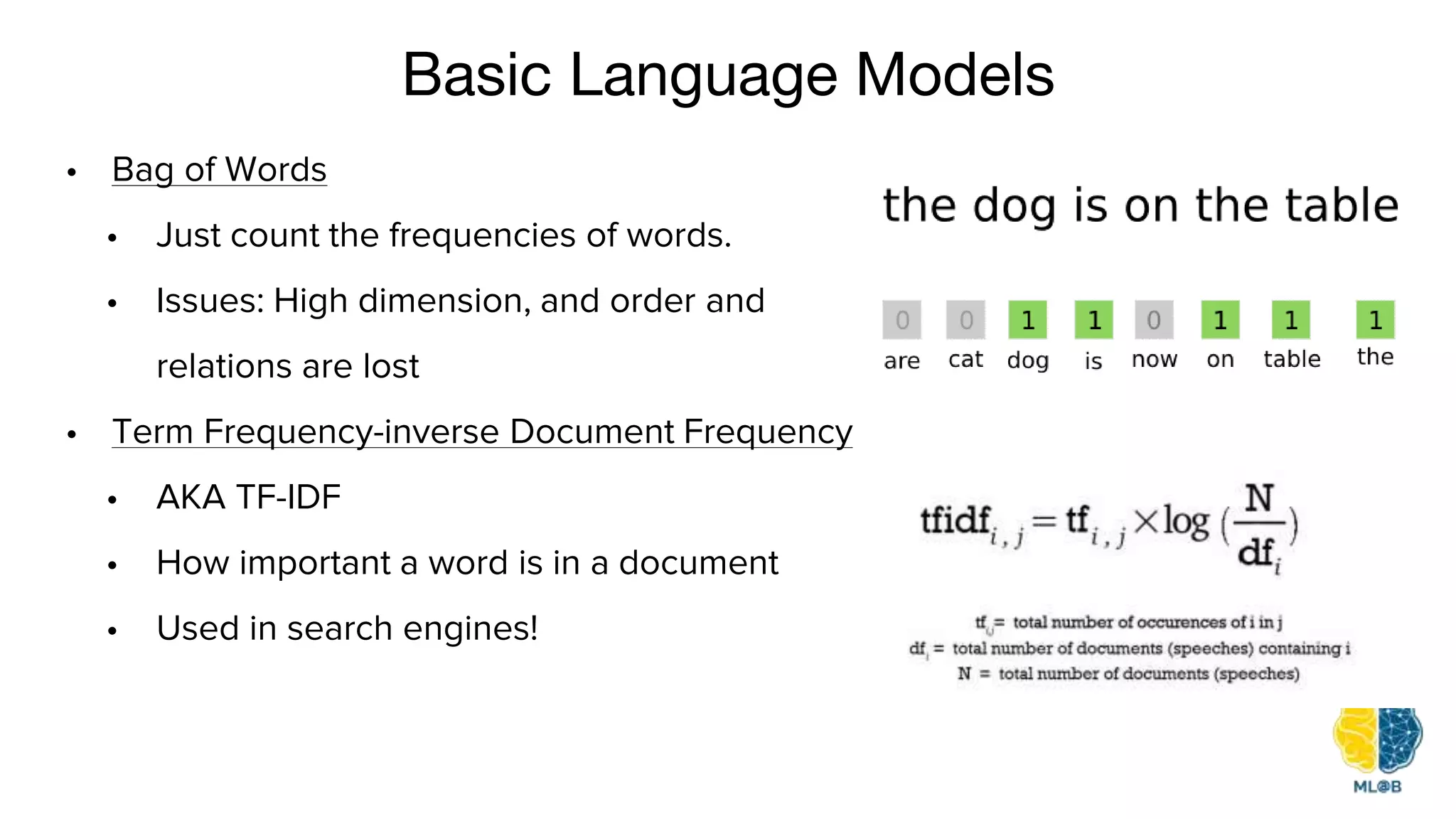

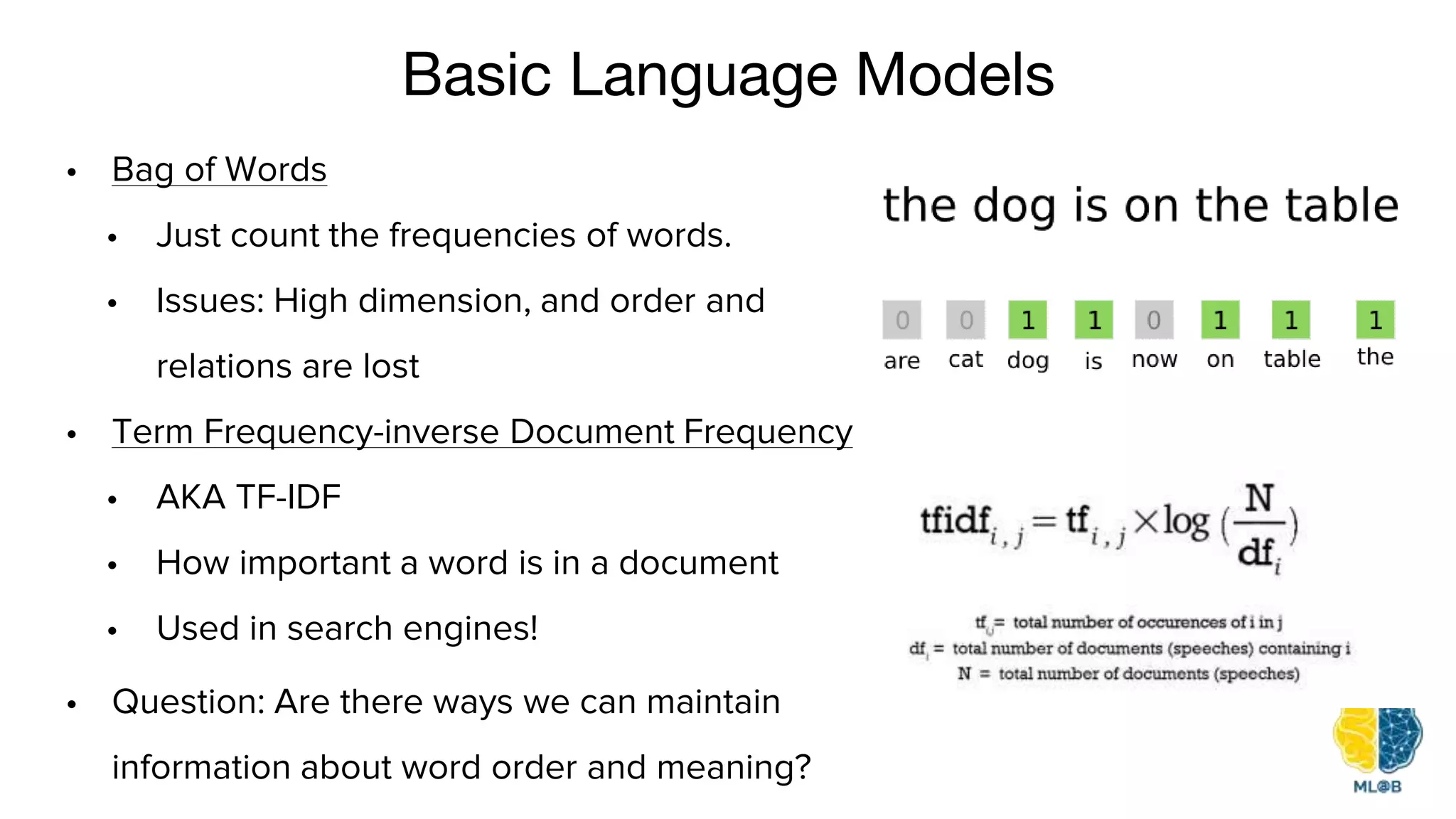

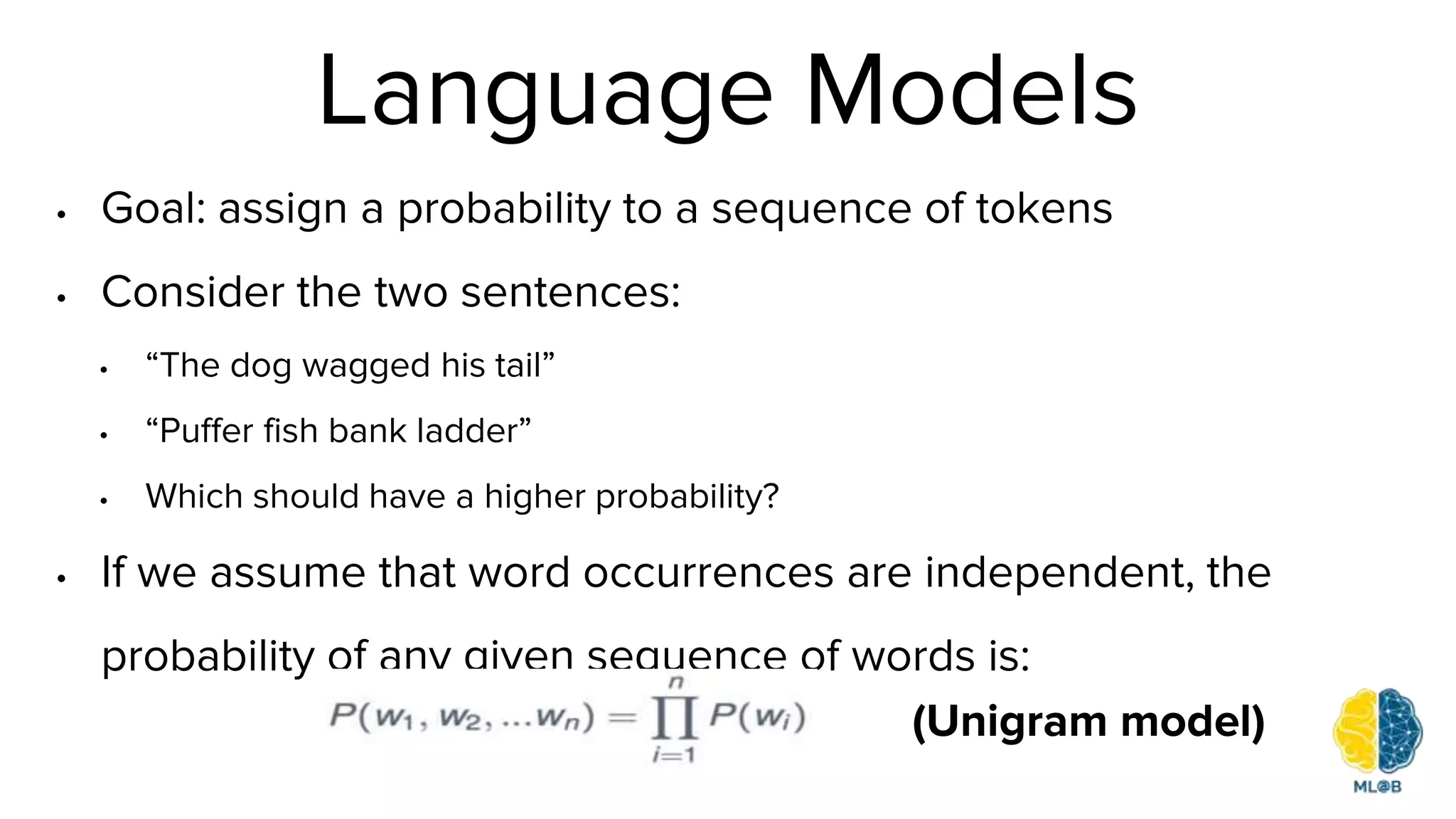

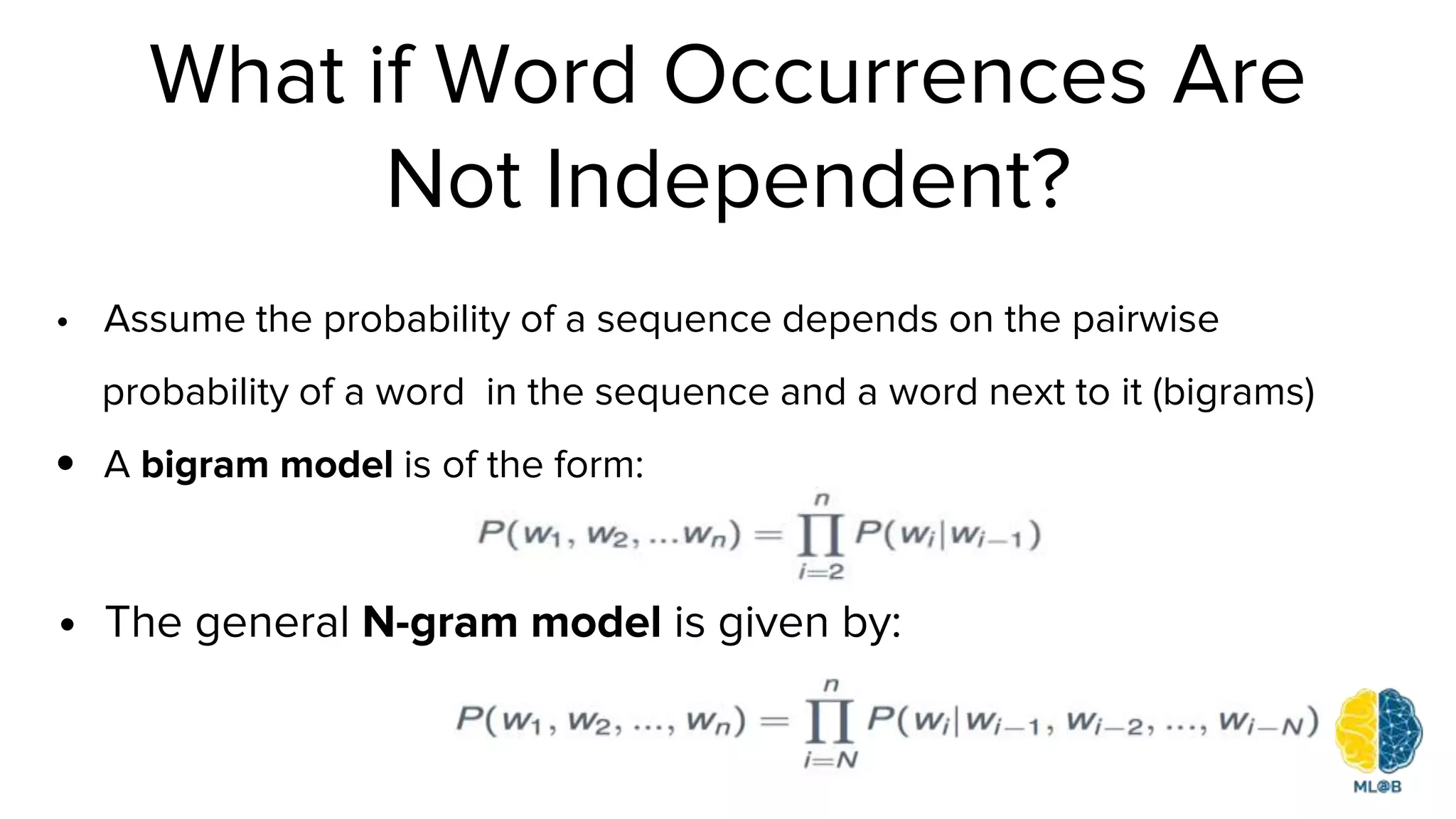

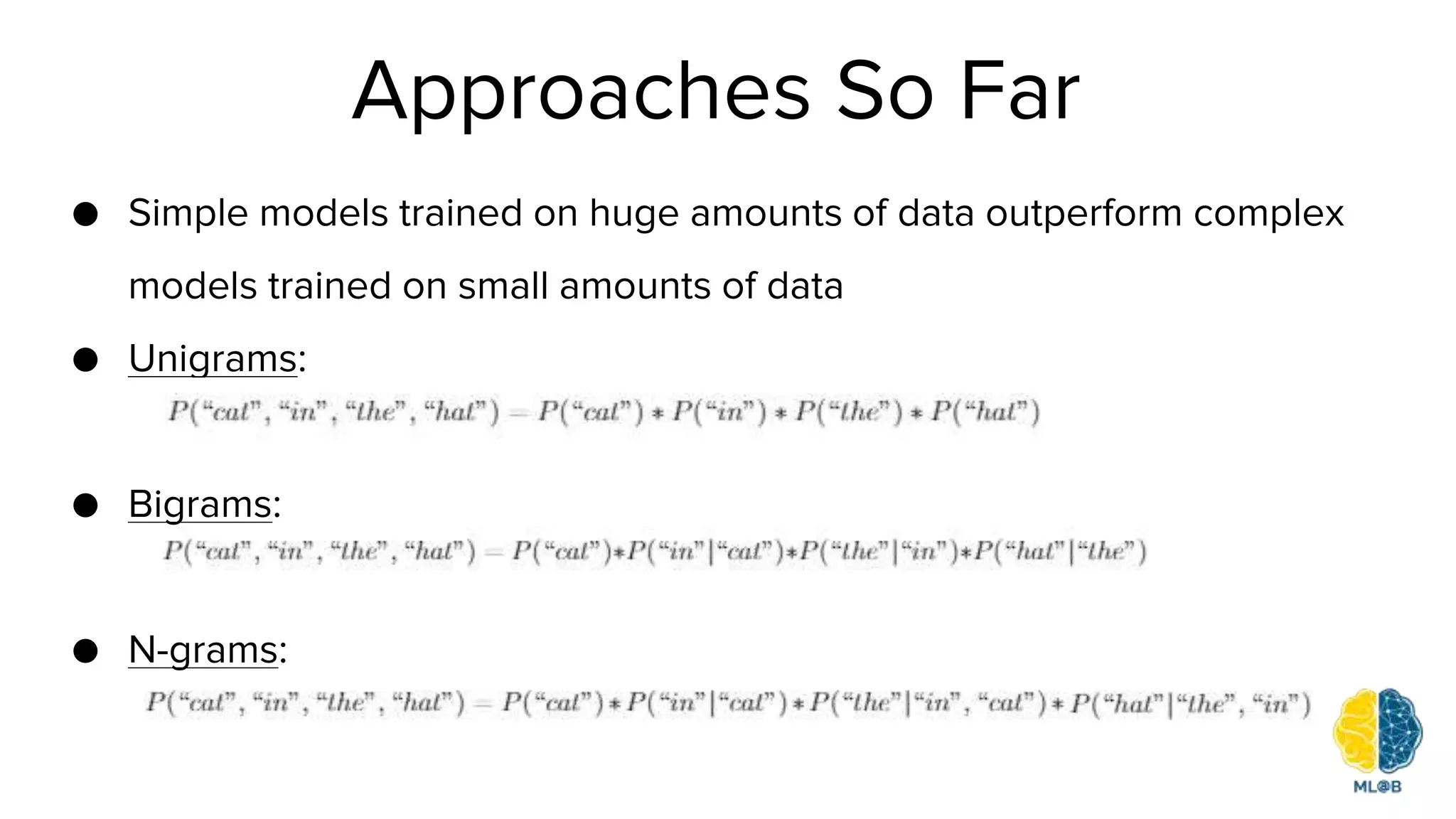

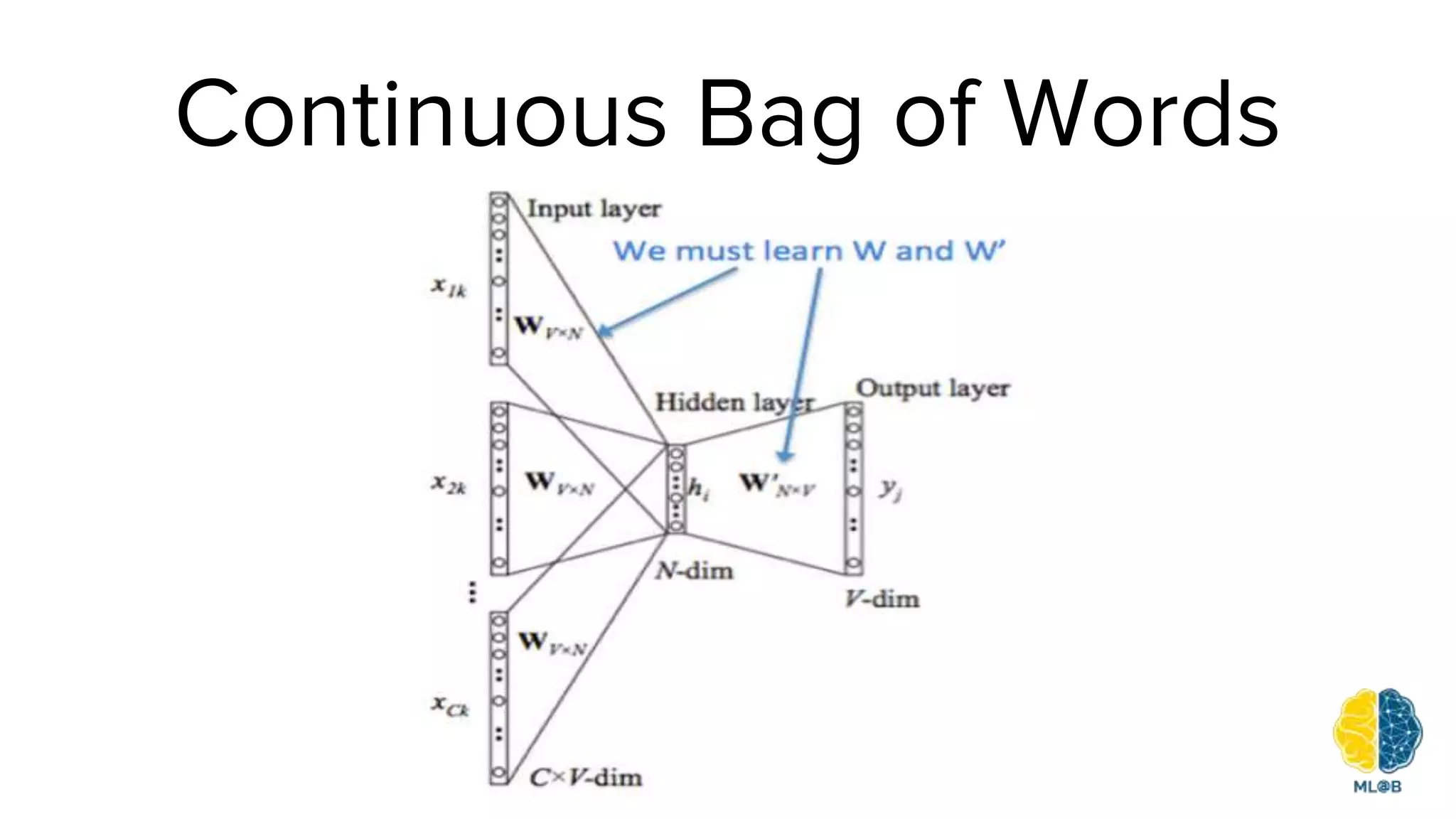

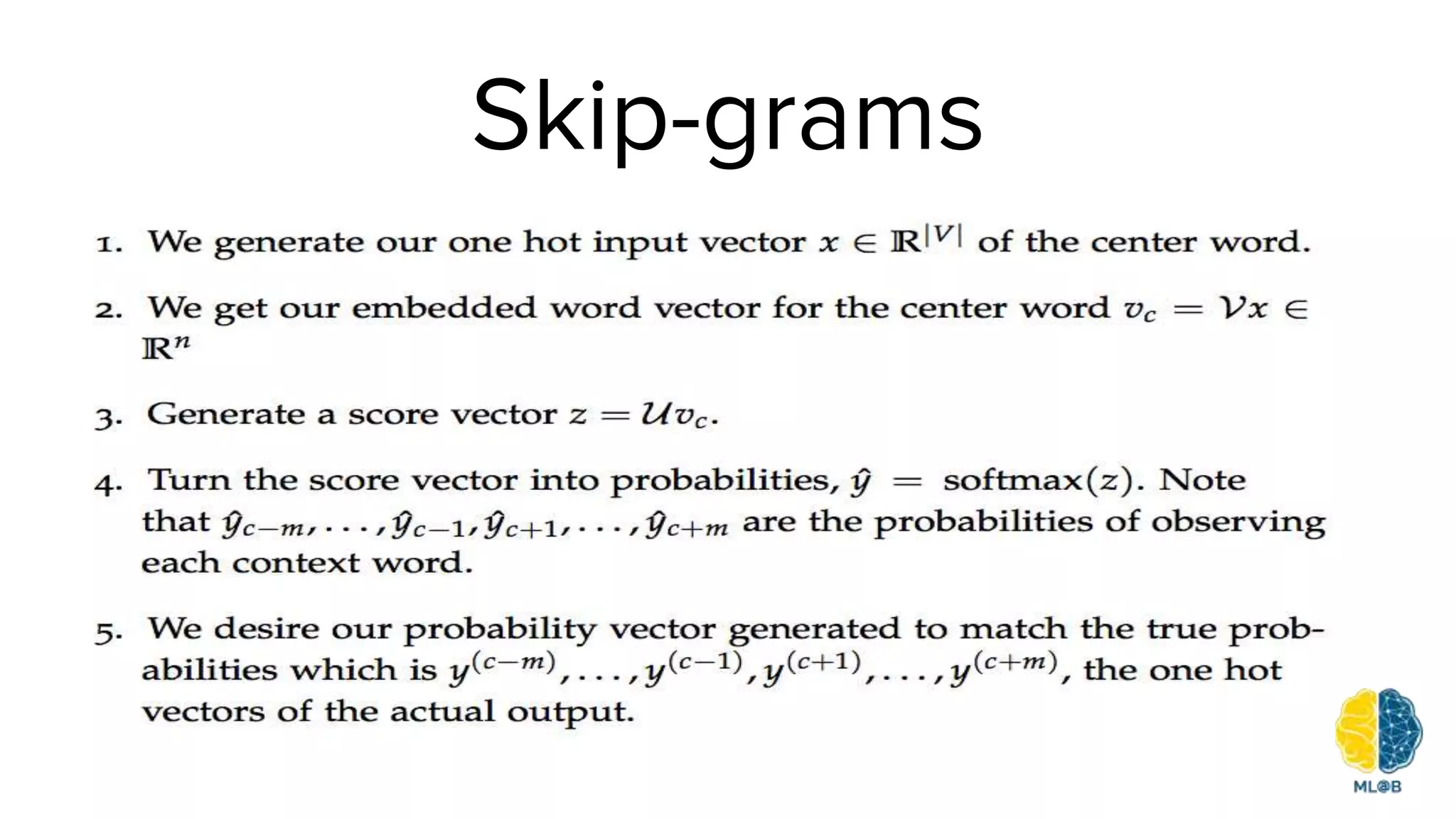

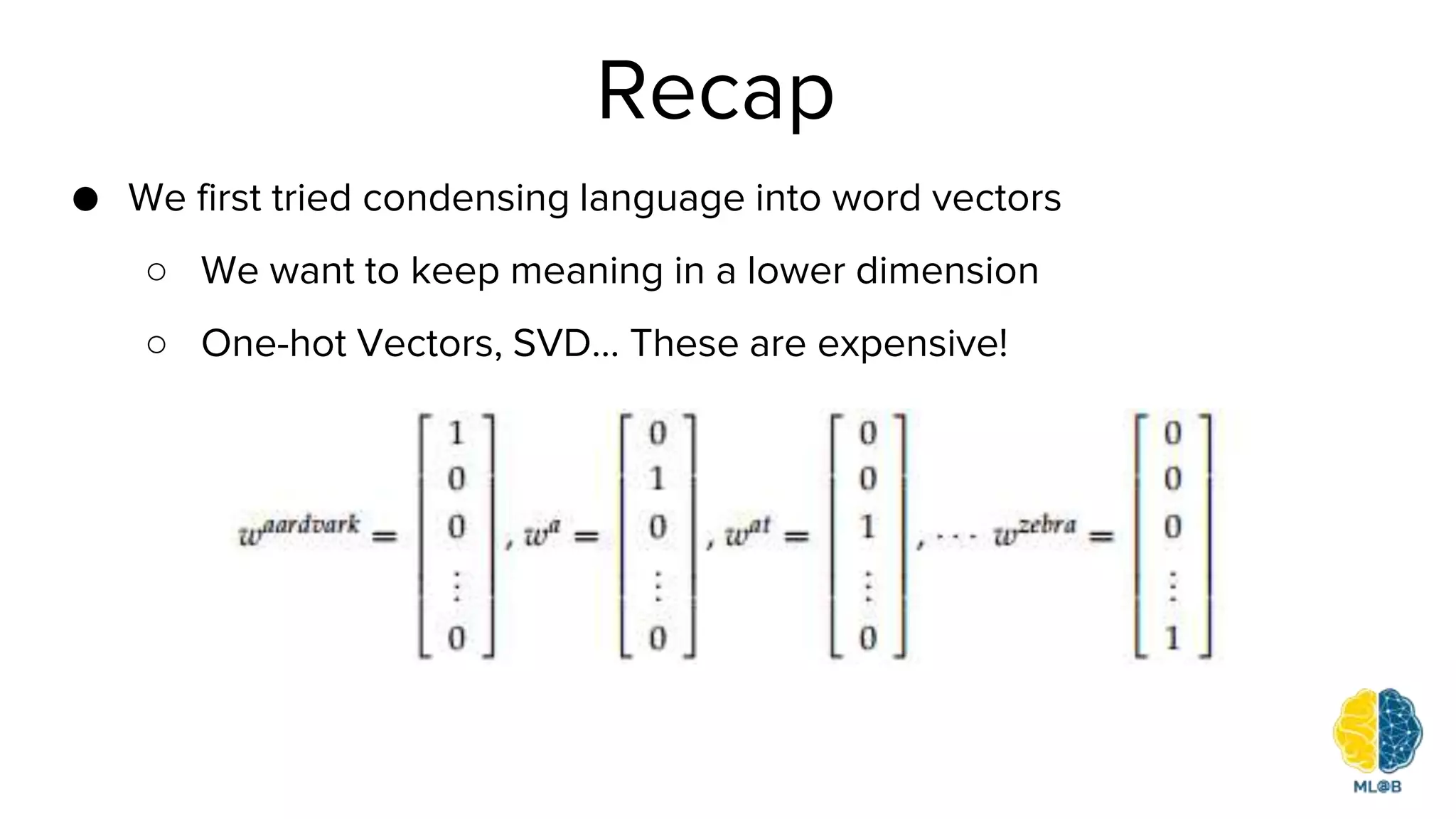

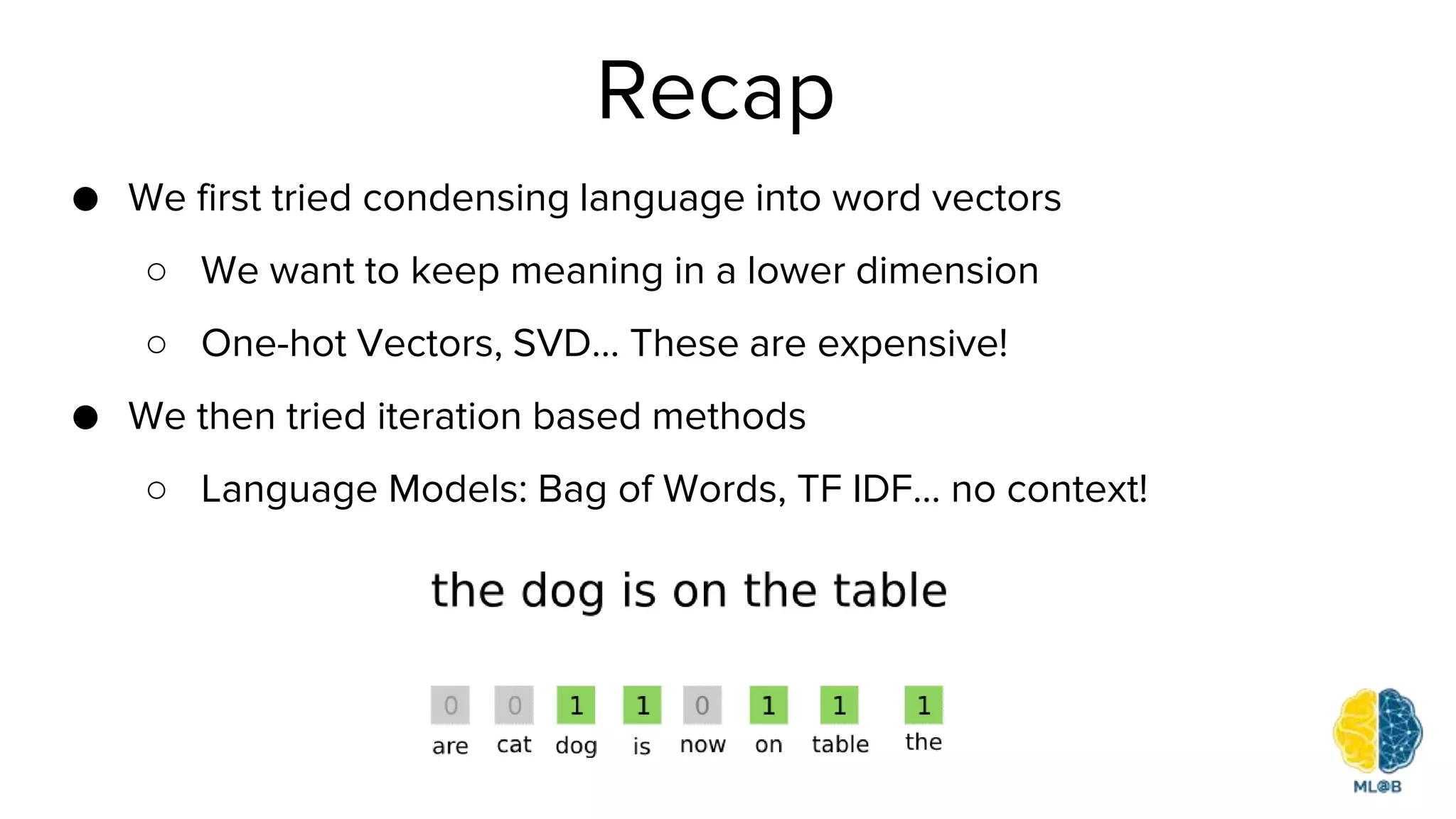

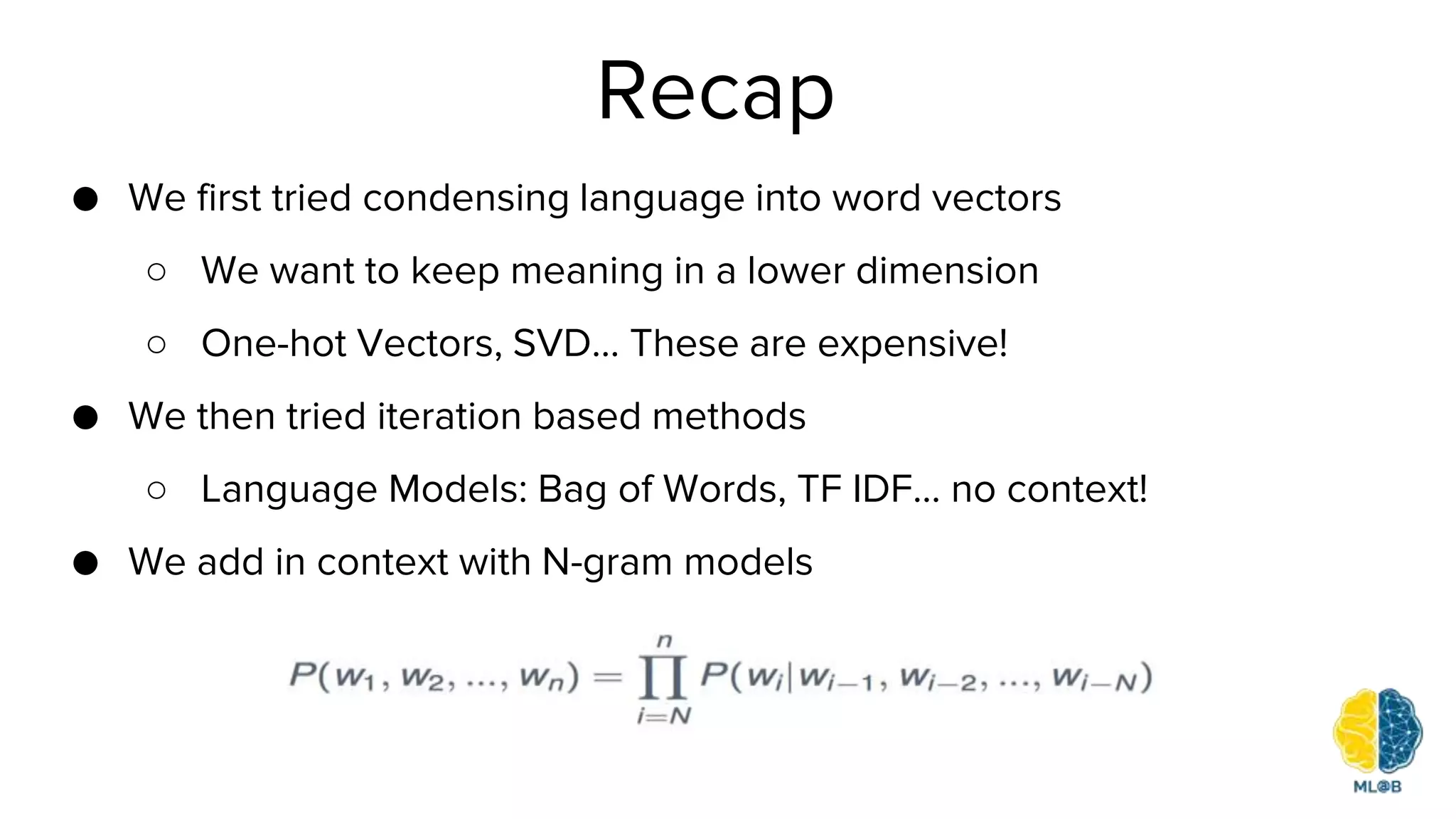

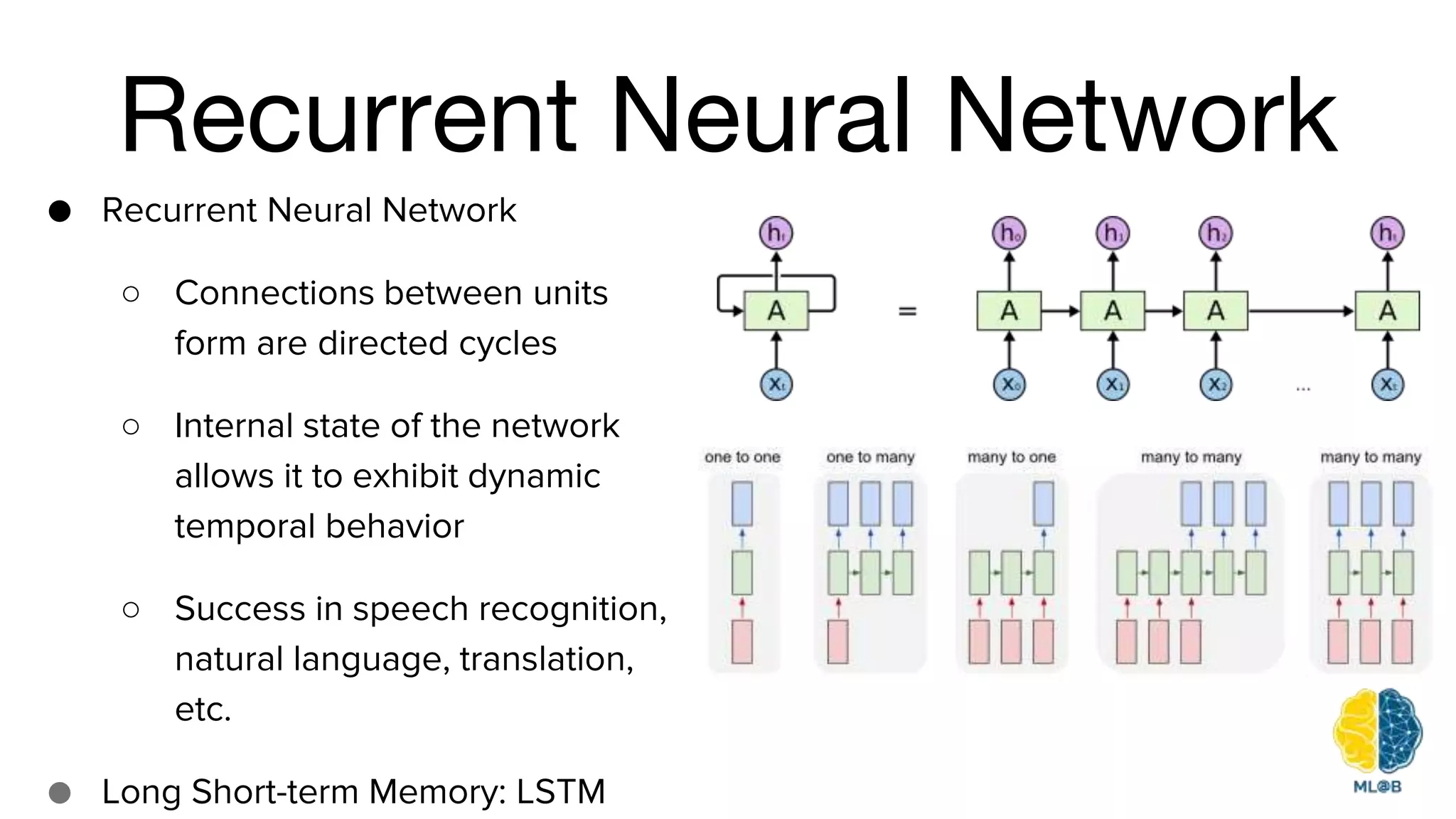

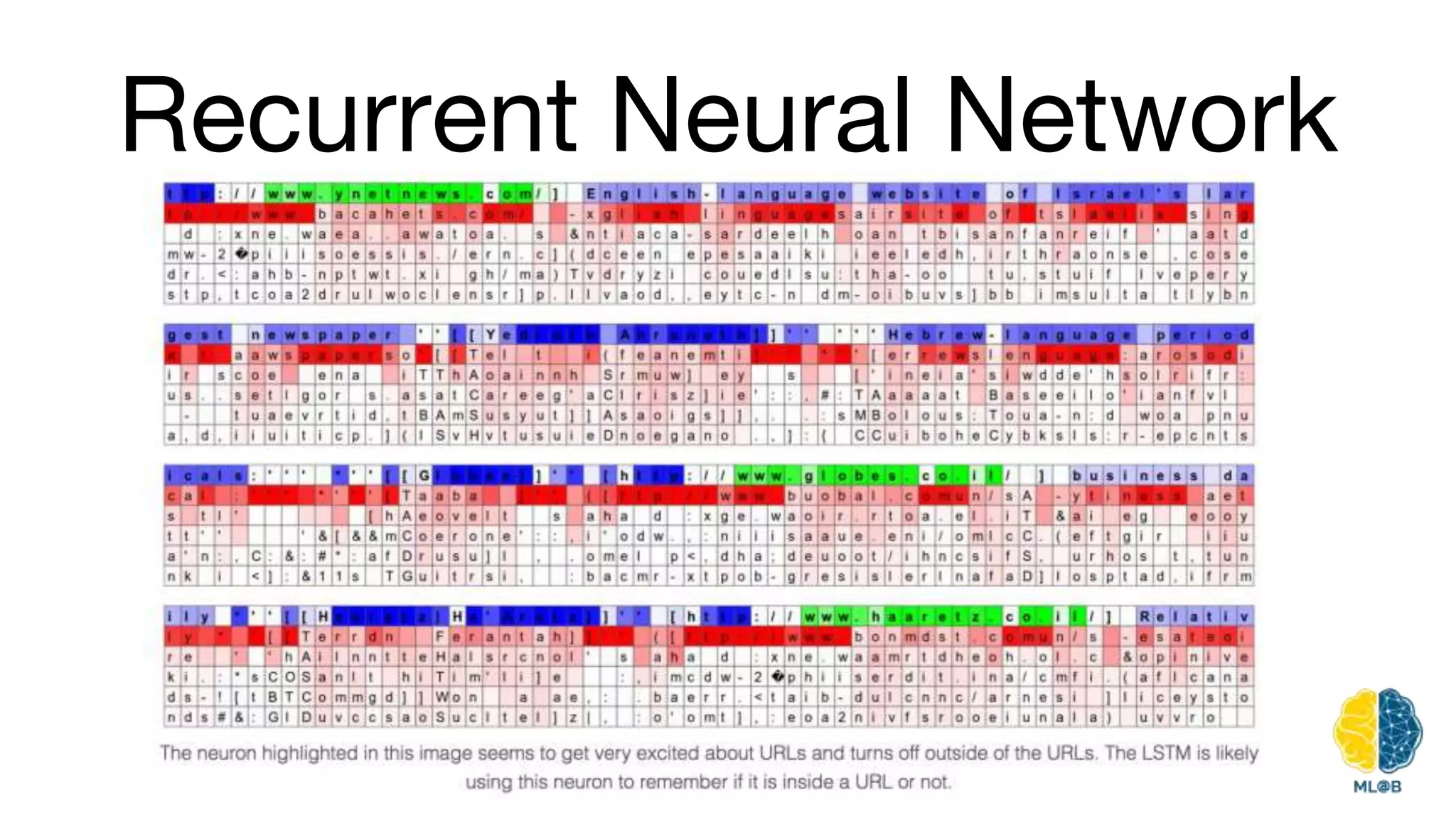

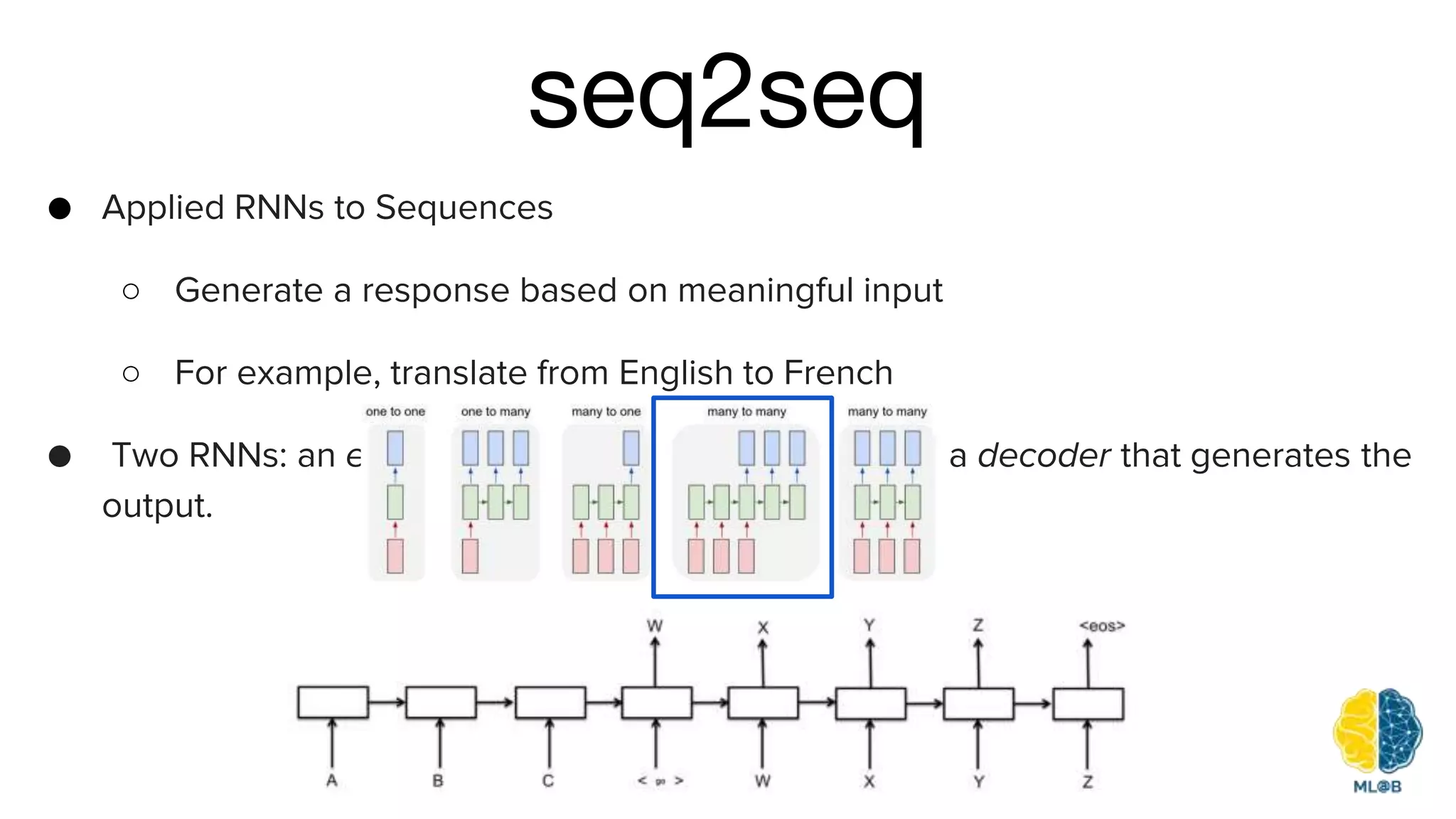

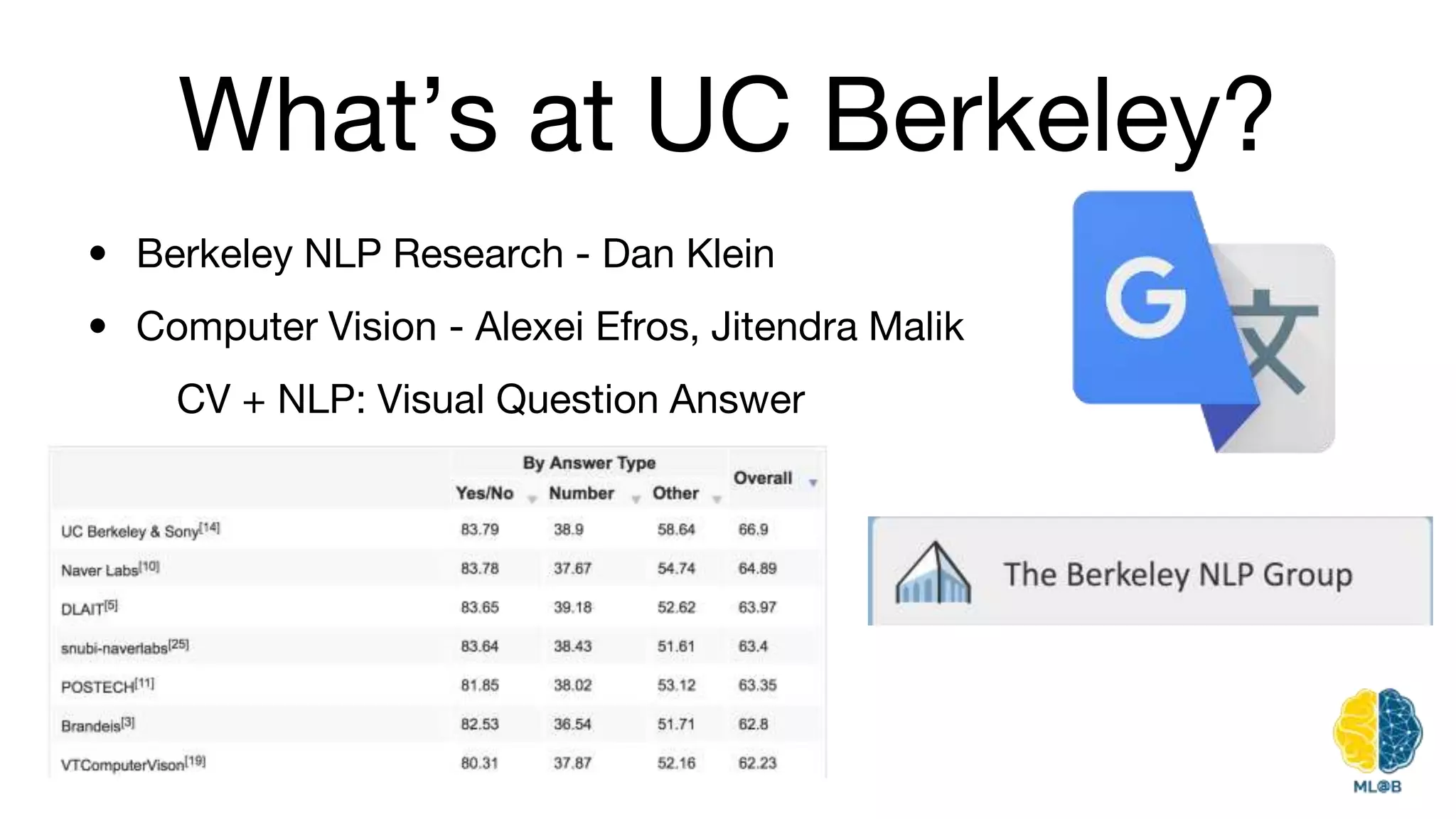

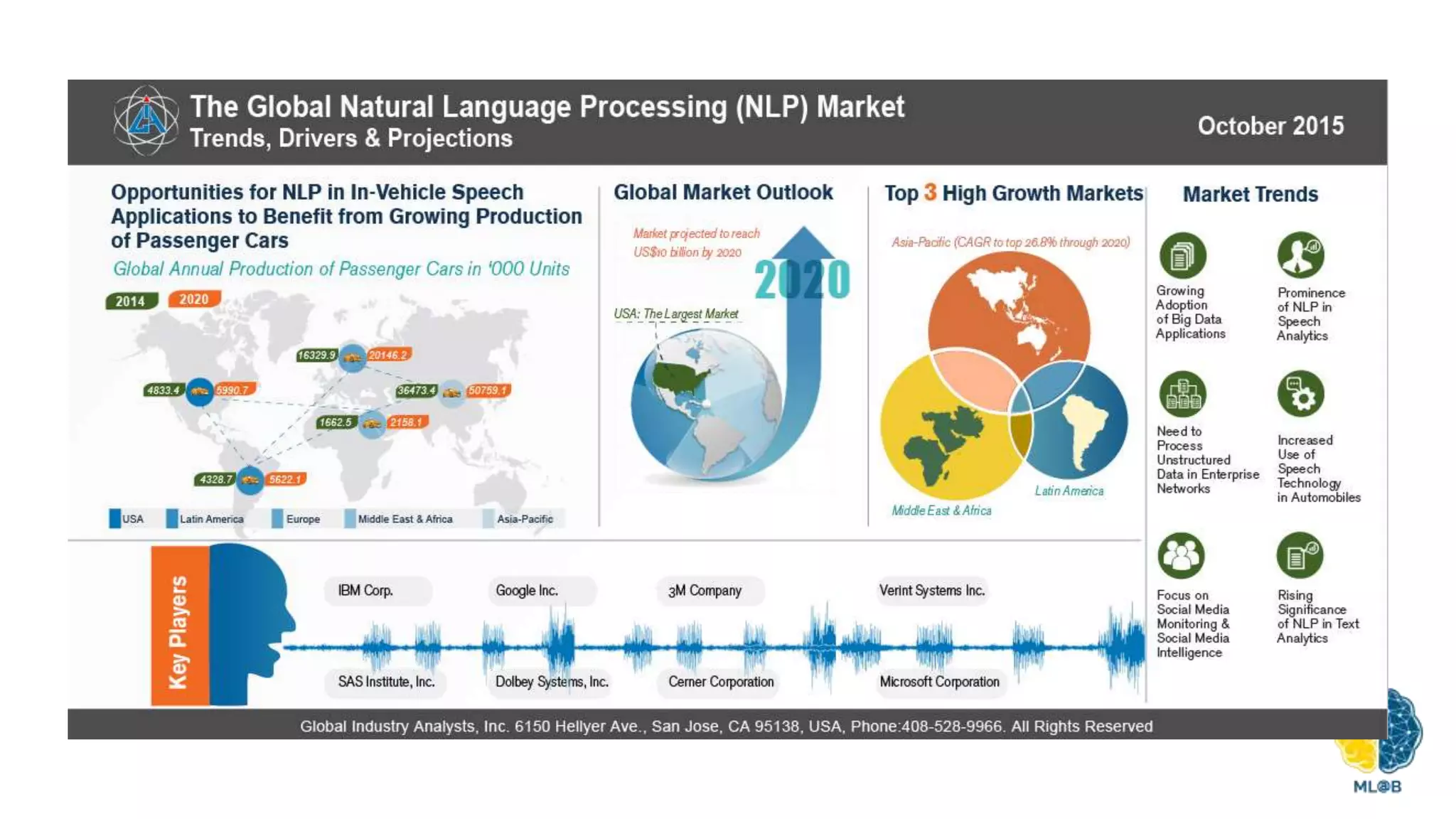

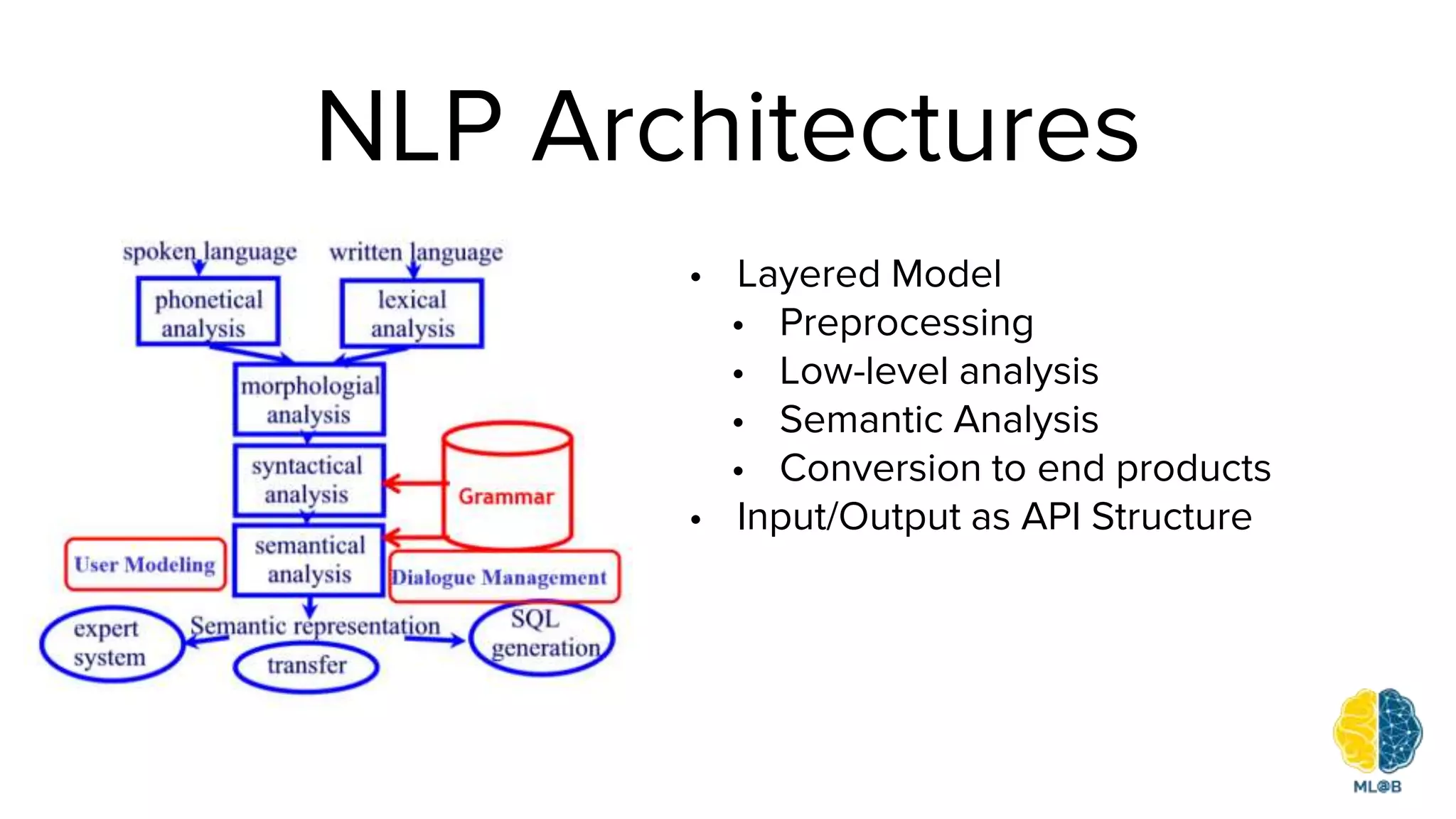

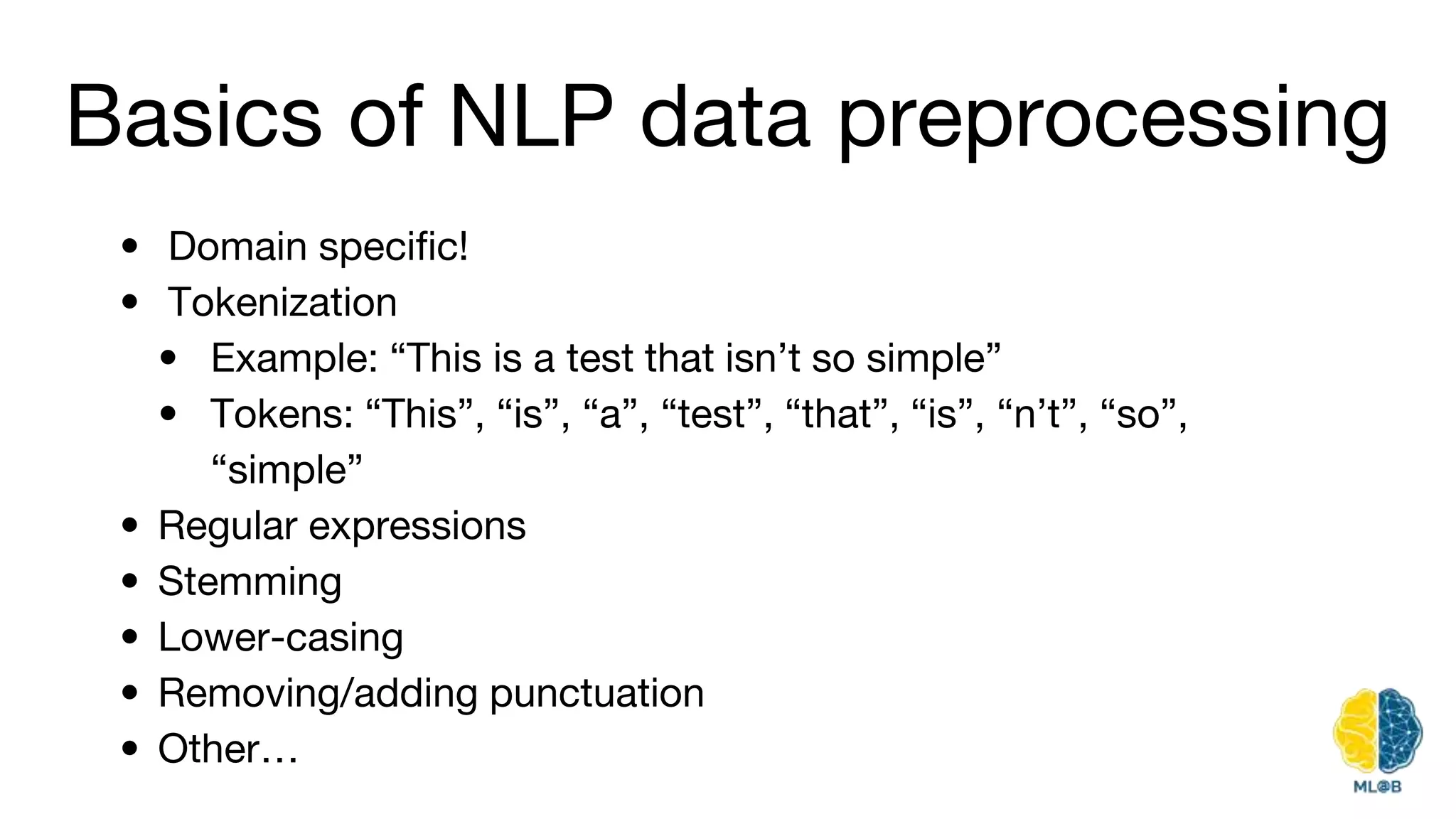

The document provides a comprehensive overview of natural language processing (NLP), covering its background, task types, and challenges, as well as advances such as machine translation and conversational agents. It discusses several techniques for word representation, including one-hot vectors, singular value decomposition (SVD), and modern deep learning methods such as word2vec. It closes with the future of NLP, emphasizing improved user interaction, the analysis of unstructured information, and the growing role of AI in industry applications.
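To make the contrast between one-hot vectors and count-based representations concrete, here is a minimal sketch in plain Python. The toy corpus, the window size of 1, and the helper names (`one_hot`, `cooccurrence_matrix`, `cosine`) are illustrative assumptions, not taken from the slides. The sketch shows that any two distinct one-hot vectors are orthogonal, so they carry no notion of similarity. In contrast, rows of a word co-occurrence matrix (the matrix that SVD-based methods factorize) do give related words a nonzero similarity.

```python
from collections import defaultdict  # noqa: F401 (kept minimal; counts use plain lists)
from math import sqrt

# Hypothetical toy corpus, for illustration only.
corpus = [
    "i like deep learning",
    "i like nlp",
    "i enjoy flying",
]

tokens = [sentence.split() for sentence in corpus]
vocab = sorted({w for sent in tokens for w in sent})
index = {w: i for i, w in enumerate(vocab)}


def one_hot(word):
    """Return a |V|-dimensional vector with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[index[word]] = 1
    return vec


def cooccurrence_matrix(sentences, window=1):
    """Count how often each word appears within `window` positions of another."""
    n = len(vocab)
    m = [[0] * n for _ in range(n)]
    for sent in sentences:
        for i, w in enumerate(sent):
            lo, hi = max(0, i - window), min(len(sent), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    m[index[w]][index[sent[j]]] += 1
    return m


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


M = cooccurrence_matrix(tokens)
print(cosine(one_hot("like"), one_hot("enjoy")))      # 0.0: one-hot vectors are orthogonal
print(cosine(M[index["like"]], M[index["enjoy"]]))    # ≈ 0.577: both co-occur with "i"
```

In an SVD-based approach, the next step would be to factorize `M` (for example with `numpy.linalg.svd`) and keep only the first k columns of U as dense, low-dimensional word vectors. word2vec instead learns such dense vectors directly by predicting context words.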