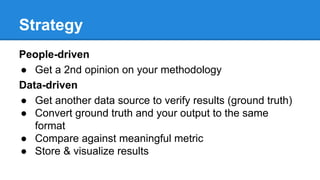

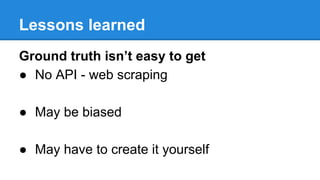

Karolina Alexiou discusses why evaluation matters in big data analysis and argues for a robust framework for measuring how well algorithms perform. Drawing on a Teralytics case study on traffic congestion estimation, she highlights the difficulty of obtaining accurate ground truth data and the pitfalls of the methods used to compare results against it. Key action points include asking critical questions about how data is verified and visualized, and using appropriate tools such as Python and pandas for the analysis.
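
As a rough illustration of what evaluating against ground truth can look like in pandas, the sketch below joins algorithm estimates to reference counts, reports how much of the output could be verified at all, and computes a mean absolute error on the verified part. It is not taken from the talk: the column names, the road segments, and every number are hypothetical, and mean absolute error is only one of many possible comparison metrics.

```python
import pandas as pd

# Hypothetical algorithm output: estimated vehicle counts per road segment.
estimates = pd.DataFrame({
    "road_segment": ["A", "B", "C", "D"],
    "estimated_vehicles": [120, 80, 200, 50],
})

# Hypothetical ground truth (e.g. manual counts); segment D was never measured.
ground_truth = pd.DataFrame({
    "road_segment": ["A", "B", "C"],
    "counted_vehicles": [100, 100, 200],
})

# A left join keeps every estimate; the indicator column records
# whether a matching ground truth row was found.
merged = estimates.merge(
    ground_truth, on="road_segment", how="left", indicator=True
)

# Segments with no ground truth cannot be evaluated; list them explicitly
# instead of silently dropping them.
unverified = merged.loc[merged["_merge"] == "left_only", "road_segment"].tolist()

# Compute the error only where a reference value exists.
verified = merged[merged["_merge"] == "both"].copy()
verified["abs_error"] = (
    verified["estimated_vehicles"] - verified["counted_vehicles"]
).abs()

mean_abs_error = verified["abs_error"].mean()
coverage = len(verified) / len(merged)

print(f"Verified share of estimates: {coverage:.0%}")
print(f"Mean absolute error on verified segments: {mean_abs_error:.1f}")
print(f"Segments without ground truth: {unverified}")
```

Reporting coverage next to the error is a deliberate choice in this sketch: an error figure computed on a small verified subset says little about the segments that were never measured.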