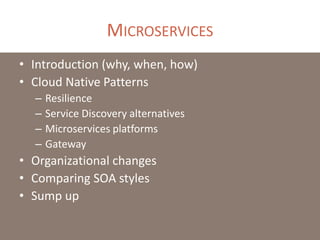

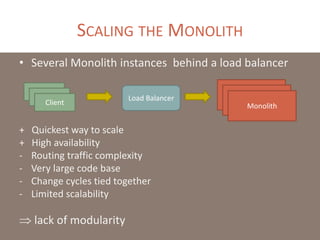

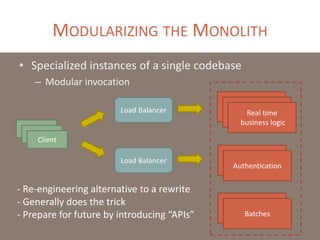

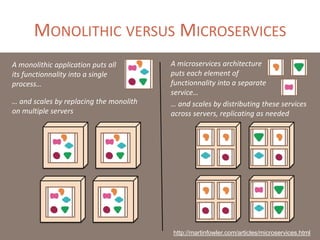

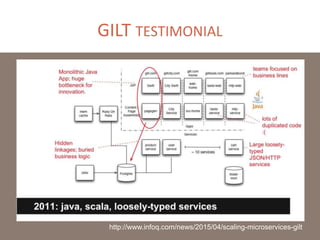

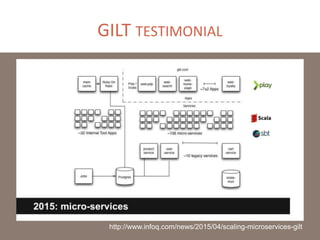

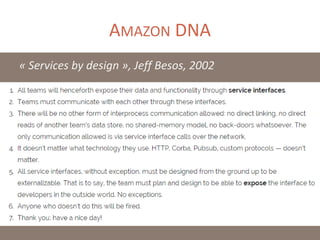

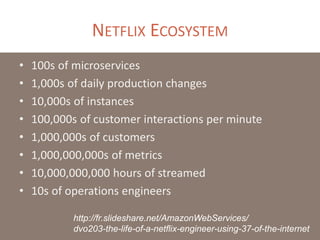

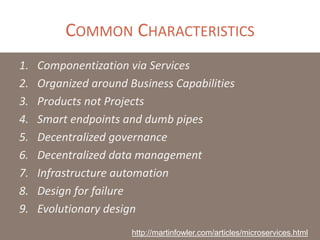

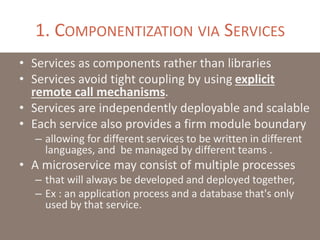

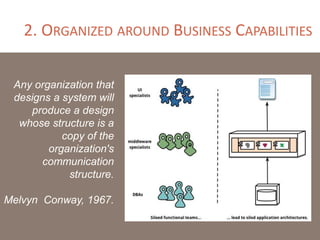

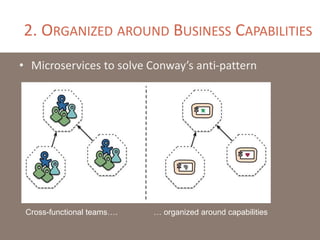

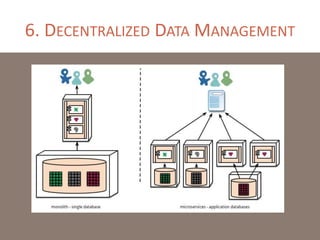

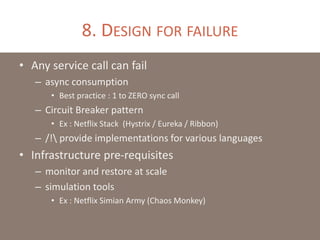

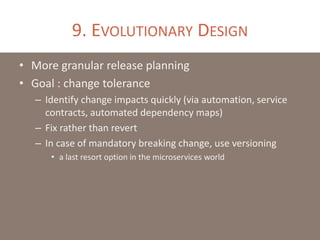

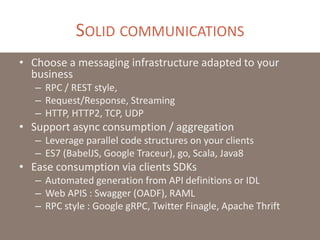

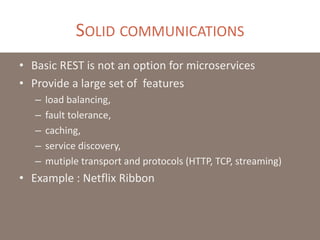

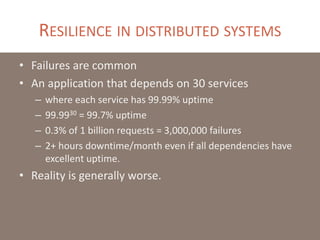

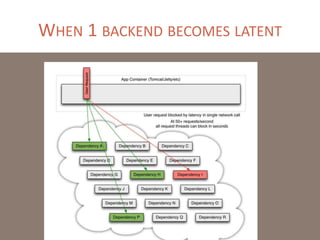

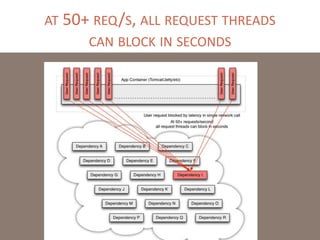

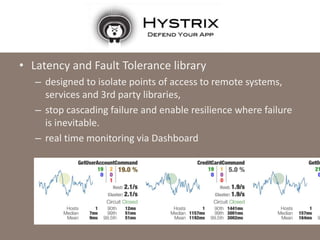

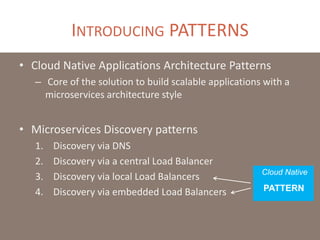

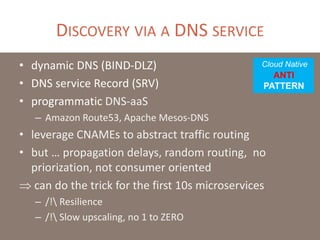

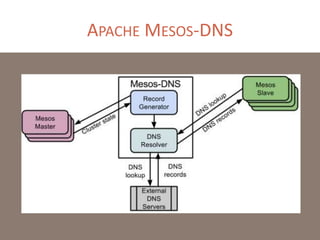

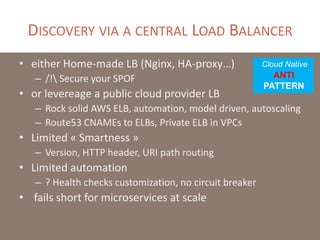

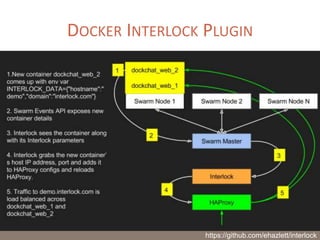

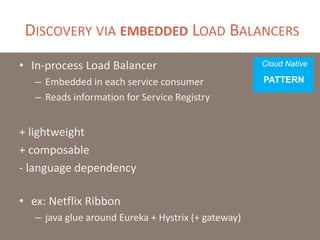

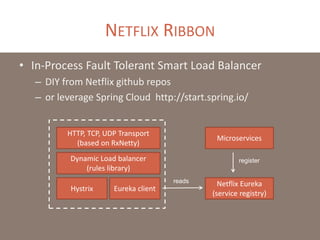

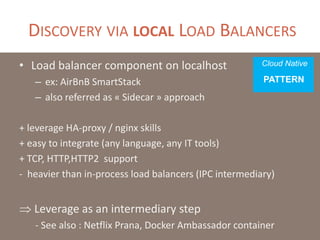

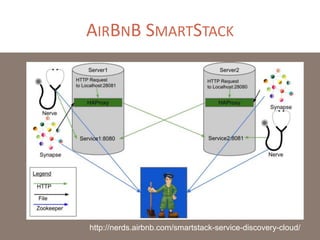

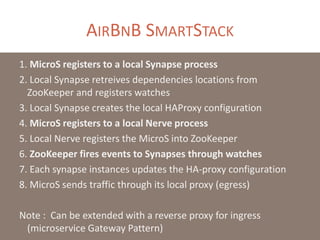

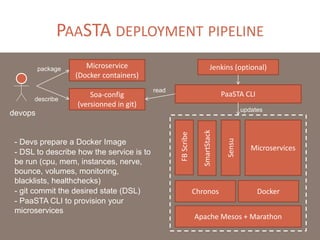

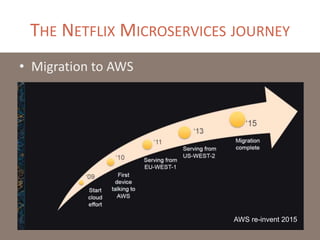

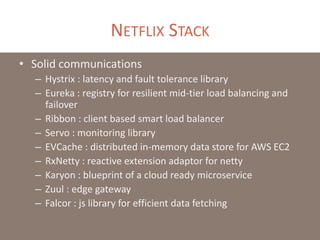

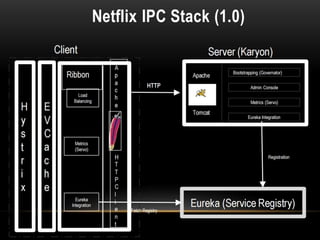

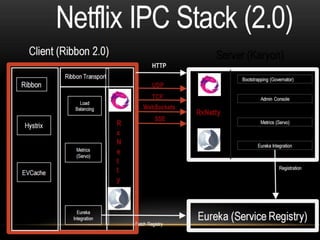

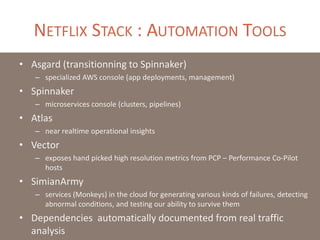

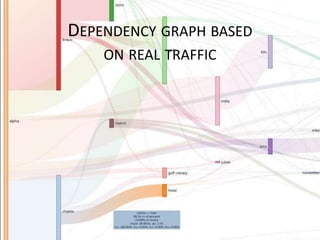

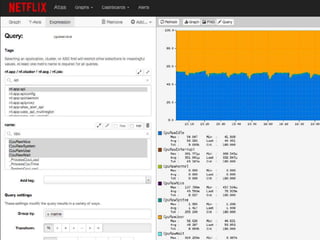

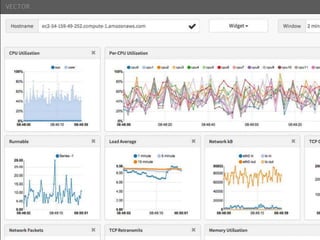

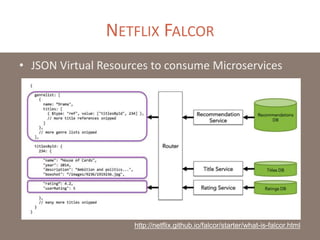

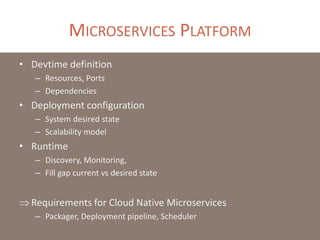

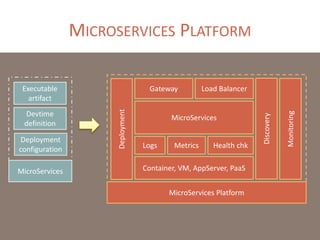

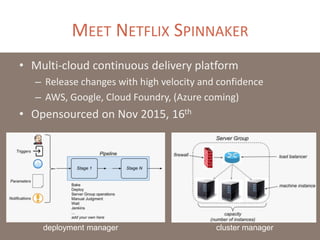

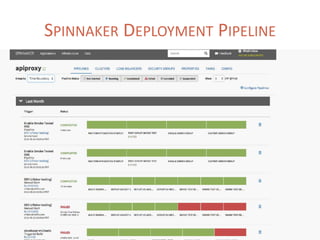

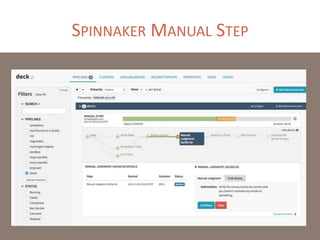

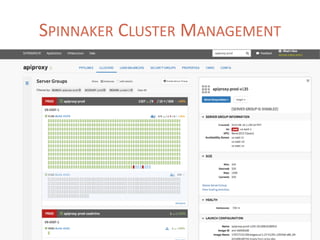

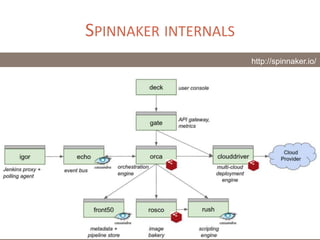

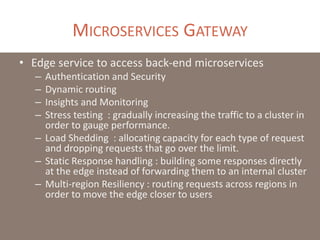

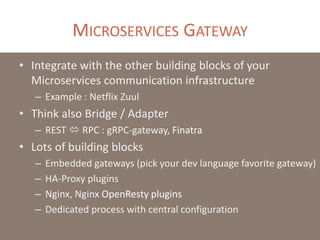

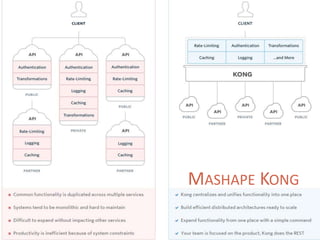

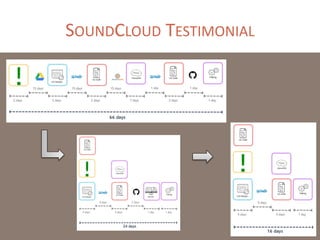

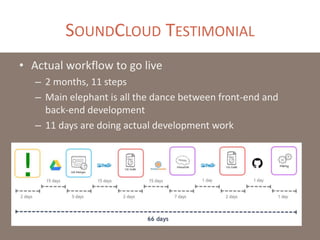

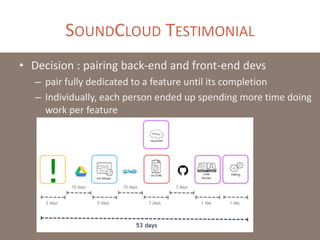

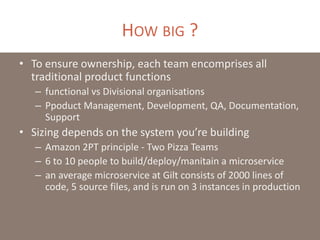

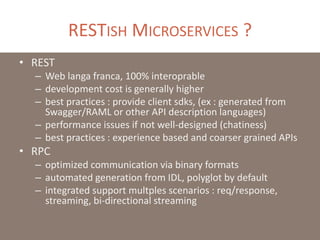

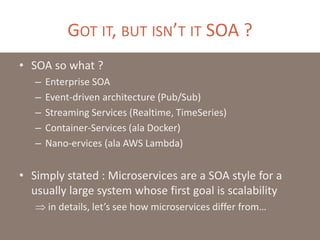

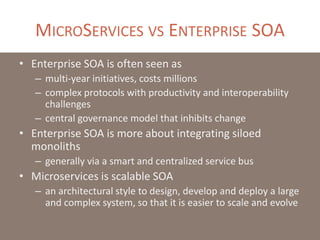

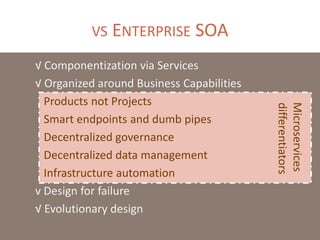

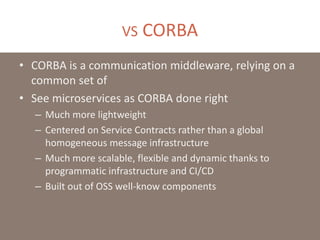

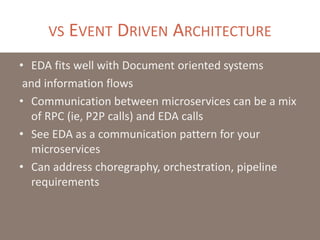

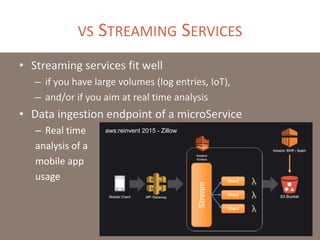

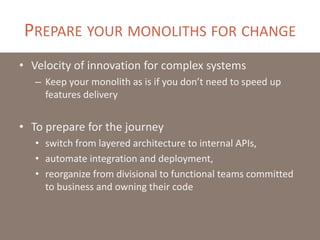

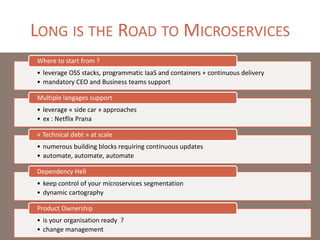

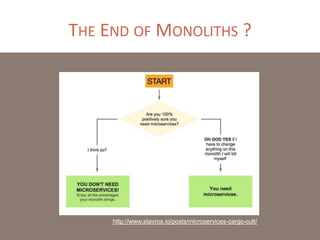

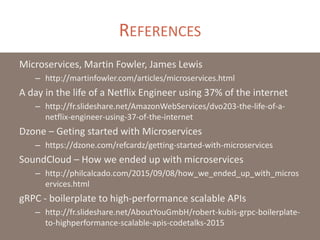

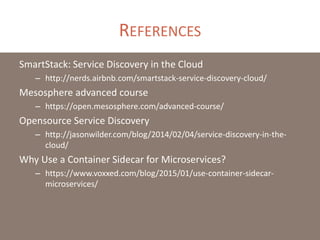

This document gives an overview of microservices architecture: its advantages over monolithic applications, cloud-native patterns, resilience, service discovery, and the organizational changes needed to implement it successfully. It draws on case studies from industry pioneers such as Netflix and Amazon, and covers best practices for microservices development and deployment, along with management tools. It also emphasizes team ownership and organizational flexibility as the means of adapting to the evolving demands of microservices-based applications.